By Murray Zhu, Head of Technical Operations and Maintenance of InMobi

The tight integration of open-source technologies with cloud-native infrastructure has given the open-source big data platform of Alibaba Cloud extensive practical experience in functionality, usability, and security. The platform serves thousands of enterprises, helping them focus on their core business, shorten development cycles, reduce the difficulty of operations and maintenance, and pursue more business innovation.

This article shares InMobi's best practices built on the open-source big data service of Alibaba Cloud.

InMobi is an AI-driven, performance-driven global platform for mobile advertising and marketing technology. Drawing on the large number of applications and users it connects worldwide, InMobi provides mobile advertising and marketing technology services for domestic brands and applications, and offers advertising commercialization and monetization services for application developers. The platform was established in 2007 and entered the Chinese market in 2011. It is driven by technology research and development and holds an important position in the mobile advertising platform industry, both in China and globally. Through localized service teams in 23 countries and regions, InMobi reaches over one billion monthly active unique users. It provides tens of thousands of fine-grained audience classifications, thousands of dimensional tags, data from customized sample libraries of tens of millions of users, and accurate mobile advertising using location-based services (LBS).

As a leading technology enterprise, InMobi was named to the CNBC Disruptor 50 in 2019 and was named one of the Most Innovative Companies by Fast Company magazine in 2018.

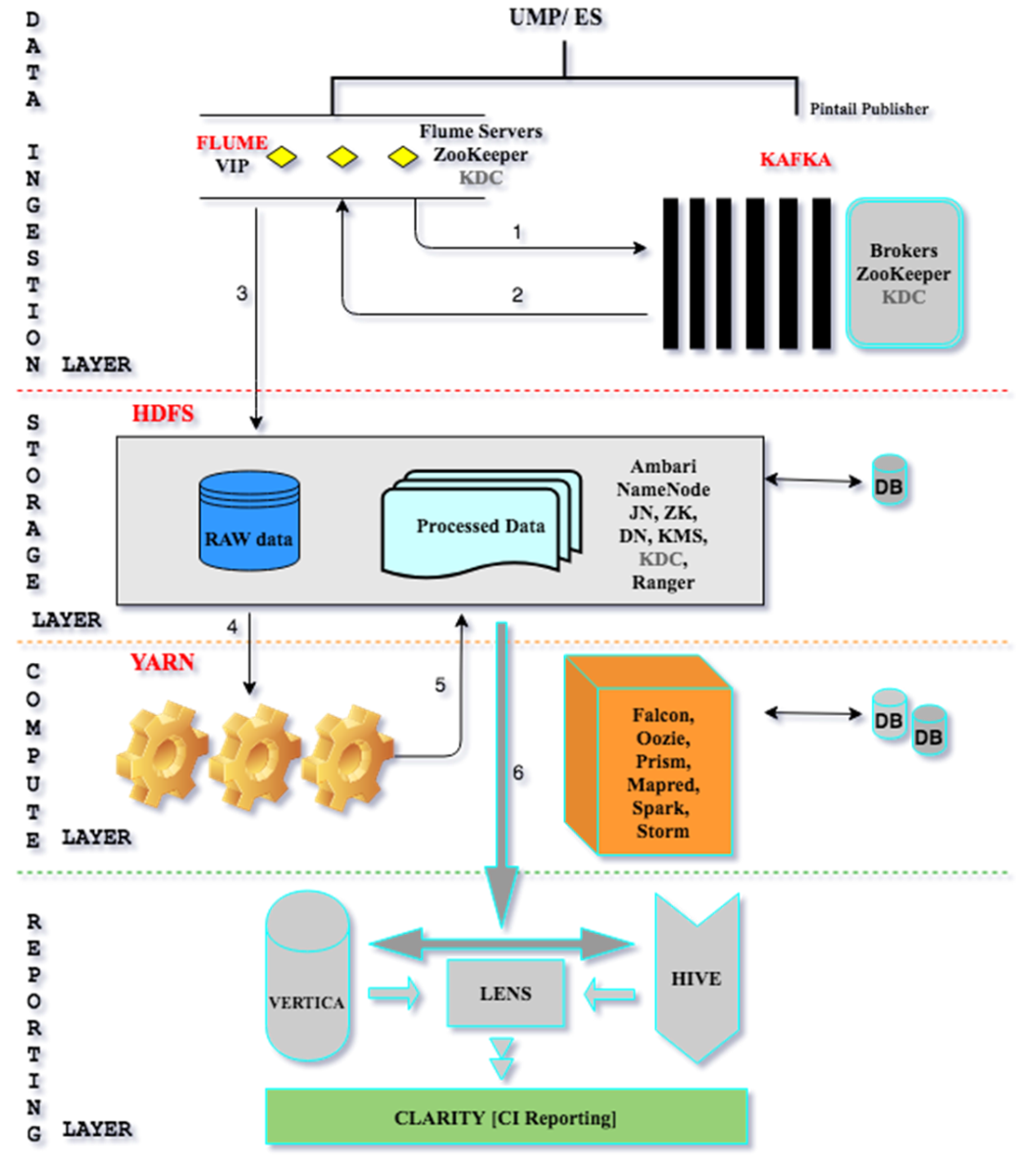

The preceding figure shows the original big data cluster architecture of InMobi in China, which is divided into a data ingestion layer, a storage layer, a compute layer, and a reporting layer. First, advertising data from the advertising front end (especially RR and other data) is collected through the data ingestion layer. Then, the data is stored in the offline HDFS big data cluster, and data tasks are processed by the compute cluster. Finally, the processed results are presented to end users as reports.

Several problems gradually surfaced during the operations and maintenance of this big data cluster:

When compute resources are insufficient, some tasks have to be rescheduled (or even suspended) so that important tasks can run first, which delays report generation.

Data reports are not timely enough and cannot meet the business side's requirement that reports be available within minutes.
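The first problem, running important tasks first when compute is short, can be illustrated with a small priority-based admission sketch. This is not InMobi's scheduler; the `Task` fields, the task names, and the convention that a lower number means higher priority are all assumptions made for illustration.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Task:
    # Only `priority` takes part in ordering; lower number = more important
    # (an assumed convention for this sketch).
    priority: int
    name: str = field(compare=False)
    slots: int = field(compare=False)  # compute slots the task needs


def schedule(tasks, capacity):
    """Admit tasks in priority order until the available capacity runs out.

    Returns (running, deferred) as two lists of task names.
    """
    heap = list(tasks)
    heapq.heapify(heap)
    running, deferred = [], []
    free = capacity
    while heap:
        task = heapq.heappop(heap)
        if task.slots <= free:
            running.append(task.name)
            free -= task.slots
        else:
            # Not enough capacity left: the task waits and its report is delayed.
            deferred.append(task.name)
    return running, deferred


# Hypothetical workload: 15 slots requested, only 10 available.
tasks = [
    Task(priority=2, name="weekly_audience_report", slots=6),
    Task(priority=0, name="billing_rollup", slots=4),
    Task(priority=1, name="hourly_rr_report", slots=5),
]
running, deferred = schedule(tasks, capacity=10)
print("running:", running)
print("deferred:", deferred)
```

With a fixed capacity, the lowest-priority report is the one that gets deferred, which is exactly the delay that a fixed-size cluster imposes on report generation.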

Based on these typical problems, InMobi considered the following optimizations:

Provision additional big data service nodes on the cloud and use the elasticity of the big data service to overcome shortages of compute and storage capacity, especially in temporary scenarios where resources are tight, such as the 618 and Double 11 shopping festivals.

ClickHouse is an open-source product that has been deployed at large scale in the business scenarios of Internet companies in China.

The real-time data warehouse system addresses the timeliness of data reports, bringing them to at least minute-level latency, and to second-level latency for reports with special requirements.

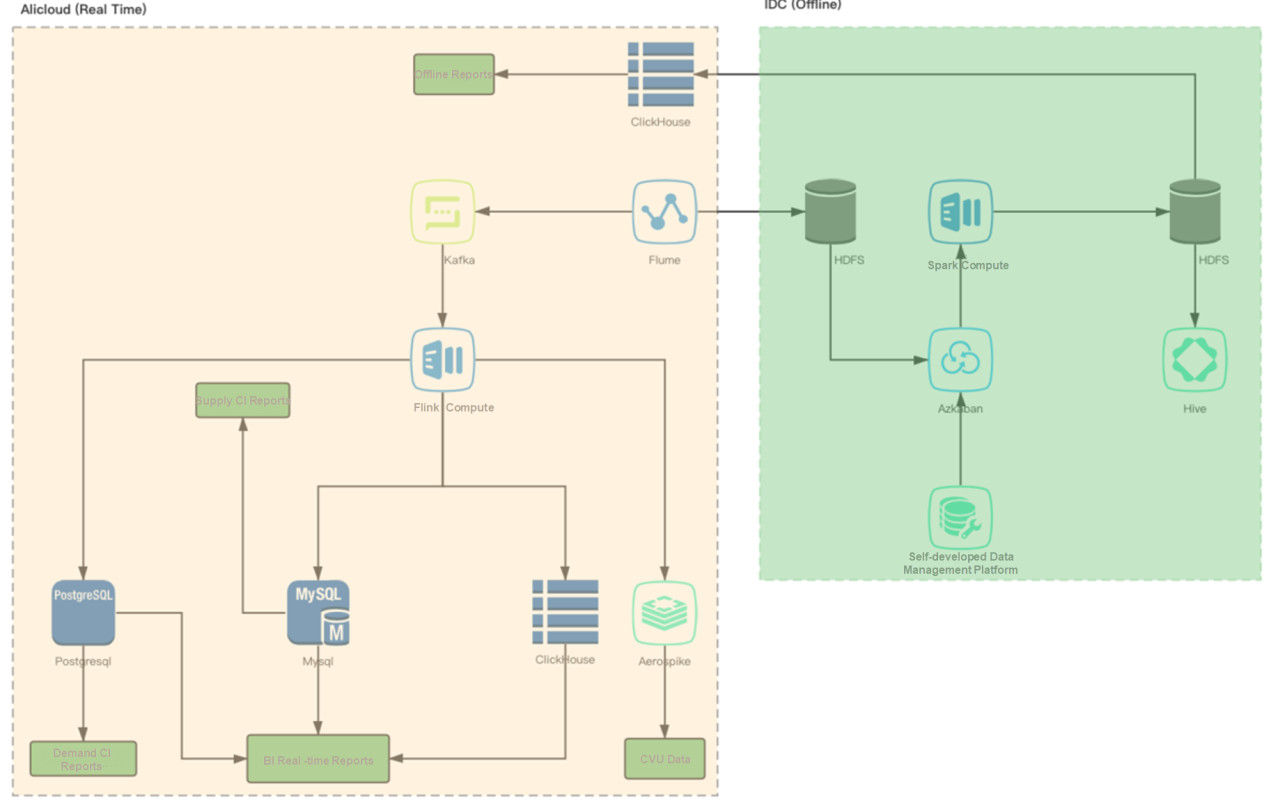

As shown in the preceding figure, the optimized big data cluster architecture is divided into two parts:

Read RR logs from Kafka and write them to ClickHouse to serve real-time reports. Read the data that is useful to the business from Kafka and store it in MySQL and PostgreSQL based on business requirements.

Use Flume to write all raw data from Kafka to the HDFS cluster, and then perform data analysis and data regulation. In the offline big data cluster, all offline report requirements run as Spark tasks. Finally, the results are written back to ClickHouse to display offline data reports.
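To make the idea of a minute-level report concrete, the sketch below groups log events into one-minute buckets and counts them per campaign, which is the kind of rollup the real-time path produces. It is plain Python with no Kafka or ClickHouse client, and the event fields `ts` and `campaign` are hypothetical, not InMobi's RR log schema.

```python
from collections import Counter
from datetime import datetime, timezone


def minute_bucket(ts_seconds):
    """Truncate a Unix timestamp (seconds) to the start of its minute, in UTC."""
    dt = datetime.fromtimestamp(ts_seconds, tz=timezone.utc)
    return dt.replace(second=0, microsecond=0).strftime("%Y-%m-%d %H:%M")


def aggregate_by_minute(events):
    """Count events per (minute, campaign) pair, one pair per report row."""
    counts = Counter()
    for event in events:
        key = (minute_bucket(event["ts"]), event["campaign"])
        counts[key] += 1
    return dict(counts)


# Hypothetical events; in the architecture above they would arrive from Kafka.
events = [
    {"ts": 1_700_000_000, "campaign": "a"},  # 2023-11-14 22:13:20 UTC
    {"ts": 1_700_000_030, "campaign": "a"},  # 22:13:50, same minute
    {"ts": 1_700_000_045, "campaign": "b"},  # 22:14:05, next minute
]
report = aggregate_by_minute(events)
for (minute, campaign), count in sorted(report.items()):
    print(minute, campaign, count)
```

In a real deployment this grouping would normally be expressed in the warehouse itself rather than in application code; the sketch only shows the shape of the result.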

Hologres separates storage from compute. Compute is deployed entirely on Kubernetes, and storage can use shared storage: you can select HDFS or OSS on the cloud based on business requirements. This allows resources to scale elastically and resolves the concurrency problems caused by insufficient resources, which makes Hologres well suited to InMobi's advertising scenarios.

Flink performs ETL on both stream and batch data and writes the processed data to Hologres for unified storage and querying. The business side can then connect directly to Hologres for online serving, which improves production efficiency.
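The benefit of one ETL path for both stream and batch data is that the cleaning logic is written once. The sketch below shows that idea in plain Python rather than with the Flink API: the same `etl` function consumes a bounded list and an unbounded-style generator. The record fields `user_id` and `event` and the cleaning rules are assumptions made for illustration.

```python
from typing import Iterable, Iterator, Optional


def clean(record: dict) -> Optional[dict]:
    """Normalize one raw record; return None to drop records without a user ID."""
    if not record.get("user_id"):
        return None
    return {
        "user_id": str(record["user_id"]).strip(),
        "event": str(record.get("event", "unknown")).lower(),
    }


def etl(records: Iterable[dict]) -> Iterator[dict]:
    """Apply the same cleaning lazily to any iterable, bounded or unbounded."""
    for record in records:
        row = clean(record)
        if row is not None:
            yield row


# Bounded input, standing in for a batch read.
batch = [
    {"user_id": " u1 ", "event": "CLICK"},
    {"user_id": "", "event": "view"},  # dropped: empty user ID
]


def stream():
    """Generator input, standing in for a stream of incoming records."""
    yield {"user_id": "u2", "event": "Impression"}
    yield {"event": "click"}  # dropped: no user ID


batch_rows = list(etl(batch))
stream_rows = list(etl(stream()))
print(batch_rows)
print(stream_rows)
```

Both outputs have the same schema, so a single downstream table can serve queries over either source.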

These are the best practices of InMobi based on the open-source big data service of Alibaba Cloud.
