By Yuhao Wu (Yuyi) Responsible for R&D of Query Optimization for AnalyticDB for PostgreSQL

As the digital age develops, data comes from more sources and is generated in more ways, and much of it is text. We usually store this data in a database or data warehouse. However, extracting valuable information from text data and analyzing it efficiently often requires several data processing systems working together, which raises both the barrier to entry and the maintenance cost.

Drawing on the experience of Alibaba Cloud users of AnalyticDB for PostgreSQL, this article introduces how AnalyticDB for PostgreSQL implements an all-in-one full-text search service and elaborates on the key technologies behind it.

Typically, when we use a data warehouse to process and analyze text data, real-time data writing, full-text search, and task scheduling are essential capabilities. However, completing all of these functions within a single data warehouse system often faces the following challenges:

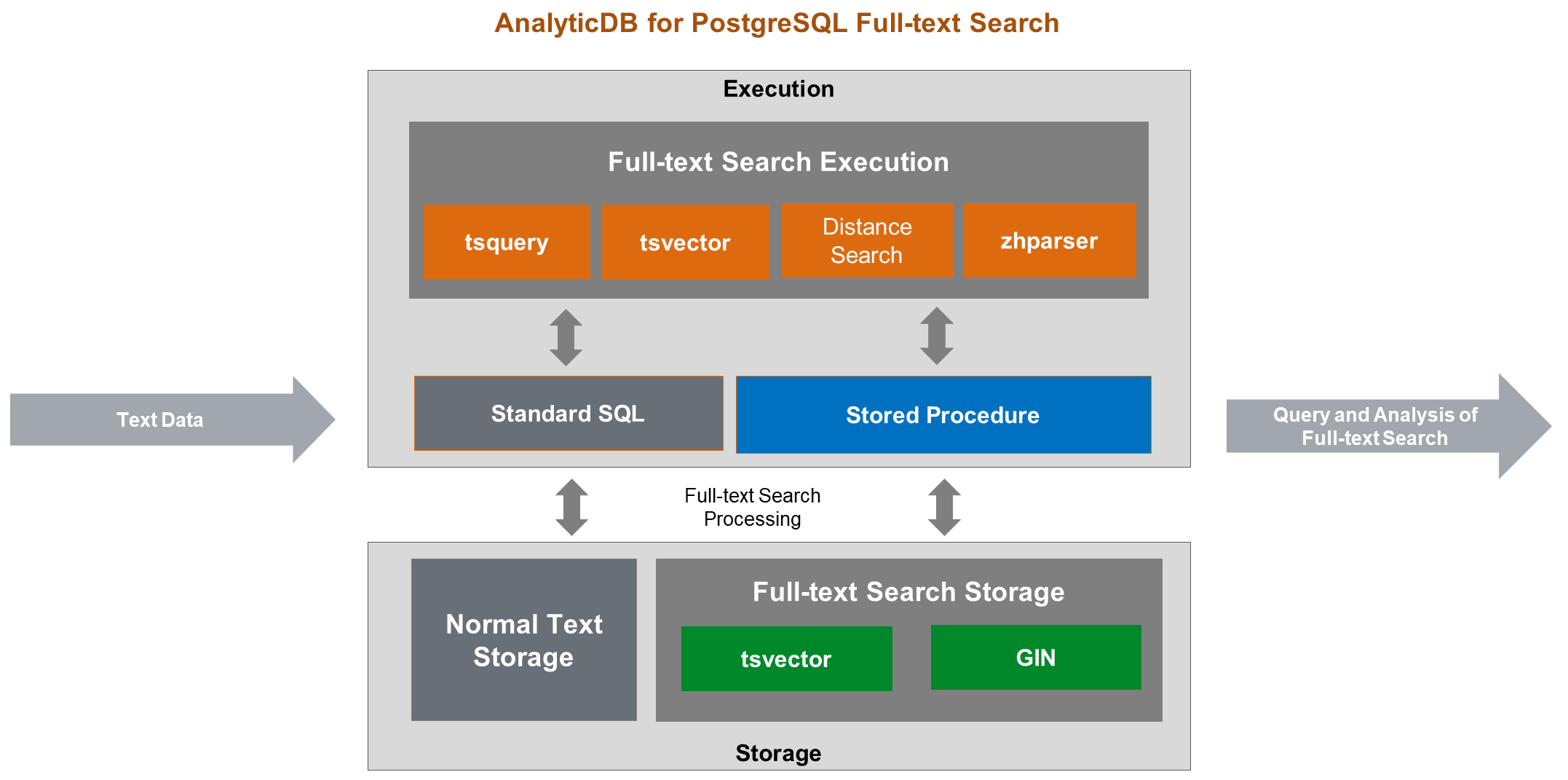

AnalyticDB for PostgreSQL has both full-text search and data processing capabilities and can address the challenges above. The following figure shows the process of the all-in-one full-text search service of AnalyticDB for PostgreSQL. We will share the key technologies later.

Full-text search generally refers to the ability of a database to convert natural language text into data that can be queried. For example, finding specific query terms in text stored in a database and sorting the results by the number of occurrences is a typical full-text search application. Most databases provide basic functions for text queries; for example, we can use expressions such as LIKE to search text in queries. However, these methods lack many functions that modern database services need.
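To illustrate the limits of LIKE, here is a minimal sketch that compares substring matching with a full-text match. The sample sentences and the explicit `'english'` configuration are assumptions chosen for illustration; they do not come from the original scenario.

```sql
-- LIKE matches raw substrings, so 'rat' is found inside 'separate'
-- even though the sentence says nothing about rats.
SELECT 'please separate them' LIKE '%rat%' AS like_match;

-- Full-text search compares normalized words instead of substrings.
-- Expected: no match, because 'separate' is not the word 'rat'.
SELECT to_tsvector('english', 'please separate them')
       @@ to_tsquery('english', 'rat') AS fts_match;

-- LIKE also misses inflected forms unless every variant is spelled out:
-- the pattern '%jumps%' does not occur in 'the cat jumped'.
SELECT 'the cat jumped' LIKE '%jumps%' AS like_match_inflected;
```

The first query should return true (a false positive) and the third false (a missed match), which are exactly the gaps that word-level normalization is meant to close.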

Next, I will introduce how AnalyticDB for PostgreSQL implements the full-text search.

AnalyticDB for PostgreSQL uses the PostgreSQL kernel to provide a comprehensive full-text search function and achieves fast query performance through text precomputation. The precomputation includes the following steps:

1. Parse Text into Symbols

Text words (in the form of symbols) are classified into different types (such as numbers, adjectives, and adverbs), and different types of symbols can be processed differently. The PG kernel uses a default parser to parse symbols and supports custom parsers for texts in different languages.

2. Convert Symbols to Words

Compared with symbols, words are normalized: different forms of the same word are merged (for example, satisfy and satisfies), which lets full-text search match efficiently based on semantics. The PG kernel uses dictionaries for this step and supports custom dictionaries.

3. Optimize Word Storage for Efficient Queries

The PG kernel provides the tsvector (text search vector) data type, which stores the parsed text as ordered data with word information, and enables efficient full-text search by querying such data with the tsquery (text search query) syntax.
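The first two steps can be observed with the built-in `ts_debug` function of PostgreSQL, which reports how each piece of text is parsed and which normalized words the dictionaries produce. The sketch below assumes the built-in `'english'` configuration and a made-up sample sentence.

```sql
-- alias   : the type assigned to the symbol by the parser (step 1)
-- token   : the raw piece of text
-- lexemes : the normalized word(s) produced by the dictionaries (step 2);
--           stop words yield an empty array, and symbols that no
--           dictionary handles (such as whitespace) yield NULL
SELECT alias, token, lexemes
FROM ts_debug('english', 'a fat cat jumped on 2 mats');
```

Comparing the `token` and `lexemes` columns row by row shows which words are dropped and which are reduced to a common form before being stored.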

tsvector is used to store a series of distinct words and their sequence, position, and other information. We can use to_tsvector provided by PG to automatically convert text to tsvector. Let's use the English sentence a fat cat jumped on a mat and ate two fat rats as an example.

postgres=# select to_tsvector('a fat cat jumped on a mat and ate two fat rats');

to_tsvector

---------------------------------------------------------------

'ate':9 'cat':3 'fat':2,11 'jump':4 'mat':7 'rat':12 'two':10

(1 row)

The tsvector result contains a series of words sorted alphabetically rather than by their order in the sentence. Each word is followed by its position in the sentence. For example, 'fat':2,11 means that fat appears in the second and eleventh positions of the sentence. In addition, the tsvector result omits stop words (such as a, on, and and) and normalizes some words (jumped, the past tense of jump, is normalized into jump).
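Because a tsvector is an ordinary value, the conversion can be done once at write time and stored next to the source text. The following is a minimal sketch of that idea; the table `article`, its columns, and the `'english'` configuration are hypothetical names and choices used only for illustration.

```sql
-- Hypothetical table that keeps the raw text and its precomputed tsvector.
CREATE TABLE article (
    id          int,
    content     text,
    content_tsv tsvector
);

INSERT INTO article (id, content)
VALUES (1, 'a fat cat jumped on a mat and ate two fat rats');

-- Precompute the search vector from the stored text.
UPDATE article
SET content_tsv = to_tsvector('english', content);

-- Later queries read the stored vector instead of re-parsing the text.
SELECT id, content_tsv FROM article;
```

The trade-off is extra storage and the need to refresh `content_tsv` whenever `content` changes, in exchange for not parsing the text on every query.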

tsvector precomputes and converts the text. Next, we need tsquery to query and analyze tsvector.

tsquery is used to store and query words in tsvector. PG also provides to_tsquery to convert text into tsquery. Then, we can use tsvector and full-text search operators to complete full-text search queries.
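As a quick sketch of what to_tsquery produces, the statements below convert a few query strings with the built-in `'english'` configuration (an assumption; the examples in this article rely on the default configuration). The query words are normalized in the same way as the words in a tsvector, which is what makes the two comparable.

```sql
-- A plural query word is normalized to its base form.
SELECT to_tsquery('english', 'cats');             -- 'cat'

-- Operators are preserved while each word is normalized.
SELECT to_tsquery('english', 'jumped & rats');    -- 'jump' & 'rat'

-- Negation is kept as part of the query.
SELECT to_tsquery('english', 'fat & !dog');       -- 'fat' & !'dog'
```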

For example, we can use the @@ operator to find whether tsvector contains the words in tsquery:

postgres=# select to_tsvector('a fat cat jumped on a mat and ate two fat rats') @@ to_tsquery('cat');

?column?

----------

t

(1 row)

postgres=# select to_tsvector('a fat cat jumped on a mat and ate two fat rats') @@ to_tsquery('cats');

?column?

----------

t

(1 row)

The result t (true) for the word cat indicates a match. The result for the word cats is also t, because cats is the plural of cat and is normalized to the same word, so it also matches semantically.

tsquery supports the Boolean operators & (AND), | (OR), and ! (NOT), so you can easily build a search query that combines conditions:

postgres=# select to_tsvector('a fat cat jumped on a mat and ate two fat rats') @@ to_tsquery('cat | dog');

?column?

----------

t

(1 row)

postgres=# select to_tsvector('a fat cat jumped on a mat and ate two fat rats') @@ to_tsquery('cat & dog');

?column?

----------

f

(1 row)

When using full-text search, we often want to go beyond checking whether the text contains a certain word and analyze phrases and word groups. The tsquery syntax of PG supports the phrase search operator <N>, where N is an integer that indicates the distance between the specified words. For example, to check whether the text contains cat immediately followed by jump, we can use the <1> operator.

postgres=# select to_tsvector('a fat cat jumped on a mat and ate two fat rats') @@ to_tsquery('cat<1>jump');

?column?

----------

t

(1 row)

Furthermore, finding a specific word combination can be achieved using the distance search method:

postgres=# select to_tsvector('a fat cat jumped on a mat and ate two fat rats') @@ to_tsquery('cat<2>mat');

?column?

----------

f

(1 row)

postgres=# select to_tsvector('a fat cat jumped on a mat and ate two fat rats') @@ to_tsquery('cat<4>mat');

?column?

----------

t

(1 row)

Building on the full-text search function of PG and on community capabilities, AnalyticDB for PostgreSQL has developed full-text search further and supports a range-distance search operator <M,N>, where M and N both indicate distances between words: the distance between the specified words must fall within the range from M to N. For example, you can use <1,5> to check whether the text contains cat followed by mat at a distance of at most 5.

postgres=# select to_tsvector('a fat cat jumped on a mat and ate two fat rats') @@ to_tsquery('cat<1,5>mat');

?column?

----------

t

(1 row)

In some database services, a large amount of Chinese text is stored (such as user evaluation forms and address information), and analyzing it also requires full-text search. However, unlike English, written Chinese does not separate words with spaces. As a result, the default full-text search of PG struggles to produce word segmentation results that follow Chinese semantics. For example, if we use the default to_tsvector of PG to segment a Chinese sentence, the result does not meet the requirements.

postgres=# select to_tsvector('你好,这是一条中文测试文本');

to_tsvector

-----------------------------------

'你好':1 '这是一条中文测试文本':2

(1 row)

Simple Chinese Word Segmentation (SCWS) is an open-source Chinese word segmentation engine based on word frequency dictionaries. It can segment a whole paragraph of Chinese text into correct words. SCWS is written in C and can be linked into applications directly as a dynamic-link library. Combined with the extensibility of PG, we can use SCWS in PG to perform Chinese word segmentation.

The zhparser plug-in is a Chinese word segmentation plug-in built on SCWS. It is compatible with the full-text search capability of PG and provides a variety of configuration options and user-defined dictionaries.

In AnalyticDB for PostgreSQL, the zhparser plug-in is installed by default. You can configure zhparser based on your requirements for Chinese word segmentation. For example, you can create a text search configuration named zh_cn that uses zhparser as its parser and configure word segmentation policies.

--- Create a text search configuration that uses the zhparser parser.

CREATE TEXT SEARCH CONFIGURATION zh_cn (PARSER = zhparser);

--- Add nouns, verbs, adjectives, idioms, exclamations, temporary idioms, and custom word segmentation policies.

ALTER TEXT SEARCH CONFIGURATION zh_cn ADD MAPPING FOR n,v,a,i,e,l,x WITH simple;

For more information about how to use the plug-in, see the zhparser documentation.

After completing the basic configuration, we can use the Chinese word segmentation capability to develop Chinese search services. An example is shown below:

postgres=# select to_tsvector('zh_cn','你好,这是一条中文测试文本');

to_tsvector

----------------------------------------------

'中文':3 '你好':1 '文本':5 '测试':4 '这是':2

(1 row)

Similarly, we can use tsquery combined with zhparser for text search:

postgres=# select to_tsvector('zh_cn','你好,这是一条中文测试文本') @@ to_tsquery('zh_cn','中文<1,3>文本');

?column?

----------

t

(1 row)

zhparser also provides a custom Chinese thesaurus. If the default thesaurus does not meet the word segmentation requirements, we can update the custom thesaurus and optimize query results in real time. The zhparser system table zhparser.zhprs_custom_word is the user-facing custom dictionary table, and you only need to update it to customize words. The table structure of zhparser.zhprs_custom_word is listed below:

Table "zhparser.zhprs_custom_word"

Column | Type | Collation | Nullable | Default

--------+------------------+-----------+----------+-----------------------

word | text | | not null |

tf | double precision | | | '1'::double precision

idf | double precision | | | '1'::double precision

attr | character(1) | | | '@'::bpchar

Indexes:

"zhprs_custom_word_pkey" PRIMARY KEY, btree (word)

Check constraints:

"zhprs_custom_word_attr_check" CHECK (attr = '@'::bpchar OR attr = '!'::bpchar)

Custom words are stored in the word column. The tf and idf columns set the weight of a custom word; see term frequency-inverse document frequency (TF-IDF). The attr column marks the entry as either a custom word for segmentation ('@', the default) or a stop word ('!').

In AnalyticDB for PostgreSQL, a custom thesaurus is database-level and stored in the data directory of the corresponding database for each data node. The following shows how to use the custom thesaurus in AnalyticDB for PostgreSQL.

Let’s use the previous example statement 你好,这是一条中文测试文本 as an example. If we expect 测试 and 文本 not to be segmented into two words and expect 测试文本 to be a single-word segmentation, we only need to insert the corresponding word segmentation into the zhparser.zhprs_custom_word system table and reload it to take effect.

postgres=# insert into zhparser.zhprs_custom_word values('测试文本');

INSERT 0 1

postgres=# select sync_zhprs_custom_word(); --load custom word segmentation

sync_zhprs_custom_word

------------------------

(1 row)

postgres=# \q --Reestablish the connection

postgres=# select to_tsvector('zh_cn','你好,这是一条中文测试文本');

to_tsvector

-----------------------------------------

'中文':3 '你好':1 '测试文本':4 '这是':2

(1 row)

The custom thesaurus also supports the stop word feature. For example, if we do not want the word 这是 as a separate word segmentation, we can insert the corresponding word and control symbol in the custom thesaurus to stop that specific word segmentation.

postgres=# insert into zhparser.zhprs_custom_word(word, attr) values('这是','!');

INSERT 0 1

postgres=# select sync_zhprs_custom_word();

sync_zhprs_custom_word

------------------------

(1 row)

postgres=# \q --Reestablish the connection

postgres=# select to_tsvector('zh_cn','你好,这是一条中文测试文本');

to_tsvector

---------------------------------------

'中文':3 '你好':1 '是':2 '测试文本':4

(1 row)

Full-text search and query services may involve a large amount of text data, and using indexes properly can improve query performance. An inverted index is a data structure that maps each word to the texts (and positions) in which it appears, and it is used to handle large volumes of text searches in data systems. How does an inverted index improve text search performance? Let's explain it with an example.

Suppose a table named Document stores a series of texts, each with a corresponding ID. The structure of this table is listed below:

| ID | Text |
| --- | --- |
| 1 | This is a Chinese test text. |
| 2 | Use of Chinese word segmentation plug-in |
| 3 | Database Full-text Search |
| 4 | Chinese-based Full-text Search |

When we want to find all the texts containing the word 中文 (Chinese), we need to scan the contents of the Text column one by one under this data structure. When there is a large amount of data, such queries are costly. We can solve this problem by creating an inverted index, whose structure records the words in each text and the texts in which each word appears. A possible inverted index data structure is listed below:

| Term | ID |
| --- | --- |
| Chinese | 1, 2, 4 |
| Full Text | 3, 4 |
| Database | 3 |
| Text | 1 |
| ... | ... |

With this data structure, it is simple to find all the texts containing 中文 (Chinese). The IDs of the matching texts can be located directly from the index, which avoids scanning a large amount of text data.
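The mapping from terms to IDs can be sketched in plain SQL by expanding each tsvector into its words and grouping by word. This is only a conceptual illustration of the data structure, not how an index is implemented internally. It assumes the English sample rows from the table above, the built-in `'english'` configuration, and a temporary table named `document`; the `unnest` function for tsvector is part of PostgreSQL.

```sql
-- Hypothetical sample data mirroring the Document table above.
CREATE TEMP TABLE document (id int, text text);

INSERT INTO document VALUES
    (1, 'This is a Chinese test text.'),
    (2, 'Use of Chinese word segmentation plug-in'),
    (3, 'Database Full-text Search'),
    (4, 'Chinese-based Full-text Search');

-- Expand every text into its normalized words, then collect,
-- for each word, the IDs of the texts that contain it.
SELECT u.lexeme                      AS term,
       array_agg(d.id ORDER BY d.id) AS ids
FROM document AS d,
     unnest(to_tsvector('english', d.text)) AS u
GROUP BY u.lexeme
ORDER BY u.lexeme;
```

The terms in the result are normalized words, so they will not match the hand-written table exactly (hyphenated words, for instance, are split into several entries), but the shape is the same: one row per term with the list of IDs that contain it.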

AnalyticDB for PostgreSQL provides the Generalized Inverted Index (GIN) to improve the query performance of the tsvector type.

CREATE INDEX text_idx ON document USING GIN (to_tsvector('zh_cn',text));

SQL stored procedures refer to a series of SQL statements stored together in a database. A user calls a stored procedure by name, passes parameters to it, and invokes it at the appropriate time to implement the same or different business logic.

The kernel version of AnalyticDB for PostgreSQL has been upgraded to PG 12, which provides better support for stored procedures. Using stored procedures appropriately brings the following benefits in business development:

The stored procedure syntax of AnalyticDB for PostgreSQL is the same as the SQL standard:

CREATE [ OR REPLACE ] PROCEDURE

name ( [ [ argmode ] [ argname ] argtype [ { DEFAULT | = } default_expr ] [, ...] ] )

{ LANGUAGE lang_name

| TRANSFORM { FOR TYPE type_name } [, ... ]

| [ EXTERNAL ] SECURITY INVOKER | [ EXTERNAL ] SECURITY DEFINER

| SET configuration_parameter { TO value | = value | FROM CURRENT }

| AS 'definition'

| AS 'obj_file', 'link_symbol'

} ...

In AnalyticDB for PostgreSQL 6.0, functions can implement most stored procedure features, and we recommend using functions to implement stored procedure logic in that version. However, the stored procedure is still a long-awaited feature for many AnalyticDB for PostgreSQL users and PostgreSQL practitioners. The main reasons are listed below:

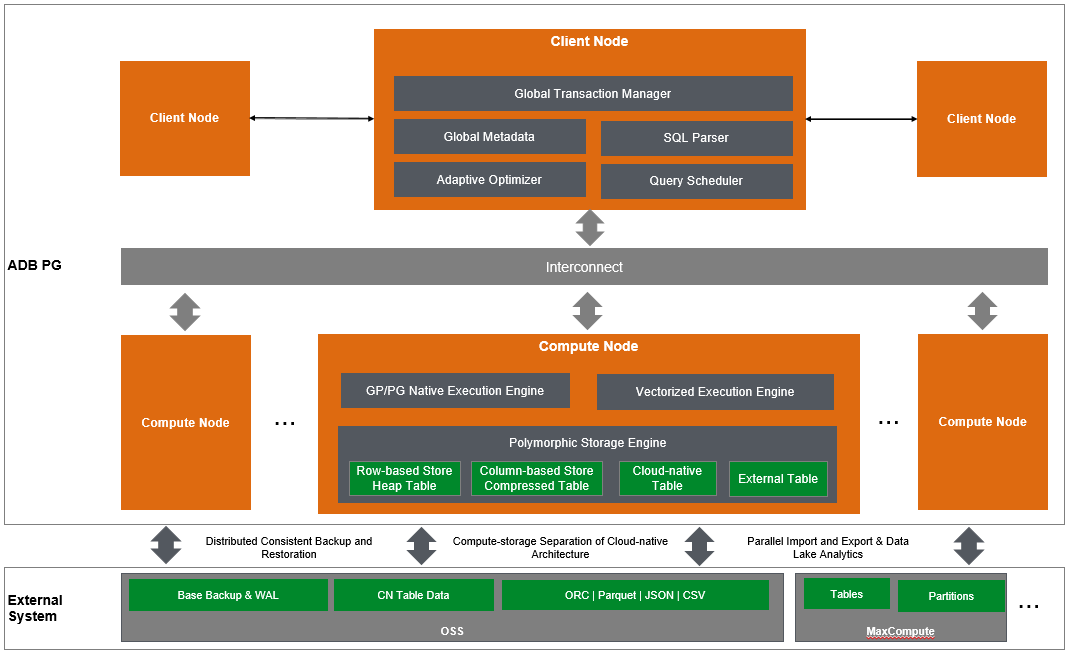
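To make the syntax concrete, here is a minimal sketch of a stored procedure that runs a full-text search and records the result. The tables `document` and `search_log`, the procedure `log_keyword_hits`, and the `'english'` configuration are hypothetical and chosen only for illustration. The sketch also assumes that transaction control inside procedures behaves as it does in PostgreSQL 12, where a procedure invoked with CALL outside an explicit transaction block may issue COMMIT.

```sql
-- Hypothetical tables used by the procedure.
CREATE TABLE IF NOT EXISTS document (id int, text text);
CREATE TABLE IF NOT EXISTS search_log (
    keyword   text,
    hits      bigint,
    logged_at timestamptz DEFAULT now()
);

CREATE OR REPLACE PROCEDURE log_keyword_hits(p_keyword text)
LANGUAGE plpgsql
AS $$
DECLARE
    v_hits bigint;
BEGIN
    -- Count the texts that match the keyword.
    SELECT count(*) INTO v_hits
    FROM document AS d
    WHERE to_tsvector('english', d.text)
          @@ plainto_tsquery('english', p_keyword);

    -- Record the result and commit it inside the procedure,
    -- which a function cannot do.
    INSERT INTO search_log (keyword, hits)
    VALUES (p_keyword, v_hits);
    COMMIT;
END;
$$;

-- Procedures are invoked with CALL rather than SELECT.
CALL log_keyword_hits('search');
```

The in-procedure COMMIT is the main behavioral difference from a function in this sketch; if the call is wrapped in an explicit transaction block, PostgreSQL rejects the COMMIT.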

AnalyticDB for PostgreSQL is built on the open-source projects PostgreSQL and Greenplum. AnalyticDB for PostgreSQL 7.0 was released in 2022. Its PostgreSQL kernel version was upgraded to PG 12, making it more competitive in terms of features, performance, enterprise-level capability, and security. AnalyticDB for PostgreSQL adopts a Massively Parallel Processing (MPP) architecture to provide flexible scalability and efficient data analysis performance, and it supports distributed transactions and provides highly available database services. The architecture of AnalyticDB for PostgreSQL is as follows:

AnalyticDB for PostgreSQL consists of client nodes and compute nodes. The client nodes are responsible for global transaction management, global metadata storage, SQL parsing and rewriting, execution plan generation, adaptive plan optimization, and compute scheduling. The compute nodes mainly include execution engines and storage engines. The execution engines include the native engine of Greenplum and PostgreSQL and a self-developed vectorized engine optimized for data analysis scenarios. The polymorphic storage engine supports local row-oriented heap tables, column-oriented compressed tables, external tables, and cloud-native tables based on the compute-storage separation architecture. Both client nodes and compute nodes use dual replicas to ensure high availability, and computing and storage resources can be scaled linearly through horizontal and vertical scaling.

Users can flexibly select and configure compute nodes and storage nodes to meet resource requirements for data processing and analysis performance by using the distributed data warehouse architecture of AnalyticDB for PostgreSQL.
