By Wang Xu, Senior Technical Expert at Ant Financial

This article is compiled from Lecture 28 of "CNCF x Alibaba Cloud-Native Technology Open Course".

Phil Karlton once said, "There are only two hard things in computer science: cache invalidation and naming things."

Whether you are an expert or a novice developer, naming is hard, and containers are a case in point. In system software, what we now call Linux container technology has gone by the names jail, zone, virtual server, and sandbox. Likewise, in the early virtualization technology stack, a type of virtual machine (VM) was also called a container. After all, the word "container" simply refers to something used to contain, encapsulate, and isolate objects. The word is so widely used that Wikipedia, which is well known for its rigor, covers the topic under an entry about OS-level virtualization, which sidesteps the question of what a container actually is.

Docker was born in 2013. Over the following several years, the container concept, together with immutable infrastructure and cloud-native, replaced the fine-grained combined deployment mode of "software package + configuration" and defined a software stack through a simple declarative policy and immutable container. On the subject of application deployment, I want to stress this point:

A container in the cloud-native context is essentially an application container, which can be understood as an application package that is encapsulated in a standard format and runs in a standard operating system (Linux ABI in most cases), or can be understood as the program or technology that runs this application package.

This is my definition, but it is backed up by the Open Container Initiative (OCI). The OCI specifications define the environment of an application in a container and how that application is run. From an OCI-compliant definition, you can see which executable files in the root file system are executed, which user executes them, what type of CPU is required, and what the requirements are for memory resources, external storage, and sharing.

Therefore, an application can be encapsulated into a standard-format container to run in a standard operating system.
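To make this more concrete, here is a minimal sketch of what such a definition can look like, written as a Python dictionary shaped like an OCI runtime-spec `config.json`. It covers only a small subset of the fields, and the helper name `minimal_oci_config` and all values (command, user IDs, limits) are illustrative assumptions rather than anything prescribed by the lecture.

```python
import json


def minimal_oci_config(args, uid=1000, gid=1000,
                       memory_limit_bytes=256 * 1024 * 1024):
    """Build a small OCI runtime-spec style config as a plain dict.

    Hypothetical helper for illustration; real configs carry many more
    fields (mounts, namespaces, capabilities, and so on).
    """
    return {
        "ociVersion": "1.0.2",
        "process": {
            # Which user runs the application.
            "user": {"uid": uid, "gid": gid},
            # Which executable in the root file system is run, with arguments.
            "args": list(args),
            "env": ["PATH=/usr/local/bin:/usr/bin:/bin"],
            "cwd": "/",
        },
        # Where the container's root file system lives.
        "root": {"path": "rootfs", "readonly": True},
        "linux": {
            "resources": {
                # Memory and CPU requirements (illustrative values).
                "memory": {"limit": memory_limit_bytes},
                "cpu": {"shares": 512},
            },
        },
    }


config = minimal_oci_config(["/bin/sh", "-c", "echo hello"])
print(json.dumps(config, indent=2))
```

An OCI runtime, whether a conventional one or a secure container runtime, consumes a document of this shape and is responsible for creating an environment that satisfies it.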

Building on this consensus, we can turn to the secure container concept. My co-founder Zhao Peng and I once named our technology "virtual container". To attract attention, we used the slogan "Secure as VM, Fast as Container". Users beset with security issues started to call our technology "secure container". The name "secure container" stuck even though our technology only provides extra isolation in a security system. We define secure containers as follows:

A secure container is a runtime technology that provides a complete operating system execution environment (Linux ABI in most cases) to container applications. A secure container isolates application execution from the host operating system to prevent the application from directly accessing host resources. This provides extra protection between containers and the host or between containers.

This is a detailed definition of secure containers.

Secure containers are related to the idea of an indirection layer, that is, an extra layer of isolation. Linus Torvalds described this idea at LinuxCon 2015 as follows:

A security issue arises from a bug and can be avoided by adding an isolation layer.

Why do we introduce the isolation layer to ensure security? In a large operating system like Linux, it is theoretically impossible to verify whether programs are free of bugs. A security threat escalates into a security issue when a bug is exploited. A security framework and patching do not guarantee security, so extra isolation is required to reduce vulnerabilities and the risk of intrusion when a vulnerability is exploited.

This is how secure containers came about.

In December 2017, we launched the Kata Containers project at KubeCon. It is a secure container project based on two earlier projects: runV and Intel's Clear Container. Both projects were initiated in May 2015, before the speech given by Linus Torvalds at LinuxCon 2015.

The two projects are easy enough to understand.

However, VMs do not run fast enough, which hinders their use in a container environment. If we can make VMs as fast as containers, we can develop a secure container technology that uses a VM as its isolation layer. This is one of the ideas behind Kata Containers. We wanted to create a pod sandbox in Kubernetes through a VM. Kata Containers has used QEMU, Firecracker, ACRN, and Cloud Hypervisor to provide VM functions.

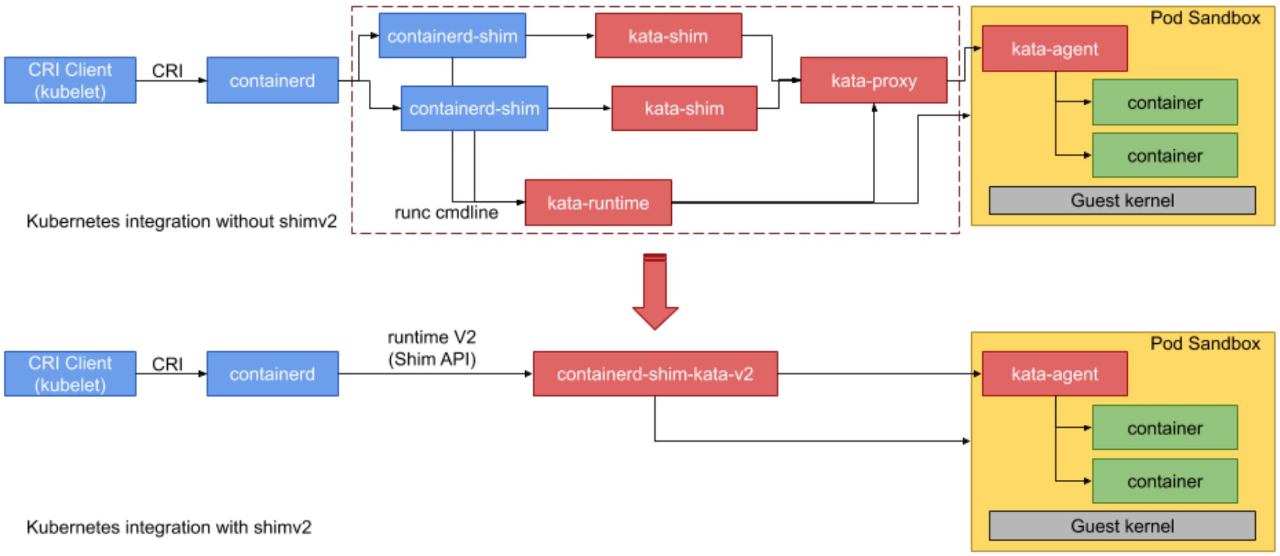

The following figure shows how to integrate Kata Containers with Kubernetes through containerd. CRI-O can also be used.

Kata Containers is generally used in Kubernetes. First, Kubelet finds containerd or CRI-O through the container runtime interface (CRI). At this stage, image operations such as pulling images are performed by containerd or CRI-O. According to the request, containerd or CRI-O converts the runtime-related requirements to an OCI spec and sends it to the OCI runtime for execution. The OCI runtime can be kata-runtime (shown in the upper part of the figure) or the simplified containerd-shim-kata-v2 (shown in the lower part). The process is as follows:

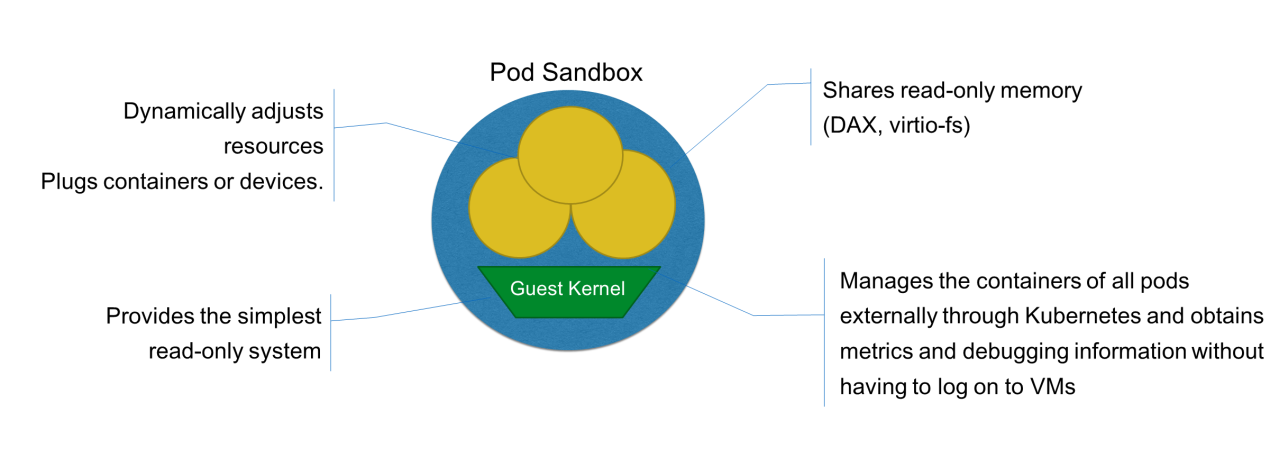
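The last hop in this flow, from a runtime selected in Kubernetes to the shim binary named above, can be sketched as follows. The sketch assumes the containerd convention of deriving the shim binary name from the last two dot-separated parts of the runtime type (for example `io.containerd.kata.v2`). The function names, the RuntimeClass name `kata`, and the pod name and image are hypothetical, and this is not containerd's own code.

```python
def shim_binary_name(runtime_type: str) -> str:
    """Derive a shim v2 binary name from a containerd runtime type.

    Mirrors the naming convention only; assumes a dotted type such as
    "io.containerd.kata.v2".
    """
    parts = runtime_type.split(".")
    if len(parts) < 2:
        raise ValueError(f"unexpected runtime type: {runtime_type!r}")
    name, version = parts[-2], parts[-1]
    return f"containerd-shim-{name}-{version}"


def runtime_class(name: str, handler: str) -> dict:
    """A Kubernetes RuntimeClass object as a plain dict."""
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        # The handler must match a runtime configured in containerd or CRI-O.
        "handler": handler,
    }


def pod_manifest(name: str, image: str, runtime_class_name: str) -> dict:
    """A pod that asks to run inside the sandbox chosen by a RuntimeClass."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "runtimeClassName": runtime_class_name,
            "containers": [{"name": name, "image": image}],
        },
    }


kata_class = runtime_class("kata", handler="kata")        # hypothetical names
pod = pod_manifest("demo", "example.com/demo:latest", "kata")
print(shim_binary_name("io.containerd.kata.v2"))
```

The pod itself only names a RuntimeClass. Which sandbox technology backs that class is decided by the node's runtime configuration, which is what lets the same pod definition run with or without the extra isolation layer.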

In the pod sandbox, a guest kernel runs container packages and applications, but it does not contain a complete operating system. Kata Containers is not like a conventional VM. From the container's perspective, Kata Containers only includes the container engine and reduces memory overhead by lowering memory usage and sharing memory resources.

Compared with a conventional VM, Kata Containers incurs less overhead and starts up faster. In most cases, it is as secure as a VM and runs as fast as a container. Beyond security, Kata Containers provides advantages that conventional VMs lack, such as improved elasticity and no need for manual operations on physical machines. Related technologies include dynamic resource hot-plugging and virtio-fs. With virtio-fs, Kata Containers shares content of the host file system (such as the container rootfs) with the VM.

The direct access (DAX) technology designed for non-volatile storage and memory can be used to share read-only memory resources between pod sandboxes, between pods, and between containers. This saves a lot of memory across pod sandboxes. Kubernetes manages the containers of all pods from the outside, and obtains metrics and debugging information from the outside as well. This saves users the trouble of logging on to VMs. Kata Containers is operated like a container while working like a VM at the underlying layer. In other words, it is virtualization built for cloud-native.

gVisor is also called process-level virtualization and is different from Kata Containers.

In May 2018, at KubeCon in Copenhagen, Google responded to Kata Containers by releasing gVisor, a secure container that it had developed internally over five years. gVisor is a secure container solution different from Kata Containers.

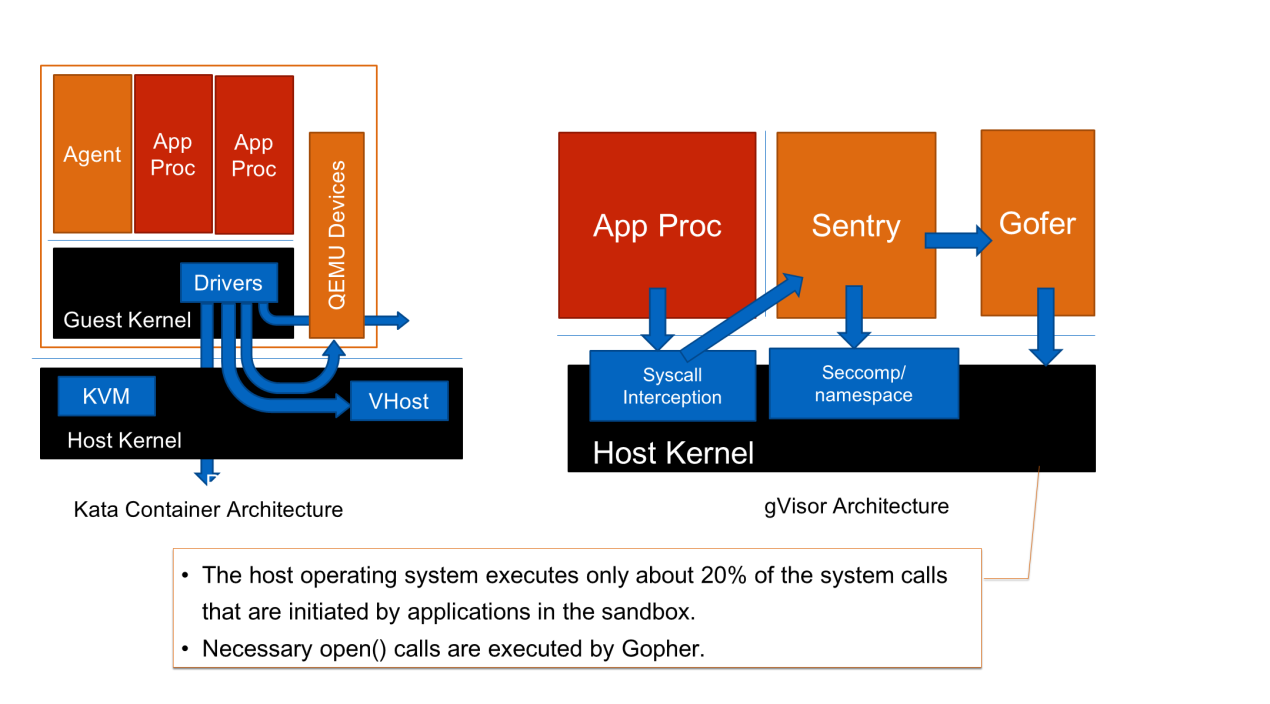

Kata Containers builds an inter-container isolation layer by combining and transforming existing isolation technologies. gVisor's design is more concise.

As shown on the right in the preceding figure, gVisor is an operating system kernel rewritten in the Go language that runs in user mode. This kernel is called Sentry, and it does not depend on virtualization or VMs. gVisor uses an internal capability called a platform, which relies on the host operating system, to redirect the application's operating system requests to Sentry, so that Sentry performs all operating system operations on behalf of the application. Some of these operations are passed on to the host operating system, but the majority are completed by Sentry itself.

gVisor is an application-oriented isolation layer and different from a VM. It is used to run a Linux program in the Linux operating system. As an isolation layer, gVisor ensures security in the following ways:

Linux has about 300 system calls, but Sentry issues only about 60 of them to the host operating system. The developers of gVisor studied operating system security and found that most successful attacks against the operating system come through infrequently used system calls.

Infrequently used system calls are implemented on legacy paths and maintained by a small number of developers. The code on popular paths is more secure because it has been reviewed many times. Therefore, gVisor is designed to keep applications from reaching infrequently used system calls on the host operating system and to have Sentry process these requests instead.

Security is significantly improved if requests from Sentry to the host are made only through system calls on verified, mature, and popular paths. The number of system calls exposed is reduced by 4/5 (from about 300 to about 60), and the likelihood of a successful attack drops to even less than 1/5.
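The idea can be illustrated with a toy model: a user-space kernel answers application system calls itself and may only reach the host through a short allowlist. This is a sketch of the principle, not gVisor's implementation. The class `ToySentry`, the allowlist contents, and the handling of each call are assumptions made up for the example.

```python
import errno

# Illustrative subset only; not gVisor's real list of host system calls.
HOST_ALLOWLIST = frozenset({"read", "write", "futex", "mmap", "epoll_wait"})


class ToySentry:
    """Toy user-space kernel that mediates every application system call."""

    def __init__(self, host_allowlist):
        self.host_allowlist = frozenset(host_allowlist)
        self.host_calls = []   # record of calls actually made to the host
        self._pid = 1          # process ID as seen inside the sandbox

    def _host(self, name):
        # The only path to the host: refuse anything off the allowlist.
        if name not in self.host_allowlist:
            raise PermissionError(f"host syscall {name!r} is not allowed")
        self.host_calls.append(name)

    def handle(self, name):
        """Handle one system call issued by the sandboxed application."""
        if name == "getpid":
            return self._pid           # answered entirely in user space
        if name == "write":
            self._host("write")        # needs the host, via a popular path
            return 0
        # Anything else is refused inside the sandbox and never forwarded.
        return -errno.ENOSYS


sentry = ToySentry(HOST_ALLOWLIST)
for call in ("getpid", "write", "ptrace"):
    print(call, sentry.handle(call))

# The reduction described above: about 60 of about 300 calls remain exposed.
exposed_fraction = 60 / 300
print(f"exposed fraction of host system calls: {exposed_fraction:.2f}")
```

The application can still make any call it likes, but the host only ever sees the few calls recorded in `host_calls`.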

In UNIX, where almost everything is a file, open() can be used to perform many different actions. Therefore, many attacks are mounted through open(). In gVisor, open() is implemented in an independent process called Gofer. An independent process is easy to restrict with secure computing mode (seccomp) and to protect with system limits and capability drops. Gofer can do far less than an unrestricted open() call and can run as a non-root user. This improves system security.
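The following sketch illustrates the principle of mediated file access: a single narrow component owns all file opens and refuses anything outside the directory it exports. It is an in-process toy under stated assumptions. The real Gofer is a separate, sandboxed process, and the class `ToyGofer` and its method names are hypothetical.

```python
import os
import tempfile


class ToyGofer:
    """Toy file broker that only serves reads under one exported root."""

    def __init__(self, root):
        # Resolve symlinks once so later comparisons are reliable.
        self.root = os.path.realpath(root)

    def _resolve(self, path):
        # Treat the requested path as relative to the exported root.
        candidate = os.path.realpath(
            os.path.join(self.root, path.lstrip("/")))
        # Reject paths that escape the root, for example through "..".
        if os.path.commonpath([self.root, candidate]) != self.root:
            raise PermissionError(f"{path!r} escapes the exported root")
        return candidate

    def read_file(self, path):
        """The only operation offered: read a file inside the root."""
        with open(self._resolve(path), "rb") as f:
            return f.read()


with tempfile.TemporaryDirectory() as rootfs:
    with open(os.path.join(rootfs, "hello.txt"), "wb") as f:
        f.write(b"hello from the sandbox")

    gofer = ToyGofer(rootfs)
    print(gofer.read_file("/hello.txt"))

    try:
        gofer.read_file("../etc/passwd")
    except PermissionError as err:
        print("refused:", err)
```

Because every file request passes through one small component with one job, that component can be locked down much more tightly than a general-purpose kernel interface.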

The Go language helps improve memory safety, so gVisor can better defend against attacks and avoid memory issues. However, the Go language cannot meet certain system-level requirements. gVisor developers admitted that they had made many adjustments to the Go runtime and contributed these adjustments back to the Go community.

The gVisor architecture is simple, elegant, and clean. As a result, it is gaining favor among many developers. However, re-implementing a kernel is something only a software giant like Google can do. A similar implementation is Microsoft's WSL 1. Despite its advanced design, gVisor is not free of issues.

Therefore, gVisor will not be able to provide a perfect solution anytime soon, but it can be adapted to certain scenarios and can provide great inspiration. I think this inspiration may influence the future development of operating systems and CPU instruction sets. I believe that Kata Containers and gVisor will continue to evolve, and I hope that a common solution to all application execution issues will become available.

A secure container provides an isolation layer and other features that go beyond security.

A secure container implements isolation to protect the host from application issues, which may arise from external attacks or unexpected errors. The secure container also prevents different pods from affecting each other. This extra isolation layer provides something more than security. The secure container improves system scheduling, quality of service (QoS), and application information protection.

The traditional container technology of operating systems is an extension of kernel process management. A container process is a set of associated processes that are visible to a host scheduling system. All the containers or processes in a pod are scheduled and managed by the host. This means that the existence of many containers places a heavy load on the host kernel. This load incurs considerable overhead in many actual environments.

Thanks to constantly advancing computer technologies, a single host may have a large number of CPUs and hundreds of gigabytes of memory. If many containers are allocated on it, high overhead is incurred in the scheduling system. With secure containers, the host no longer sees the complete information of every container process. The isolation layer schedules the applications inside it, so the host only schedules sandboxes. This reduces the host's scheduling overhead and improves scheduling efficiency.

The secure container isolates each application to avoid interference between containers and between containers and the host. This improves QoS. A secure container is designed to protect the host from the impact of malicious or problematic applications within containers. The secure container can also be deployed on the cloud to protect against malicious attacks.

A secure container prevents unauthorized access to user data by encapsulating everything that the user runs. Application data is protected by sandboxes, and access to this data is not required during host O&M. Accessing user data on the cloud requires user authorization, but users cannot always be sure whether granting access will leave them open to attack. If sandboxes are properly encapsulated, extra access authorization by users is not required. This improves the protection of user privacy.

Considering the prospects for future development, a secure container is more than security isolation. The secure container provides an isolation layer whose kernel is independent of the host kernel and is dedicated to applications and services. This allows the functions of the host and applications to be properly allocated and optimized. With such great potential, the secure containers of the future may be able to reduce isolation overhead and improve application performance. The isolation technology will improve the cloud-native infrastructure.

Let's summarize what we have learned in this article.
