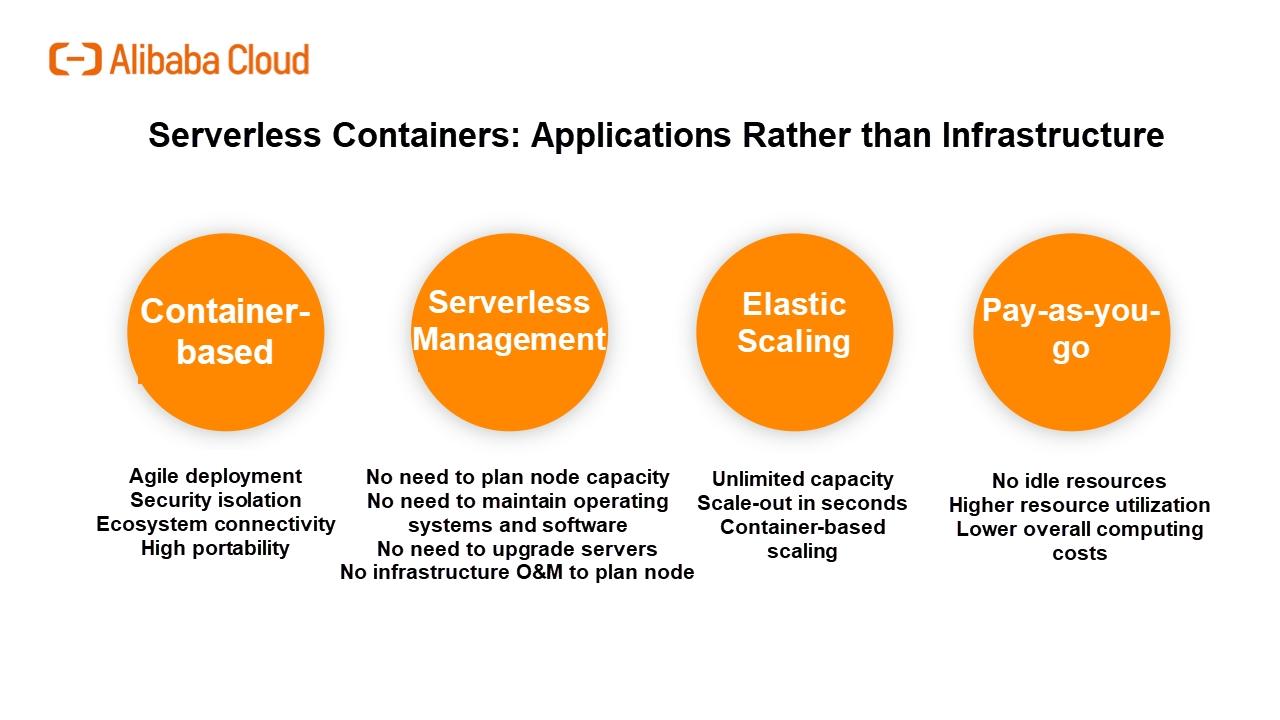

Serverless containers allow deploying container applications without purchasing or managing servers. Serverless containers significantly improve the agility and elasticity of container application deployment and reduce computing costs. This allows users to focus on managing business applications rather than infrastructure, which greatly improves application development efficiency and reduces O&M costs.

Kubernetes has become the de facto standard for container orchestration in the industry. Kubernetes-based cloud-native application ecosystems, such as Helm, Istio, Knative, Kubeflow, and Spark on Kubernetes, use Kubernetes as a cloud operating system. Serverless Kubernetes has attracted the attention of cloud vendors. On the one hand, it simplifies Kubernetes management through a serverless mode, freeing businesses from capacity planning, security maintenance, and troubleshooting for Kubernetes clusters. On the other hand, it further unleashes the capabilities of cloud computing by implementing security, availability, and scalability in the infrastructure, which lets vendors differentiate themselves from the competition.

Unlike standard Kubernetes, serverless Kubernetes is deeply integrated with Infrastructure as a Service (IaaS). The product modes of serverless Kubernetes help public cloud vendors improve their scale, efficiency, and capabilities through technological innovation. In terms of architecture, serverless containers are divided into two layers: container orchestration and the computing resource pool. Next, we will take a close look at the two layers and share our key ideas about the serverless container architecture and products.

The success of Kubernetes in container orchestration is due to the reputation of Google and the hard work of the Cloud Native Computing Foundation (CNCF). In addition, it is backed by Google Borg's experience and improvements in large-scale distributed resource scheduling and automated O&M. The technical points are as follows:

1. Declarative APIs: Kubernetes uses declarative APIs, so developers can focus on their applications rather than system execution details. For example, resource types such as Deployment, StatefulSet, and Job abstract different types of workloads. The level-triggered implementation built on declarative APIs makes Kubernetes a more robust distributed system than an edge-triggered implementation would.

2. Extensible Architecture: All Kubernetes components are implemented and interact with each other based on consistent and open APIs. Third-party developers provide domain-specific extensions through Custom Resource Definitions (CRDs) or Operators, which greatly expands the capabilities of Kubernetes.

3. Portability: With abstractions such as the LoadBalancer Service type, Ingress, Container Network Interface (CNI), and Container Storage Interface (CSI), Kubernetes shields business applications from the implementation differences of the underlying infrastructure and allows flexible migration.
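The difference between level-triggered and edge-triggered control mentioned in the first point can be illustrated with a minimal sketch. This is not Kubernetes code: `DeploymentSpec`, `reconcile`, and the pod naming scheme are hypothetical simplifications, assumed here only to show that a level-triggered controller compares the desired state with the observed state on every pass instead of reacting to individual events.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DeploymentSpec:
    """Desired state declared by the user (hypothetical, simplified)."""
    name: str
    replicas: int


def reconcile(spec: DeploymentSpec, running_pods: List[str]) -> List[str]:
    """Return the pod list after one level-triggered reconciliation pass.

    Only the desired state and the currently observed state are inspected,
    never a history of events, so a missed or duplicated notification
    cannot leave the system permanently wrong.
    """
    pods = list(running_pods)
    # Scale up: add missing pod names until the desired count is reached.
    next_index = 0
    while len(pods) < spec.replicas:
        candidate = f"{spec.name}-{next_index}"
        if candidate not in pods:
            pods.append(candidate)
        next_index += 1
    # Scale down: drop surplus pods from the end of the list.
    del pods[spec.replicas:]
    return pods


spec = DeploymentSpec(name="web", replicas=3)
state = reconcile(spec, [])       # initial rollout
state.remove("web-1")             # simulate a pod crash
state = reconcile(spec, state)    # the next pass restores the desired level
print(sorted(state))
```

Running `reconcile` again on an already converged state changes nothing, which is the property that makes this style of control tolerant of lost or repeated events.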

Serverless Kubernetes must be compatible with the Kubernetes ecosystem, provide the core value of Kubernetes, and be deeply integrated with cloud capabilities.

It allows users to directly use Kubernetes' declarative APIs and is compatible with Kubernetes application definitions, requiring no changes to Deployment, StatefulSet, Job, and Service.
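As an illustration of what such an unchanged application definition looks like, the sketch below builds a standard `apps/v1` Deployment manifest and renders it as JSON. The name `web`, the label `app: web`, and the image tag are hypothetical values chosen for the example; nothing in the manifest is specific to a serverless cluster, which is the compatibility claim made above.

```python
import json

# Hypothetical example values: the name "web", the label app=web, and the
# image tag are illustrative and do not come from the article.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 2,
        # The selector must match the labels on the Pod template below.
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {
                "containers": [
                    {
                        "name": "web",
                        "image": "nginx:1.25",
                        "ports": [{"containerPort": 80}],
                    }
                ]
            },
        },
    },
}

rendered = json.dumps(deployment, indent=2)
print(rendered)
```

Kubernetes accepts manifests in JSON as well as YAML, so the printed output could be saved to a file and submitted with `kubectl apply -f`.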

Serverless Kubernetes is fully compatible with the extension mechanism, allowing it to support more workloads. In addition, the components of serverless Kubernetes strictly follow the Kubernetes control model of continuously reconciling the actual state toward the desired state.

Serverless Kubernetes makes full use of cloud capabilities, such as resource scheduling, load balancing, and service discovery. This radically simplifies the design of container platforms, increases the scale, and reduces O&M complexity. These implementations are transparent to users, which ensures portability. In this way, existing applications can be smoothly deployed on serverless Kubernetes, or deployed on both traditional containers and serverless containers.

Read this article to learn more about serverless Kubernetes, including an analysis of its architecture design and infrastructure.

Kubernetes pools all Node resources in the cluster into a single resource pool, and its scheduling unit is the Pod. A Pod can contain multiple containers. Picture a person holding ECS or other computing resources in the left hand and containers in the right hand, and then matching the two: that person is playing the role of a container orchestration system.

The Cloud Native concept comes up frequently these days, and many people are confused about the connection between Cloud Native and Kubernetes. How can we determine whether an application is a Cloud Native application? In my opinion, there are three criteria:

First, the application can treat resources as a pool;

Second, the application can quickly access the network of the pool. Kubernetes has its own independent network layer, so I only need to specify the name of the service I want to access, and service discovery, for example through a service mesh, takes care of the rest;

Third, the application supports failover. If a single host or node in the pool going down makes the entire application unavailable, then it is definitely not a Cloud Native application.

Judged against these three criteria, Kubernetes does very well. First, consider the resource pool: a large Kubernetes cluster is a resource pool, and we no longer have to worry about which host an application runs on. All we have to do is submit the deployment YAML file to Kubernetes, which schedules the application automatically and gives it quick access to the network shared by the entire application. In addition, failover is automatic. This article will share how to implement an elastic CI/CD system based on Kubernetes.
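Accessing a service by name, as described in the second criterion, relies on the DNS names that Kubernetes assigns to Services. The helper below is a minimal sketch of that naming convention; the function name `service_dns_name` and the example service `jenkins` in namespace `ci` are hypothetical, and `cluster.local` is the usual default cluster domain, which a given cluster may override.

```python
def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Build the fully qualified in-cluster DNS name of a Service.

    Kubernetes cluster DNS publishes Services under
    <service>.<namespace>.svc.<cluster-domain>.
    """
    return f"{service}.{namespace}.svc.{cluster_domain}"


# Hypothetical example: a CI server exposed as Service "jenkins" in namespace "ci".
print(service_dns_name("jenkins", namespace="ci"))
```

Pods in the same namespace can normally use the short name alone (here `jenkins`), because the namespace and cluster domain are added through the DNS search path configured for the Pod.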

Taints spoil a node electronically - marking it as undesirable for Pods. Pods specify tolerations - meaning they will tolerate a node with certain taints.

You can use taints and tolerations to deliberately prevent certain Pods from running on a node, or to deliberately let certain Pods run on a node (for example, Pods that need SSDs or GPUs).

This tutorial contains several examples of taints and tolerations to help you get practical experience of this abstract concept.
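To make the matching rule concrete, here is a minimal sketch of how a toleration is compared with a taint. It is a simplified model written for illustration, not the scheduler's code: the function names `tolerates` and `can_schedule` and the `gpu=true` taint are hypothetical, and the sketch treats only the `NoSchedule` and `NoExecute` effects as blocking, since `PreferNoSchedule` is a soft preference.

```python
from typing import Dict, List


def tolerates(toleration: Dict[str, str], taint: Dict[str, str]) -> bool:
    """Return True if a single toleration matches a single taint."""
    # An empty effect on the toleration matches every taint effect.
    effect = toleration.get("effect", "")
    if effect and effect != taint["effect"]:
        return False
    operator = toleration.get("operator", "Equal")  # "Equal" is the default
    key = toleration.get("key", "")
    if operator == "Exists":
        # An empty key with "Exists" matches every taint key.
        return key == "" or key == taint["key"]
    # "Equal": both key and value must match.
    return key == taint["key"] and toleration.get("value", "") == taint.get("value", "")


def can_schedule(tolerations: List[Dict[str, str]], taints: List[Dict[str, str]]) -> bool:
    """A Pod fits a node only if every blocking taint is tolerated."""
    blocking = [t for t in taints if t["effect"] in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, taint) for tol in tolerations) for taint in blocking)


# Hypothetical node taint, as would be set by: kubectl taint nodes node1 gpu=true:NoSchedule
gpu_taint = {"key": "gpu", "value": "true", "effect": "NoSchedule"}
gpu_toleration = {"key": "gpu", "operator": "Equal", "value": "true", "effect": "NoSchedule"}

print(can_schedule([], [gpu_taint]))
print(can_schedule([gpu_toleration], [gpu_taint]))
```

A Pod without the toleration is kept off the tainted node, while a Pod that carries it is allowed there. Note that a toleration only permits placement; steering a Pod onto a particular node additionally needs a node selector or node affinity.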

Container Service for Kubernetes (ACK) is a fully managed service. ACK is integrated with services such as virtualization, storage, networking, and security, providing users with high-performance, scalable Kubernetes environments for containerized applications. Alibaba Cloud is a Kubernetes Certified Service Provider (KCSP), and ACK is certified by the Certified Kubernetes Conformance Program, which ensures a consistent Kubernetes experience and workload portability.

Elastic Container Instance (ECI) is an agile and secure serverless container instance service. You can easily run containers without managing servers, and you pay only for the resources that the containers consume. ECI helps you focus on your business applications instead of managing infrastructure.

This course is intended for IT companies that want to containerize their business applications, and for cloud computing engineers or enthusiasts who want to learn container technology and Kubernetes. After completing this course, you will understand what Kubernetes is, why we need it, its basic architecture, its core concepts and terms, and how to build a Kubernetes cluster on the Alibaba Cloud platform, which provides a reference for evaluating, designing, and implementing application containerization.

Through this course, you will learn about Alibaba Cloud Container Service for Kubernetes and its applicable scenarios, and also how to use Terraform to flexibly deploy ACK clusters and implement blue-green deployment.
