By Li Jinsong (Zhixin)

As a unified computing engine, Flink is designed to provide a unified stream and batch processing experience and technology stack. Flink 1.9 incorporated the Blink code, and Flink 1.10 added many functional improvements and performance optimizations. Flink now runs all TPC-DS queries and is very competitive in performance. With the 1.10 release, Flink is a production-ready SQL engine with unified batch and stream processing capabilities.

In the big data batch computing field, with the maturity of the Hive data warehouse, the most popular model is the Hive Metastore + computing engine. Common computing engines include Hive on MapReduce, Hive on Tez, Hive on Spark, Spark Integrate Hive, Presto Integrate Hive, and Flink Batch SQL, now that it has reached production availability with the release of Flink 1.10.

The performance and cost of the selected computing engine are critical for building a computing platform. Therefore, Ververica's flink-sql-benchmark [1] project provides a TPC-DS benchmark tool based on Hive Metastore so that tests more closely resemble real production jobs.

This benchmark uses 20 hosts to test three engines: Flink 1.10, Hive 3.0 on MapReduce, and Hive 3.0 on Tez. Engine performance is compared along two dimensions:

1) Flink 1.10 vs. Hive 3.0 on MapReduce

2) Flink 1.10 vs. Hive 3.0 on Tez

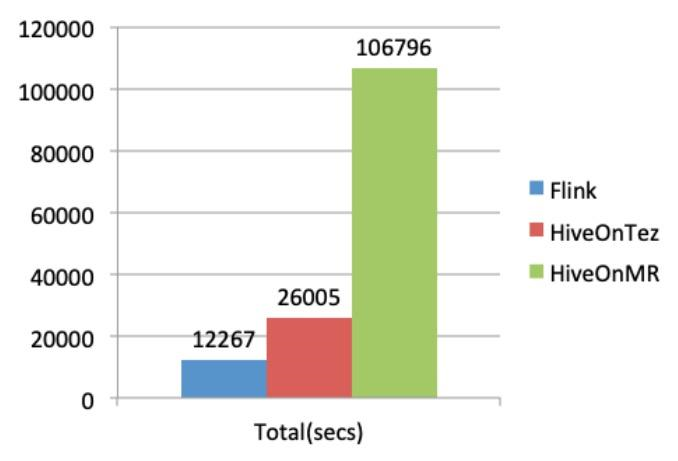

The total duration performance of the three engines is shown in the following figure:

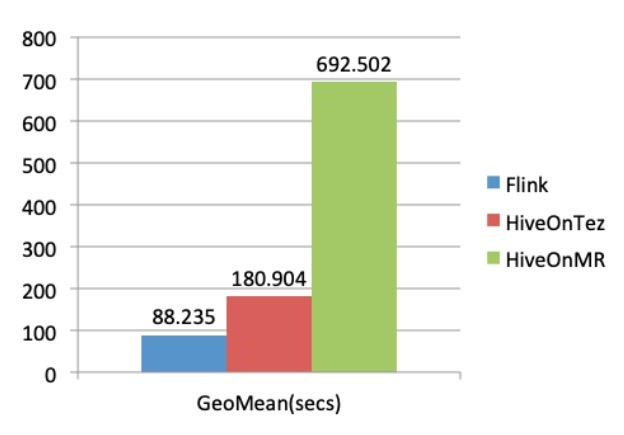

The geometric mean results are shown in the following figure:

This article tests the preceding engines only on a 10 TB dataset. You can choose a dataset size that suits your cluster and use the flink-sql-benchmark tool to run comparison tests for more engines.
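The two summary metrics used above weigh queries differently: the total duration is dominated by the longest queries, while the geometric mean reflects the typical per-query ratio. The following is a minimal Python sketch of both calculations. The per-query times are made up purely for illustration and are not results from this benchmark.

```python
import math

def total_duration(times_s):
    """Sum of per-query execution times, in seconds."""
    return sum(times_s)

def geometric_mean(times_s):
    """Geometric mean computed through logarithms to avoid overflow."""
    if not times_s or any(t <= 0 for t in times_s):
        raise ValueError("times must be non-empty and positive")
    return math.exp(sum(math.log(t) for t in times_s) / len(times_s))

# Hypothetical per-query times in seconds (illustrative only).
engine_a = [10.0, 40.0, 1000.0]
engine_b = [20.0, 80.0, 500.0]

print(total_duration(engine_a), total_duration(engine_b))
print(geometric_mean(engine_a), geometric_mean(engine_b))
```

In this invented example, engine_b has the lower total because it is faster on the one long query, while engine_a has the lower geometric mean because it is faster on most queries. This is why it helps to report both metrics.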

Environment and Optimizations

1) Environment Preparation

2) Dataset Generation

i) Generate the TPC-DS dataset in a distributed manner and load the TEXT dataset into Hive. The raw data is in CSV format. We recommend generating the data in a distributed manner because this step is relatively time-consuming. (The flink-sql-benchmark tool integrates the TPC-DS tool.)

ii) Convert Hive TEXT tables to ORC tables. The ORC format is a common Hive data file format, and its row-column hybrid storage facilitates quick analysis and provides a high compression ratio. Run the following query statement:

create table ${NAME} stored as ${FILE} as select * from ${SOURCE}.${NAME};

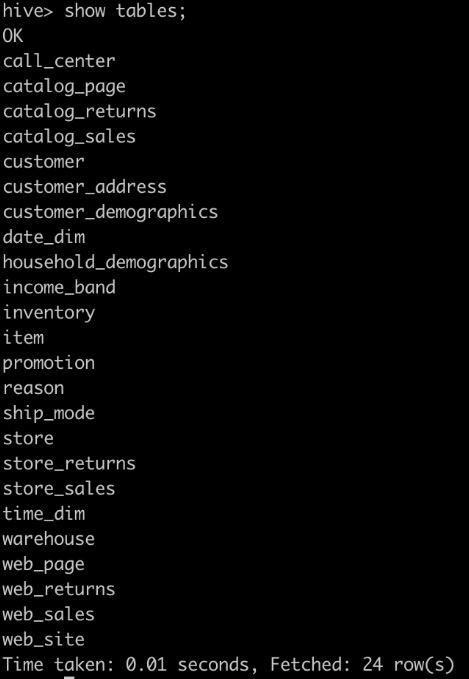

As shown in the figure, 7 fact tables and 17 dimension tables officially described by TPC-DS are generated.

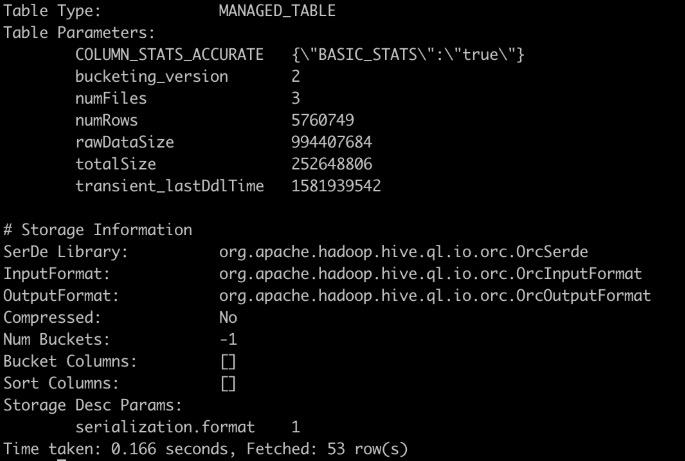

iii) Analyze the Hive tables. Statistics are very important for the query optimization of analytical jobs: for complex SQL statements, the execution efficiency of different plans may vary greatly. Flink reads both Hive table statistics and Hive partition statistics and performs cost-based optimization (CBO) based on them. Run the following command:

analyze table ${NAME} compute statistics for columns;
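Because both statements are templates over the table name, they are easy to generate for every table. The following is a minimal Python sketch, not the way flink-sql-benchmark itself does it. The database name `tpcds_text` and the three-table subset are assumptions chosen for illustration, and the sketch assumes the statements are run in the target ORC database.

```python
from string import Template

# Templates copied from the two statements in the text.
CONVERT = Template(
    "create table ${NAME} stored as ${FILE} as select * from ${SOURCE}.${NAME};"
)
ANALYZE = Template("analyze table ${NAME} compute statistics for columns;")

def build_statements(tables, source_db, file_format="orc"):
    """Return the conversion and statistics statements for each table."""
    statements = []
    for name in tables:
        statements.append(
            CONVERT.substitute(NAME=name, FILE=file_format, SOURCE=source_db)
        )
        statements.append(ANALYZE.substitute(NAME=name))
    return statements

# Assumed names: a small subset of TPC-DS tables and a hypothetical
# database that holds the TEXT tables.
tables = ["store_sales", "date_dim", "item"]
for stmt in build_statements(tables, source_db="tpcds_text"):
    print(stmt)
```

The printed statements can then be saved to a file and submitted with whichever Hive client you already use.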

3) Run Queries on Flink

i) Prepare the Flink environment and build the Flink Yarn Session environment. We recommend using the Standalone or Session mode in order to reuse the Flink process to speed up analytical jobs.

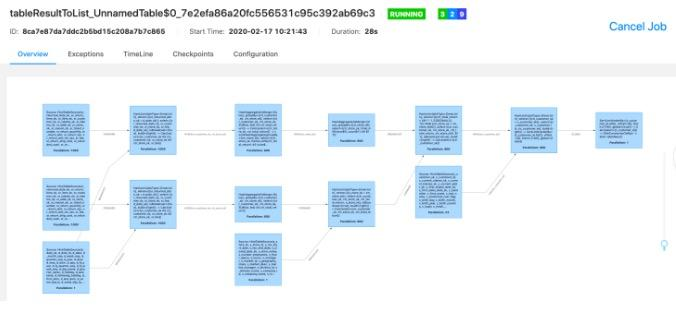

ii) Write code to run the queries and collect execution time statistics. You can directly reuse the flink-tpcds project in flink-sql-benchmark.

iii) Run FLINK_HOME/flink to start the program, execute all queries, wait for the process to end, and calculate the execution time.
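The measurement loop in steps ii) and iii) can be sketched as follows. This is a generic Python illustration under stated assumptions, not the flink-tpcds implementation: `execute` is a hypothetical callable that submits one SQL statement and blocks until the job finishes, and `fake_execute` only stands in for a real engine client.

```python
import time

def run_benchmark(queries, execute):
    """Run each query once and return {name: elapsed_seconds}.

    `queries` maps a query name to its SQL text; `execute` is any callable
    that submits the SQL and blocks until the job finishes.
    """
    timings = {}
    for name, sql in queries.items():
        start = time.perf_counter()
        execute(sql)
        timings[name] = time.perf_counter() - start
    return timings

def fake_execute(sql):
    """Stand-in for a real engine client; it only sleeps briefly."""
    time.sleep(0.01)

# Placeholder queries; a real run would load the TPC-DS query files.
queries = {"q1": "select 1", "q2": "select 2"}
timings = run_benchmark(queries, fake_execute)
for name, seconds in timings.items():
    print(f"{name}: {seconds:.3f}s")
print(f"total: {sum(timings.values()):.3f}s")
```

Keeping the engine behind a single callable means the same loop can time any engine that reads the Hive tables, which keeps the comparison consistent.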

4) Run Queries on Other Engines

i) Set up the environment according to the information on the official website of another engine.

ii) Because the datasets are standard Hive tables, other engines can read them directly.

iii) During runtime, check that the cluster reaches a bottleneck, such as a CPU or disk bottleneck. If the run mode and parameters are reasonable, the job should saturate one of these resources. If it does not, performance tuning is required.

Flink 1.9 had already done a lot of work while incorporating the Blink code, including deep CodeGeneration, binary storage and computing, improved CBO, and Batch Shuffler. This laid a solid foundation for future performance breakthroughs.

Flink 1.10 continues to improve Hive integration and meets the production-level Hive integration standard. The following improvements were also made in performance and out-of-the-box use:

Flink 1.10 has implemented many parameter optimizations to improve the overall out-of-the-box experience. However, due to some limitations of batch and stream integration, some parameters need to be configured separately. This section provides a general analysis of these parameters.

Table Layer Parameters

table.optimizer.join-reorder-enabled = true: This parameter needs to be enabled manually. Currently, major engines rarely enable JoinReorder by default. Enable this parameter when statistical information is relatively complete. Generally, reorder errors are rare.

table.optimizer.join.broadcast-threshold = 10 * 1024 * 1024: Change the value of this parameter from the default 1 MB to 10 MB. Currently, the Flink broadcasting mechanism needs to be improved, so the default value is 1 MB. However, when the concurrency is low, this parameter may be set to a maximum of 10 MB.

table.exec.resource.default-parallelism = 800: This is the Operator's concurrency setting. For an input of 10 TB, we recommend setting the concurrency to 800. We do not recommend setting an overly large value. The higher the concurrency, the greater the pressure on all parts of the system.

TaskManager Parameter Analysis

taskmanager.numberOfTaskSlots = 10: This parameter sets the number of slots in a single TaskManager (TM).

TaskManager memory parameters: The memory of TaskManager is divided into three types: managed memory, network memory, and other JVM-related memory. Refer to the documents on the official website to set these parameters properly.

taskmanager.memory.process.size = 15000m: This parameter sets the total memory of TaskManager. After subtracting the other memory types, generally leave 3 to 5 GB of memory for the heap.

taskmanager.memory.managed.size = 8000m: This parameter sets the managed memory, which is used for Operator computing. A reasonable configuration allocates 300 to 800 MB of memory for each slot.

taskmanager.network.memory.max = 2200mb: Task point-to-point communication requires four buffers. Based on the concurrency, about 2 GB is required for this parameter. Obtain this value through testing. If the buffer memory is insufficient, an exception occurs.

Network Parameter Analysis

taskmanager.network.blocking-shuffle.type = mmap: Shuffle read uses mmap to let the system manage the memory. This is a convenient approach.

taskmanager.network.blocking-shuffle.compression.enabled = true: This enables compression for Shuffle. This parameter is reused for batch and stream processing. We strongly recommend enabling compression for batch jobs. Otherwise, the disk becomes a bottleneck.

Scheduling Parameter Analysis

cluster.evenly-spread-out-slots = true: This parameter sets the system to evenly schedule tasks to each TaskManager, which helps achieve full resource utilization.

jobmanager.execution.failover-strategy = region: Global retry is enabled by default. Set this parameter to region to allow single-node failover.

restart-strategy = fixed-delay: The retry policy needs to be set manually. By default, no retry is performed.

Other timeout-related parameters guard against the network jitter caused by large amounts of data during scheduling and operation, so that it does not lead to job failures.
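The TaskManager memory values above can be checked against the stated guidelines with some simple arithmetic. The following Python sketch uses only the values listed in this section. Treating everything that is neither managed nor network memory as "heap plus JVM overhead" is a simplifying assumption, since Flink's actual memory model has more categories.

```python
# Values taken from the parameter list above (all in MB).
process_size_mb = 15000  # taskmanager.memory.process.size
managed_mb = 8000        # taskmanager.memory.managed.size
network_max_mb = 2200    # taskmanager.network.memory.max
slots_per_tm = 10        # taskmanager.numberOfTaskSlots

# Managed memory available to each slot.
managed_per_slot_mb = managed_mb / slots_per_tm

# Simplification: whatever is left after managed and network memory
# is treated as heap plus JVM overhead.
remainder_mb = process_size_mb - managed_mb - network_max_mb

# The broadcast threshold expressed in bytes (10 MB).
broadcast_threshold_bytes = 10 * 1024 * 1024

print(managed_per_slot_mb)
print(remainder_mb)
print(broadcast_threshold_bytes)
```

With these values, each slot gets 800 MB of managed memory, which is the upper end of the recommended 300 to 800 MB range. About 4800 MB remains for the heap and JVM overhead, which is consistent with the guideline of leaving 3 to 5 GB for the heap.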

Going forward, the Flink community will further perfect Flink functions while continuing to improve its performance:

[1] https://github.com/ververica/flink-sql-benchmark

[2] https://github.com/ververica/flink-sql-benchmark/blob/master/flink-tpcds/flink-conf.yaml

[3] http://jira.apache.org/jira/browse/FLINK-14802

[4] https://issues.apache.org/jira/browse/FLINK-11899

[5] https://cwiki.apache.org/confluence/display/FLINK/FLIP-53%3A+Fine+Grained+Operator+Resource+Management

[6] https://github.com/ververica/flink-sql-gateway

[7] https://github.com/ververica/flink-jdbc-driver

[8] https://cwiki.apache.org/confluence/display/FLINK/FLIP-92%3A+Add+N-Ary+Stream+Operator+in+Flink
