By Li Jinsong, an Apache Flink Committer and Alibaba Technical Expert

On July 6, the Apache Flink community announced the release of Flink 1.11, which, after months of planning and usability optimization starting in early March, marked a notable improvement in the Flink user experience.

As one of the core modules in Flink, the Structured Query Language (SQL) of Flink is essential to improving integrated processing of streaming and batch data. Flink SQL has been significantly enhanced in this release with over ten new major features, wider application possibilities, further streamlining, and simplified operations.

Some of Flink SQL's most significant new features are as follows:

Since stream computing emerged as a popular computational paradigm, more people are using this technology in their programming work. Some of them are senior big data engineers, others may include developers with less experience, such as Java beginners or data analysts who are not yet familiar with programming. In this case, the challenges for stream programming are no longer limited to high computational intensity or massive volumes of data. One top priority for improving the adoption rate of real-time data processing is to increase developers' productivity by shortening the learning curve of stream computing.

As one of the most commonly used languages for data analysis tasks, SQL offers a concise way for its users to express their business logic. Apache Flink is a unified computing engine for batch and stream data processing, it is designed to provide full SQL support. The implementation of Flink SQL conforms to ANSI SQL. SQL helps programmers manage their heavy workloads with only a few lines of code.

Some highlights during the development of Flink SQL are given below:

The SQL module has become increasingly important in Flink, and is supported by a growing user base. Meanwhile, SQL-based Python and machine learning APIs are going through rapid development. As one of the most commonly used APIs in Flink, Flink SQL will become more important for integrations within the Flink ecosystem.

After this release, Flink SQL supports the following scenarios:

In addition, Flink 1.11 improves the usability of Flink SQL, fixes bugs from previous versions, and improves the API experience.

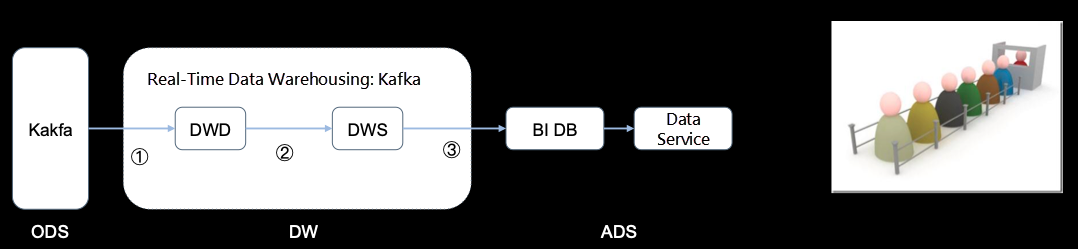

CDC is a set of software design patterns commonly used in databases. Debezium and Canal are popular CDC tools that are used to stream changes in specified formats to Kafka. In the previous practices, specific logic was required to parse and transform CDC data to make it "INSERT-only". This workaround method not only complicated the follow-up processing, but also added to the difficulty of overall operations.

To solve this problem, Flink SQL must offer native support for streaming data changes when handling changelogs.

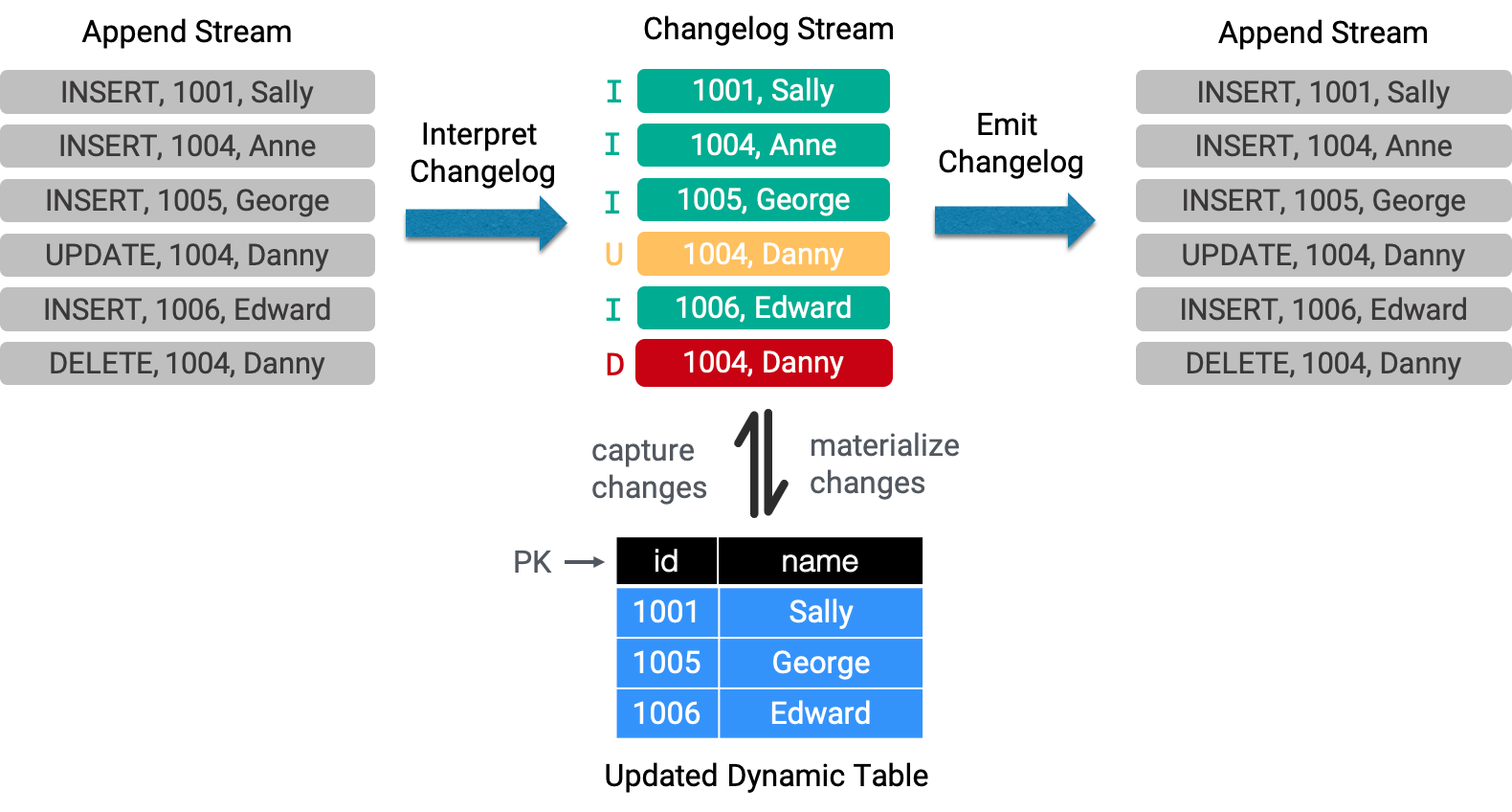

Stream computing handles the calculation of continuous data streams and therefore computes constantly changing results. The results of each calculation performed by an aggregate function are single local values which generate changelog streams. The preceding figure shows the process of writing aggregated data to Kafka, which was impossible to do when Kafka only supported "INSERT-only" data.

Prior to Flink 1.11, the input of CDC data was not supported due to the limitations of sources and sinks. Flink 1.11 introduces new table sources and sink interfaces to ingest and emit CDC data and provides support for the Debezium and Canal formats (FLIP-105). This means that dynamic table sources are no longer limited to "append-only" operations and can ingest these external changelogs (INSERT events), interpret them into change operations (INSERT, UPDATE, and DELETE events), and emit them downstream with the change type.

As shown in the preceding figure, the data synchronized from CDC to Kafka is theoretically an appended stream with the changelog identifier in the file format. The most widely used method is to regard this changelog identifier as a common field.

Starting from Flink 1.11, this identifier is declared as a format. This format indicates that data can be identified and interpreted by Flink into changelog streams in Flink and can be processed in accordance with SQL semantics. Similarly, Flink SQL can generate changelog stream output (no built-in support in Flink 1.11). This allows you to write all types of SQL queries to Kafka as long as the formats comply with changelogs.

You have to specify either "format=debezium-json" or "format=canal-json" in your CREATE TABLE statement to consume changelogs using SQL DDL.

CREATE TABLE my_table (

...

) WITH (

'connector'='...', -- e.g. 'kafka'

'format'='debezium-json'

);Flink 1.11 provides ready-to-use interfaces. However, you need to pay attention to the following considerations when you use Flink 1.11:

Apart from offering support for the aforementioned changelogs, the redefinition of sources and sinks in Flink 1.11 also provides solutions to many known issues.

The following code demonstrates an example of using the new sources and sinks (see the official documentation for details):

CREATE TABLE kafka_table (

...

) WITH (

'connector' = 'kafka-0.10',

'topic' = 'test-topic',

'scan.startup.mode' = 'earliest-offset',

'properties.bootstrap.servers' = 'localhost:9092',

'format' = 'json',

'json.fail-on-missing-field' = 'false'

);Flink 1.11 maintains forward compatibility by retaining the old source and sink connectors and allowing you to use the connector.type key to fall back to them.

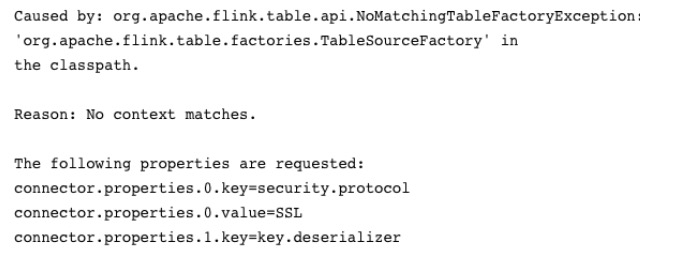

Before Flink 1.11, users often encountered the "NoMatchingTableFactoryException" error:

This message indicated that the system could not find a suitable table factory for a DDL. This occurred due to one of the following reasons:

However, judging by the error message alone, it is impossible to know which key or even which part of the code caused this problem.

public interface Factory {

String factoryIdentifier();

⋯

}This problem is solved in Flink 1.11 by introducing a TableFactory interface to allow users to search for a factory that corresponds to a specific factory identifier. In this case, if the system cannot find a factory, it means that the factory class is not in the classpath. On the other hand, if a result is returned for a factory search, you can conduct further checks for verification.

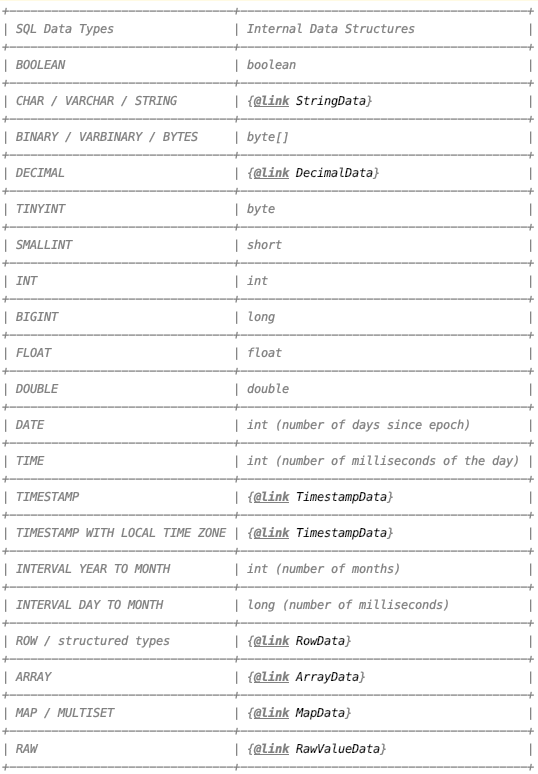

In earlier versions of Flink, you could define data structures using source and sink interfaces, and the framework would convert the user-defined data structures into internal data structures identifiable to Flink SQL:

public interface TableSource<T> {

TypeInformation<T> getReturnType();

...

}You can define a TableSource and implement getReturnType() to specify the conversion.

However, you may sometimes find that the type declared in the DDL statement does not match the one returned by the getReturnType() method, especially when two different mechanisms are involved in determining a type, such as the RTTI (runtime type information) feature and the DataType in SQL. In addition, low match precision issues can also cause type mismatches.

This problem is also fixed in Flink 1.11. Developers of connectors can no longer define data structures and can only use the internal RowData structure of Flink SQL. This solution guarantees correct matches between default types and DDL statements, and does not require verification by the framework.

The internal RowData structure has the following functions:

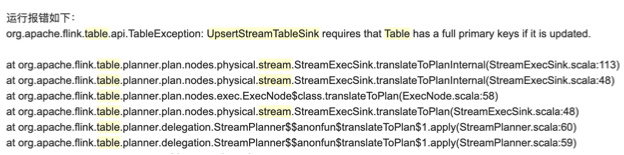

A typical stream computing scenario is the writing of aggregated data into upsert sinks, such as the Java Database Connectivity (JDBC) and HBase sinks. The following error messages are often received when the system processes complex SQL queries:

According to these messages, the UpsertStreamTableSink interface requires the Table to have full primary key information. Otherwise, the task throws an exception. This is caused by the UpsertStreamTableSink mechanism of Flink, which, as the name suggests, is a sink the requires the key information from the table to update.

One way to retrieve the primary key is to use the optimizer to infer from the query, as is shown in the following figure.

This method is very effective and also conforms to the semantics for simple queries. However, if you use it for complex queries, such as using aggregate, JOIN, and then aggregate, you can expect exceptions because optimizers often fail to get primary keys, or the SQL does not support retrieving primary keys with optimizers.

Therefore, in Flink 1.11, this mechanism is replaced by using the CREATE TABLE statement. For example, to create a jdbc_table, you must explicitly write the primary key in the definition. The phrase NOT ENFORCED indicates that primary key constraints are not enforced because connectors may lack the ability to carry out the enforcement. Once a primary key is specified, the framework receives the decision to update the JDBC sink with the primary key. In this way, the results are not affected by the complexity of queries and you can refer to the definitions to acquire a clear understanding of how updates will be performed.

CREATE TABLE jdbc_table (

id BIGINT,

...

PRIMARY KEY (id) NOT ENFORCED

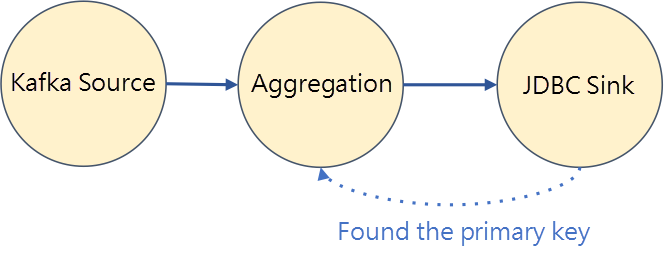

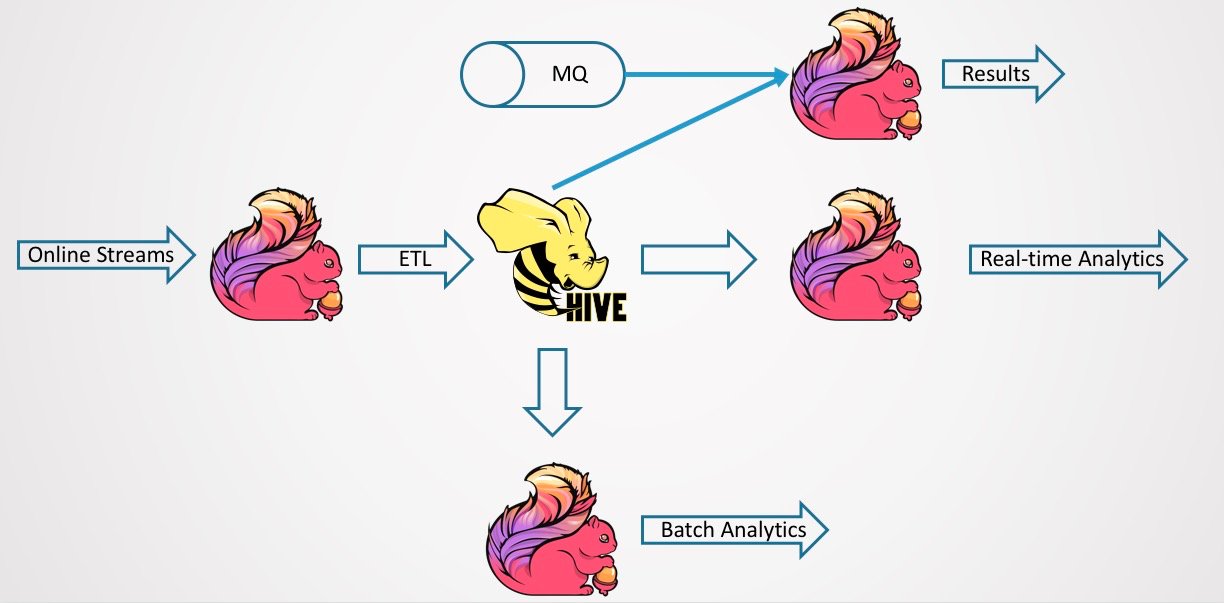

)First, let's look at the traditional Hive data warehouse infrastructure. The following figure shows a typical Hive data warehouse. Generally, the extracting, transforming, and loading (ETL) procedure uses scheduling tools to schedule jobs, such as on a daily or hourly basis. The scheduling process reflects the combination of latencies. In this example, Table1 is generated first during scheduling, then Table2, and then Table3, as computation latencies add up.

However, this is too slow and the latency is too high. Furthermore, the process of putting data into the Hive database and the previous ETL scheduling also leads to high latency in ad-hoc analysis. Consequently, the results of ad-hoc analysis are always stale data.

This is where real-time data warehouses come in. They allow data to be read and written to Kafka, and then exported to the business intelligence (BI) database. This enables real-time queries with real-time data processing. The real-time ETL in Kafka provides millisecond-level latency. Real-time data warehouses store data based on queues or real-time databases. This meets the requirements of real-time data processing with minimal latency. However, real-time data warehouses still have some drawbacks:

Can we build a Lambda architecture that features the advantages of both offline and real-time warehouses?

The answer is yes. However, this would involve incredibly high costs. First, the costs for maintenance, computing, and storage would add up to a big amount. Second, this would result in repeated development. To keep data consistency, when the developers of the offline data warehouse finish writing Hive and SQL statements, the corresponding SQL statements also need to be written for the real-time data warehouse. In addition, the necessary communication, migration, and data comparison between two development teams would also result in high costs. The migrations that would accompany increasing demands for real-time analysis would cause more and more repetition in development work. Such costs may be acceptable for a few important tasks, but for a large number of tasks, the maintenance costs would be way too much.

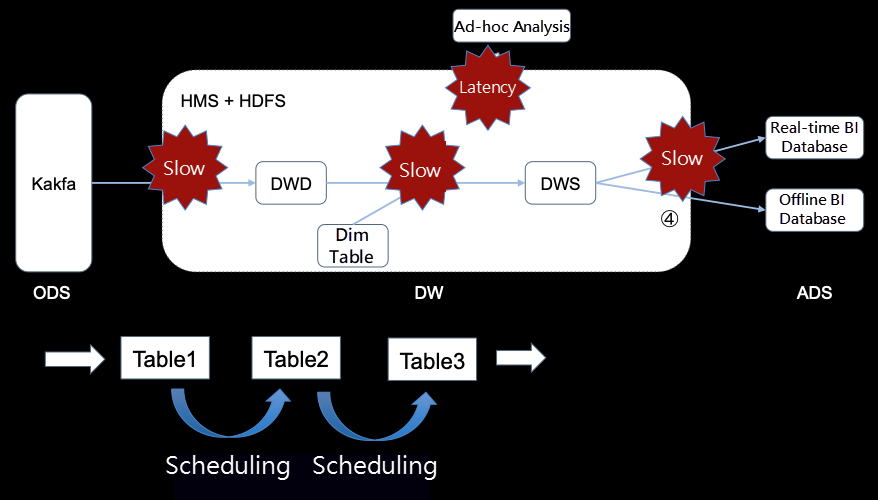

Is there a way to enjoy the benefits of both ad-hoc and real-time analyses? One solution is to join real-time streaming data with offline Hive data, which would represent a major improvement because the data would be quasi-real-time. This is implemented in Flink 1.11, as shown in the following figure. As we can see from the figure, data is exported in streams to the BI database, and ad-hoc analysis can also be performed. The most significant feature is the support for joining streaming data in Flink.

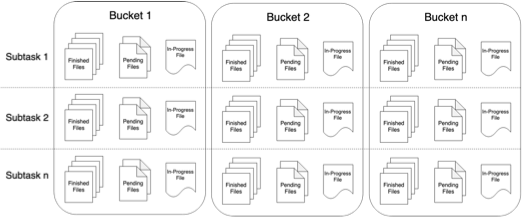

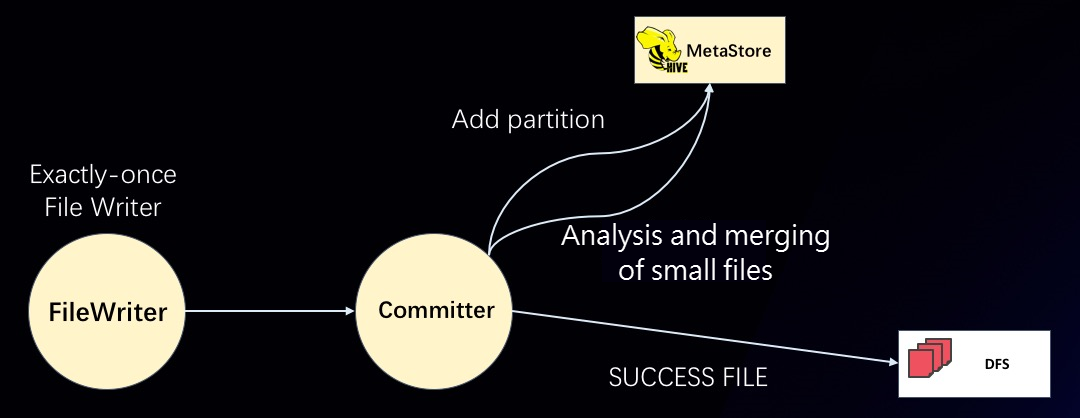

In earlier Flink versions, the streaming file sink wrote incoming data into buckets at the DataStream layer. The streaming file sink is a quasi-real-time sink that guarantees exactly-once semantics.

It is based on the two-phase commit protocol (2PC):

This file system writer is very useful. However, in the Hive data warehouse, visibility into data movement relies on the Hive metastore. But how is the Hive metastore notified during this process?

The partition commit mechanism is added at the StreamingFileSink layer.

In addition to files, partitions will also be committed. As shown in the preceding figure, the file writer corresponds to the previous StreamingFileSink and guarantees exactly-once semantics. The file writer is followed by a partition committer, which supports the following commit policies:

After the committer is hung up in the writer, the commit trigger determines when to commit.

A large number of ETL jobs exist in the Hive data warehouse. These jobs are often run periodically with a scheduling tool. This causes two major problems:

For these offline ETL jobs, the following features are added to Flink 1.11 to support real-time Hive streaming reading:

You can even use a 10-minute partition strategy and use Flink's Hive streaming source and Hive streaming sink to greatly improve the real-time performance of the Hive data warehouse to the quasi-real-time level, and receive responses in minutes. At the same, ad-hoc queries on all tables are also supported for better flexibility.

SELECT * FROM hive_table /*+ OPTIONS('streaming-source.enable'='true', 'streaming-source.consume-start-offset'='2020-05-20') */;In addition to scanning, Flink 1.11 also supports temporal joins, which is often referred to as streaming dim joins.

SELECT

o.amout, o.currency, r.rate, o.amount * r.rate

FROM

Orders AS o

JOIN LatestRates FOR SYSTEM_TIME AS OF o.proctime AS r

ON r.currency = o.currencyThe "cache all" mode is used and partition detection is not supported, which makes this feature better suited to small tables.

Flink SQL is ANSI-SQL compliant, while the Hive Query Language (HiveQL) uses a different HQL syntax. This makes Flink SQL and Hive different in both syntax and semantics.

Therefore, it is important to help Hive users learn the syntax and semantics of Flink SQL. Flink 1.11 currently supports the Hive dialect, which enables you to write in HQL in the Flink ecosystem. Currently, the Hive dialect only supports DDL statements. Support for queries will be gradually added in future versions.

The Hive integration is a major update with comprehensive functionality, but the runtime environment is complex. Is there a lightweight solution for file system reads and writes?

For a long time, the Flink Table has only supported the comma-separated values (CSV) format with the file system table, without support for partitioned files.

The revamped file system connector of Flink 1.11 now supports the following features and formats:

The file system connector requires only a few SQL statements and saves you the effort of building a Hive integration environment:

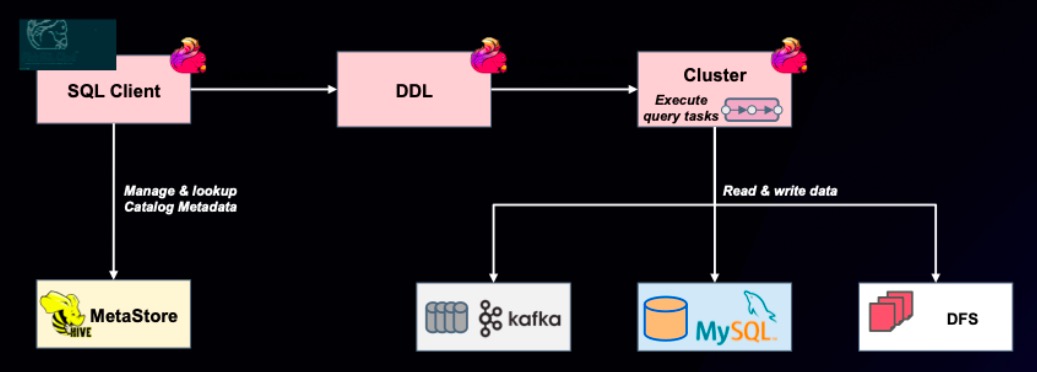

Since Flink 1.10, the Hive metastore has been used to store Table-related metadata in Flink streaming SQL. For example, you can use HiveCatalog to store Kafka tables. This allows you to use tables at startup.

By using DDL statements, you can submit SQL statements to a cluster to write data to Kafka, MySQL, or a distributed file system (DFS). Does this mean that with HiveCatalog, you only need to write DDL statements once and will then be able to use Kafka tables directly?

In some cases, the answer is yes. However, there are some limitations. For example, a Kafka table normally has some execution-related parameters. This is because the data retention period in Kafka is usually set to 15 days or shorter. Therefore, you need to specify the offset for each consumption because offsets are constantly changing. Consequently, the parameters for using the Kafka table are different each time you submit a job, and these parameters are stored in catalogs. As a result, you will have to create another table and rewrite the fields and parameters. Flink 1.11 provides the following solutions for this issue:

Table hints:

SELECT id, name FROM kafka_table1 /*+ OPTIONS('scan.startup.mode'='earliest-offset') */;LIKE syntax:

CREATE TABLE kafka_table2 WITH ( 'scan.startup.mode'='earliest-offset') LIKE kafka_table1;These two approaches are a perfect supplement to HiveCatalog. They allow you to avoid writing a large number of schemas every time you run a job.

Flink SQL 1.11 introduces three built-in connectors for easier debugging, testing, stress testing, and online observation.

These three connectors are designed to eliminate the impact of connectors on debugging or testing results. This helps solve the problem where connectors make it more difficult to identify problems and troubleshoot in stream computing.

TableEnvironment is very important as it functions as the entrance to SQL programming. The features of the preceding APIs are described as follows:

TableEnvironment caches execution plans. However, in previous versions of Flink, users found it difficult to work with multiple APIs. Therefore, the Flink community improved the API experience in Version 1.11 to better organize the APIs and prevent bugs.

Now, executeSql is a unified interface that supports queries, DDL statements, and DML statements.

In addition, you must consider the following rules if you want to use it with DataStream:

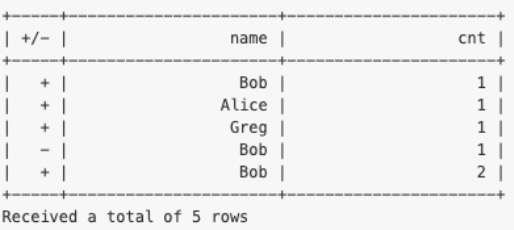

Apart from DDL support, the SQL Client in Flink 1.11 also supports tableau mode, which allows you to view query results printed on the console instead of having to switch pages.

You can visit the official website for the detailed documentation of the user interfaces introduced in this article.

Flink 1.11 has laid a solid foundation for CDC support by improving its internal mechanisms and APIs. In the future, more built-in CDC support will be introduced for binlogs and easily comprehensive Flink SQL syntax. The Flink community will continue its efforts to integrate stream and batch processing at the underlying layer to empower the SQL layer with more possibilities.

Flink 1.11: An Engine with Unified SQL Support for Batch and Streaming Data

Application of Apache Flink in Real-time Financial Data Lake

206 posts | 56 followers

FollowApache Flink Community China - July 28, 2020

Apache Flink Community China - July 28, 2020

Apache Flink Community China - February 19, 2021

Apache Flink Community China - May 13, 2021

Apache Flink Community China - May 18, 2022

Apache Flink Community China - July 28, 2020

206 posts | 56 followers

Follow Message Queue for Apache Kafka

Message Queue for Apache Kafka

A fully-managed Apache Kafka service to help you quickly build data pipelines for your big data analytics.

Learn More Realtime Compute for Apache Flink

Realtime Compute for Apache Flink

Realtime Compute for Apache Flink offers a highly integrated platform for real-time data processing, which optimizes the computing of Apache Flink.

Learn More Storage Capacity Unit

Storage Capacity Unit

Plan and optimize your storage budget with flexible storage services

Learn More Hybrid Cloud Storage

Hybrid Cloud Storage

A cost-effective, efficient and easy-to-manage hybrid cloud storage solution.

Learn MoreMore Posts by Apache Flink Community