Llama 2 is an open-source large language model (LLM) released by Meta. It is available in 7B, 13B, and 70B parameter sizes. Llama 2 was trained on 2 trillion tokens, which is 40% more training data than Llama 1. Its context length of 4,096 tokens is twice that of Llama 1. Llama2-chat is a version of Llama 2 that is fine-tuned for chat scenarios. It is iteratively optimized with supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF) to better align with human preferences and improve safety. The fine-tuning data includes publicly available instruction datasets and over one million human-annotated samples. Llama2-chat can serve as a chat assistant in a variety of natural language generation scenarios.

This article describes how to deploy a Llama 2 model, or a model fine-tuned from Llama 2, as a ChatLLM-WebUI application in Elastic Algorithm Service (EAS) of Platform for AI (PAI). The Llama-2-13b-chat model is used as an example.

The following section describes how to deploy the ChatLLM model as an AI-powered web application.

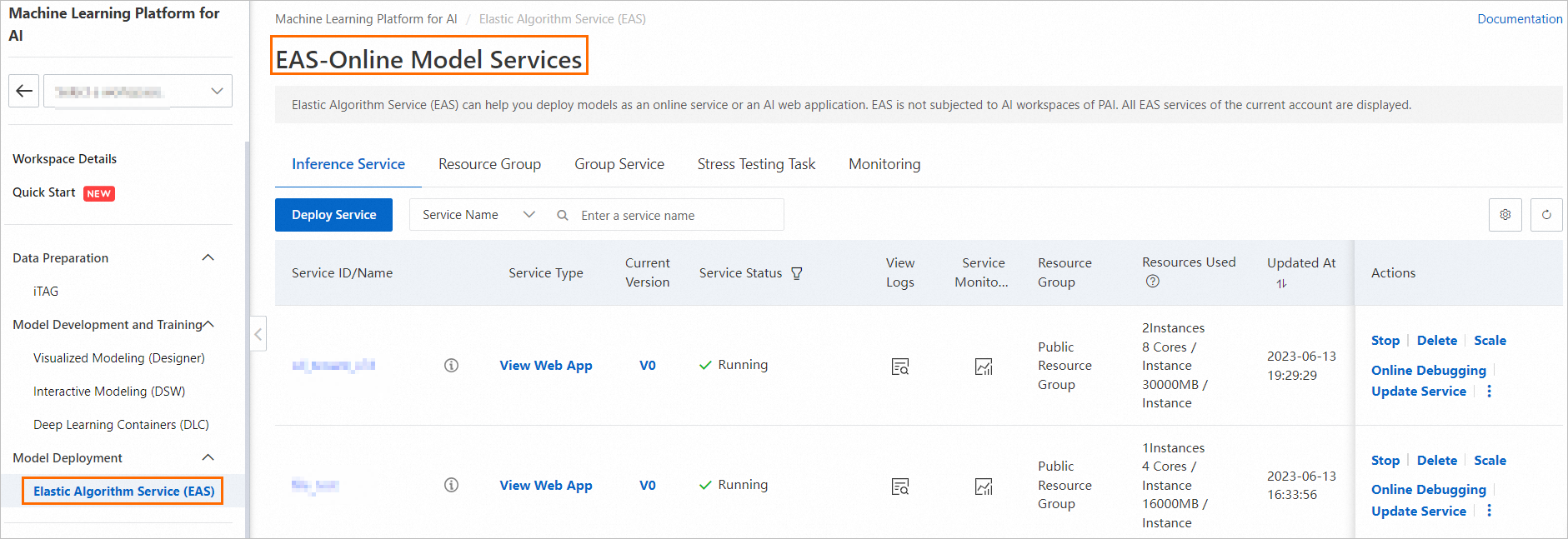

1. Go to the EAS page.

a) Log on to the PAI console.

b) In the left-side navigation pane, click Workspaces. On the Workspaces page, click the name of the workspace to which the model service that you want to manage belongs.

c) In the left-side navigation pane, choose Model Deployment > Elastic Algorithm Service (EAS) to go to the EAS-Online Model Services page.

2. On the EAS-Online Model Services page, click Deploy Service.

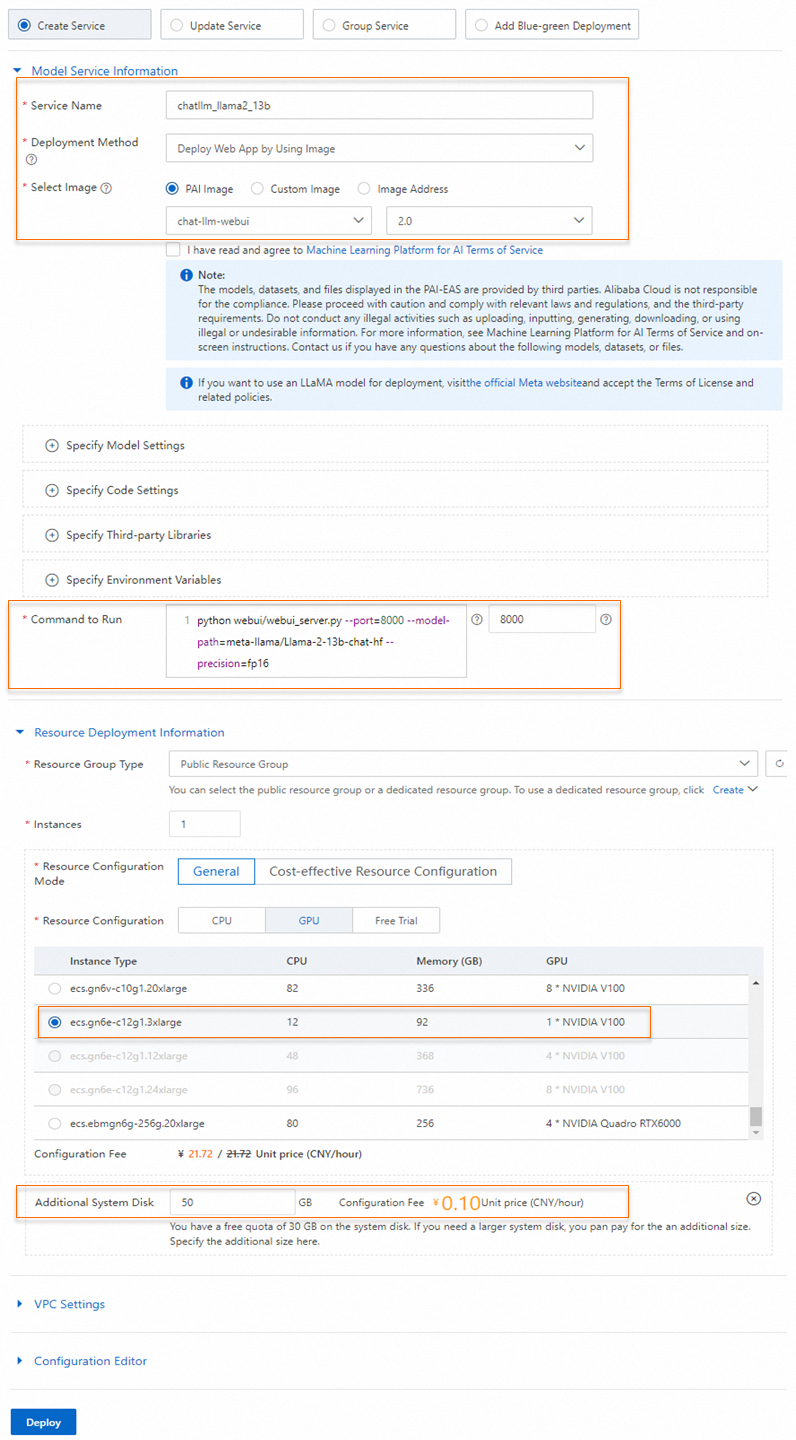

3. On the Deploy Service page, configure the following parameters.

| Parameter | Description |
| --- | --- |
| Service Name | The name of the service. In this example, chatllm_llama2_13b is used as the service name. |
| Deployment Mode | Select Deploy Web App by Using Image. |
| Select Image | Click PAI Image, select chat-llm-webui from the drop-down list, and then select 2.0 as the image version. Note: You can select the latest version of the image when you deploy the model service. |
| Command to Run | Select the command that matches your model. The 13b model is used in this example. • 13b model: python webui/webui_server.py --port=8000 --model-path=meta-llama/Llama-2-13b-chat-hf --precision=fp16 • 7b model: python webui/webui_server.py --port=8000 --model-path=meta-llama/Llama-2-7b-chat-hf • Set the port to 8000. |
| Resource Group Type | Select Public Resource Group. |
| Resource Configuration Mode | Select General. |
| Resource Configuration | You must select a GPU type. We recommend the ecs.gn6e-c12g1.3xlarge instance type. • The 13b model requires an instance type from the gn6e instance family or higher. • For the 7b model, we recommend a GU 30 instance. |
| Additional System Disk | Set the value to 50 GB. |

4. Click Deploy. The deployment takes several seconds to complete.

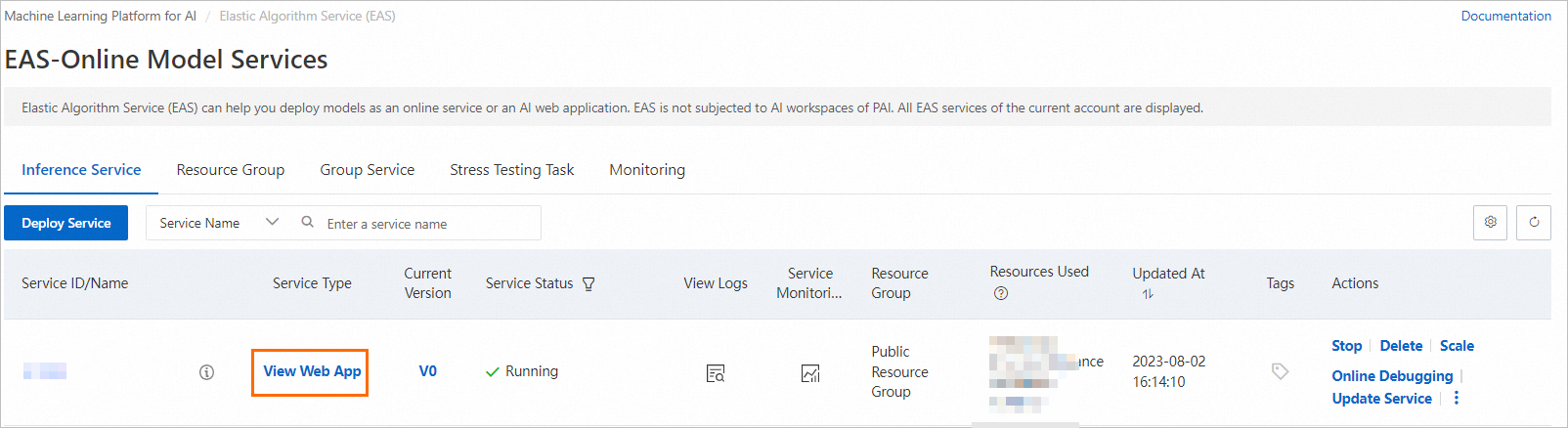

When Model Status changes to Running, the service is deployed. You can then use the web UI to perform model inference:

1. Find the service that you want to manage and click View Web App in the Service Type column.

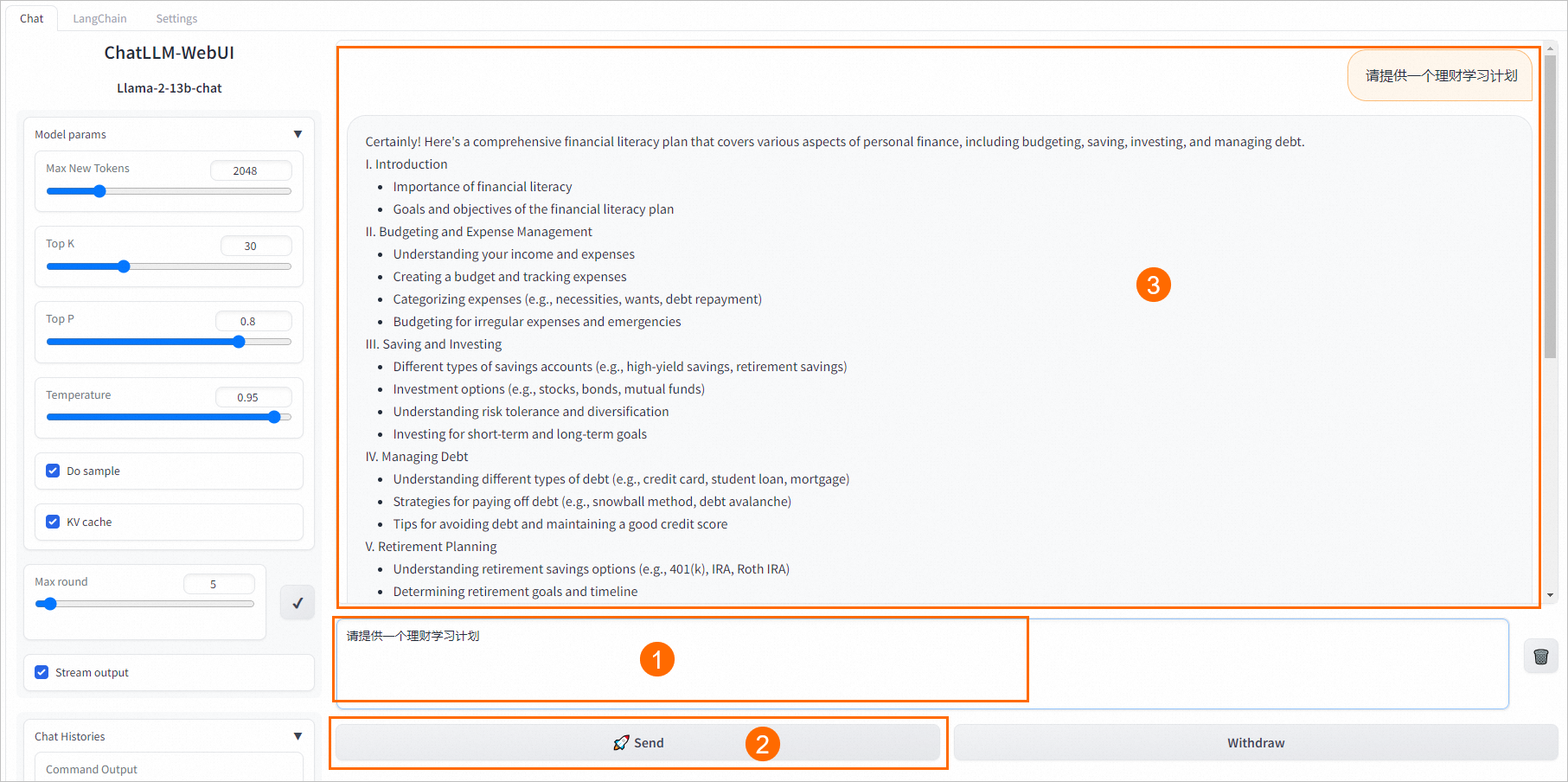

2. Perform model inference on the web UI page.

Enter a sentence in the input box below the dialog box, such as "Please provide a financial learning plan.", and click Send to start the dialogue.

• What is LangChain:

LangChain is an open-source framework that allows AI developers to combine LLMs such as GPT-4 with external data. This improves the quality and relevance of answers while consuming as few computing resources as possible.

• How does LangChain work:

LangChain splits a large data source, such as a 20-page PDF file, into chunks, converts the chunks into embeddings, and stores them in a vector store.

The uploaded data is stored locally as the knowledge base of the LLM. For each query, LangChain first searches the local knowledge base for content that is similar to the input question. It then passes the retrieved content together with the user input to the LLM to generate a customized answer.

• How to configure LangChain:

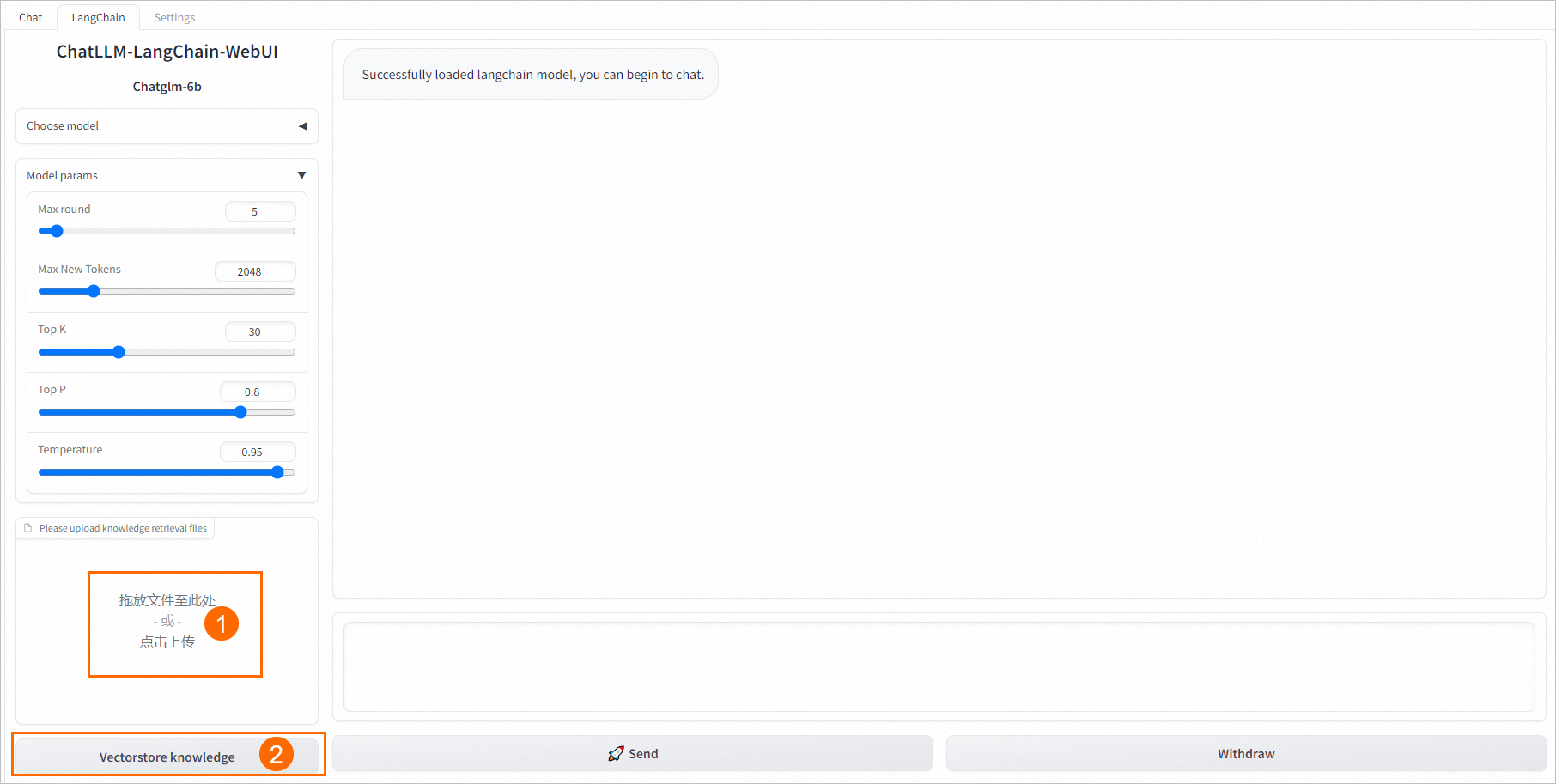

a) Click LangChain and go to the LangChain tab on the web UI page.

b) Upload custom data in the lower-left corner of the web UI page. You can upload files in TXT, MD, DOCX, and PDF formats.

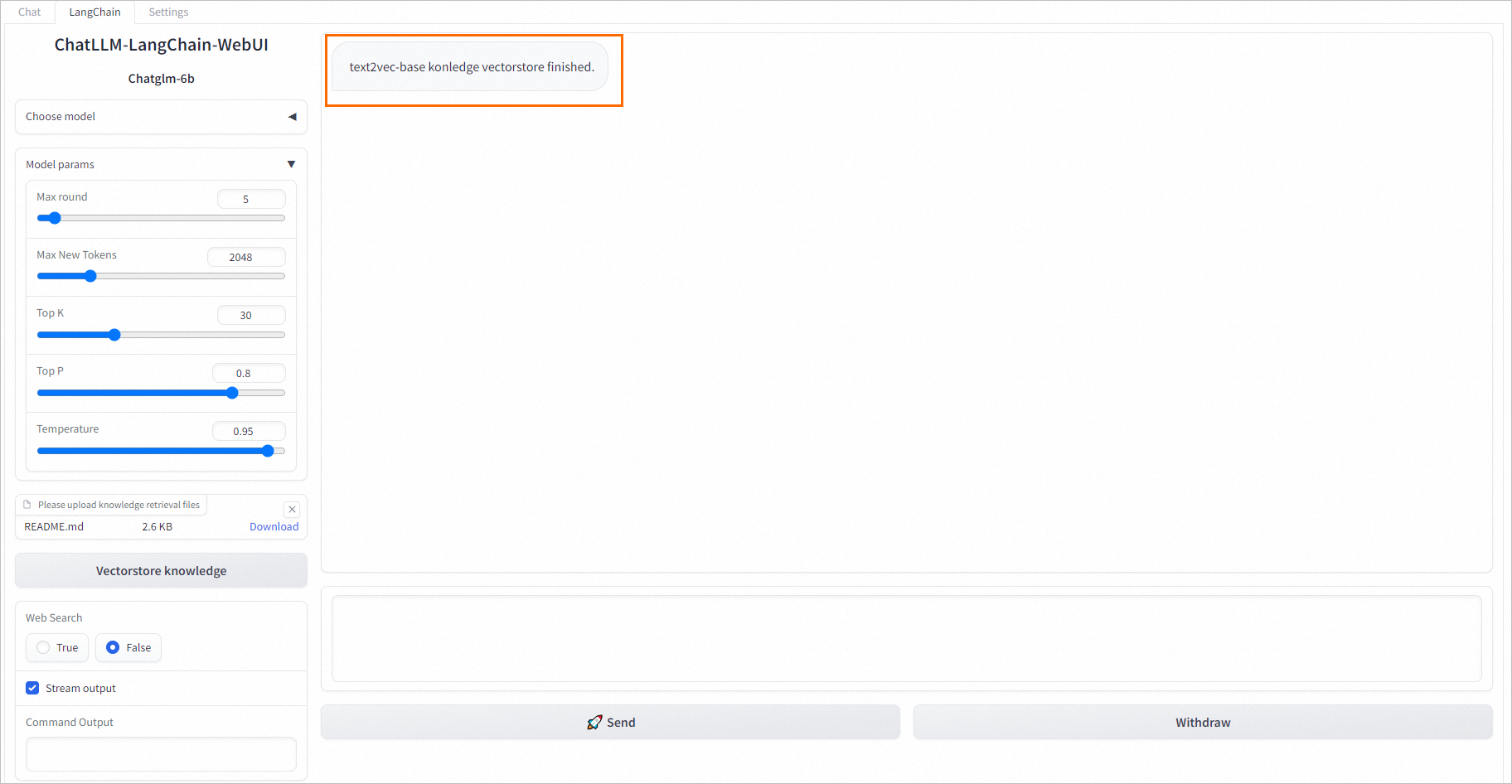

For example, drag and drop a README.md file to upload it, and then click Vectorstore knowledge in the lower-left corner. A result similar to the one shown in the figure indicates that the custom data is loaded.

c) In the input box at the bottom of the web UI page, enter a sentence to start a dialogue.

For example, enter "how to install deepspeed" in the input box and click Send. The following figure shows the result.
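To make the retrieval flow described under "How does LangChain work" concrete, the following minimal, self-contained sketch shows the split, embed, retrieve, and prompt steps. It does not use LangChain or the service's actual implementation. The helper names (`split_into_chunks`, `embed`, `retrieve`, `build_prompt`) are hypothetical, and a bag-of-words word count stands in for a real embedding model and vector store.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

# Hypothetical helpers for illustration; this is not LangChain's API.
# A word-count vector stands in for a real embedding model.
VectorStore = List[Tuple[str, Counter]]


def split_into_chunks(text: str, sentences_per_chunk: int = 3) -> List[str]:
    """Split a document into chunks of a few sentences each."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [" ".join(sentences[i:i + sentences_per_chunk])
            for i in range(0, len(sentences), sentences_per_chunk)]


def embed(text: str) -> Counter:
    """Toy embedding: counts of lowercase alphanumeric words."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_vector_store(document: str, sentences_per_chunk: int = 3) -> VectorStore:
    """Chunk the document and store each chunk together with its embedding."""
    return [(chunk, embed(chunk))
            for chunk in split_into_chunks(document, sentences_per_chunk)]


def retrieve(store: VectorStore, question: str, k: int = 2) -> List[str]:
    """Return the k chunks that are most similar to the question."""
    q = embed(question)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(store: VectorStore, question: str, k: int = 2) -> str:
    """Combine the retrieved context with the user question for the LLM."""
    context = "\n".join(retrieve(store, question, k))
    return f"Answer the question based on the context.\nContext:\n{context}\nQuestion: {question}"
```

A production setup would replace `embed` with an embedding model and the in-memory list with a vector database. The retrieve-then-prompt structure stays the same.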

EAS provides preset foundation models, such as Llama2, ChatGLM, and Tongyi Qianwen. To deploy a service with one of these models, set Command to Run to the corresponding command in the following table.

| Model type | Deployment method | Command to Run |
| --- | --- | --- |
| llama2-13b | API+WebUI | python webui/webui_server.py --port=8000 --model-path=meta-llama/Llama-2-13b-chat-hf --precision=fp16 |
| llama2-7b | API+WebUI | python webui/webui_server.py --port=8000 --model-path=meta-llama/Llama-2-7b-chat-hf |
| chatglm2-6b | API+WebUI | python webui/webui_server.py --port=8000 --model-path=THUDM/chatglm2-6b |
| Qwen-7b (Tongyi Qianwen-7b) | API+WebUI | python webui/webui_server.py --port=8000 --model-path=Qwen/Qwen-7B-Chat |
| chatglm-6b | API+WebUI | python webui/webui_server.py --port=8000 --model-path=THUDM/chatglm-6b |
| baichuan-13b | API+WebUI | python webui/webui_server.py --port=8000 --model-path=baichuan-inc/Baichuan-13B-Chat |
| falcon-7b | API+WebUI | python webui/webui_server.py --port=8000 --model-path=tiiuae/falcon-7b-instruct |
| baichuan2-7b | API+WebUI | python webui/webui_server.py --port=8000 --model-path=baichuan-inc/Baichuan2-7B-Chat |
| baichuan2-13b | API+WebUI | python webui/webui_server.py --port=8000 --model-path=baichuan-inc/Baichuan2-13B-Chat |
| Qwen-14b (Tongyi Qianwen-14b) | API+WebUI | python webui/webui_server.py --port=8000 --model-path=Qwen/Qwen-14B-Chat |
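If you switch between preset models often, a small helper can generate Command to Run from the preceding table. This is a sketch, not part of the chat-llm-webui image. `PRESET_MODELS` and `preset_command` are hypothetical names, and the mapping only reproduces the table rows. Only the llama2-13b row adds `--precision=fp16`.

```python
from typing import Dict

# Hugging Face model IDs copied from the preceding table (keys are lowercase).
PRESET_MODELS: Dict[str, str] = {
    "llama2-13b": "meta-llama/Llama-2-13b-chat-hf",
    "llama2-7b": "meta-llama/Llama-2-7b-chat-hf",
    "chatglm2-6b": "THUDM/chatglm2-6b",
    "qwen-7b": "Qwen/Qwen-7B-Chat",
    "chatglm-6b": "THUDM/chatglm-6b",
    "baichuan-13b": "baichuan-inc/Baichuan-13B-Chat",
    "falcon-7b": "tiiuae/falcon-7b-instruct",
    "baichuan2-7b": "baichuan-inc/Baichuan2-7B-Chat",
    "baichuan2-13b": "baichuan-inc/Baichuan2-13B-Chat",
    "qwen-14b": "Qwen/Qwen-14B-Chat",
}

# In the table, only the llama2-13b command sets an explicit precision.
FP16_MODELS = {"llama2-13b"}


def preset_command(model: str, port: int = 8000) -> str:
    """Return the Command to Run string for a preset model name from the table."""
    key = model.lower()
    if key not in PRESET_MODELS:
        raise ValueError(f"unknown preset model: {model!r}")
    cmd = f"python webui/webui_server.py --port={port} --model-path={PRESET_MODELS[key]}"
    if key in FP16_MODELS:
        cmd += " --precision=fp16"
    return cmd
```

For example, `preset_command("Qwen-7b")` returns the Qwen-7B-Chat command from the table. You paste that string into Command to Run.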

You can mount a custom model from Object Storage Service (OSS). To do so, perform the following steps:

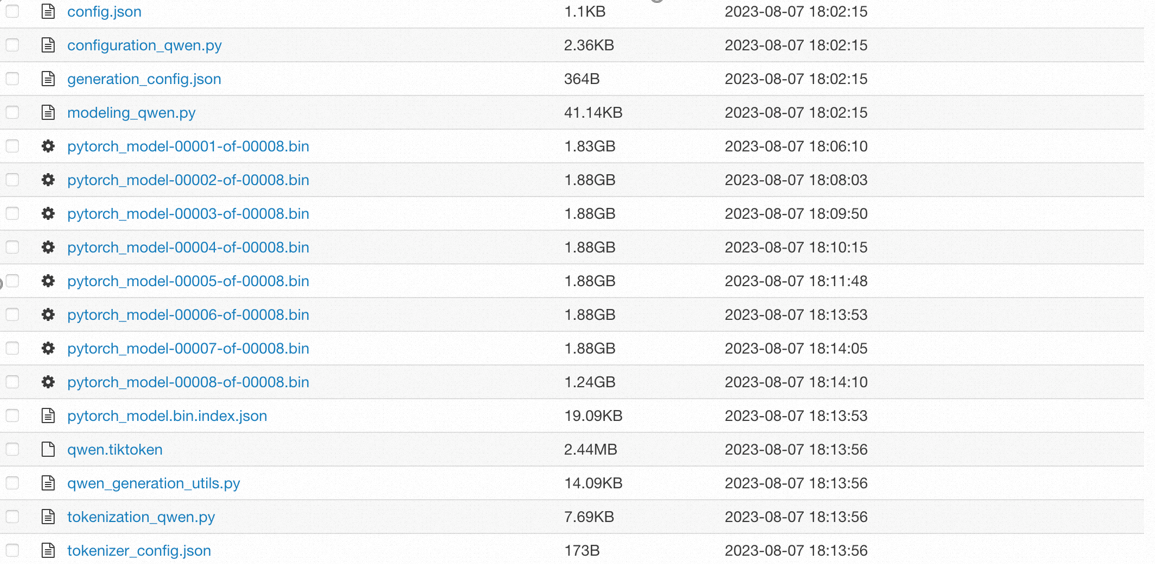

1. Upload the model and related configuration files to your OSS bucket directory. For more information about how to create a bucket and upload objects, see Create buckets and Upload objects.

The following figure shows a sample of the model files that you need to prepare:

The configuration files must include a config.json file in the Hugging Face model format. For a sample file, see config.json.

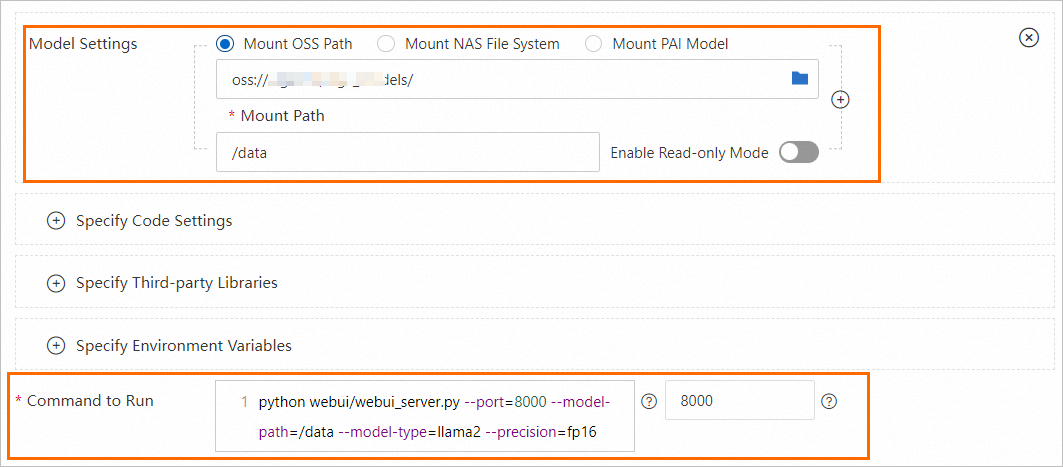

2. Click Update Service in the Actions column of the service.

3. In the Model Service Information section, specify the required parameters and click Deploy.

| Parameter | Description |
| --- | --- |
| Model Settings | Click Specify Model Settings to configure the model. • Select Mount OSS Path, and set the OSS bucket path to the directory that contains the custom model files. Example: oss://bucket-test/data-oss/. • Set Mount Path to /data. • Enable Read-only Mode: turn off the read-only mode. |
| Command to Run | Add the following parameters to Command to Run: • --model-path: set the value to the mount path, which is /data in this example. • --model-type: the model type. For the commands to run for different model types, see the following table. |

The following table lists the command to run for each model type.

| Model type | Deployment method | Command to Run |
| --- | --- | --- |
| llama2 | API+WebUI | python webui/webui_server.py --port=8000 --model-path=/data --model-type=llama2 --precision=fp16 |
| chatglm2 | API+WebUI | python webui/webui_server.py --port=8000 --model-path=/data --model-type=chatglm |
| Qwen (Tongyi Qianwen) | API+WebUI | python webui/webui_server.py --port=8000 --model-path=/data --model-type=qwen |
| chatglm | API+WebUI | python webui/webui_server.py --port=8000 --model-path=/data --model-type=chatglm |
| falcon-7b | API+WebUI | python webui/webui_server.py --port=8000 --model-path=/data --model-type=falcon |
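Before you mount a custom model, you can check locally that the model directory contains a parseable config.json, and then generate the matching command. The following is a sketch that assumes your local directory mirrors what you upload to OSS. `check_model_dir` and `custom_model_command` are hypothetical helpers. The model types and the `--precision=fp16` flag for llama2 are taken from the preceding table.

```python
import json
import os
from typing import Any, Dict

# Model types from the preceding table; only the llama2 command adds --precision=fp16.
CUSTOM_MODEL_TYPES = {"llama2", "chatglm", "qwen", "falcon"}


def check_model_dir(local_dir: str) -> Dict[str, Any]:
    """Fail early if the directory lacks a valid config.json; return the parsed config."""
    config_path = os.path.join(local_dir, "config.json")
    if not os.path.isfile(config_path):
        raise FileNotFoundError(f"config.json not found in {local_dir}")
    with open(config_path, encoding="utf-8") as f:
        return json.load(f)  # raises json.JSONDecodeError if the file is not valid JSON


def custom_model_command(model_type: str, mount_path: str = "/data", port: int = 8000) -> str:
    """Build Command to Run for a custom model that is mounted from OSS."""
    if model_type not in CUSTOM_MODEL_TYPES:
        raise ValueError(f"unsupported model type: {model_type!r}")
    cmd = (f"python webui/webui_server.py --port={port} "
           f"--model-path={mount_path} --model-type={model_type}")
    if model_type == "llama2":
        cmd += " --precision=fp16"
    return cmd
```

Running `check_model_dir` before the upload catches a missing or malformed config.json on your machine. Otherwise, you would only see the error in the service logs after deployment.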

1. Obtain the service access endpoint and token.

a) Go to the EAS-Online Model Services page. For more information, see step 1 of the deployment procedure in this article.

b) Click the name of the service to go to the Service Details tab.

c) In the Basic Information section, click Invocation Method. On the Public Endpoint tab, obtain the service token and endpoint.

2. Perform model inference by calling API operations.

o Non-streaming mode

The client sends standard HTTP requests. You can use cURL to send the following types of requests to the service.

• Send a STRING request

curl $host -H "Authorization: $authorization" --data-binary @chatllm_data.txt -v

Replace $authorization with the service token and $host with the service endpoint. The chatllm_data.txt file is a plain text file that contains the question.

• Send a structured request

curl $host -H "Authorization: $authorization" -H "Content-Type: application/json" --data-binary @chatllm_data.json -v -H "Connection: close"

You can use the chatllm_data.json file to configure inference parameters. The following example shows the format of the chatllm_data.json file:

{
    "max_new_tokens": 4096,
    "use_stream_chat": false,
    "prompt": "How to install it?",
    "system_prompt": "Act like you are a programmer with 5+ years of experience.",
    "history": [
        [
            "Can you tell me what's the bladellm?",
            "BladeLLM is a framework for LLM serving, integrated with acceleration techniques like quantization, AI compilation, etc., and supporting popular LLMs like OPT, Bloom, LLaMA, etc."
        ]
    ],
    "temperature": 0.8,
    "top_k": 10,
    "top_p": 0.8,
    "do_sample": true,
    "use_cache": true
}

The following table describes the parameters. Set them based on your business requirements.

| Parameter | Description | Default value |
| --- | --- | --- |
| max_new_tokens | The maximum number of tokens to generate. | 2048 |
| use_stream_chat | Specifies whether to return the output in streaming mode. | True |
| prompt | The user prompt. | "" |
| system_prompt | The system prompt. | "" |
| history | The conversation history, of the List[Tuple(str, str)] type. | [()] |
| temperature | The randomness of the model output. A larger value produces more random output, and a value of 0 produces a fixed output. The value is a float from 0 to 1. | 0.95 |
| top_k | The number of highest-probability candidate tokens that are considered at each sampling step. | 30 |
| top_p | The cumulative probability threshold for nucleus sampling: only the smallest set of candidate tokens whose probabilities add up to top_p is considered. The value is a float from 0 to 1. | 0.8 |
| do_sample | Specifies whether to sample the output. | True |
| use_cache | Specifies whether to enable the KV cache. | True |
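Hand-written request files are easy to break. For example, Python-style True is not valid JSON. The following sketch builds a request body from the defaults in the preceding table and writes it with the standard json module, which emits true and false. `build_request` and `write_request_file` are hypothetical helpers. The range check on temperature and top_p simply mirrors the table, and the service may validate differently. The history field is included only when you pass one, so the sketch makes no assumption about how the server treats an empty history.

```python
import json
from typing import Any, Dict, List, Optional, Tuple

# Defaults copied from the preceding parameter table.
DEFAULTS: Dict[str, Any] = {
    "max_new_tokens": 2048,
    "use_stream_chat": True,
    "system_prompt": "",
    "temperature": 0.95,
    "top_k": 30,
    "top_p": 0.8,
    "do_sample": True,
    "use_cache": True,
}


def build_request(prompt: str,
                  history: Optional[List[Tuple[str, str]]] = None,
                  **overrides: Any) -> Dict[str, Any]:
    """Return a request body with the table defaults plus any overrides."""
    unknown = set(overrides) - set(DEFAULTS)
    if unknown:
        raise ValueError(f"unknown parameters: {sorted(unknown)}")
    body: Dict[str, Any] = {**DEFAULTS, **overrides, "prompt": prompt}
    for key in ("temperature", "top_p"):
        if not 0 <= body[key] <= 1:
            raise ValueError(f"{key} must be between 0 and 1, got {body[key]}")
    if history:
        # JSON has no tuples, so each (question, answer) pair becomes a list.
        body["history"] = [list(turn) for turn in history]
    return body


def write_request_file(path: str, body: Dict[str, Any]) -> None:
    """Write the body as valid JSON for use with curl --data-binary @path."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(body, f, ensure_ascii=False, indent=2)
```

For example, `write_request_file("chatllm_data.json", build_request("How to install it?", use_stream_chat=False))` produces a file that you can send with the structured cURL command.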

You can also implement your own client based on the Python requests package. Example:

import argparse
import json
from typing import List, Tuple

import requests


def post_http_request(prompt: str,
                      system_prompt: str,
                      history: list,
                      host: str,
                      authorization: str,
                      max_new_tokens: int = 2048,
                      temperature: float = 0.95,
                      top_k: int = 1,
                      top_p: float = 0.8,
                      langchain: bool = False,
                      use_stream_chat: bool = False) -> requests.Response:
    headers = {
        "User-Agent": "Test Client",
        "Authorization": authorization,
    }
    if not history:
        # Sample history that is used when the caller passes none, so that the
        # default prompt ("How can I get there?") has some context.
        history = [
            (
                "San Francisco is a",
                "city located in the state of California in the United States. "
                "It is known for its iconic landmarks, such as the Golden Gate Bridge "
                "and Alcatraz Island, as well as its vibrant culture, diverse population, "
                "and tech industry. The city is also home to many famous companies and "
                "startups, including Google, Apple, and Twitter."
            )
        ]
    pload = {
        "prompt": prompt,
        "system_prompt": system_prompt,
        "top_k": top_k,
        "top_p": top_p,
        "temperature": temperature,
        "max_new_tokens": max_new_tokens,
        "use_stream_chat": use_stream_chat,
        "history": history,
    }
    if langchain:
        # Ask the service to answer based on the LangChain knowledge base.
        pload["langchain"] = langchain
    response = requests.post(host, headers=headers,
                             json=pload, stream=use_stream_chat)
    return response


def get_response(response: requests.Response) -> Tuple[str, List]:
    # The server returns a JSON response that includes the inference result
    # and the conversation history.
    response.raise_for_status()
    data = json.loads(response.content)
    output = data["response"]
    history = data["history"]
    return output, history


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--top-k", type=int, default=4)
    parser.add_argument("--top-p", type=float, default=0.8)
    parser.add_argument("--max-new-tokens", type=int, default=2048)
    parser.add_argument("--temperature", type=float, default=0.95)
    parser.add_argument("--prompt", type=str, default="How can I get there?")
    parser.add_argument("--langchain", action="store_true")
    args = parser.parse_args()

    prompt = args.prompt
    top_k = args.top_k
    top_p = args.top_p
    use_stream_chat = False
    temperature = args.temperature
    langchain = args.langchain
    max_new_tokens = args.max_new_tokens

    host = "EAS service public endpoint"
    authorization = "EAS service public token"

    print(f"Prompt: {prompt!r}\n", flush=True)
    # You can set the system prompt of the language model in the client request.
    system_prompt = "Act like you are a programmer with 5+ years of experience."
    # The conversation history is stored on the client and sent with each request
    # to support multi-round conversations. In most cases, it contains the previous
    # rounds of the conversation in the List[Tuple(str, str)] format.
    history = []
    response = post_http_request(
        prompt, system_prompt, history,
        host, authorization,
        max_new_tokens, temperature, top_k, top_p,
        langchain=langchain, use_stream_chat=use_stream_chat)
    output, history = get_response(response)
    print(f" --- output: {output} \n --- history: {history}", flush=True)

In the preceding code, set host to the service endpoint and authorization to the service token that you obtained in step 1.
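The comments in the preceding client note that the conversation history is kept on the client. For multi-round conversations, you can wrap that in a small session object, as sketched below. `ChatSession` and `make_http_sender` are hypothetical names. The sketch assumes the JSON response format shown above, with `response` and `history` fields, and that the returned history already includes the latest round. Trimming to `max_rounds` on the client is also an assumption. It mirrors the server's `--max_round` default of 5 and is not documented client behavior.

```python
from typing import Any, Callable, Dict, List, Tuple

Payload = Dict[str, Any]
History = List[Tuple[str, str]]


class ChatSession:
    """Keeps the conversation history on the client across rounds (hypothetical helper)."""

    def __init__(self, send: Callable[[Payload], Payload],
                 system_prompt: str = "", max_rounds: int = 5) -> None:
        self.send = send                # posts a payload and returns the parsed JSON reply
        self.system_prompt = system_prompt
        self.max_rounds = max_rounds    # assumption: keep at most this many past rounds
        self.history: History = []

    def ask(self, prompt: str, **params: Any) -> str:
        payload = {"prompt": prompt, "system_prompt": self.system_prompt,
                   "history": [list(turn) for turn in self.history],
                   "use_stream_chat": False, **params}
        reply = self.send(payload)
        # Reuse the history returned by the server, trimmed to the last max_rounds rounds.
        self.history = [tuple(turn) for turn in reply["history"]][-self.max_rounds:]
        return reply["response"]


def make_http_sender(host: str, authorization: str) -> Callable[[Payload], Payload]:
    """Build a sender that posts to the EAS endpoint with the requests package."""
    import requests  # imported lazily so that the session logic has no hard dependency

    def send(payload: Payload) -> Payload:
        response = requests.post(host, headers={"Authorization": authorization},
                                 json=payload, timeout=300)
        response.raise_for_status()
        return response.json()

    return send
```

With a live service, you would create `ChatSession(make_http_sender(host, authorization))` and call `ask` once per round. Injecting `send` keeps the history logic testable without a network connection.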

o Streaming mode

The streaming mode uses the HTTP SSE method. Other settings are the same as in non-streaming mode. Sample code:

import argparse
import json
from typing import Iterable, Tuple

import requests


def clear_line(n: int = 1) -> None:
    # Move the cursor up one line and clear it, n times (ANSI escape codes).
    LINE_UP = '\033[1A'
    LINE_CLEAR = '\x1b[2K'
    for _ in range(n):
        print(LINE_UP, end=LINE_CLEAR, flush=True)


def post_http_request(prompt: str,
                      system_prompt: str,
                      history: list,
                      host: str,
                      authorization: str,
                      max_new_tokens: int = 2048,
                      temperature: float = 0.95,
                      top_k: int = 1,
                      top_p: float = 0.8,
                      langchain: bool = False,
                      use_stream_chat: bool = False) -> requests.Response:
    headers = {
        "User-Agent": "Test Client",
        "Authorization": authorization,
    }
    if not history:
        # Sample history that is used when the caller passes none.
        history = [
            (
                "San Francisco is a",
                "city located in the state of California in the United States. "
                "It is known for its iconic landmarks, such as the Golden Gate Bridge "
                "and Alcatraz Island, as well as its vibrant culture, diverse population, "
                "and tech industry. The city is also home to many famous companies and "
                "startups, including Google, Apple, and Twitter."
            )
        ]
    pload = {
        "prompt": prompt,
        "system_prompt": system_prompt,
        "top_k": top_k,
        "top_p": top_p,
        "temperature": temperature,
        "max_new_tokens": max_new_tokens,
        "use_stream_chat": use_stream_chat,
        "history": history,
    }
    if langchain:
        pload["langchain"] = langchain
    # stream=True lets requests read the response body incrementally.
    response = requests.post(host, headers=headers,
                             json=pload, stream=use_stream_chat)
    return response


def get_streaming_response(response: requests.Response) -> Iterable[Tuple[str, list]]:
    # The client splits the stream on null bytes; each record is a JSON object.
    for chunk in response.iter_lines(chunk_size=8192,
                                      decode_unicode=False,
                                      delimiter=b"\0"):
        if chunk:
            data = json.loads(chunk.decode("utf-8"))
            output = data["response"]
            history = data["history"]
            yield output, history


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--top-k", type=int, default=4)
    parser.add_argument("--top-p", type=float, default=0.8)
    parser.add_argument("--max-new-tokens", type=int, default=2048)
    parser.add_argument("--temperature", type=float, default=0.95)
    parser.add_argument("--prompt", type=str, default="How can I get there?")
    parser.add_argument("--langchain", action="store_true")
    args = parser.parse_args()

    prompt = args.prompt
    top_k = args.top_k
    top_p = args.top_p
    use_stream_chat = True
    temperature = args.temperature
    langchain = args.langchain
    max_new_tokens = args.max_new_tokens

    host = "EAS service public endpoint"
    authorization = "EAS service public token"

    print(f"Prompt: {prompt!r}\n", flush=True)
    system_prompt = "Act like you are a programmer with 5+ years of experience."
    history = []
    response = post_http_request(
        prompt, system_prompt, history,
        host, authorization,
        max_new_tokens, temperature, top_k, top_p,
        langchain=langchain, use_stream_chat=use_stream_chat)

    # Print each streamed record as it arrives.
    for h, history in get_streaming_response(response):
        print(f" --- stream line: {h} \n --- history: {history}", flush=True)

In the preceding code, set host to the service endpoint and authorization to the service token.
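The streaming client relies on `iter_lines(delimiter=b"\0")` from requests to split the response into records. You can separate the parsing step from the network so that you can test it on captured bytes. The following sketch does that under the same assumption as the client above: records are null-byte-delimited JSON objects with `response` and `history` fields. `parse_stream` is a hypothetical helper. Buffering bytes before decoding also avoids splitting a multi-byte UTF-8 character across network chunks.

```python
import json
from typing import Any, Iterable, Iterator, Tuple


def parse_stream(chunks: Iterable[bytes], delimiter: bytes = b"\0") -> Iterator[Tuple[str, Any]]:
    """Yield (response, history) from null-delimited JSON records in arbitrary byte chunks."""
    buffer = b""
    for chunk in chunks:
        buffer += chunk
        # Everything before the last delimiter is complete; keep the tail for the next chunk.
        *complete, buffer = buffer.split(delimiter)
        for record in complete:
            if record:
                data = json.loads(record.decode("utf-8"))
                yield data["response"], data["history"]
    # Handle a final record that is not followed by a delimiter.
    if buffer.strip():
        data = json.loads(buffer.decode("utf-8"))
        yield data["response"], data["history"]
```

With a live response, you can pass `response.iter_content(chunk_size=8192)` to `parse_stream` instead of calling `iter_lines`.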

The WebSocket method can better manage the conversation history. You can use the WebSocket method to connect to the service and perform one or more rounds of conversation. Sample code:

import os
import time
import json
import struct
from multiprocessing import Process

import websocket  # provided by the websocket-client package

questions = 0


def on_message_1(ws, message):
    if message == "<EOS>":
        print('pid-{} timestamp-({}) receives end message: {}'.format(
            os.getpid(), time.time(), message), flush=True)
        # Send a close frame with status code 1000 (normal closure).
        ws.send(struct.pack('!H', 1000), websocket.ABNF.OPCODE_CLOSE)
    else:
        print('pid-{} timestamp-({}) --- message received: {}'.format(
            os.getpid(), time.time(), message), flush=True)


def on_message_2(ws, message):
    global questions
    print('pid-{} --- message received: {}'.format(os.getpid(), message))
    # Each answer ends with <EOS>; close the connection after all five questions are answered.
    if message == "<EOS>":
        questions = questions + 1
        if questions == 5:
            ws.send(struct.pack('!H', 1000), websocket.ABNF.OPCODE_CLOSE)


def on_message_3(ws, message):
    print('pid-{} --- message received: {}'.format(os.getpid(), message))
    # Non-streaming request: the full answer arrives in one message, so close right away.
    ws.send(struct.pack('!H', 1000), websocket.ABNF.OPCODE_CLOSE)


def on_error(ws, error):
    print('error happened: ', str(error))


def on_close(ws, close_status_code, close_msg):
    print("### closed ###", close_status_code, close_msg)


def on_pong(ws, pong):
    print('pong:', pong)


def on_open_1(ws):
    """Streaming chat validation test."""
    print('Opening Websocket connection to the server ... ')
    params_dict = {
        'prompt': "Show me a golang code example: ",
        'temperature': 0.9,
        'top_p': 0.1,
        'top_k': 30,
        'max_new_tokens': 2048,
        'do_sample': True,
    }
    raw_req = json.dumps(params_dict, ensure_ascii=False).encode('utf8')
    ws.send(raw_req)


def on_open_2(ws):
    """Multi-round conversation validation test: five prompts over one connection."""
    print('Opening Websocket connection to the server ... ')
    params_dict = {
        'max_new_tokens': 6144,
        'temperature': 0.9,
        'top_p': 0.1,
        'top_k': 30,
        'use_stream_chat': True,
        'system_prompt': "Act like you are a programmer with 5+ years of experience.",
    }
    for prompt in ["Hello!",
                   "Please write a sorting algorithm in Python.",
                   "Please convert to the Java implementation.",
                   "Please introduce yourself.",
                   "Please summarize the dialogue above."]:
        params_dict['prompt'] = prompt
        raw_req = json.dumps(params_dict, ensure_ascii=False).encode('utf8')
        ws.send(raw_req)


def on_open_3(ws):
    """LangChain validation test: answer from the uploaded knowledge base."""
    print('Opening Websocket connection to the server ... ')
    params_dict = {
        'prompt': "Can you tell me what's the MNN?",
        'temperature': 0.9,
        'top_p': 0.1,
        'top_k': 30,
        'max_new_tokens': 2048,
        'use_stream_chat': False,
        'langchain': True,
    }
    raw_req = json.dumps(params_dict, ensure_ascii=False).encode('utf8')
    ws.send(raw_req)


authorization = "EAS service public token"
host = "ws://" + "EAS service endpoint without the http:// prefix"


def single_call(on_open_func, on_message_func, on_close_func=on_close):
    ws = websocket.WebSocketApp(
        host,
        on_open=on_open_func,
        on_message=on_message_func,
        on_error=on_error,
        on_pong=on_pong,
        on_close=on_close_func,
        header=['Authorization: ' + authorization],
    )
    # Send a ping every 2 seconds to keep the long-lived connection open.
    ws.run_forever(ping_interval=2)


if __name__ == "__main__":
    # Run the three tests in parallel processes, five times.
    for _ in range(5):
        p1 = Process(target=single_call, args=(on_open_1, on_message_1))
        p2 = Process(target=single_call, args=(on_open_2, on_message_2))
        p3 = Process(target=single_call, args=(on_open_3, on_message_3))
        p1.start()
        p2.start()
        p3.start()
        p1.join()
        p2.join()
        p3.join()

In the preceding code:

o Set authorization to the service token.

o Set host to the service access endpoint. Replace the http in the endpoint with ws.

o Use the use_stream_chat parameter to specify whether the server returns the output in streaming mode. The default value is True, which means that the server returns streaming data.

o Refer to the implementation method of the on_open_2 function in the preceding sample code to implement multi-round conversation.
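To ask a single question over WebSocket without the callback-based WebSocketApp, the following sketch uses the synchronous `websocket.create_connection` function from the websocket-client package. `to_ws_url`, `collect_answer`, and `ask_once` are hypothetical helpers. Mapping `https://` to `wss://` is an assumption for TLS endpoints. The sketch also assumes that a streamed answer ends with the `<EOS>` message, as in the preceding sample.

```python
import json
from typing import Callable, List
from urllib.parse import urlsplit, urlunsplit


def to_ws_url(endpoint: str) -> str:
    """Replace http:// with ws:// (and, as an assumption, https:// with wss://)."""
    parts = urlsplit(endpoint)
    scheme = {"http": "ws", "https": "wss"}.get(parts.scheme)
    if scheme is None:
        raise ValueError(f"expected an http(s) endpoint, got {endpoint!r}")
    return urlunsplit((scheme, parts.netloc, parts.path, parts.query, parts.fragment))


def collect_answer(recv: Callable[[], str], eos: str = "<EOS>") -> List[str]:
    """Read streamed messages until the end-of-stream marker arrives."""
    messages = []
    while True:
        message = recv()
        if message == eos:
            return messages
        messages.append(message)


def ask_once(endpoint: str, token: str, prompt: str) -> List[str]:
    """Send one streaming request and return every message before <EOS>."""
    import websocket  # pip install websocket-client

    ws = websocket.create_connection(to_ws_url(endpoint),
                                     header=[f"Authorization: {token}"])
    try:
        ws.send(json.dumps({"prompt": prompt, "use_stream_chat": True}, ensure_ascii=False))
        return collect_answer(ws.recv)
    finally:
        ws.close()
```

Passing `ws.recv` into `collect_answer` keeps the end-of-stream logic independent of the socket, so you can test it with any function that returns messages.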

The following table describes the parameters that you can configure in Command to Run.

| Parameter | Description | Default value |
| --- | --- | --- |
| --model-path | The preset model name or the custom model path. • Example 1: Load a preset model. You can use a preset model in the meta-llama/Llama-2-* series, such as 7b-hf, 7b-chat-hf, 13b-hf, and 13b-chat-hf. Sample code: python webui/webui_server.py --port=8000 --model-path=meta-llama/Llama-2-7b-chat-hf • Example 2: Load an on-premises custom model. Sample code: python webui/webui_server.py --port=8000 --model-path=/llama2-7b-chat | meta-llama/Llama-2-7b-chat-hf |
| --cpu | Uses the CPU instead of the GPU for model inference. Sample code: python webui/webui_server.py --port=8000 --cpu | The GPU is used for model inference by default. |
| --precision | The precision of the Llama2 model. FP32 and FP16 are supported. Sample code: python webui/webui_server.py --port=8000 --precision=fp32 | The system automatically selects the precision of the 7b model based on the GPU memory size. |
| --port | The listening port of the web UI service. Sample code: python webui/webui_server.py --port=8000 | 8000 |
| --api-only | Starts only the API server, without the web UI. By default, the service starts both the web UI and the API server. Sample code: python webui/webui_server.py --api-only | False |
| --no-api | Starts only the web UI, without the API server. By default, the service starts both the web UI and the API server. Sample code: python webui/webui_server.py --no-api | False |
| --max-new-tokens | The maximum number of tokens to generate. Sample code: python api/api_server.py --port=8000 --max-new-tokens=1024 | 2048 |
| --temperature | The randomness of the model output. A larger value produces more random output, and a value of 0 produces a fixed output. The value is a float from 0 to 1. Sample code: python api/api_server.py --port=8000 --temperature=0.8 | 0.95 |
| --max_round | The number of dialogue rounds supported during inference. Sample code: python api/api_server.py --port=8000 --max_round=10 | 5 |
| --top_k | The number of highest-probability candidate tokens that are considered at each sampling step. The value is an integer. Sample code: python api/api_server.py --port=8000 --top_k=30 | None |
| --top_p | The cumulative probability threshold for nucleus sampling. The value is a float from 0 to 1. Sample code: python api/api_server.py --port=8000 --top_p=0.9 | None |
| --no-template | Models such as llama2 and falcon provide default prompt templates. Set this parameter to disable the default prompt template. Sample code: python api/api_server.py --port=8000 --no-template | A template is used by default. |
| --log-level | The log output level. Valid values: DEBUG, INFO, WARNING, and ERROR. Sample code: python api/api_server.py --port=8000 --log-level=DEBUG | INFO |
| --export-history-path | The path to which the EAS LLM service exports the conversation history. Specify this path when you start the service. In most cases, set it to the mount path of an OSS bucket. By default, the service exports the conversation records of each hour to a file. Sample code: python api/api_server.py --port=8000 --export-history-path=/your_mount_path | This feature is disabled by default. |
| --export-interval | The interval, in seconds, at which conversation records are exported. For example, if you set --export-interval to 3600, the conversation records of the previous hour are exported to a file. | 3600 |
