A workspace is created. For more information, see Create a workspace.

MaxCompute resources and common computing resources are associated with the workspace. For more information, see Manage workspaces.

Each row of the input training data must contain one question-answer pair, stored in two fields: one for the question and one for the answer.

If your data field names do not meet the requirements, you can use a custom SQL script to preprocess the data. Data obtained from the Internet may contain redundant or dirty records. In that case, you can use the LLM data preprocessing components for preliminary data cleaning and sorting. For more information, see LLM Data Processing.
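The kind of preprocessing described above can be sketched locally as follows. This is a minimal illustration only, not the SQL script or the LLM data preprocessing components themselves. The field names `prompt`, `response`, `question`, and `answer`, and the helper `clean_rows`, are hypothetical placeholders; substitute the field names that the training component actually requires.

```python
from typing import Dict, Iterable, List

# Hypothetical mapping from source field names to target field names.
# None of these names come from the product documentation.
FIELD_MAPPING = {"prompt": "question", "response": "answer"}


def clean_rows(rows: Iterable[Dict[str, str]],
               mapping: Dict[str, str]) -> List[Dict[str, str]]:
    """Rename fields, trim whitespace, and drop empty or duplicate pairs."""
    cleaned: List[Dict[str, str]] = []
    seen = set()
    for row in rows:
        # Rename fields that do not match the expected names.
        renamed = {mapping.get(key, key): value for key, value in row.items()}
        question = str(renamed.get("question") or "").strip()
        answer = str(renamed.get("answer") or "").strip()
        # Skip rows that do not hold a complete question-answer pair.
        if not question or not answer:
            continue
        # Skip exact duplicates of a pair that was already kept.
        pair = (question, answer)
        if pair in seen:
            continue
        seen.add(pair)
        cleaned.append({"question": question, "answer": answer})
    return cleaned


raw_rows = [
    {"prompt": "  What is DLC? ", "response": "Deep Learning Containers."},
    {"prompt": "What is DLC?", "response": "Deep Learning Containers."},
    {"prompt": "Unanswered question", "response": ""},
]
cleaned_rows = clean_rows(raw_rows, FIELD_MAPPING)
print(cleaned_rows)
```

In this sample, the second row is dropped as a duplicate once whitespace is trimmed, and the third row is dropped because its answer is empty, so a single pair remains.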

1. Go to the Machine Learning Designer page.

2. Create a pipeline.

| No. | Description |
| --- | --- |
| 1 | Simple data preprocessing is performed only for end-to-end demonstration. For more information about data preprocessing, see LLM Data Processing. |
| 2 | Model training and offline inference are performed. The following components are used: • LLM Model Training: This component encapsulates the LLMs provided in QuickStart. The underlying computing is performed based on Deep Learning Containers (DLC) tasks. You can click the LLM Model Training-1 node on the canvas, and specify model_name on the Fields Setting tab in the right pane. This component supports multiple mainstream LLMs. In this pipeline, the qwen-7b-chat model is selected for training. • LLM Model Inference: This component is used for offline inference. In this pipeline, the qwen-7b-chat model is selected for offline batch inference. |

3. Click the run button at the top of the canvas to run the pipeline.

4. After the pipeline runs successfully, view the inference result. To do so, right-click the LLM Model Inference-1 node on the canvas and choose View Data > output directory to save infer results (OSS).

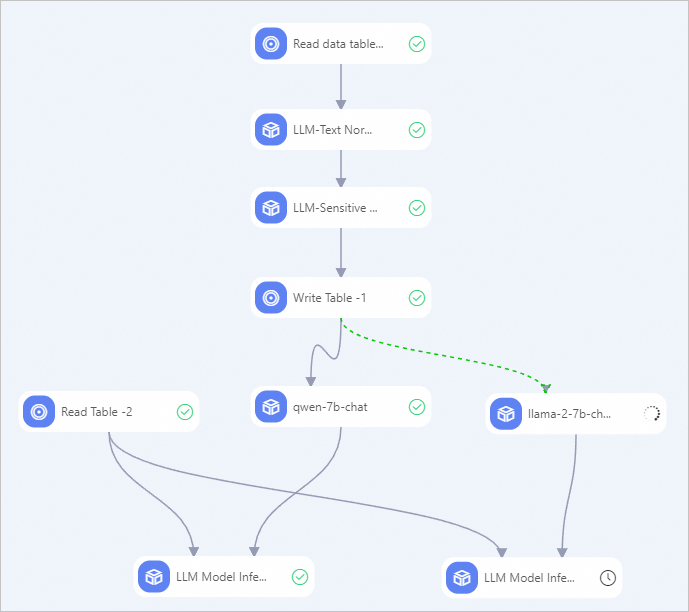

You can also use the same preprocessed data to train and run inference on multiple models in parallel. For example, you can create the following pipeline to fine-tune the qwen-7b-chat and llama2-7b-chat models at the same time, and then use the same batch of test data to compare the inference results.
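Once both models have produced answers for the same test questions, a side-by-side comparison could look like the sketch below. It assumes the inference results have already been downloaded and loaded into per-model dictionaries that map each question to the generated answer. That in-memory layout, the helper `compare_answers`, and the sample answers are hypothetical and do not reflect the actual output format of the LLM Model Inference component.

```python
from typing import Dict, List


def compare_answers(
    results_by_model: Dict[str, Dict[str, str]]
) -> List[Dict[str, str]]:
    """Build one row per question with each model's answer in its own column."""
    # Keep only questions answered by every model so the rows are comparable.
    shared = None
    for answers in results_by_model.values():
        shared = set(answers) if shared is None else shared & set(answers)

    rows: List[Dict[str, str]] = []
    for question in sorted(shared or []):
        row = {"question": question}
        for model_name, answers in results_by_model.items():
            row[model_name] = answers[question]
        rows.append(row)
    return rows


# Placeholder data for illustration; these are not real model outputs.
sample_results = {
    "qwen-7b-chat": {"Q1": "placeholder answer A", "Q2": "placeholder answer B"},
    "llama2-7b-chat": {"Q1": "placeholder answer C"},
}
comparison = compare_answers(sample_results)
for row in comparison:
    print(row)
```

Only `Q1` appears in the comparison because it is the only question that both models answered. Restricting the table to shared questions keeps every row directly comparable.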
