This article describes how to deploy a web application based on the open source model Tongyi Qianwen and perform model inference on the web page or by using API operations in Elastic Algorithm Service (EAS) of Platform for AI (PAI).

Tongyi Qianwen-7B (Qwen-7B) is a 7-billion-parameter model in the Tongyi Qianwen foundation model series developed by Alibaba Cloud. Qwen-7B is a large language model (LLM) that is based on the Transformer architecture and trained on ultra-large-scale pre-training data. The pre-training data covers a wide range of data types, including large amounts of text, professional books, and code. Qwen-7B-Chat, an LLM-based AI assistant, is built on Qwen-7B by using an alignment mechanism.

Before you begin, make sure that EAS is activated and that the default workspace and pay-as-you-go resources are created. For more information, see Activate PAI and create the default workspace.

Perform the following steps to deploy Qwen-7B as an AI-powered web application.

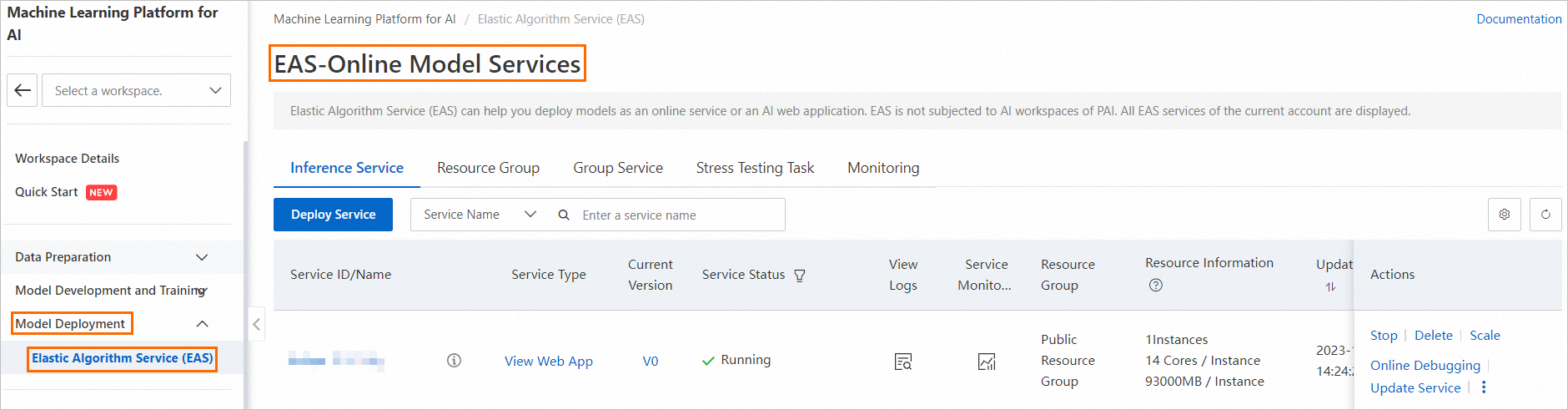

1. Go to the EAS-Online Model Services page.

a) Log on to the Platform for AI (PAI) console.

b) In the left-side navigation pane, click Workspaces. On the Workspaces page, click the name of the workspace to which the model service that you want to manage belongs.

c) In the left-side navigation pane, choose Model Deployment > Elastic Algorithm Service (EAS) to go to the EAS-Online Model Services page.

2. On the EAS-Online Model Services page, click Deploy Service.

3. On the Deploy Service page, configure the required parameters. The following table describes key parameters.

| Parameter | Description |
|---|---|
| Service Name | The name of the service. In this example, the service name qwen_demo is specified. |
| Deployment Mode | Select Deploy Web App by Using Image. |
| Select Image | Click PAI Image, select modelscope-inference from the image drop-down list, and then select 1.8.1 from the image version drop-down list. |
| Environment Variable | MODEL_ID: qwen/Qwen-7B-Chat; TASK: chat; REVISION: v1.0.5. For information about the related configurations, see the description of Qwen-7B-Chat on the ModelScope website. |
| Command to Run | Command: python app.py. Port number: 8000. |
| Resource Group Type | Select Public Resource Group. |
| Resource Configuration Mode | Select General. |
| Resource Configuration | Click GPU and select the ml.gu7i.c16m60.1-gu30 instance type. Note: In this example, the deployment requires a GPU instance that has at least 20 GB of memory. We recommend that you use ml.gu7i.c16m60.1-gu30 to reduce costs. |
| Additional System Disk | Additional System Disk: 100. Unit: GB. |

4. Click Deploy. Go to the EAS-Online Model Services page. When the Service Status changes to Running, the model is deployed.

Note

In most cases, deployment requires approximately 5 minutes to complete. The amount of time that is required to complete a deployment varies based on the resource availability, service load, and configuration.
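The key parameters from the table can be kept as a small record and checked before you fill in the console form. The following is a minimal sketch, not an EAS configuration format: the dictionary keys and the helper names `missing_env` and `env_lines` are hypothetical and chosen only for illustration, while the values come from the table.

```python
# Settings from the parameter table, collected in one place for review.
# The dictionary keys are illustrative names, not an EAS configuration schema.
SERVICE_SETTINGS = {
    "service_name": "qwen_demo",
    "image": "modelscope-inference",
    "image_version": "1.8.1",
    "command": "python app.py",
    "port": 8000,
    "instance_type": "ml.gu7i.c16m60.1-gu30",
    "additional_system_disk_gb": 100,
    "env": {
        "MODEL_ID": "qwen/Qwen-7B-Chat",
        "TASK": "chat",
        "REVISION": "v1.0.5",
    },
}

# Environment variables that the table lists for this example.
REQUIRED_ENV = ("MODEL_ID", "TASK", "REVISION")


def missing_env(settings):
    """Return the required environment variables that are absent or empty."""
    env = settings.get("env", {})
    return [name for name in REQUIRED_ENV if not env.get(name)]


def env_lines(settings):
    """Render the environment variables as KEY=value lines, sorted by key."""
    return [f"{key}={value}" for key, value in sorted(settings["env"].items())]
```

Calling `missing_env(SERVICE_SETTINGS)` before deployment gives a quick check that no environment variable was left out when the values are copied into the console.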

After you deploy the model, you can perform model inference by using different methods.

Note

In this example, the request body is in JSON format. The input field contains the input content, and the history field contains the conversation history. The history field is a list in which each entry contains two elements. The first element is the question, and the second element is the answer to the question.
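The request format described in this note can be sketched in Python as follows. This is a minimal illustration under the assumption that the body only ever carries the input and history fields named above; the function name `build_payload` is hypothetical and not part of any PAI SDK.

```python
import json


def build_payload(user_input, history=None):
    """Build the JSON request body: "input" plus an optional "history" list.

    Each history entry must hold exactly two items: the question and its answer.
    """
    payload = {"input": user_input}
    if history:
        pairs = []
        for turn in history:
            if len(turn) != 2:
                raise ValueError("each history entry must be [question, answer]")
            question, answer = turn
            pairs.append([question, answer])
        payload["history"] = pairs
    # ensure_ascii=False keeps non-ASCII text (for example, Chinese) readable.
    return json.dumps(payload, ensure_ascii=False)
```

For a first turn, call `build_payload("Where is the provincial capital of Zhejiang?")`. For later turns, pass the history list that the service returned.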

a) You can start the inference by entering a request without the history field. Example:

    {"input": "Where is the provincial capital of Zhejiang?"}

The service returns the result that contains the history field. Example:

    Status Code: 200
    Content-Type: application/json
    Date: Mon, 14 Aug 2023 12:01:45 GMT
    Server: envoy
    Vary: Accept-Encoding
    X-Envoy-Upstream-Service-Time: 511
    Body: {"response":"The provincial capital of Zhejiang is Hangzhou. ","history":[["Where is the provincial capital of Zhejiang?","The provincial capital of Zhejiang is Hangzhou."]]}

b) You can include the history field in the following request to perform a continuous conversation. Example:

    {"input": "What about Jiangsu?", "history": [["Where is the provincial capital of Zhejiang?","The provincial capital of Zhejiang is Hangzhou."]]}

The service returns the result. Example:

    Status Code: 200
    Content-Type: application/json
    Date: Mon, 14 Aug 2023 12:01:23 GMT
    Server: envoy
    Vary: Accept-Encoding
    X-Envoy-Upstream-Service-Time: 522
    Body: {"response":"The provincial capital of Jiangsu is Nanjing.","history":[["Where is the provincial capital of Zhejiang?","The provincial capital of Zhejiang is Hangzhou."],["What about Jiangsu?","The provincial capital of Jiangsu is Nanjing."]]}

You can call the service by calling API operations.
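Whichever way the service is called, the history returned by one turn is passed back unchanged in the next request. The following minimal sketch shows that chaining on a response body string; the function name `follow_up` is hypothetical, and the sketch assumes that the body always contains a history field, as it does in the examples.

```python
import json


def follow_up(response_body, next_input):
    """Build the next request from the previous response body.

    The "history" list returned by the service is reused as-is, so the
    model sees every earlier question and answer.
    """
    reply = json.loads(response_body)
    return {"input": next_input, "history": reply["history"]}


# Body of the first example response, copied as a string.
body = (
    '{"response":"The provincial capital of Zhejiang is Hangzhou. ",'
    '"history":[["Where is the provincial capital of Zhejiang?",'
    '"The provincial capital of Zhejiang is Hangzhou."]]}'
)
request = follow_up(body, "What about Jiangsu?")
print(json.dumps(request, ensure_ascii=False))
```

The printed JSON has the same shape as the second example request: the new input plus the single question-and-answer pair from the first turn.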

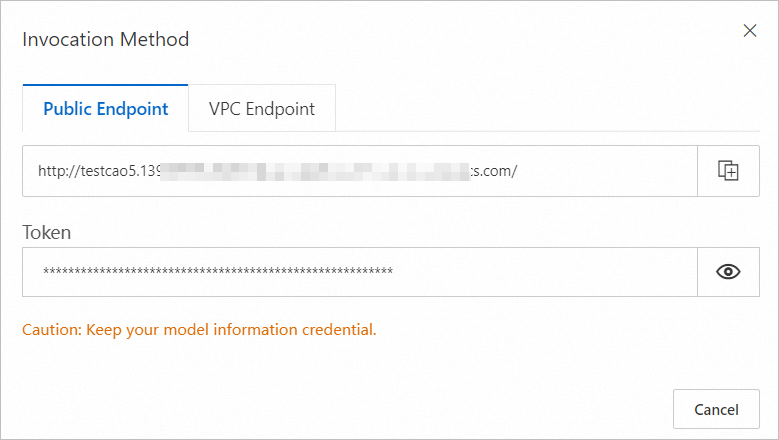

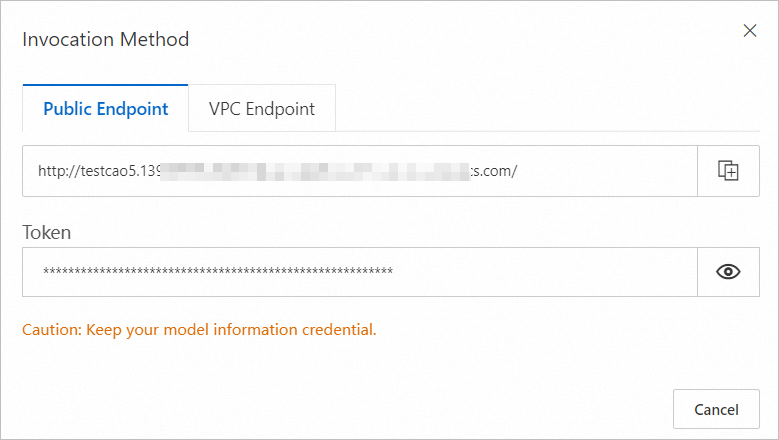

1. In the Basic Information section of the Service Details tab, click View Endpoint Information. In the Invocation Method dialog box, obtain the values of the Public Endpoint and Token parameters.

2. In the terminal, call the service by using the information that you obtained. Example:

    curl -d '{"input": "What about Jiangsu?", "history": [["Where is the provincial capital of Zhejiang?", "The provincial capital of Zhejiang is Hangzhou."]]}' -H "Authorization: xxx" http://xxxx.com

The service returns the result. Example:

    {"response":"The provincial capital of Jiangsu is Nanjing.","history":[["Where is the provincial capital of Zhejiang?","The provincial capital of Zhejiang is Hangzhou."],["What about Jiangsu?","The provincial capital of Jiangsu is Nanjing."]]}

Send an HTTP request to the service based on your business requirements. For more information about debugging, refer to the SDK that is provided by PAI in the Deploy inference services topic. Sample Python code:

    import json

    import requests

    # Replace the URL and token with the values from View Endpoint Information.
    url = 'http://qwen-demo.16623xxxxx.cn-hangzhou.pai-eas.aliyuncs.com/'
    headers = {"Authorization": "yourtoken"}

    # First turn: send a request without the history field.
    data = {"input": "Who are you?"}
    response = requests.post(url=url, headers=headers, data=json.dumps(data))
    print(response.text)

    # Second turn: pass back the history returned by the first response.
    data = {"input": "What can you do?", "history": json.loads(response.text)["history"]}
    response = requests.post(url=url, headers=headers, data=json.dumps(data))
    print(response.text)

You can also send streaming requests to the service.

1. In the Basic Information section of the Service Details tab, click View Endpoint Information. In the Invocation Method dialog box, obtain the values of the Public Endpoint and Token parameters.

2. In the terminal, run the following Python code to send a streaming request based on the information that you obtained.

    # encoding=utf-8
    import os
    import platform

    from websockets.sync.client import connect


    def clear_screen():
        # Clear the terminal with the command that matches the operating system.
        if platform.system() == "Windows":
            os.system("cls")
        else:
            os.system("clear")


    def print_history(history):
        print("Welcome to the Qwen-7B model. Enter a message to start the conversation. Enter stop to terminate the program.")
        for pair in history:
            print(f"\nUser: {pair[0]}\nQwen-7B: {pair[1]}")


    def main():
        history, response = [], ''
        clear_screen()
        print_history(history)
        with connect("<service_url>", additional_headers={"Authorization": "<token>"}) as websocket:
            while True:
                query = input("\nUser: ")
                if query.strip() == "stop":
                    break
                websocket.send(query)
                # Redraw the screen with each streamed message until <EOS> arrives.
                while True:
                    msg = websocket.recv()
                    if msg == '<EOS>':
                        break
                    clear_screen()
                    print_history(history)
                    print(f"\nUser: {query}")
                    print("\nQwen-7B: ", end="")
                    print(msg)
                    response = msg
                # Keep the last streamed message as the answer for this turn.
                history.append((query, response))


    if __name__ == "__main__":
        main()

In the code, replace <service_url> with the endpoint that you obtained in Step 1 and replace http in the endpoint with ws. Replace <token> with the service token that you obtained in Step 1.
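The two details of the streaming client that are easiest to get wrong are the endpoint rewrite and the end-of-stream handling. The following minimal sketch isolates both so that they can be tried without a live service. The function names `to_ws_url` and `read_reply` are hypothetical. The sketch assumes, as the sample client does, that each streamed message replaces the previous one and that the literal string `<EOS>` ends a reply.

```python
from urllib.parse import urlsplit, urlunsplit

EOS = "<EOS>"  # end-of-stream marker used by the sample client


def to_ws_url(endpoint):
    """Swap the http/https scheme for ws/wss and leave the rest of the URL alone."""
    parts = urlsplit(endpoint)
    scheme = {"http": "ws", "https": "wss"}.get(parts.scheme, parts.scheme)
    return urlunsplit((scheme, parts.netloc, parts.path, parts.query, parts.fragment))


def read_reply(recv, eos=EOS):
    """Call recv() until the EOS marker arrives and return the last message seen.

    recv is any zero-argument callable, for example websocket.recv.
    """
    reply = ""
    while True:
        msg = recv()
        if msg == eos:
            return reply
        reply = msg


# Offline check with a stand-in for websocket.recv.
messages = iter(["The provincial", "The provincial capital is Hangzhou.", EOS])
print(to_ws_url("http://example.com/"))
print(read_reply(lambda: next(messages)))
```

In the sample client, `read_reply(websocket.recv)` could replace the inner loop when the intermediate screen redraws are not needed.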
