MaxCompute, previously known as Open Data Processing Service (ODPS), is a general-purpose, fully managed, multi-tenant data processing platform for large-scale data warehousing at exabyte scale. It supports various data import solutions and distributed computing models, which helps users query massive datasets efficiently, reduce production costs, and keep data secure. Apart from serving as a data lake and processing exabyte-scale data, MaxCompute supports multiple computational models and protects data through multi-level sandboxing and monitoring. It is used for the three main functions shown below.

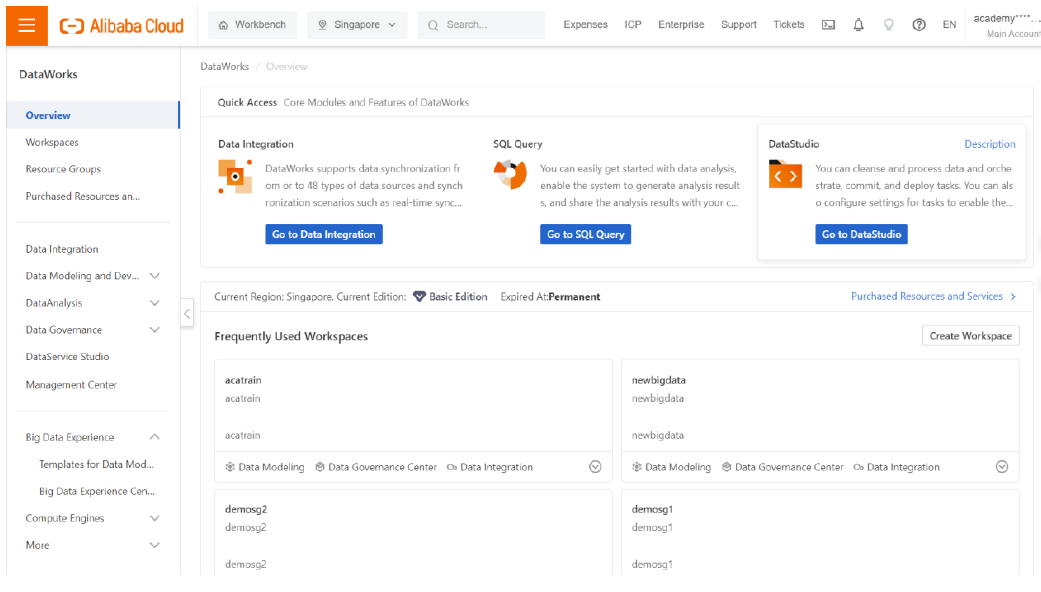

Data integration uses Data Studio in the DataWorks environment. Data processing uses Hive SQL to parse the massive amount of data available, and Quick BI visualizes the data as a report or dashboard. To perform these operations, we first need to create a workspace in DataWorks. This blog explains the steps to follow before creating nodes and performing tasks such as data integration, data processing, and data visualization. To start, open the Alibaba Cloud Console and open DataWorks.

Click the “Create Workspace” button.

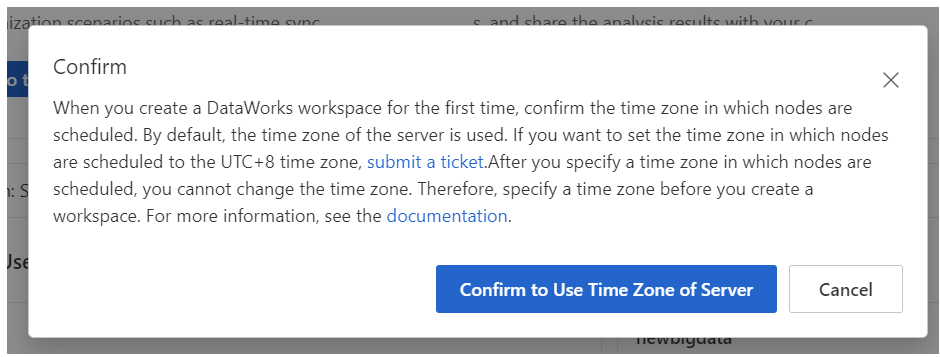

Now click on “Confirm to Use Time Zone of Server”.

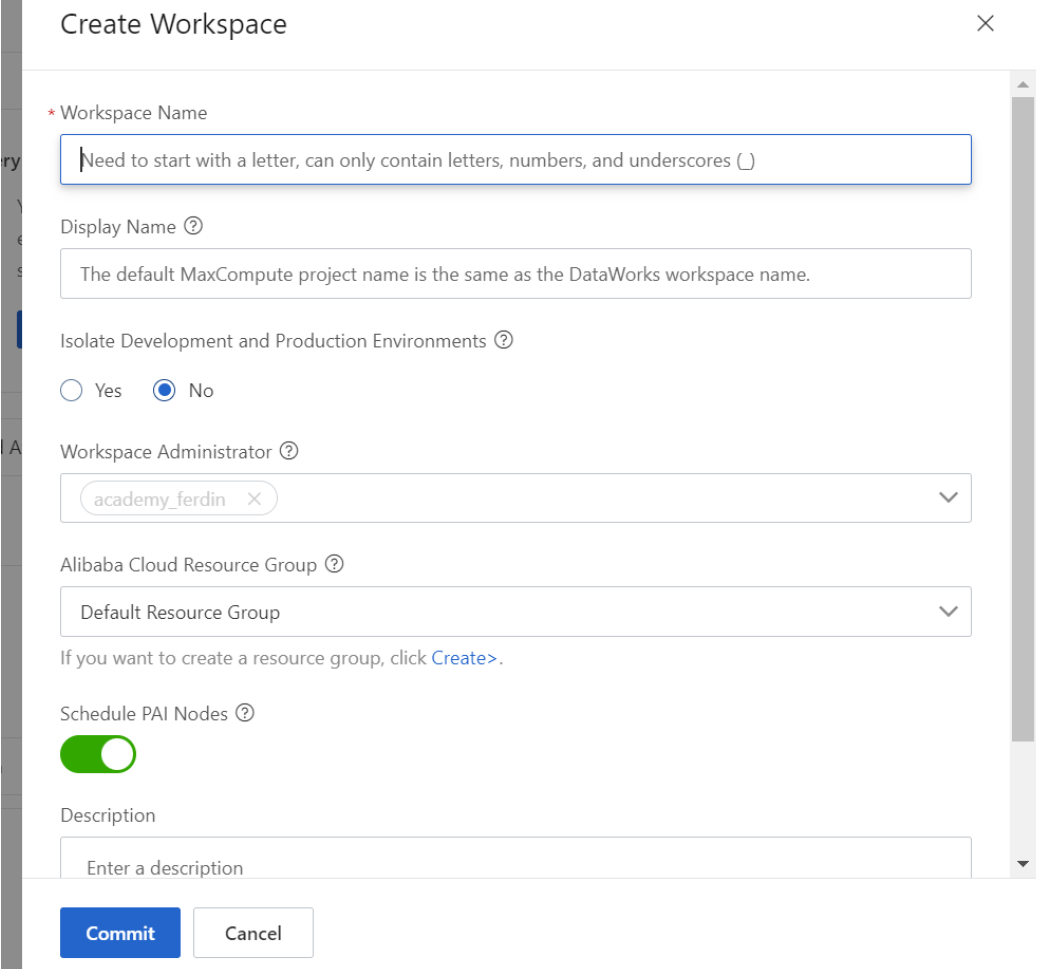

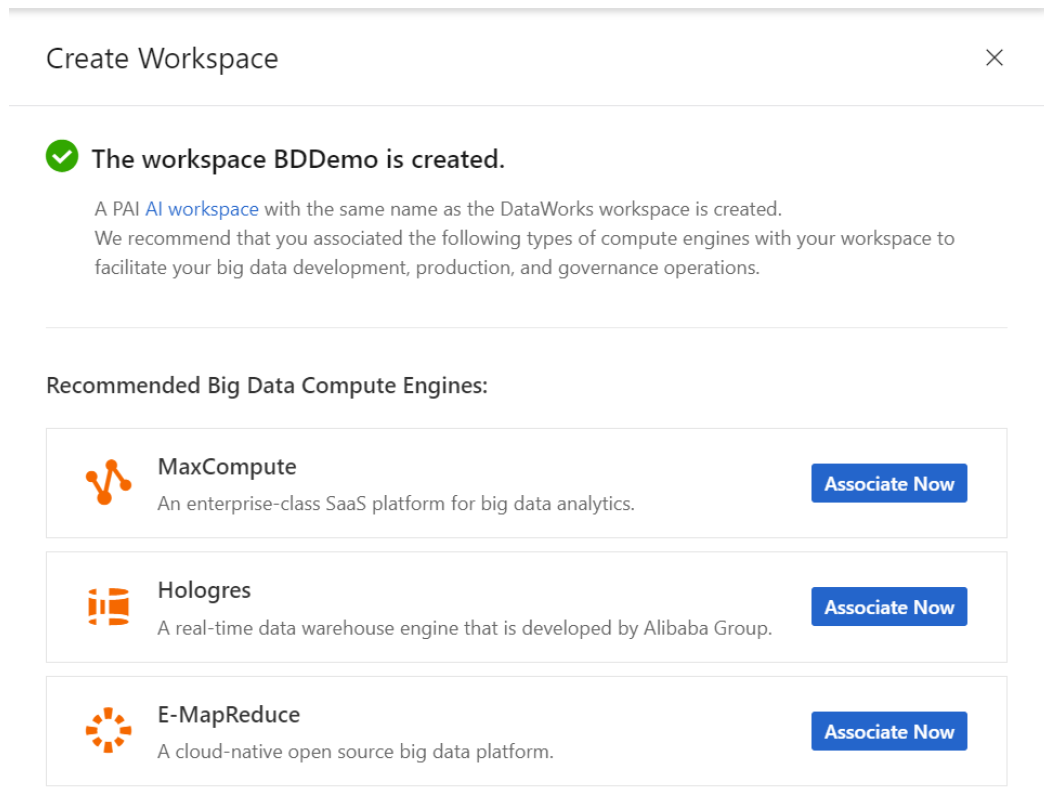

Enter the required details. For this example, we name the workspace “BDDemo” and then click “Commit”. After the workspace is committed, a PAI instance is associated by default, and we can then associate a data warehousing service such as MaxCompute, Hologres, or E-MapReduce. For now, we associate MaxCompute. Many other options are also available; to use them, go to Management Center.

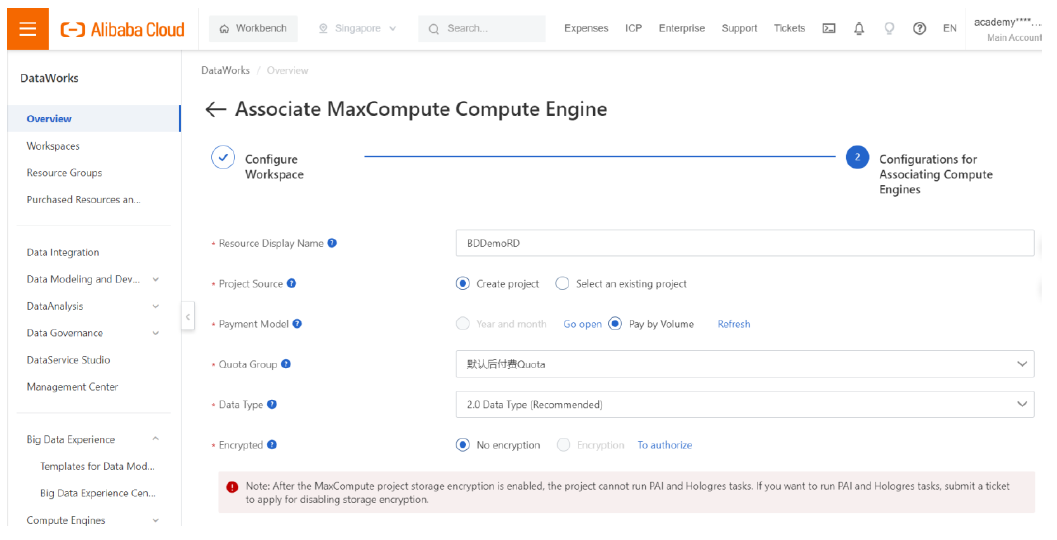

Click “Associate Now” next to MaxCompute. For this example, we enter “BDDemoRD” as the resource display name. Select Pay by Volume and choose an available quota from the drop-down list.

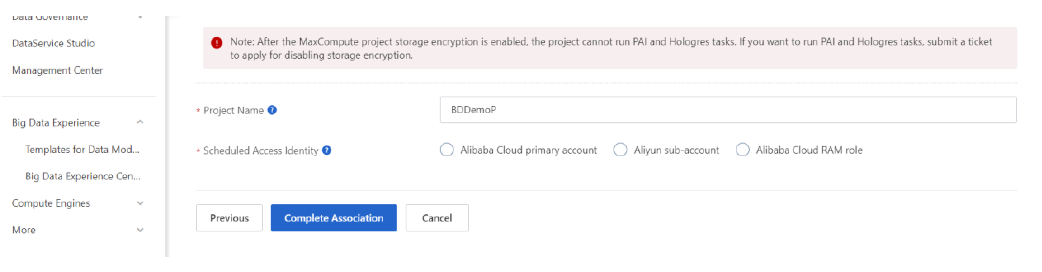

Then enter the project name, here “BDDemoP”, and grant access to the Alibaba Cloud primary account by selecting it. “BDDemoP” is the MaxCompute project name. Then click “Complete Association”.

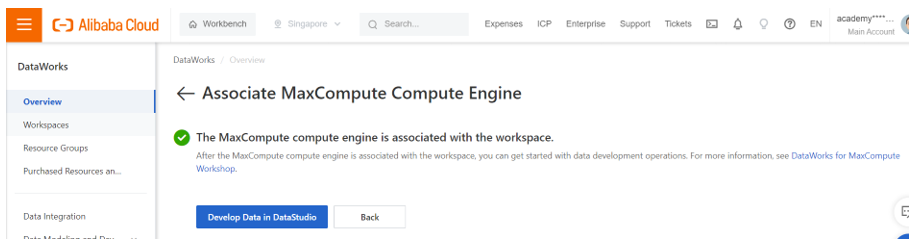

Now the DataWorks workspace is associated with MaxCompute’s compute engine.

Click on “Back”.

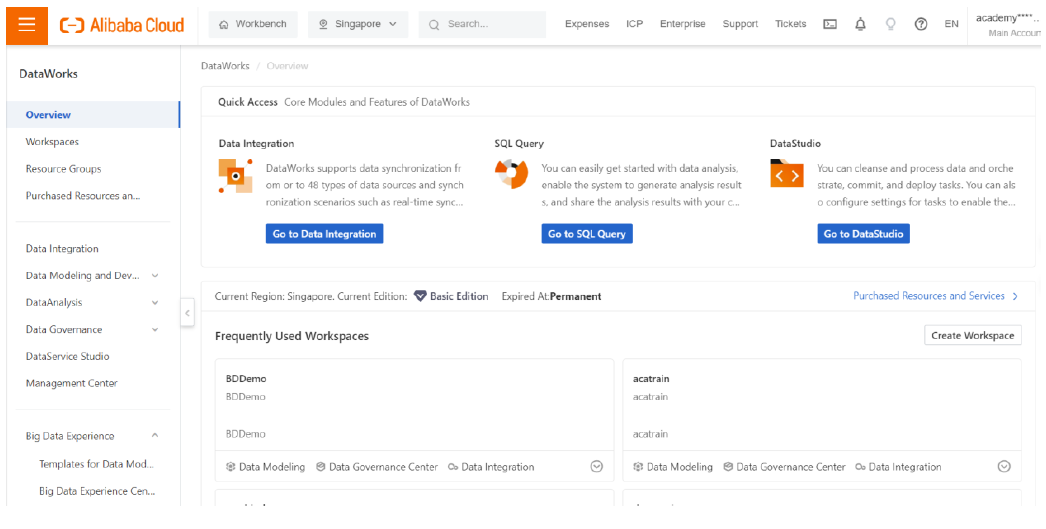

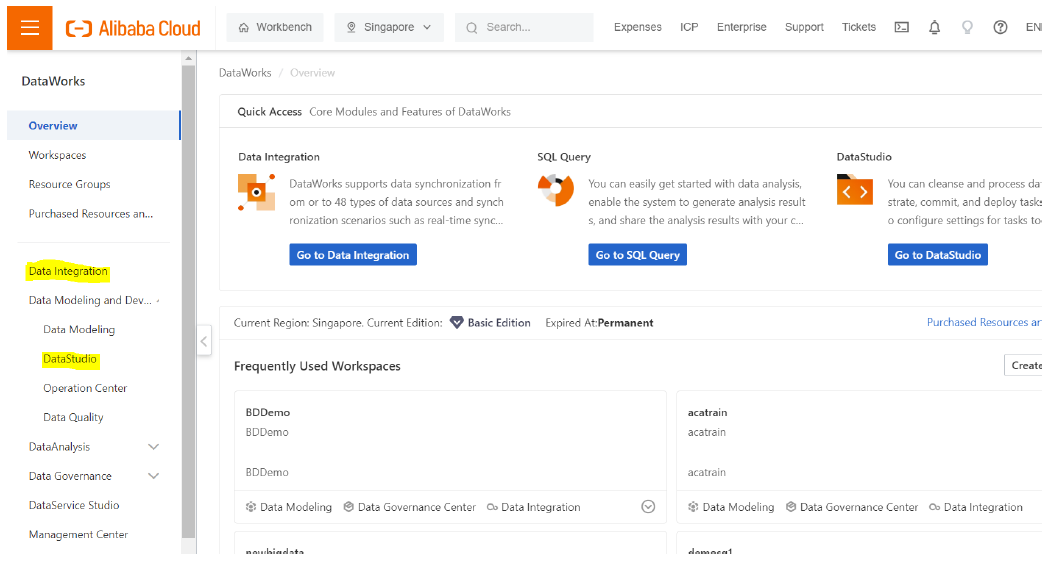

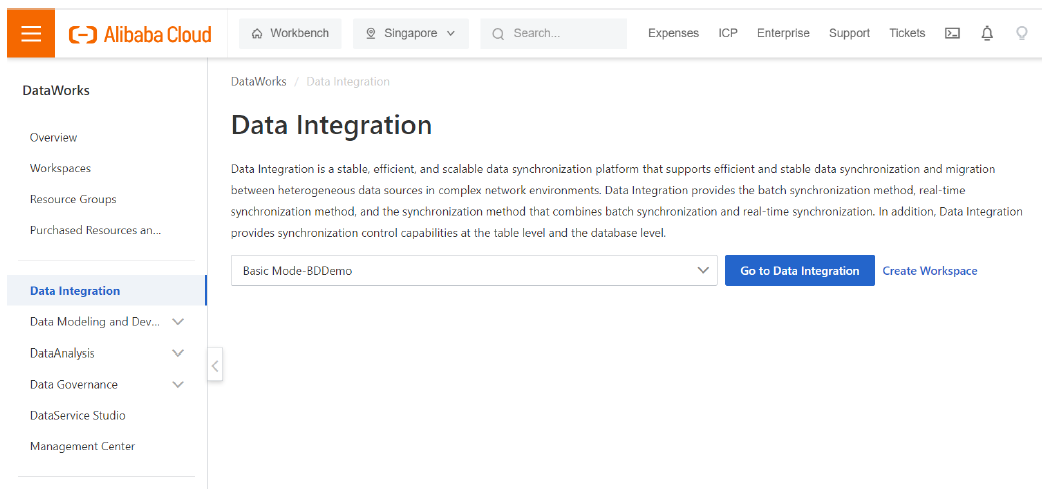

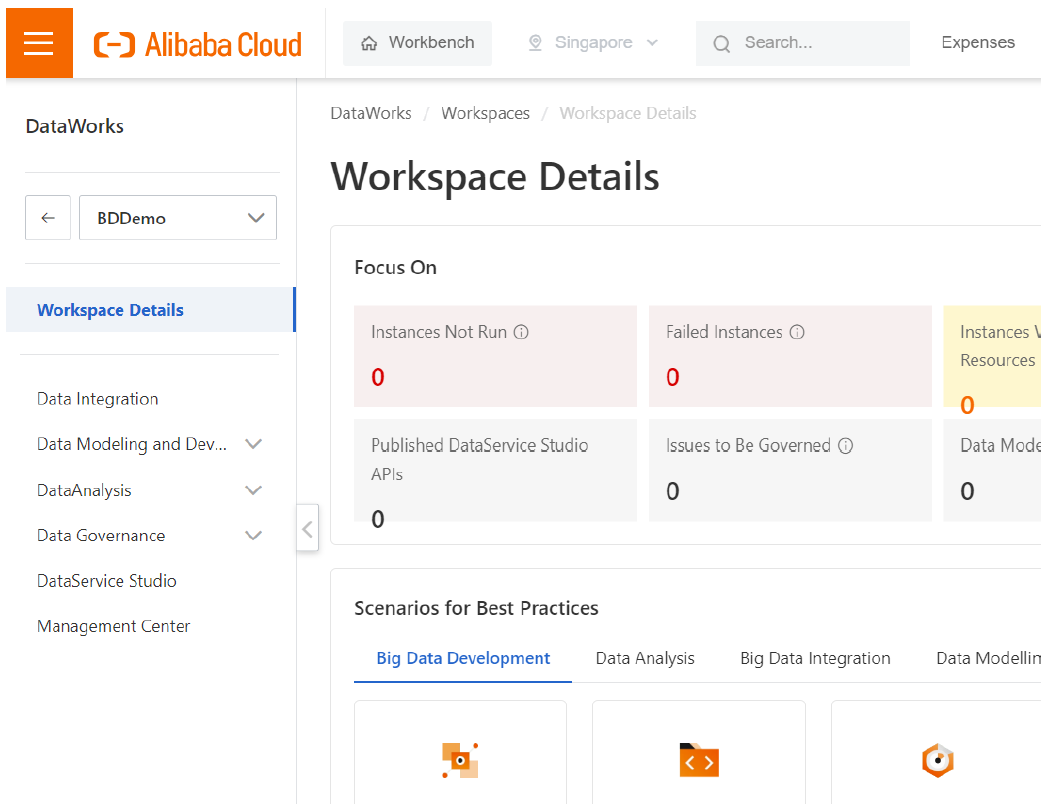

Now we can see the created workspace on the dashboard. For data integration, we use the Data Integration portal and Data Studio. We can reach Data Integration by clicking the “Go to Data Integration” button or the “Data Integration” option in the left pane.

The Data Studio option is also available in the left pane. With the workspace created, we next need to purchase and create a resource group. Click Resource Groups in the left pane.

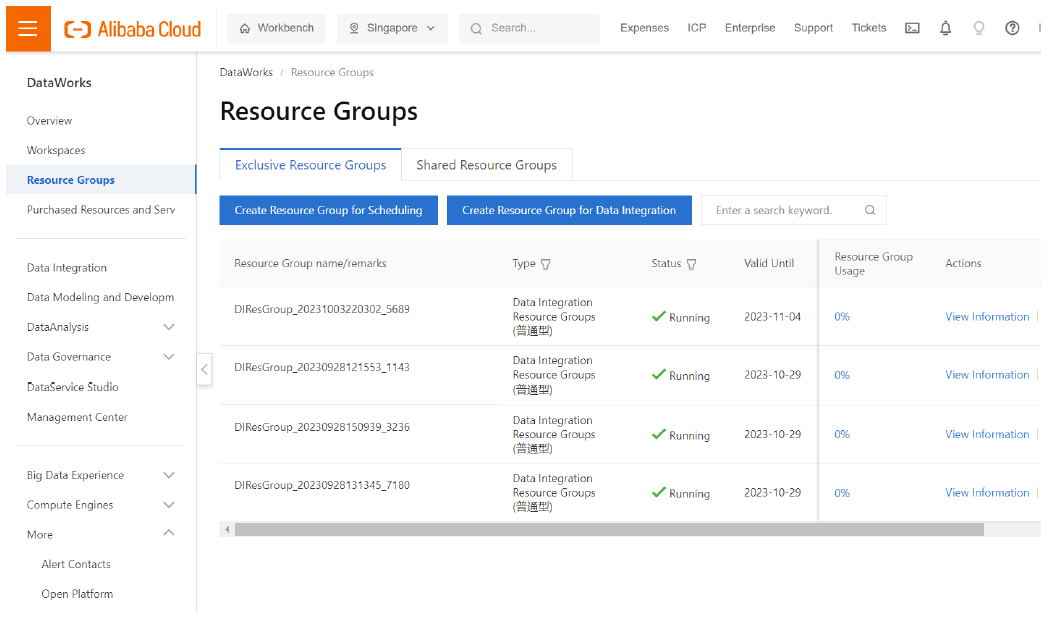

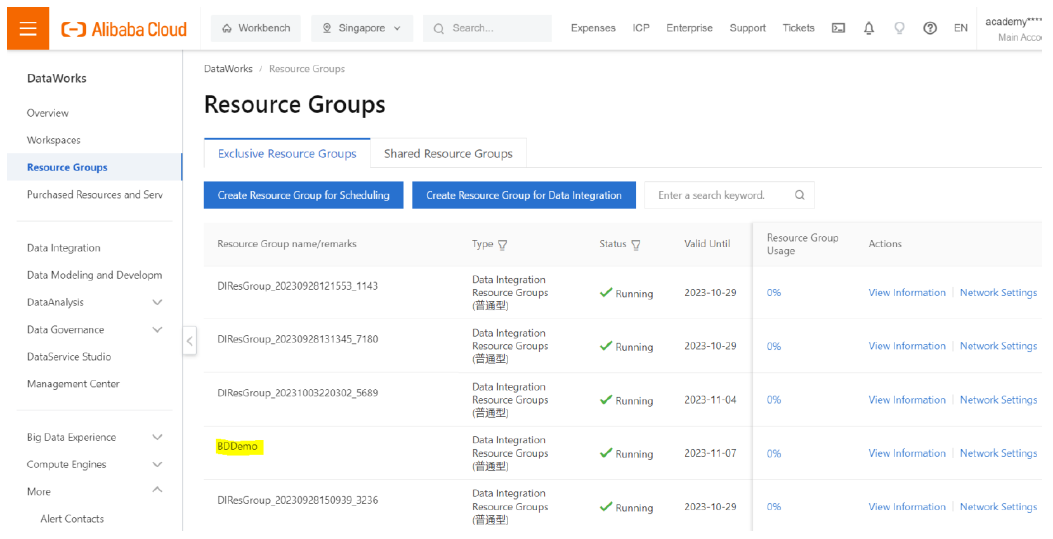

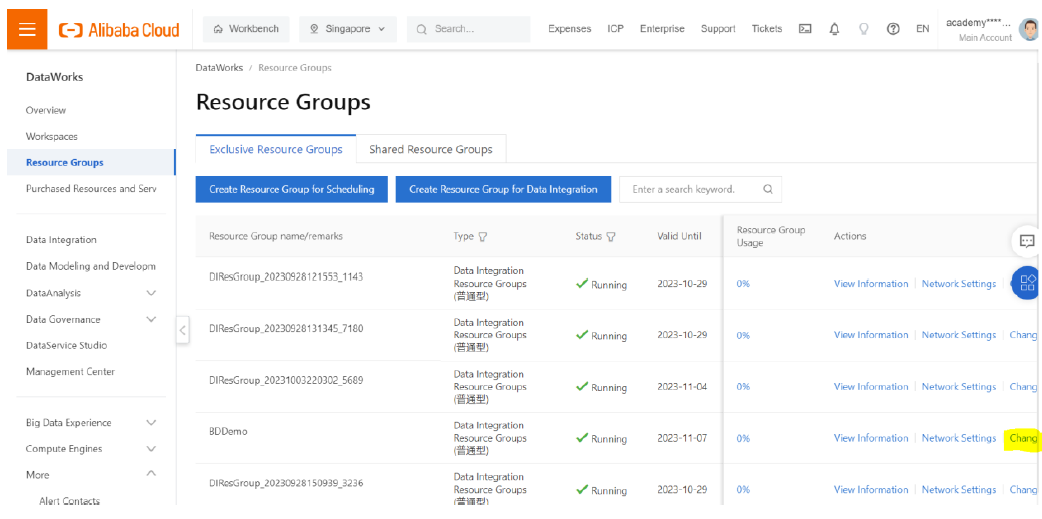

Here I have already created four resource groups for data integration. I can use any of these or create a new one. I choose the latter by clicking “Create Resource Group for Data Integration”.

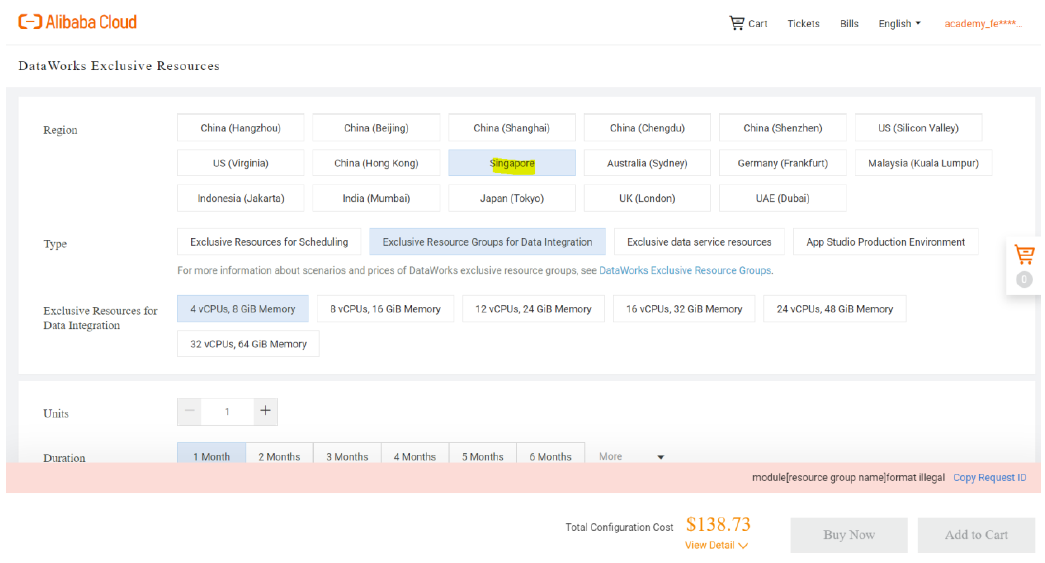

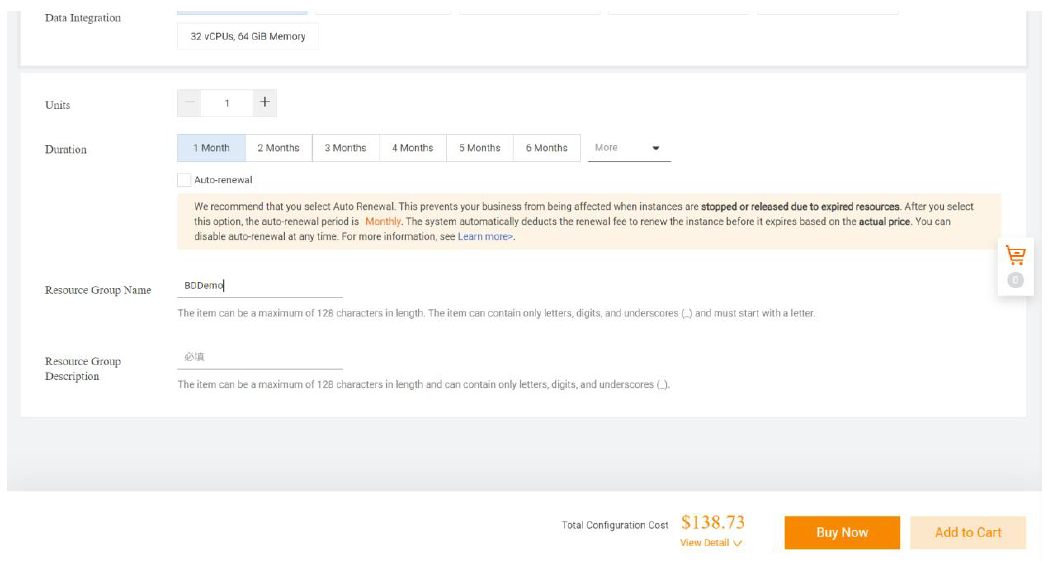

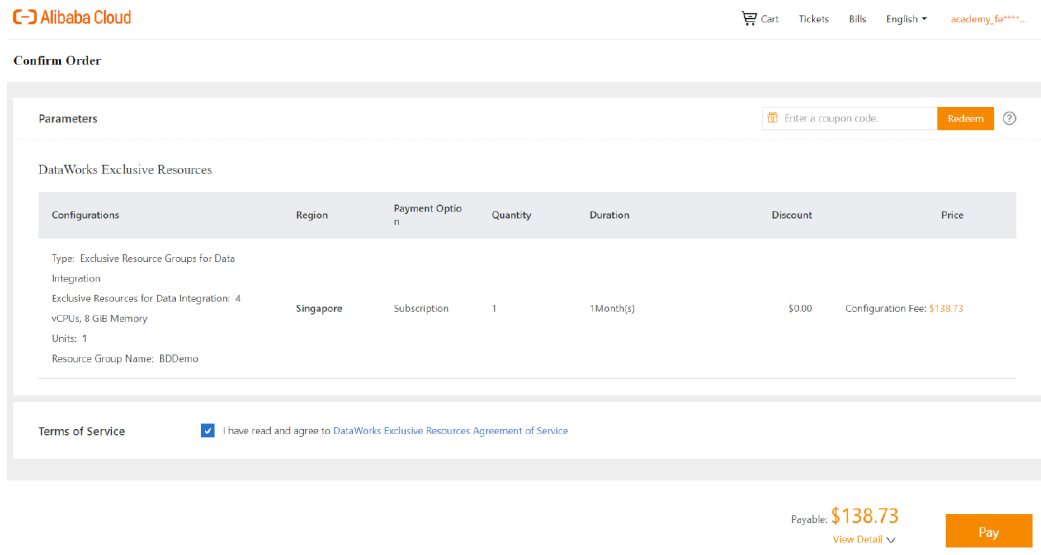

We can select any of the listed regions and choose the configuration we need. Here I have chosen the minimum configuration of 4 vCPUs and 8 GiB of memory for one month.

Enter a name for the resource group so that it is easy to identify while working on the project. Click “Buy Now”.

Agree to the DataWorks Exclusive Resources Agreement of Service, then click “Pay” and proceed with the payment.

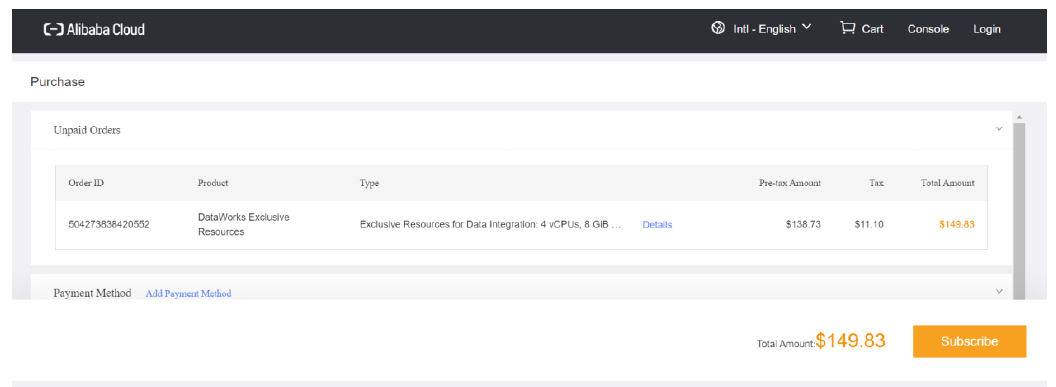

Complete the payment and click “Subscribe” to finish purchasing the resource group.

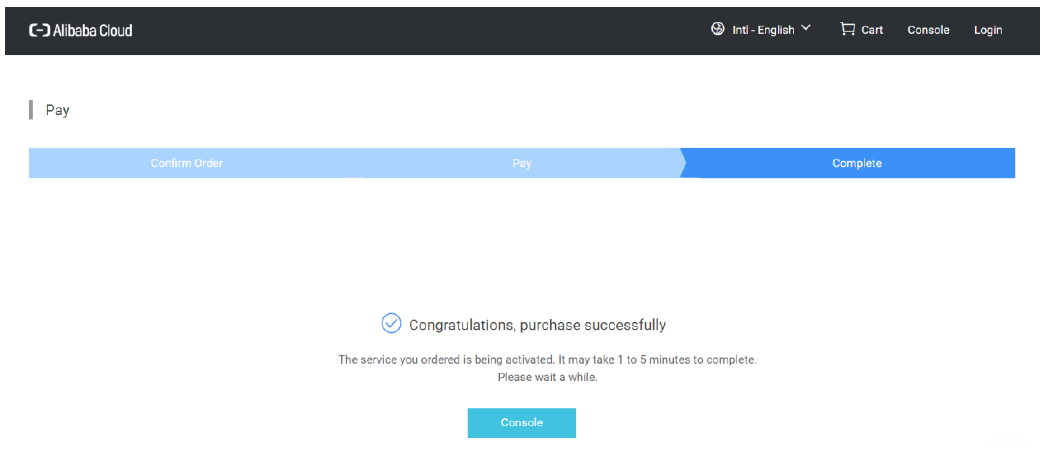

Click Console to continue with data integration and processing.

On the Resource Groups page, the newly purchased resource group is listed. Because we named it during creation, it is easy to locate. Click Data Integration in the left pane.

Select the workspace we created earlier and click “Go to Data Integration”.

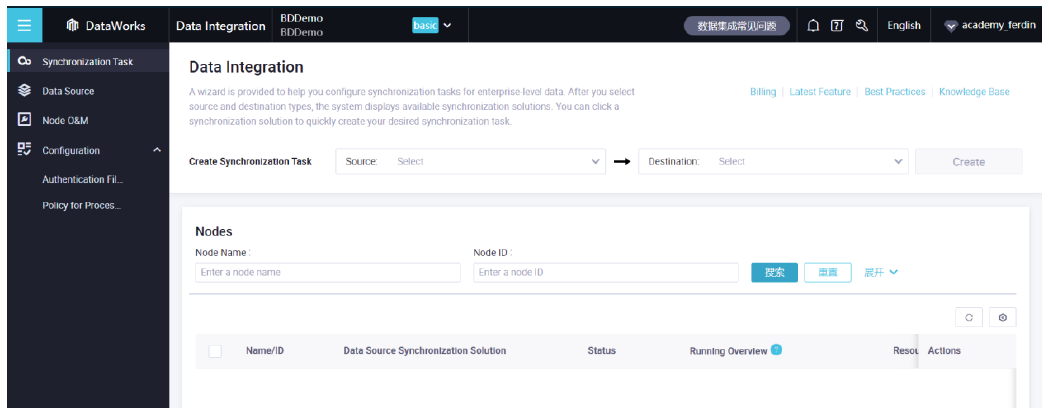

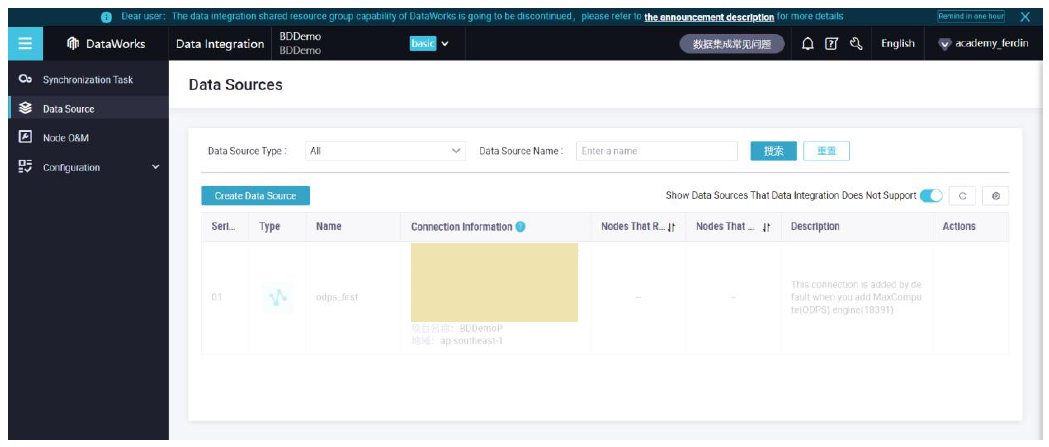

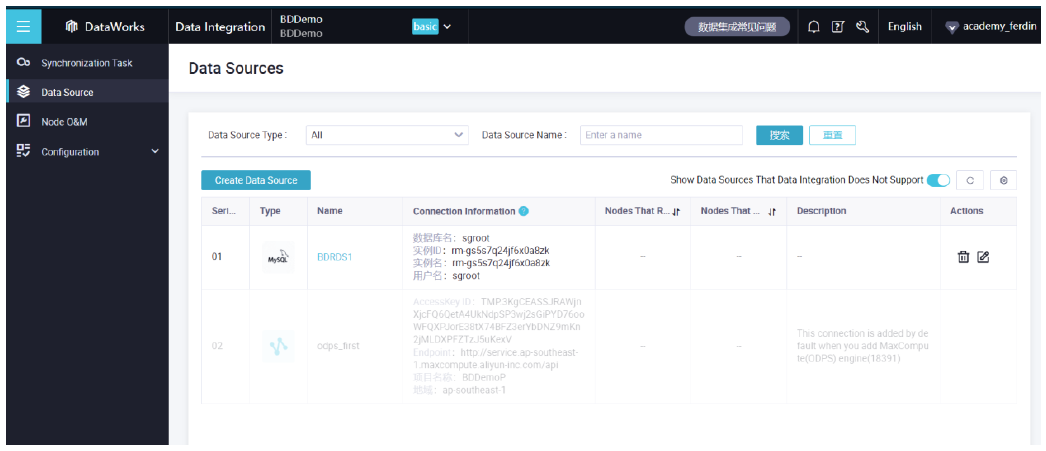

To perform data integration, we need both source and destination data sources. Click “Data Source” in the left pane.

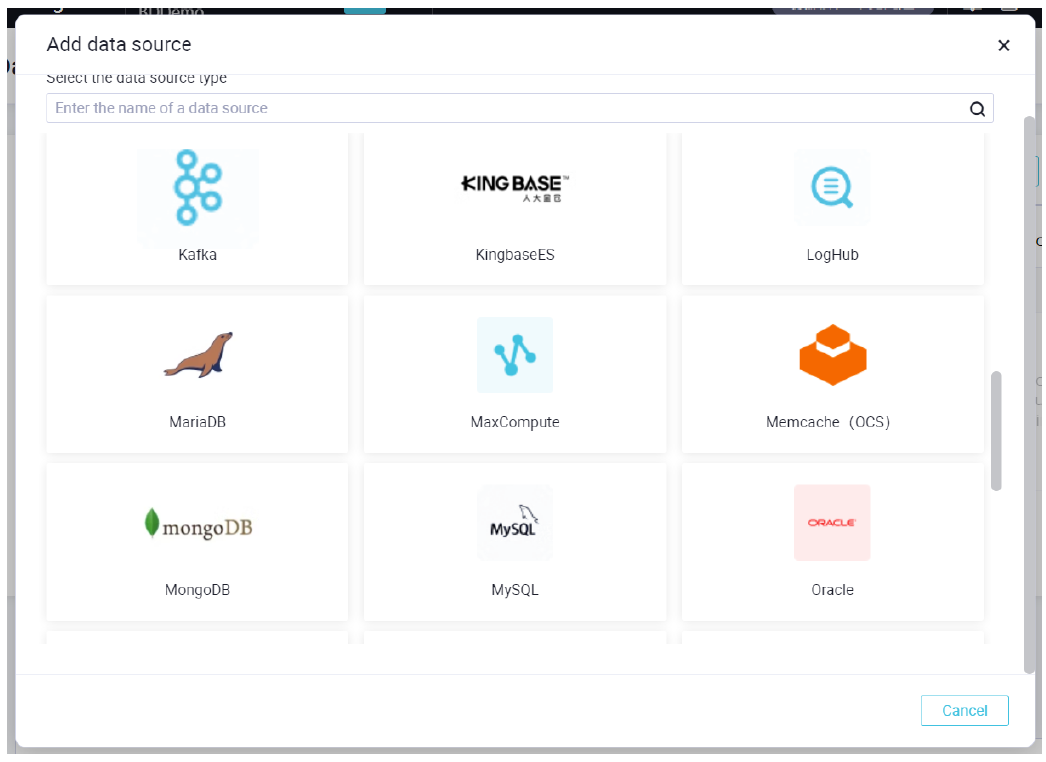

A default ODPS (MaxCompute) data source is available. However, we create our own data sources by adding a MySQL table and data from OSS. Click “Create Data Source”.

We need to add a MySQL database as a data source, so we select “MySQL”.

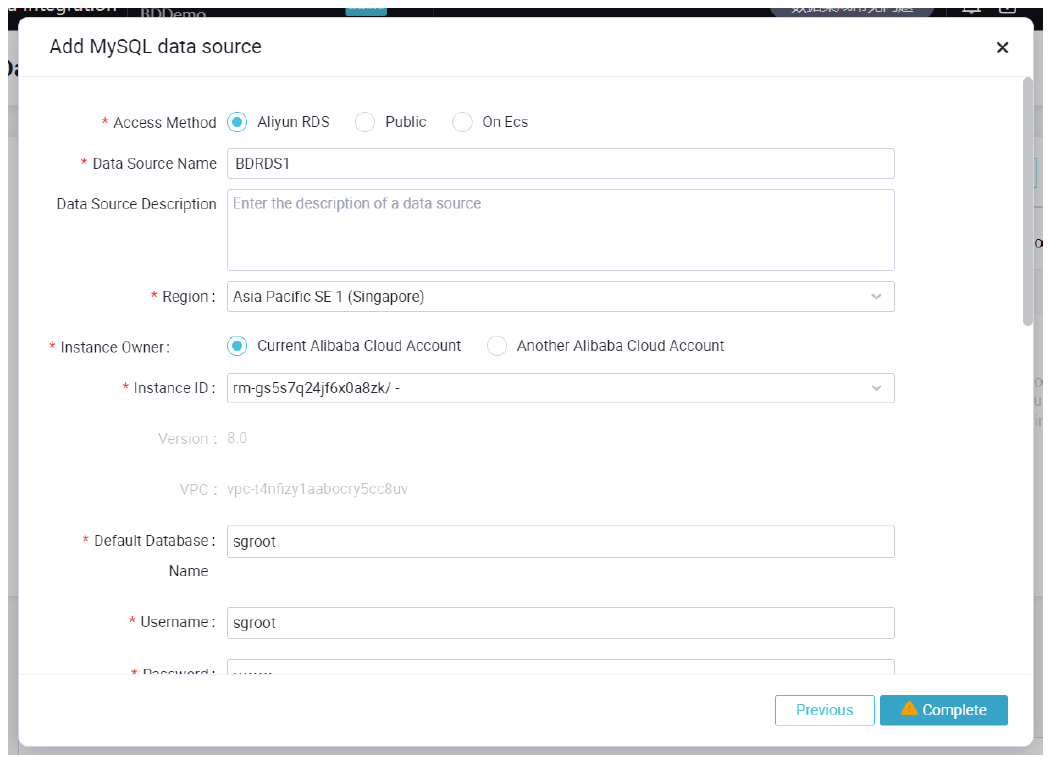

Select Aliyun RDS as the access method, because we are associating an RDS instance. We have named the data source BDRDS1. Select the region where the RDS instance was created; in our case, Singapore. Select Current Alibaba Cloud Account as the instance owner, which lists the RDS instances owned by that account in Singapore. Choose the required RDS instance and type the database name. Then enter the username and password and click Complete.

On the Data Source page, the MySQL data source we configured now appears among the data sources available for data integration. Next, we need to make sure that the resource group we purchased earlier is bound to this workspace. Go back to the DataWorks console and click Resource Groups.
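Before opening the dialog, it can help to collect the values it asks for. The following is only a checklist sketch in Python: the class `MySqlDataSourceForm` and its field names are hypothetical stand-ins for the form fields described above, not a DataWorks API, and the empty strings are placeholders for your own values.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class MySqlDataSourceForm:
    """Hypothetical stand-in for the fields of the MySQL data source dialog."""

    name: str
    access_method: str
    region: str
    instance_owner: str
    rds_instance_id: str
    database: str
    username: str
    password: str

    def missing_fields(self) -> List[str]:
        """Return the names of the fields that are still empty."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


# Values taken from this walkthrough; the empty strings are placeholders
# that you fill in from your own RDS instance.
form = MySqlDataSourceForm(
    name="BDRDS1",
    access_method="Aliyun RDS",
    region="Singapore",
    instance_owner="Current Alibaba Cloud Account",
    rds_instance_id="",
    database="",
    username="",
    password="",
)

# Lists what still has to be looked up before clicking Complete.
print(form.missing_fields())
```

Running the sketch lists the fields that still need a value, which here are the instance, the database name, and the credentials.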

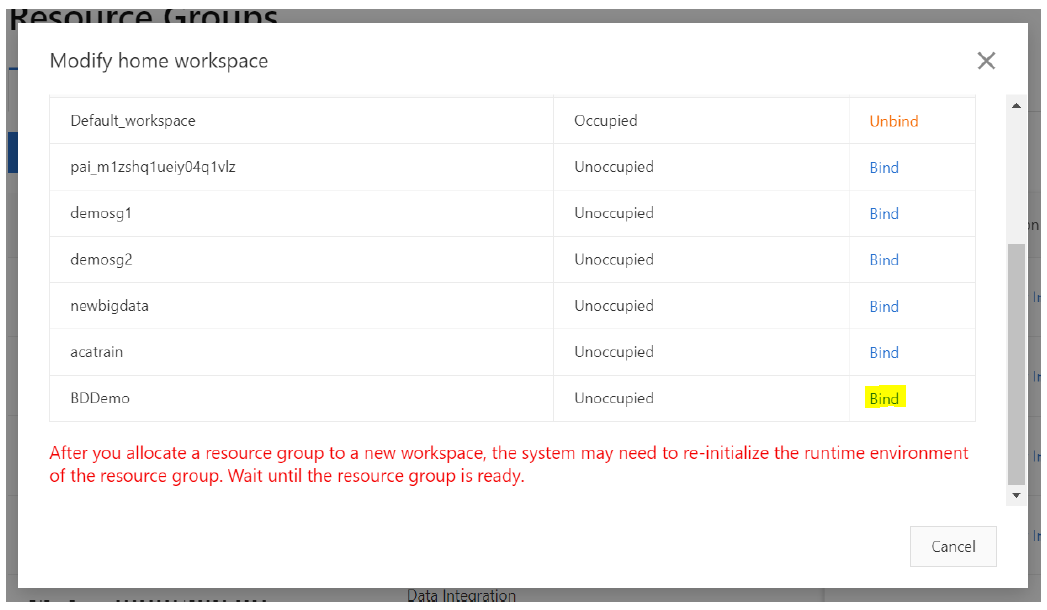

Click the “Change” option for the desired resource group.

Click “Bind” to connect the workspace to the resource group. Then go to DataWorks and click Data Integration.

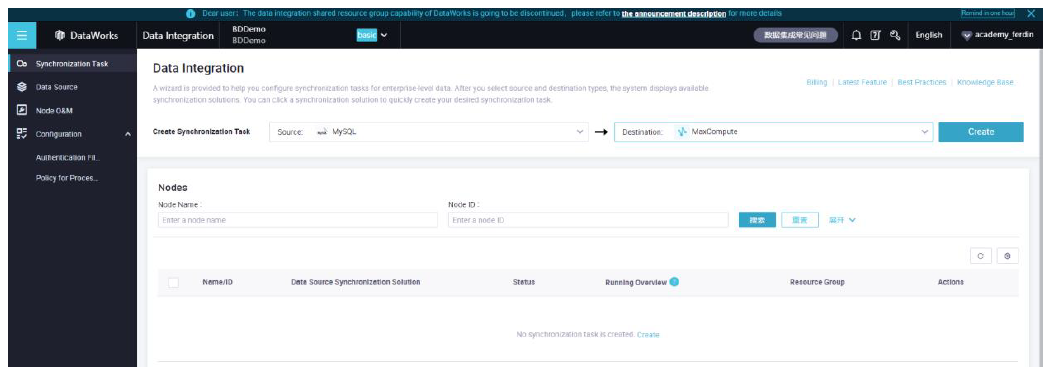

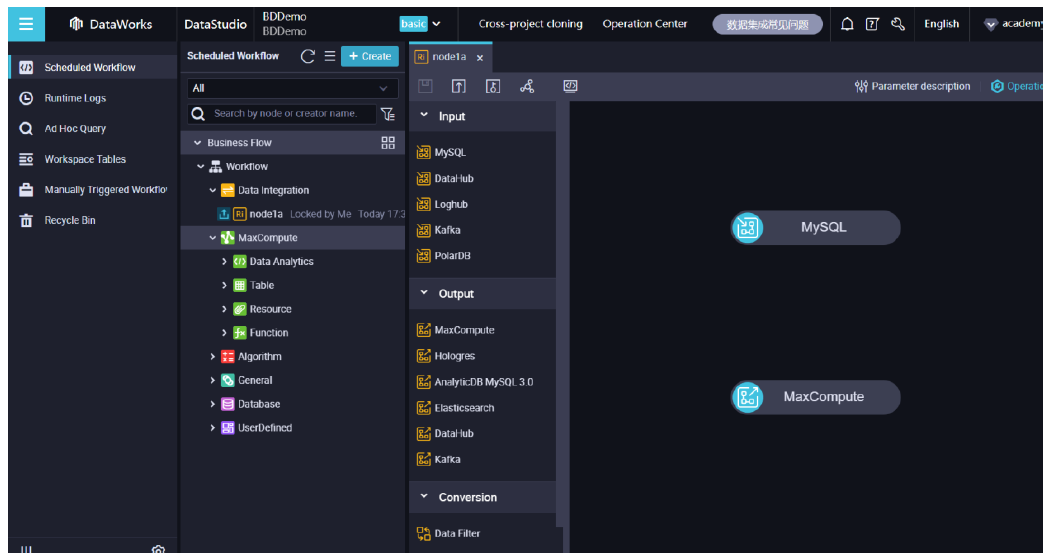

Here we need to create a connection between a MySQL RDS instance and a MaxCompute table. Select MySQL as the source and MaxCompute as the destination, then click Create.

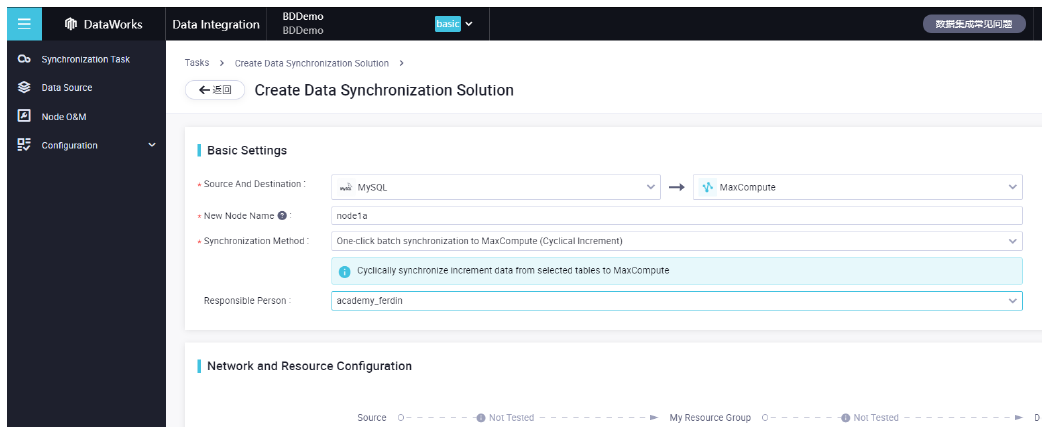

Fill in the details as needed.

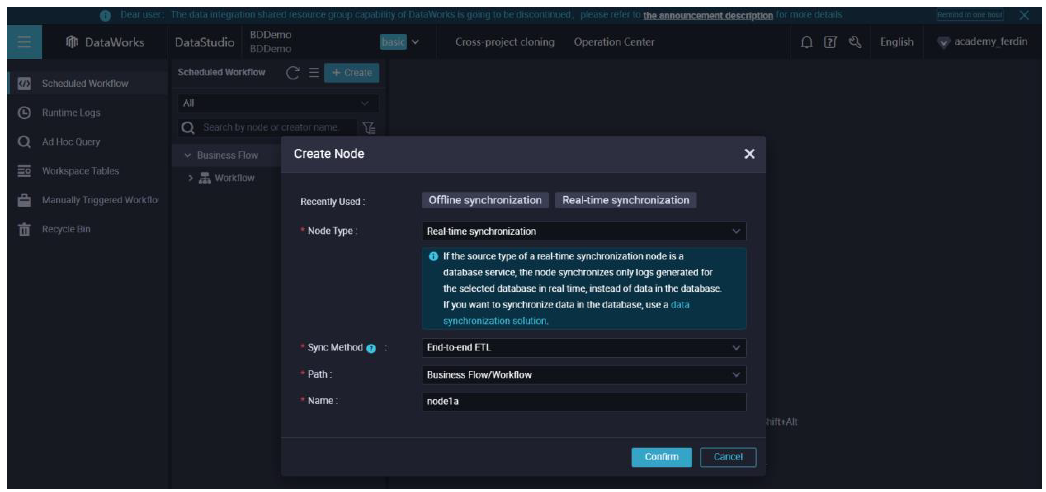

For the synchronization method, select real-time synchronization to a single table. A pop-up then asks to continue in Data Studio. Click Confirm.

Select Business Flow/Workflow as the path. Give the node a convenient name and click Confirm.

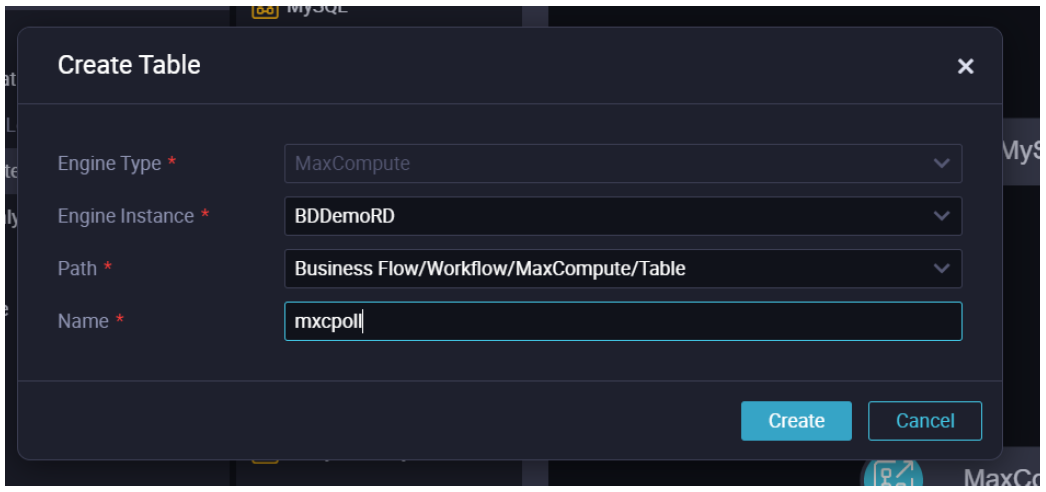

In the second pane, right-click Table under MaxCompute and click Create Table.

Enter a name and click “Create”.

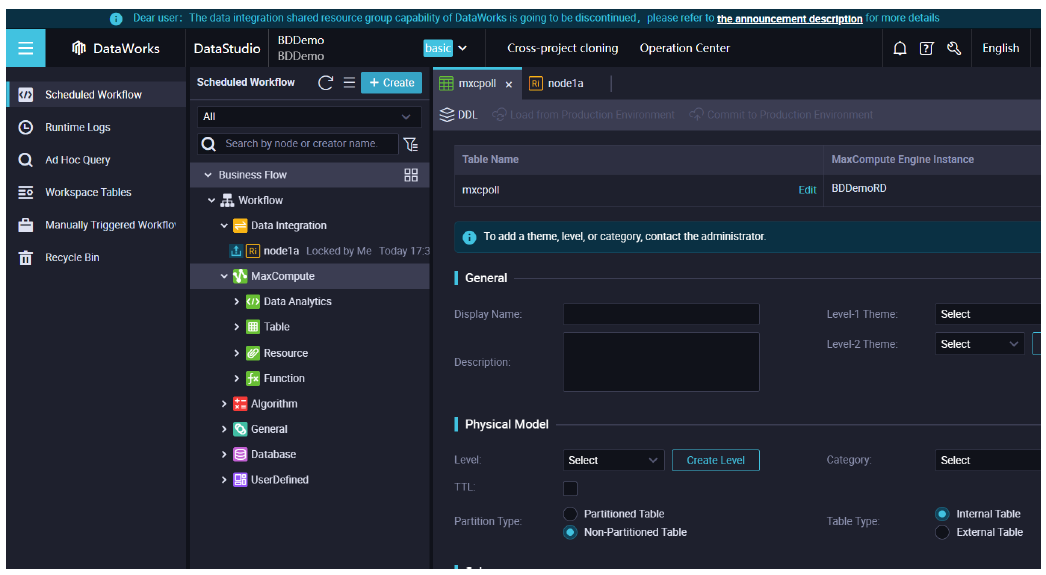

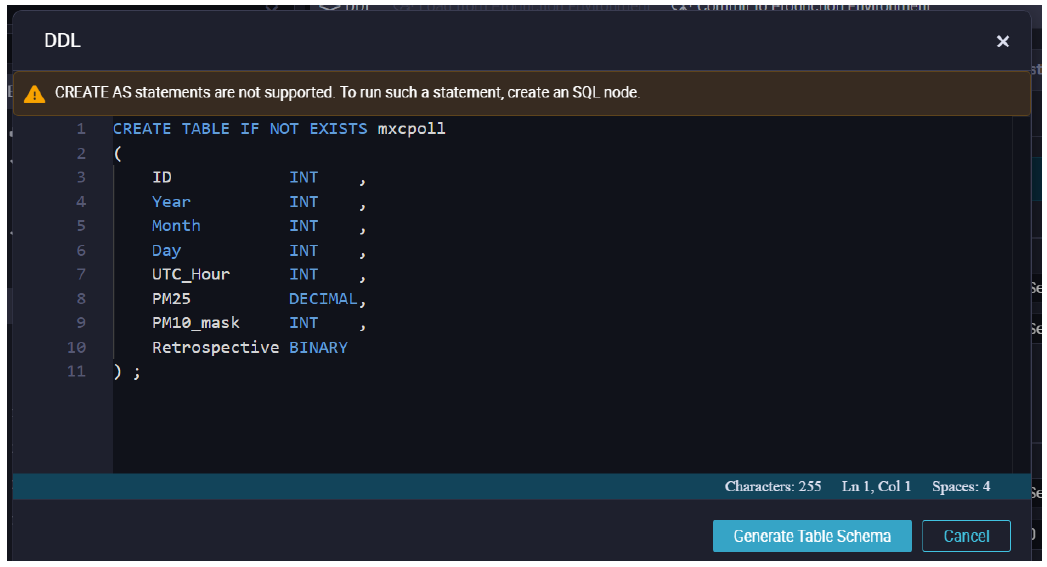

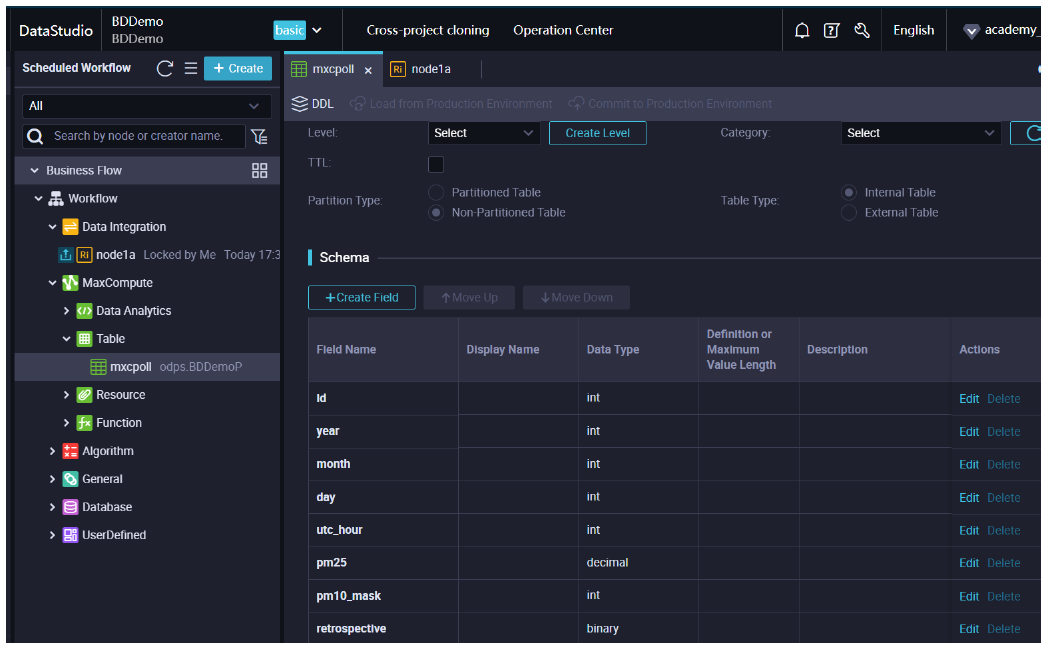

Click DDL.

Enter the DDL statement and click Generate Table Schema.

Now click “Commit to Production Environment”. With this, the destination table is available. Next, we need to add it as a data source. Go to DataWorks and click New.
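The post does not show the statement entered in the DDL box, so here is a minimal sketch of what such a statement could look like. The helper `build_create_table_ddl`, the table name `bd_demo_orders`, and its columns are all assumptions made for illustration; replace them with the columns of your own MySQL source table. The Python code only assembles the text of a MaxCompute-style `CREATE TABLE` statement that can be pasted into the dialog.

```python
from typing import List, Optional, Tuple


def build_create_table_ddl(
    table: str,
    columns: List[Tuple[str, str, str]],
    lifecycle_days: Optional[int] = None,
) -> str:
    """Return a MaxCompute-style CREATE TABLE statement as text.

    Each column is a (name, data_type, comment) tuple.
    """
    column_lines = [
        f"    {name} {dtype} COMMENT '{comment}'"
        for name, dtype, comment in columns
    ]
    ddl = f"CREATE TABLE IF NOT EXISTS {table} (\n" + ",\n".join(column_lines) + "\n)"
    if lifecycle_days is not None:
        # LIFECYCLE is optional; it sets the retention period in days.
        ddl += f"\nLIFECYCLE {lifecycle_days}"
    return ddl + ";"


# Hypothetical destination table, used only for illustration.
ddl = build_create_table_ddl(
    "bd_demo_orders",
    [
        ("order_id", "BIGINT", "primary key of the source row"),
        ("customer_name", "STRING", "customer name"),
        ("amount", "DOUBLE", "order amount"),
    ],
    lifecycle_days=30,
)
print(ddl)
```

The printed statement is what goes into the DDL box before clicking Generate Table Schema.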

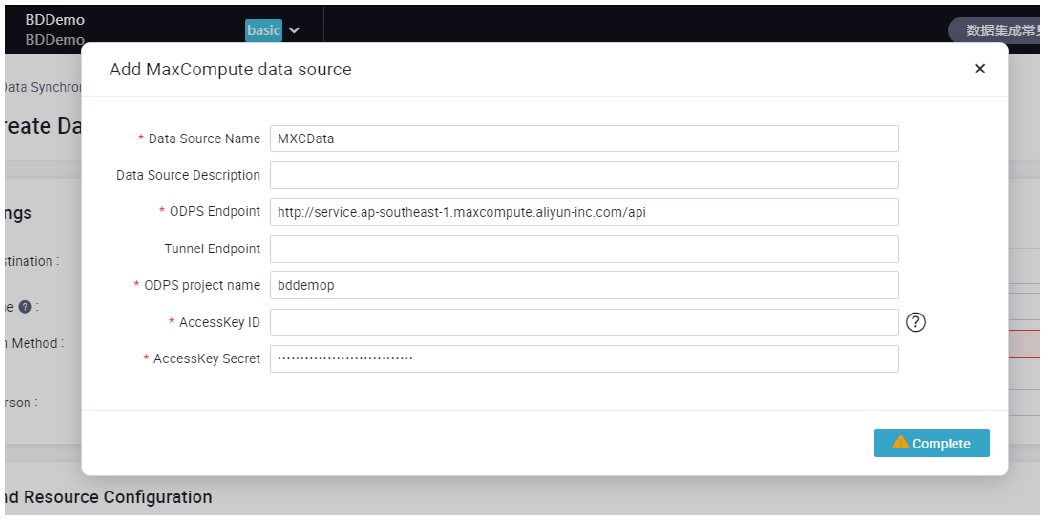

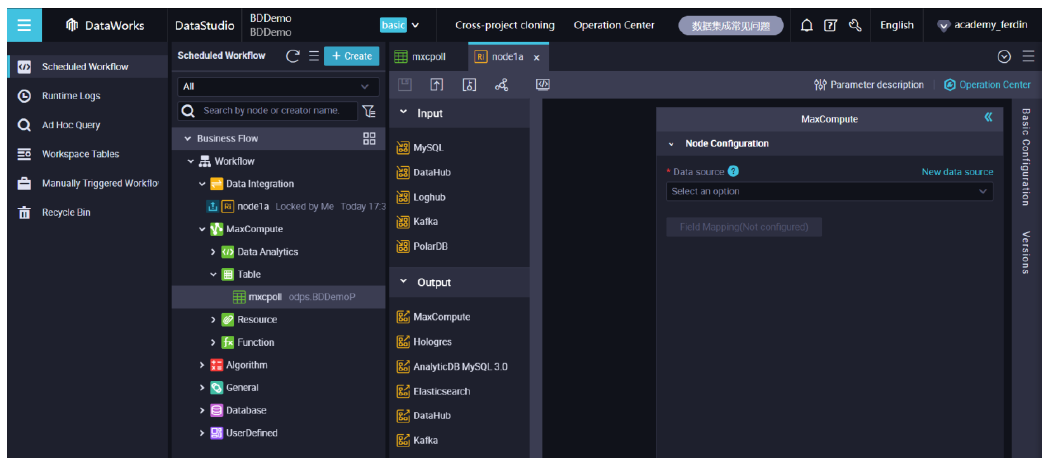

Enter a name for the data source, fill in the details corresponding to the dataset, and click Complete. Now move to the node’s tab and double-click MaxCompute.

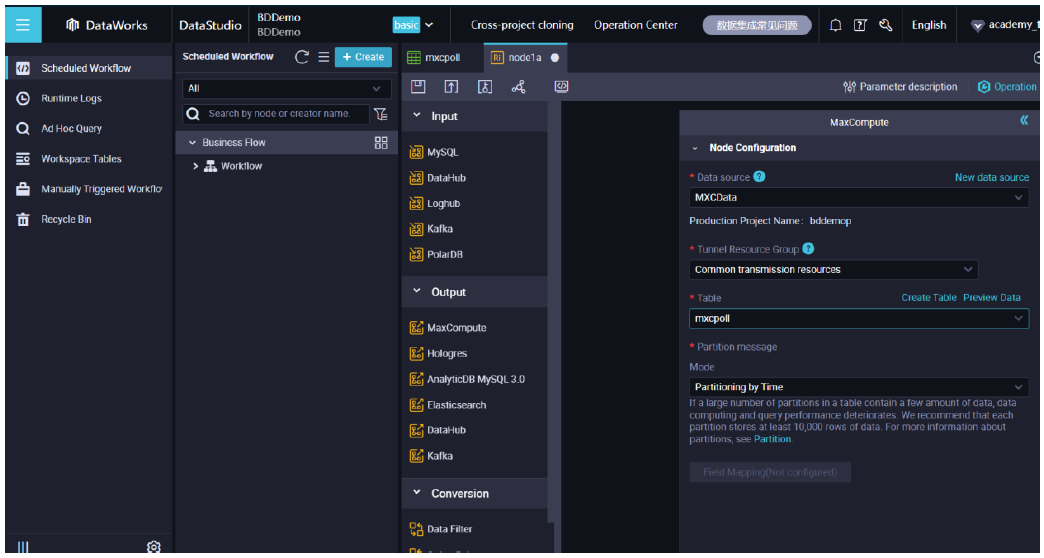

Now go to Data Studio and select the MaxCompute data source.

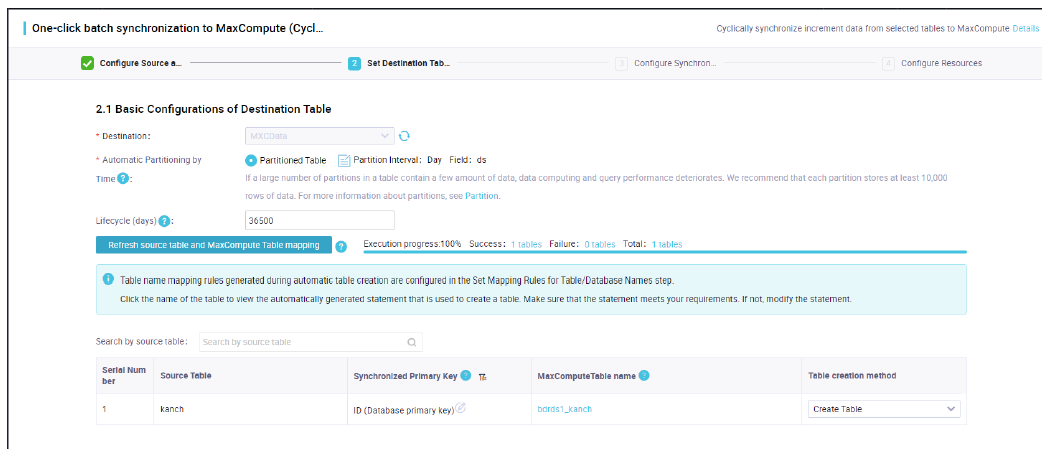

With this, complete the synchronization configuration in DataWorks and refresh the mapping between the source table and the MaxCompute table.
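To make the idea of a table mapping concrete, the sketch below pairs source columns with destination columns that share the same name and reports the ones left over. This is only an illustration of same-name mapping, not the logic DataWorks itself uses; the function `map_columns_by_name` and the column names are hypothetical.

```python
from typing import Dict, List, Tuple


def map_columns_by_name(
    source: List[str], destination: List[str]
) -> Tuple[Dict[str, str], List[str]]:
    """Pair source columns with same-named destination columns.

    The comparison ignores case. Returns the mapping and the list of
    source columns that have no match in the destination.
    """
    dest_lookup = {name.lower(): name for name in destination}
    mapping: Dict[str, str] = {}
    unmapped: List[str] = []
    for col in source:
        target = dest_lookup.get(col.lower())
        if target is None:
            unmapped.append(col)
        else:
            mapping[col] = target
    return mapping, unmapped


# Hypothetical column lists for a MySQL source table and a MaxCompute table.
mapping, unmapped = map_columns_by_name(
    ["order_id", "Customer_Name", "created_at"],
    ["order_id", "customer_name", "amount"],
)
print(mapping)
print(unmapped)
```

Any column reported as unmapped is a hint that the destination table schema or the mapping needs another look before the task is run.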

Provide the required information.

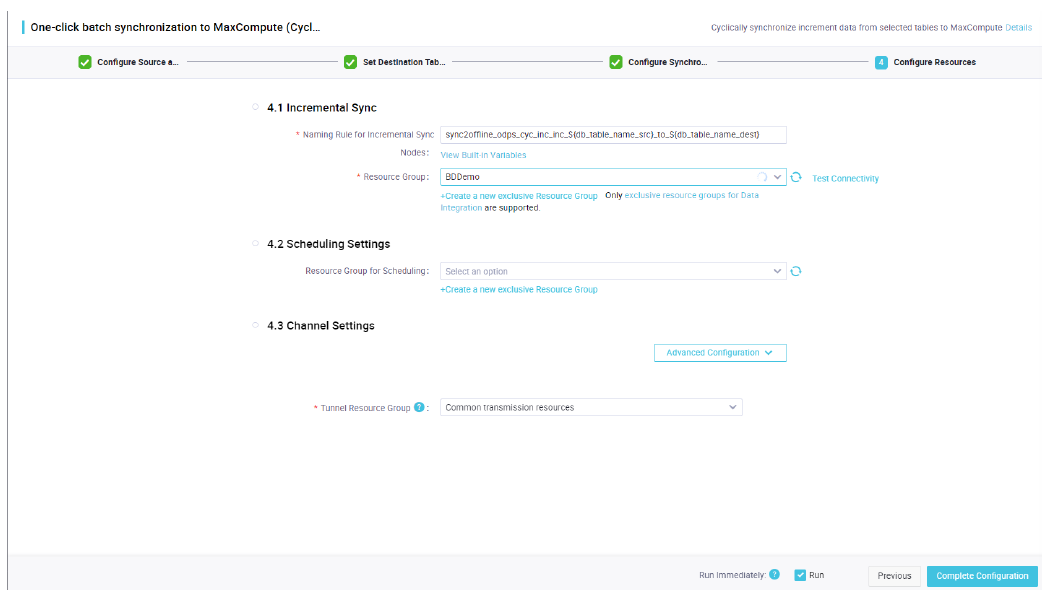

Click on Complete Configuration.

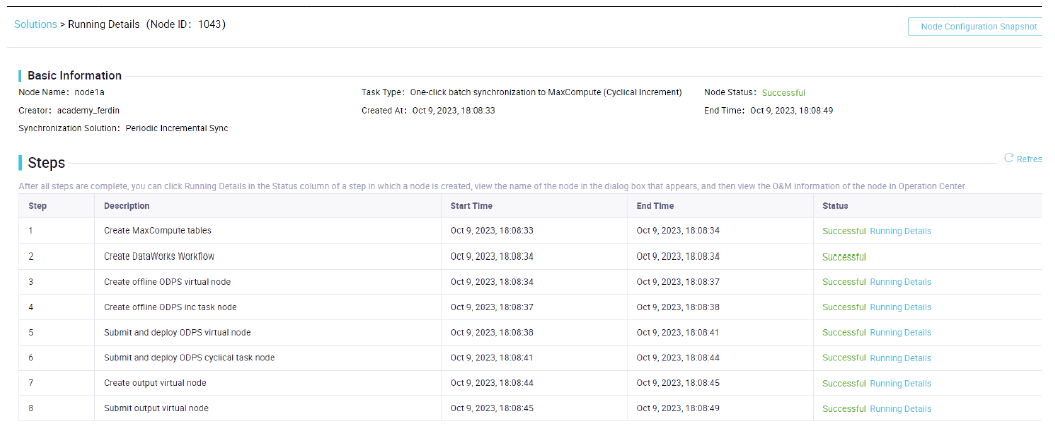

Now the node synchronization is successful. Similarly, we can add any number of data sources to integrate into a MaxCompute data warehouse.

More Posts by ferdinjoe