As a highly regarded next-generation distributed consensus algorithm, Egalitarian Paxos (EPaxos) has broad application prospects. However, EPaxos has not yet been widely implemented in industry, and apart from academic research papers, few articles explain it in detail. One reason is that although the theory behind EPaxos is sound, it is quite difficult to understand. Implementing it also poses many challenges. As a result, the practical application of EPaxos is not yet mature.

This series of articles aims to introduce the EPaxos algorithm in an easy-to-understand way. Readers who are familiar with distributed consensus algorithms such as Paxos and Raft should be able to understand EPaxos step by step, so that this otherwise obscure algorithm becomes accessible to many more people.

In the first part of the EPaxos trilogy, I reviewed Paxos and then introduced the basic concepts of EPaxos along with an intuitive understanding of it. Readers of that article should already have an overall impression of EPaxos.

This article is the second part of the EPaxos trilogy. It introduces the core protocol process of EPaxos by comparing it with Paxos. Readers should find answers to the questions raised at the end of the previous article. This article assumes familiarity with distributed consensus algorithms such as Paxos or Raft.

EPaxos is a leaderless consensus algorithm: no leader is elected, and any replica can initiate proposals. The meaning of leaderless can also be read the opposite way, because each replica can be considered a leader. Suppose Multi-Paxos or Raft runs multiple groups and each replica leads one group. Each replica then serves as the leader of its own group and as a follower in all the other groups, which achieves an effect similar to that of a leaderless algorithm.

When the leaderless effect is achieved with multiple groups, each group independently reaches consensus on its own series of instances, producing one instance sequence per group. Instances in different groups are independent of each other, and their relative order cannot be determined. Consensus across groups is therefore a major problem that cannot be solved at the consensus level. It would instead have to be solved by distributed transactions at a higher layer.

EPaxos solves this problem and is truly leaderless. It tracks the dependencies of instances and determines the relative order of instances generated in different groups. It then sorts the instance sequences from the different groups and merges them into one overall instance sequence. In this way, it achieves consensus across groups, that is, a truly leaderless algorithm.

EPaxos runs the consensus protocol first, making the replicas reach a consensus over the instance value and the relative order of instance dependencies. Then, EPaxos runs the sorting algorithm. Based on the previously agreed relative order of instances, it sorts all instances to obtain a consistent overall sequence of instances.

The above describes the basic idea of EPaxos from the perspective of Multi-Paxos or Raft using multiple groups to achieve a leaderless effect. In fact, a group is a concept outside the consensus algorithm itself and is introduced here only for convenience. EPaxos has no concept of a group, although, as with Paxos or Raft, multiple groups can be implemented on top of EPaxos.

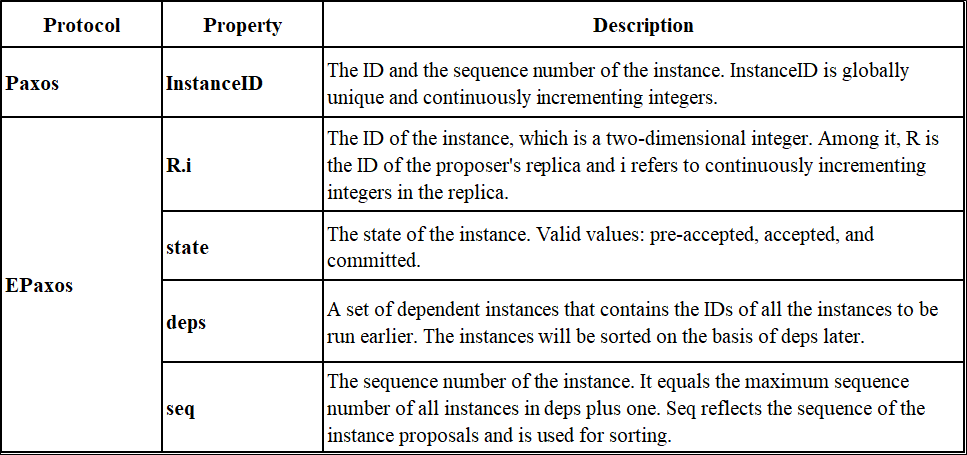

Instances in EPaxos differ somewhat from those in Paxos. Paxos instances are assigned sequence numbers in advance. EPaxos instances, by contrast, have no predetermined sequence numbers, and each replica can concurrently commit instances out of order. EPaxos tracks and records the dependencies between these instances and sorts them based on those dependencies. The differences are summarized below:

Table 1: Differences between EPaxos instances and Paxos instances

Instances in Paxos are identified by InstanceIDs, which also serve as their sequence numbers and are globally unique and continuously increasing.

The instance space of EPaxos is two-dimensional, with one row per replica. Instances are therefore identified by a two-dimensional ID, R.i, where R is the ID of the replica and i is an integer that increases continuously within that replica, incremented by one for each new instance the replica starts. The sequence of instances in replica R is R.1, R.2, R.3, ..., R.i, ...
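The following minimal Python sketch makes the two-dimensional numbering concrete. The `InstanceID` and `InstanceSpace` names are hypothetical. The shared `InstanceSpace` object simulates, in one process, what would be a separate counter on each replica:

```python
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class InstanceID:
    """Two-dimensional EPaxos instance identifier R.i."""
    replica: int  # R: ID of the replica that owns this row of the instance space
    index: int    # i: per-replica counter, starting at 1

    def __str__(self) -> str:
        return f"{self.replica}.{self.index}"


class InstanceSpace:
    """Hypothetical simulation helper: one independent counter per replica row."""

    def __init__(self) -> None:
        self.next_index: dict[int, int] = {}

    def new_instance(self, replica: int) -> InstanceID:
        # A replica only increments its own row, so no coordination is needed.
        i = self.next_index.get(replica, 0) + 1
        self.next_index[replica] = i
        return InstanceID(replica, i)


space = InstanceSpace()
a = space.new_instance(1)  # first instance started by R1
b = space.new_instance(5)  # first instance started by R5
c = space.new_instance(1)  # second instance started by R1
print(a, b, c)  # 1.1 5.1 1.2
```

Because each replica allocates IDs only in its own row, two replicas can start instances at the same time without agreeing on a number first. A globally contiguous Paxos InstanceID does not allow this.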

Compared with instances of Paxos, instances of EPaxos have some additional properties:

Like the value of an instance, the deps and seq properties of an EPaxos instance must reach consensus among the replicas. Each replica then sorts the instances independently based on deps and seq. Because the EPaxos sorting algorithm is deterministic, sorting with the same deps and seq on every replica eventually yields a consistent overall instance sequence.

Instances are the vertices of a graph, and deps are the outgoing edges of those vertices. Once the vertices and edges of the graph have been determined and agreed upon among the replicas, each replica schedules the graph deterministically and obtains the same sequence of instances.
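The following minimal sketch shows how the agreed properties map onto that graph. It assumes that instance IDs are written as strings such as "1.1" and that the hypothetical `Instance` record holds only the command, deps, and seq:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    cmd: str                       # the agreed value (command)
    deps: frozenset = frozenset()  # IDs of instances this one depends on
    seq: int = 1                   # agreed seq attribute


def dependency_graph(instances):
    """Vertices are instance IDs; each instance's deps become its outgoing edges."""
    return {iid: sorted(inst.deps) for iid, inst in instances.items()}


instances = {
    "1.1": Instance("A"),
    "5.1": Instance("B", frozenset({"1.1"}), 2),
    "1.2": Instance("C", frozenset({"1.1", "5.1"}), 3),
}
graph = dependency_graph(instances)
print(graph)  # {'1.1': [], '5.1': ['1.1'], '1.2': ['1.1', '5.1']}
```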

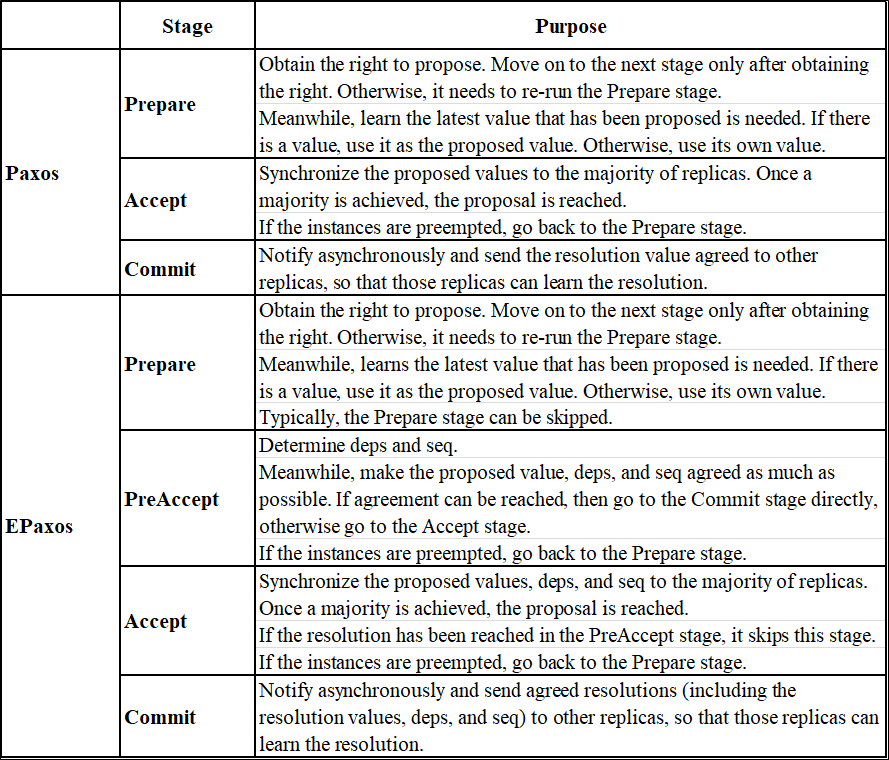

Paxos requires two stages to commit a value. EPaxos has one more stage to determine deps and seq, so a full run of EPaxos involves three stages, although not every stage is always required. The following table compares the protocol processes of Paxos and EPaxos:

Table 2: Comparison of the protocol processes between Paxos and EPaxos

The Prepare and Accept stages of EPaxos are similar to those of Paxos. However, EPaxos adds a PreAccept stage, which is also its most critical stage. Because of the PreAccept stage, EPaxos instances have more states, so a dedicated status property identifies the current state of an instance (pre-accepted, accepted, or committed). No status is defined for the Prepare stage, because no value is proposed in that stage. That state can instead be recognized by the absence of a locally stored proposed value. In the common case, EPaxos runs only the PreAccept and Commit stages, and the Prepare and Accept stages are skipped.
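The extra states can be sketched as an ordered enumeration. This is an illustrative Python sketch only. The `Status` names are hypothetical, and the sketch models only the normal, failure-free case, ignoring ballot numbers and recovery:

```python
from enum import IntEnum


class Status(IntEnum):
    NONE = 0         # no proposed value stored locally (e.g. only Prepare seen)
    PREACCEPTED = 1
    ACCEPTED = 2
    COMMITTED = 3


# Statuses an instance passes through on each path (failure-free case only).
FAST_PATH = [Status.PREACCEPTED, Status.COMMITTED]
SLOW_PATH = [Status.PREACCEPTED, Status.ACCEPTED, Status.COMMITTED]


def advance(current: Status, target: Status) -> Status:
    """In this sketch, status never moves backward."""
    if target < current:
        raise ValueError(f"cannot move from {current.name} to {target.name}")
    return target


status = Status.NONE
for step in FAST_PATH:
    status = advance(status, step)
print(status.name)  # COMMITTED
```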

Similar to Paxos, EPaxos needs to go back to the Prepare stage and start over if the instances are found to have been preempted at any stage.

The Prepare stage plays a similar role in Paxos and EPaxos. It obtains the right to propose and learns the latest values that have been proposed before. In EPaxos, each replica has its own independent instance space and proposes only within that space, which makes it equivalent to the leader in Multi-Paxos. Therefore, just as in Multi-Paxos, EPaxos can usually skip the Prepare stage and start directly from the next stage.

The PreAccept stage is unique to EPaxos, which is used to determine deps and seq and try to make the proposed value, deps, and seq reach a consensus among the replicas. If the consensus has been reached in the PreAccept stage, it directly moves on to the Commit stage (Fast Path). Otherwise, it needs to run the Accept stage, and then to the Commit stage (Slow Path).

How does the PreAccept stage determine deps and seq? It is relatively simple. Each replica collects, from the instances it already holds locally, those that must execute earlier. These form its local deps. Its local seq is the maximum seq of all instances in its local deps plus one. The final deps is the union of the local deps of a majority of replicas, and the final seq is the maximum of their local seq values.
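The rule can be sketched in a few lines of Python. This simplified sketch assumes no conflict filtering, so every locally known instance must execute earlier. It also represents a replica's local state as a hypothetical map from instance ID to seq:

```python
def local_attributes(local):
    """local: dict instance_id -> seq for instances this replica already holds."""
    deps = frozenset(local)                   # every known instance goes first
    seq = 1 + max(local.values(), default=0)  # max seq among deps, plus one
    return deps, seq


def merge(replies):
    """replies: (deps, seq) pairs from a quorum -> final (deps, seq)."""
    deps = frozenset().union(*(d for d, _ in replies))  # union of local deps
    seq = max(s for _, s in replies)                    # maximum local seq
    return deps, seq


# Two replicas hold nothing yet; a third already holds instance 1.1 with seq 1.
replies = [local_attributes({}), local_attributes({}), local_attributes({"1.1": 1})]
final = merge(replies)
print(sorted(final[0]), final[1])  # ['1.1'] 2
```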

When replicas propose concurrently, different replicas may have different local deps and local seq. So how is consensus reached in the PreAccept stage? If enough replicas (a Fast Quorum) return the same local deps and local seq, consensus has been reached. Otherwise, the final deps is the union of the local deps of a majority of replicas (a Slow Quorum), and the final seq is the maximum of their local seq values. Consensus is then reached in the Accept stage.
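The fast-path versus slow-path decision can be sketched as follows. This is an illustrative Python sketch, not the paper's pseudocode. `decide_preaccept` is a hypothetical helper, quorum sizes count the proposer itself, and replies are assumed to already include the proposer's own attributes. The function is meant to be called once the proposer stops waiting for replies:

```python
def decide_preaccept(proposer_attrs, replies, fast_quorum, slow_quorum):
    """proposer_attrs: the proposer's own (deps, seq).
    replies: (deps, seq) pairs received from the other replicas."""
    others_needed = fast_quorum - 1
    fast = replies[:others_needed]
    if others_needed > 0 and len(fast) == others_needed and all(r == fast[0] for r in fast):
        return "fast", fast[0]           # identical answers: go straight to Commit
    if len(replies) + 1 >= slow_quorum:
        attrs = [proposer_attrs, *replies]
        deps = frozenset().union(*(d for d, _ in attrs))
        seq = max(s for _, s in attrs)
        return "slow", (deps, seq)       # run the Accept stage with these values
    return "wait", None                  # not enough replies yet


empty, a = frozenset(), frozenset({"1.1"})
print(decide_preaccept((empty, 1), [(a, 2), (empty, 1)], fast_quorum=3, slow_quorum=3))
```

In practice, a proposer usually waits (with a timeout) for a full Fast Quorum before falling back to the slow path, so that a fast-path decision is not missed.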

The Fast Quorum in the PreAccept stage always includes the proposer. The reason is discussed later. The Fast Quorum is never smaller than the Slow Quorum. If the total number of replicas is $N = 2F + 1$, up to $F$ replicas can fail at the same time. The basic Fast Quorum size is $2F$, which EPaxos can optimize to $F + \lfloor (F+1)/2 \rfloor$, while the Slow Quorum size is $F + 1$. The derivation of the Fast Quorum size is not covered here but will be discussed in detail in a subsequent article. The Slow Quorum is a majority of replicas, the same quorum used in the Accept stage of Paxos.
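The quorum sizes can be checked with a small Python helper. The helper is hypothetical and is valid only for an odd number of replicas, $N = 2F + 1$:

```python
def quorum_sizes(n: int) -> dict:
    """Quorum sizes for n = 2F + 1 replicas (n must be odd and at least 3)."""
    if n < 3 or n % 2 == 0:
        raise ValueError("expected an odd number of replicas, at least 3")
    f = (n - 1) // 2                         # number of tolerated failures
    return {
        "F": f,
        "slow": f + 1,                       # majority, as in the Paxos Accept stage
        "fast": 2 * f,                       # basic Fast Quorum
        "fast_optimized": f + (f + 1) // 2,  # optimized Fast Quorum
    }


for n in (3, 5, 7):
    print(n, quorum_sizes(n))
```

For $N = 5$, this gives an optimized Fast Quorum of 3. That matches the three-replica Fast Quorum (R1, R2, R3) used in the case study of this article.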

The Accept stage plays a similar role in EPaxos and Paxos. Paxos replicates only the proposed value to a majority of replicas, while EPaxos replicates the proposed value, deps, and seq. Once a majority has accepted them, a decision is reached. If a decision was already reached in the PreAccept stage, this stage is skipped and the protocol goes directly to the Commit stage.

The Commit stage also plays a similar role in Paxos and EPaxos. It asynchronously sends decisions to the other replicas so that they can learn them. The difference is that an EPaxos decision includes not only the value but also deps and seq.

Unlike in Paxos, the order of EPaxos instances is not yet determined when they are committed. Therefore, EPaxos needs an additional process to sort committed instances. Sorting can begin once an instance, and every instance reachable through its deps, has been committed.
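Here is a minimal sketch of this readiness check. It assumes that every instance reachable through deps must be committed, not only the direct deps. `ready_to_order` is a hypothetical helper, and it uses an explicit stack rather than recursion:

```python
def ready_to_order(root, deps, committed):
    """Return True if root and every instance reachable through deps are committed.
    deps: dict instance_id -> iterable of dependency IDs; committed: set of IDs."""
    stack, seen = [root], {root}
    while stack:                    # explicit stack: no recursion depth limit
        iid = stack.pop()
        if iid not in committed:
            return False            # must wait for this instance to commit
        for dep in deps.get(iid, ()):
            if dep not in seen:
                seen.add(dep)
                stack.append(dep)
    return True


deps = {"1.1": [], "5.1": ["1.1"], "1.2": ["1.1", "5.1"]}
print(ready_to_order("1.2", deps, {"1.1", "5.1", "1.2"}))  # True
print(ready_to_order("1.2", deps, {"1.1", "1.2"}))         # False: 5.1 not committed yet
```

If newly proposed instances keep entering the reachable set before they commit, this check keeps returning False. The livelock discussed in this section shows up in exactly this way.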

EPaxos instances are the vertices of a graph, and the deps of each instance are the outgoing edges of its vertex. Once the values and deps of the instances have reached consensus, all replicas agree on the vertices and edges of the graph, so every replica has the same dependency graph.

The way EPaxos sorts instances is similar to a deterministic topological sort of this graph. Note, however, that dependencies among EPaxos instances can form cycles, so the graph may contain loops. Therefore, the process is not strictly a topological sort.

To handle cyclic dependencies, the EPaxos sorting algorithm finds the strongly connected components of the graph. Every loop lies within a strongly connected component. If each strongly connected component is collapsed into a single vertex, the components form a directed acyclic graph (DAG). A topological sort is then performed on this DAG of strongly connected components.

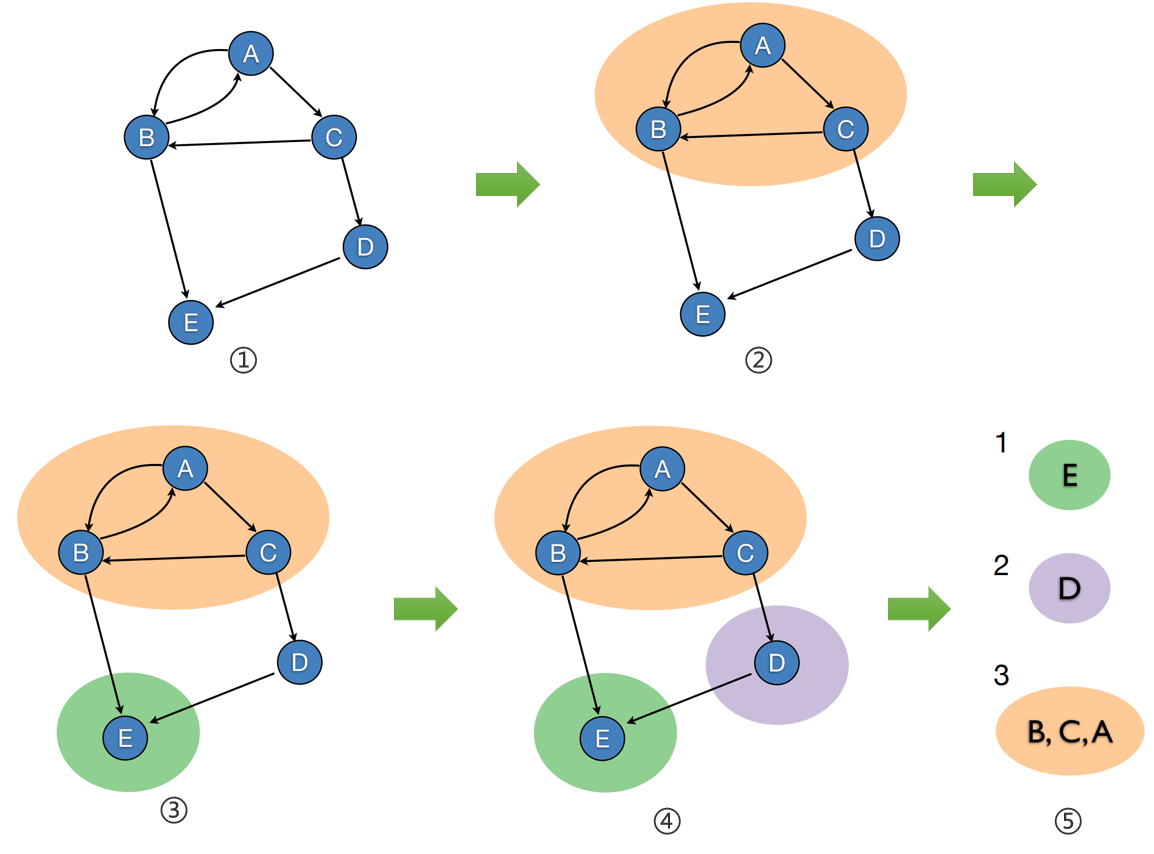

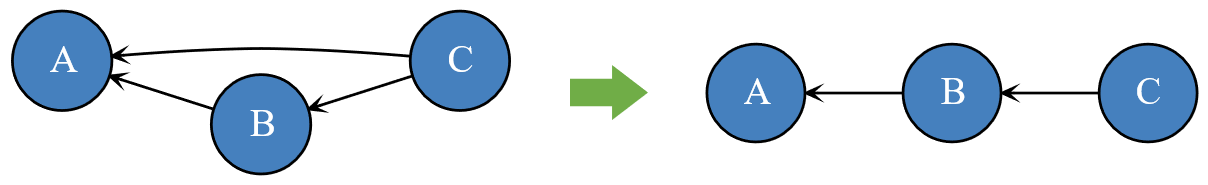

Figure 1 shows the process of the EPaxos sorting algorithm. The regions highlighted with a background color are the strongly connected components:

Figure 1: Sorting algorithm of EPaxos

Tarjan's algorithm, a recursive algorithm, is commonly used to find the strongly connected components of a graph. In testing, recursive implementations easily overflow the stack on deep dependency graphs, which poses challenges for engineering applications.
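One way around the recursion problem is to run Tarjan's algorithm with an explicit work stack. The following Python sketch is one such iterative version (`strongly_connected_components` is a hypothetical name). Because edges point from an instance to its deps, the order in which Tarjan's algorithm completes the components already places every component after the components it depends on, which is the order needed for execution:

```python
def strongly_connected_components(graph):
    """graph: dict node -> iterable of nodes it depends on (its outgoing edges).
    Returns SCCs (lists of nodes); each SCC comes after every SCC it depends on."""
    index, lowlink = {}, {}
    stack, on_stack = [], set()
    sccs, counter = [], 0

    def visit(node):
        # Assign a discovery index, push onto the Tarjan stack, return a work item.
        nonlocal counter
        index[node] = lowlink[node] = counter
        counter += 1
        stack.append(node)
        on_stack.add(node)
        return node, iter(sorted(graph.get(node, ())))

    for root in sorted(graph):                # sorted roots keep the result deterministic
        if root in index:
            continue
        work = [visit(root)]                  # explicit DFS stack replaces recursion
        while work:
            node, successors = work[-1]
            descended = False
            for succ in successors:
                if succ not in index:         # tree edge: descend into succ
                    work.append(visit(succ))
                    descended = True
                    break
                if succ in on_stack:          # edge back into the current SCC candidate
                    lowlink[node] = min(lowlink[node], index[succ])
            if descended:
                continue
            work.pop()                        # all successors of node are done
            if work:                          # propagate lowlink to the DFS parent
                parent = work[-1][0]
                lowlink[parent] = min(lowlink[parent], lowlink[node])
            if lowlink[node] == index[node]:  # node is the root of an SCC
                scc = []
                while True:
                    member = stack.pop()
                    on_stack.discard(member)
                    scc.append(member)
                    if member == node:
                        break
                sccs.append(scc)
    return sccs


print(strongly_connected_components({"X": ["Y"], "Y": ["X"], "Z": ["X"]}))
```

Python's default recursion limit of 1000 frames would make a naive recursive version fail on long dependency chains. The explicit stack here is bounded only by memory.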

Instances in different strongly connected components are ordered according to the deterministic topological order. Instances within the same strongly connected component were proposed concurrently, so in theory they can be ordered by any deterministic rule. EPaxos computes a seq for each instance that reflects the order in which the instances were proposed, and it sorts instances within the same strongly connected component by seq. In practice, however, seq values can repeat, because they are not guaranteed to be globally unique or increasing. The EPaxos papers do not account for this, but seq combined with the replica ID can be used for sorting.
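The following minimal Python sketch shows this ordering step. It assumes the strongly connected components have already been computed in dependency-first order, and it represents instance IDs as hypothetical `(replica, index)` tuples. After seq and the replica ID, it also uses the per-replica index as a final tie-breaker. That extra key is an assumption added here so that the sort key is always unique:

```python
def order_within_scc(scc, seq):
    """Sort one SCC by (seq, replica ID, per-replica index)."""
    return sorted(scc, key=lambda iid: (seq[iid], iid[0], iid[1]))


def execution_order(sccs, seq):
    """sccs: components listed so that each comes after the ones it depends on."""
    order = []
    for scc in sccs:
        order.extend(order_within_scc(scc, seq))
    return order


seq = {(1, 1): 1, (2, 1): 2, (1, 2): 3, (5, 1): 3}
sccs = [[(1, 1)], [(5, 1), (1, 2), (2, 1)]]  # the second SCC contains a cycle
print(execution_order(sccs, seq))  # [(1, 1), (2, 1), (1, 2), (5, 1)]
```

Here instances 1.2 and 5.1 share seq 3, and the replica ID breaks the tie.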

In addition, as new instances keep arriving, an old instance may depend on new instances, which may in turn depend on even newer instances. The dependency chain can keep extending without end, so the sorting process can never complete and a livelock results. This is another major challenge in engineering applications of EPaxos.

Because the instance sorting algorithm is deterministic, all replicas that sort instances based on the same dependency graph obtain the same instance sequence.

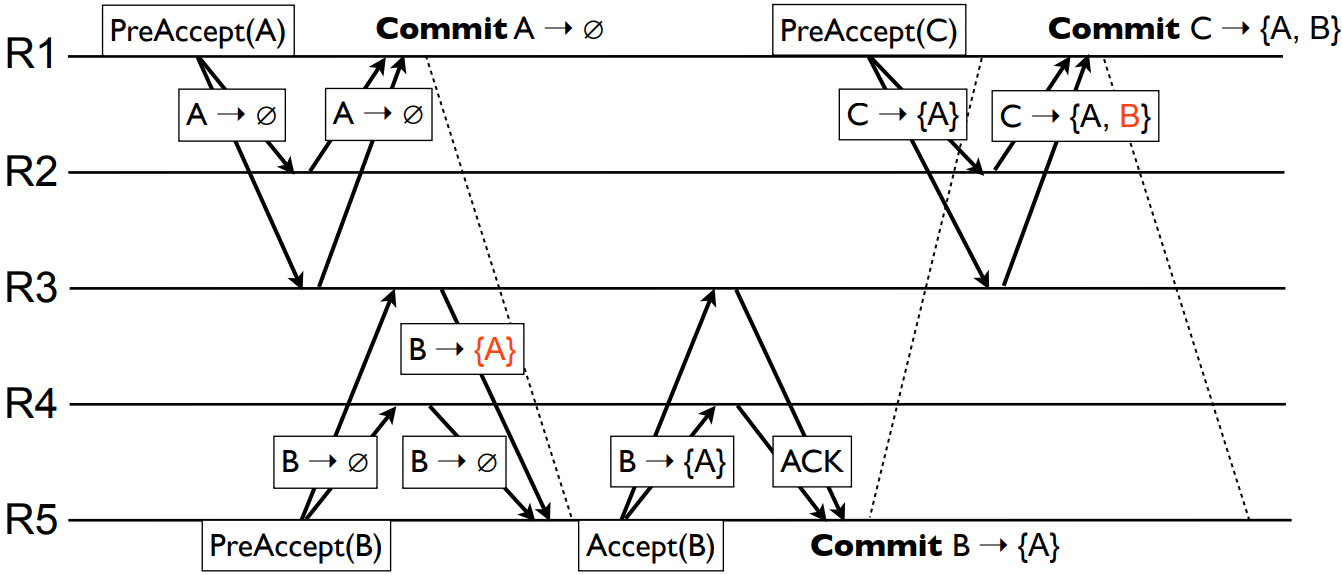

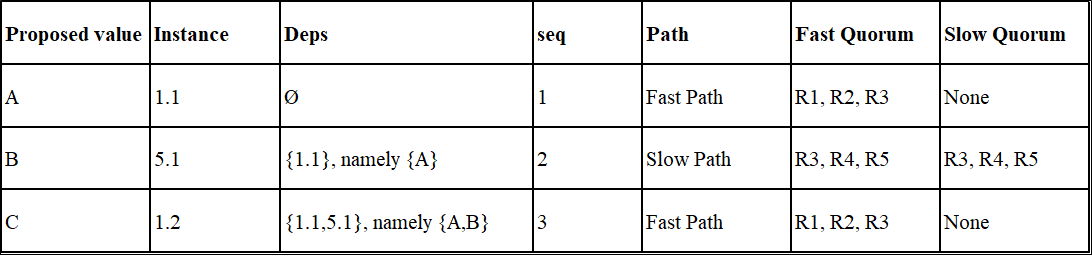

The following case illustrates the core protocol process of EPaxos, as shown in Figure 2. The system consists of five replicas: R1, R2, R3, R4, and R5. The horizontal axis represents time, and the figure shows how values A, B, and C are proposed. Table 3 lists the property values of each instance in this case.

Figure 2: Consensus protocol of EPaxos

First, R1 starts the PreAccept stage for value A. It computes its local deps and local seq. Since it has no local instances yet, its local deps is an empty set and its local seq takes the initial value of 1. R1 then persists A together with its local deps and local seq.

R1 then broadcasts PreAccept(A) to R2 and R3. The message carries value A, the local deps, and the local seq (not shown in the figure). R1, R2, and R3 form a Fast Quorum. Sending PreAccept only to the replicas in the Fast Quorum is an optimization that the EPaxos papers call the thrifty optimization.

After receiving PreAccept(A), R2 and R3 each compute their local deps and local seq. As with R1, the local deps is an empty set and the local seq is 1. After persisting them, both reply to R1.

Because all replicas in the Fast Quorum return the same local deps and local seq, R1 reaches a decision. The final deps is an empty set, the final seq is 1, and the instance is committed in the Commit stage.

Next, R5 starts the PreAccept stage for value B. R5 has no local instances at this point, so its local deps is an empty set and its local seq is the initial value of 1. After persisting them locally, R5 broadcasts PreAccept(B) to R3 and R4.

R4 replies with an empty local deps and a local seq of 1. R3, however, already holds the instance for A, so it replies with local deps {1.1} ({A} in the figure) and local seq 2, which is the seq of instance A plus one.

Because the replicas in the Fast Quorum return different local deps and seq, the Accept stage must run. The final deps is {1.1} ({A} in the figure), the union of the local deps returned by a majority of replicas. The final seq is 2, the maximum of their local seq values. The Accept stage replicates B, the final deps, and the final seq to a majority, and the instance is then committed in the Commit stage.

Finally, R1 starts the PreAccept stage for value C. R1 already holds the instance for A locally, so its local deps is {1.1} ({A} in the figure) and its local seq is 2 (the seq of A plus one). After persisting them locally, R1 broadcasts PreAccept(C) to R2 and R3.

By this time, R2 and R3 already hold the instances for both A and B, so both reply with local deps {1.1, 5.1} ({A, B} in the figure) and local seq 3, which is the seq of B plus one.

Within the Fast Quorum, all replicas other than the proposer R1 returned identical local deps and seq, so a decision is reached on the fast path. The final deps is {1.1, 5.1} ({A, B}), and the final seq is 3. The instance is then committed in the Commit stage.
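The three proposals can be replayed with a small Python simulation. This simplified sketch makes several assumptions. Acceptors merge the proposer's deps and seq with their own local instances, and no conflict filtering is applied. The Accept round is reduced to storing the merged result. The line that delivers B's Commit to R2 (but not yet to R1) is an assumption made to match the timing in Figure 2:

```python
def preaccept_reply(proposer_deps, proposer_seq, local):
    """Acceptor side: merge the proposer's attributes with local instances.
    local: dict instance_id -> seq of instances this replica has seen."""
    deps = frozenset(proposer_deps) | frozenset(local)
    seq = max([proposer_seq] + [s + 1 for s in local.values()])
    return deps, seq


stores = {r: {} for r in range(1, 6)}  # replica -> {instance_id: seq} it has seen


def propose(proposer, iid, others):
    """Run PreAccept from the proposer to the other Fast Quorum members."""
    local = stores[proposer]
    deps, seq = frozenset(local), 1 + max(local.values(), default=0)
    replies = [preaccept_reply(deps, seq, stores[r]) for r in others]
    if all(rep == replies[0] for rep in replies):
        path, (deps, seq) = "fast", replies[0]
    else:
        path = "slow"                    # Accept round simplified to this merge
        deps = deps.union(*(d for d, _ in replies))
        seq = max([seq] + [s for _, s in replies])
    for r in [proposer, *others]:
        stores[r][iid] = seq             # these replicas now hold the instance
    return path, sorted(deps), seq


a_res = propose(1, "1.1", [2, 3])        # value A
b_res = propose(5, "5.1", [3, 4])        # value B
stores[2]["5.1"] = 2                     # assumed: B's Commit reaches R2 but not yet R1
c_res = propose(1, "1.2", [2, 3])        # value C
print(a_res, b_res, c_res, sep="\n")
```

The resulting paths and properties (A: fast, deps {}, seq 1; B: slow, deps {1.1}, seq 2; C: fast, deps {1.1, 5.1}, seq 3) match the walkthrough above.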

Table 3: Properties of the instances in the core protocol process case of EPaxos

Figure 3 (left) shows the dependencies among the instances for A, B, and C, derived from their deps:

Figure 3: The dependency of proposed values A, B, and C (left), and their order after sorting (right)

The deps of instance A is an empty set, so it has no outgoing edges. The deps of instance B is {A}, so it has one outgoing edge pointing to A. The deps of instance C is {A, B}, so it has two outgoing edges, pointing to A and B respectively.

This dependency graph contains no cycles, so it is already a DAG. Each of the vertices A, B, and C is therefore its own strongly connected component, and a deterministic topological sort yields the order A <- B <- C, shown in Figure 3 (right).
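For an acyclic graph like this one, Python's standard `graphlib` module (Python 3.9 and later) is enough to reproduce the order. This sketch maps each instance to its deps, which `TopologicalSorter` treats as predecessors. For this chain the order is unique. A general DAG would also need a deterministic tie-break rule shared by all replicas:

```python
from graphlib import CycleError, TopologicalSorter

deps = {"A": set(), "B": {"A"}, "C": {"A", "B"}}
order = list(TopologicalSorter(deps).static_order())
print(order)  # ['A', 'B', 'C']

# A cyclic dependency graph cannot be topologically sorted, which is why the
# general EPaxos ordering works on strongly connected components instead.
cycle_found = False
try:
    list(TopologicalSorter({"X": {"Y"}, "Y": {"X"}}).static_order())
except CycleError:
    cycle_found = True
print(cycle_found)  # True
```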

EPaxos introduces the concept of instance conflict (interference). It is similar to the concept in ParallelRaft but is not the same as a concurrency conflict. If the values of two instances do not conflict, for example because they access different keys, their relative order does not matter, and they can even be processed in parallel. Therefore, EPaxos only tracks dependencies between conflicting instances.

Without conflicts, the deps of an EPaxos instance stores every instance that must execute before it. Once conflicts are introduced, deps stores only the earlier instances that conflict with this instance.
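The following sketch computes conflict-aware deps for a key-value store. It assumes, hypothetically, that two commands conflict exactly when they access the same key. A real application would define its own conflict rule:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    key: str
    value: str


def conflicts(a: Command, b: Command) -> bool:
    """Assumed rule: commands on the same key interfere; all others commute."""
    return a.key == b.key


def local_deps(cmd, local):
    """local: dict instance_id -> (Command, seq). Only conflicting instances become deps."""
    conflicting = {iid: s for iid, (c, s) in local.items() if conflicts(cmd, c)}
    seq = 1 + max(conflicting.values(), default=0)
    return frozenset(conflicting), seq


local = {"1.1": (Command("x", "1"), 1), "5.1": (Command("y", "2"), 4)}
deps, seq = local_deps(Command("x", "3"), local)
print(sorted(deps), seq)  # ['1.1'] 2
```

Instance 5.1 touches a different key, so it is excluded from deps and its larger seq does not affect the new instance.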

Conflict is an application-defined concept. Once it is introduced, instances are no longer totally ordered but only partially ordered. This reduces dependencies and the probability of taking the slow path, which improves efficiency.

The derivation of the Fast Quorum size will be described in a subsequent article. This article only explains why the Fast Quorum in the PreAccept stage always contains the proposer.

At each stage of EPaxos, the proposer always persists locally first and then broadcasts the message to the other replicas. In other words, the proposer is always part of the quorum, so it always counts as one vote when checking whether a quorum has been reached.

In the PreAccept stage, the proposer includes its local deps and seq in the PreAccept message, and the other replicas use them as the basis for computing their own local deps and seq. As a result, the proposer's local deps and seq are always included in the final deps and seq, so the Fast Quorum in the PreAccept stage always contains the proposer.

Because EPaxos always broadcasts to the other replicas only after local persistence, the Fast Quorum can be made smaller. However, local persistence and network messaging then cannot proceed concurrently, which reduces efficiency. In addition, the proposer cannot tolerate local disk damage. Engineering applications of EPaxos must address these problems.

Why is the Fast Quorum never smaller than the Slow Quorum? This can be seen without deriving the Fast Quorum size. In Paxos, a replica proposes a single value, and every replica has only two possible responses: accept the value or not. In EPaxos, however, each replica may return different deps and seq. More replicas must therefore return the same result to ensure that the correct result can be recovered when a replica fails.

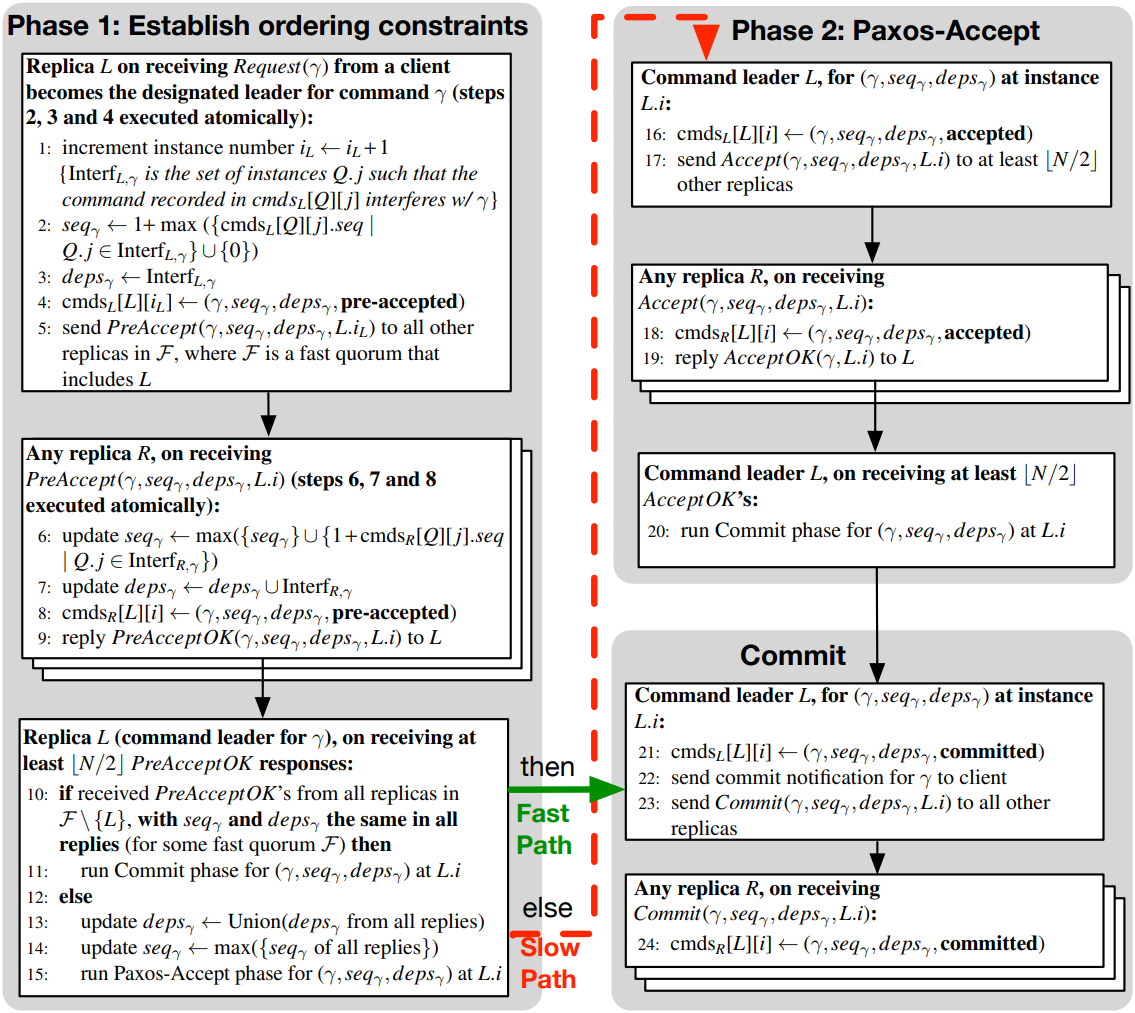

At this point, readers should be able to follow the pseudocode of the EPaxos core protocol process shown in Figure 4. For simplicity, the parts related to the proposal ID (the ballot number) are omitted, since they work the same way as in Paxos.

In the pseudocode, log entries are treated as commands. Each instance reaches consensus on one command, and each replica stores the commands it receives in a two-dimensional array, cmds.

Figure 4: The pseudocode of EPaxos core protocol process

By explicitly maintaining the dependencies between instances, EPaxos not only removes the dependency on a leader but also allows instances to be committed concurrently and out of order, which enables better pipelining. Explicit dependency tracking also makes out-of-order execution possible. Because EPaxos supports out-of-order acknowledgment, commit, and execution, it can in theory achieve higher throughput. However, several challenges remain in applying EPaxos, and an engineering implementation must solve all of them.

This article described the core protocol process of EPaxos by comparing it with Paxos. However, this is far from everything there is to EPaxos, especially regarding how the order of logs is guaranteed during failover. Readers will likely have many questions after reading.

Here are a few questions for interested readers to think about:

The answers will be given in the subsequent article of the EPaxos trilogy. It will introduce the Failover scenario and engineering practical experience of EPaxos. Please stay tuned.
