By Yan Xiangguang (Xiangguang)

Distributed consensus, the cornerstone of a distributed system, has been a focus in the computing system field. In recent years, with the scale-up of the distributed system, increasing requirements for its availability and consistency has emerged, leading to an increased usage of distributed consensus.

Throughout the application of distributed consensus in many industry sectors, from the dominance of Paxos to the birth of Raft, and we have seen rapid evolution of this technology, including the leaderless EPaxos that has attracted much attention.

This article will discuss the application of distributed consensus in the industry from a technical perspective and make a comparative analysis in terms of understandability, availability, efficiency, and applicable scenarios.

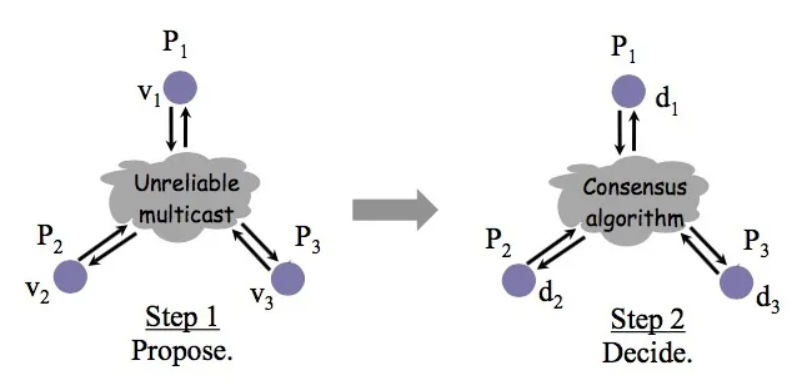

Distributed consensus, in short, is to make all processes in a system to agree on a single value after one or more processes propose this value.

To reach an agreement on a certain value, each process can put forward its own proposal. Finally, by using the distributed consensus algorithm, all properly running processes learn the same value.

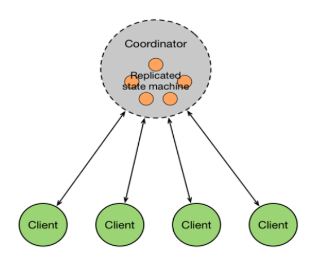

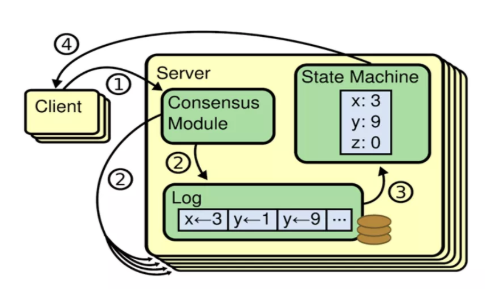

The industrial application of distributed consensus is to build the multi-replicated state machine model to achieve high availability and strong consistency.

Distributed consensus allows multiple machines to share the same state and run the same deterministic state machine, so that the entire machine can continue working normally when a few machines fail.

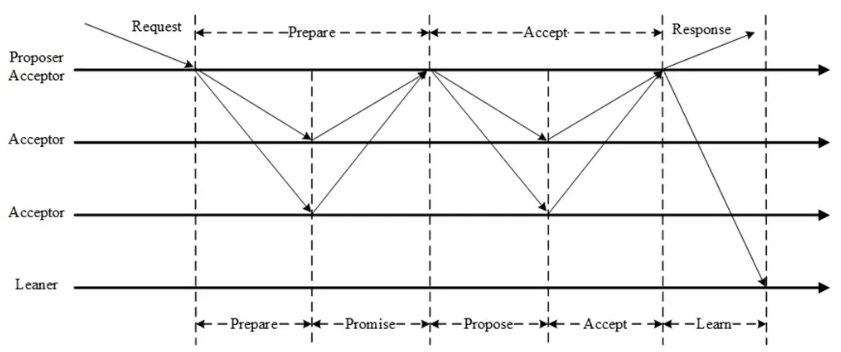

As one of the main algorithms in distributed consensus, Paxos requires at least two phases to reach an agreement: the preparation phase (the request to prepare) and the acceptance phase (the request to accept).

The preparation phase implements the following purposes:

The acceptance stage collects proposals into a majority. Once the majority of proposals is determined, the resolution is reached and can be learned. If the acceptance phase is rejected, the preparation phase needs to be gone through again.

The basic Paxos algorithm requires at least two network trips to reach a resolution. In the case of concurrency, the algorithm might need more. In extreme cases, it might even produce a livelock, which is inefficient. To address this issue, Multi-Paxos was proposed.

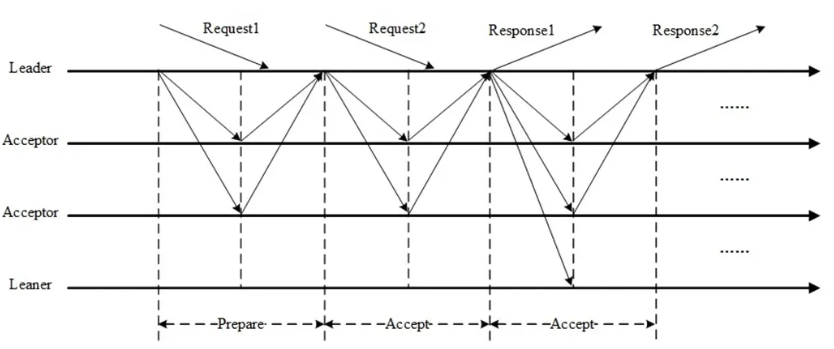

Multi-Paxos elects a leader, and the proposal is initiated by the leader. Due to zero competitions, the livelock problem is eliminated. If all proposals are initiated by the leader, the preparation phase can be skipped to change the two-phase process to a one-phase process, which improves the efficiency. Multi-Paxos does not assume a unique leader. Instead, it allows multiple leaders to propose requests concurrently without affecting safety. In extreme cases, it degrades to Basic Paxos.

The difference between multi-Paxos and Basic Paxos does not lie in multiplicity, because Basic Paxos also supports multiplicity. The point is that Multi-Paxos can be optimized to skip the preparation phase and directly enter the acceptance phase when the same proposer makes continuous proposals.

Unlike Paxos, which is derived directly from the distributed consensus problem, Raft is proposed from the multi-replicated state machine. It uses stronger assumptions to reduce the states to be considered, making it easy to understand and implement.

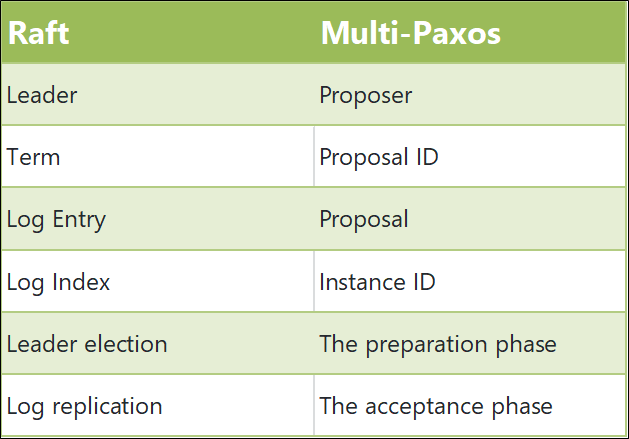

Raft and Multi-Paxos are highly related. The following sections summarize the similarities and differences between Raft and Multi-Paxos.

Raft and Multi-Paxos have the following similar concepts:

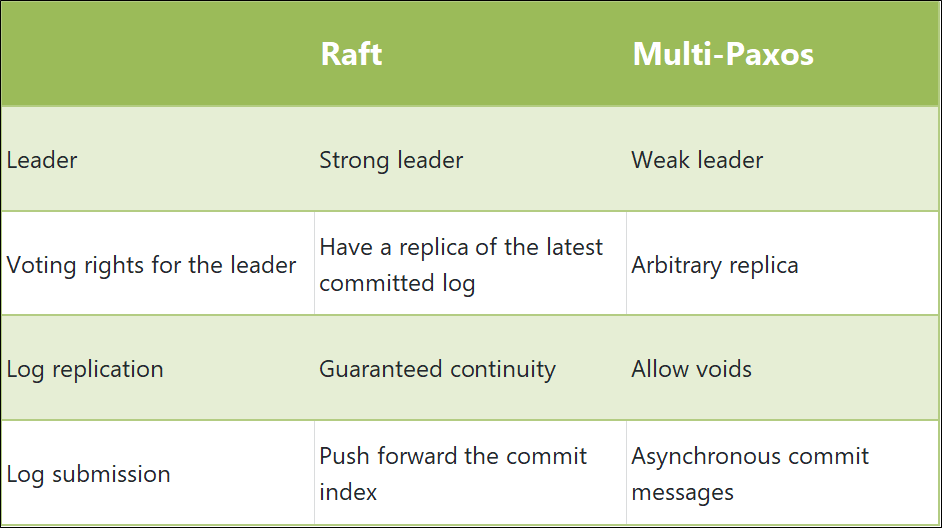

Differences between Raft and Multi-Paxos are as follows:

Raft assumes that the system has at most one leader at any time, and proposals can only be sent by a leader (strong leader). Otherwise, the correctness is affected. Multi-Paxos also elects leaders, but this is for efficiency improvement purposes only. Multi-Paxos does not limit that only the leader (weak leader) can propose proposals.

In engineering, the strong leader usually uses "Leader Lease" and "Leader Stickiness" to ensure the following conditions:

Raft has a restriction in which nodes with the latest submitted logs are only eligible to be leaders. In contrast, Multi-Paxos does not have this restriction.

Raft checks log continuity before confirming a log. If log discontinuity is detected, the log is rejected to ensure log continuity. Multi-Paxos skips this check and allows voids in the log.

Raft carries the commit index of the leader in AppendEntries. Once the majority of logs is determined, the leader updates the local commit index to complete the commit, and the next AppendEntries carries a new commit index and notify other nodes. In comparison, Multi-Paxos does not make any log connectivity assumptions and requires additional commit messages to notify other nodes.

Egalitarian Paxos (EPaxos) was put forward by SOSP'13 and come out earlier than Raft. However, when Raft was popular in the industry, no one noticed EPaxos for a long time until recently.

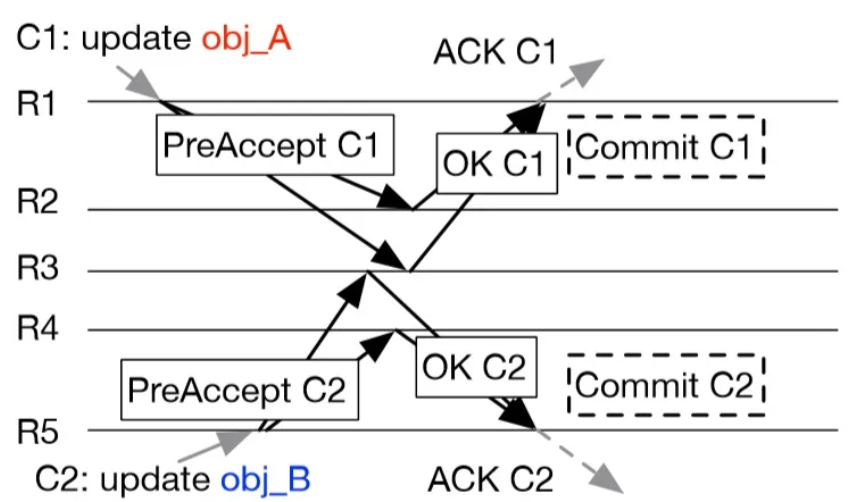

EPaxos is a leaderless consensus algorithm and allows any replica to submit logs. Generally, a log submission requires one or two network trips.

EPaxos has no leader election overhead. When one replica becomes unavailable, other replicas can be accessed immediately, achieving higher availability. Each replica is load balanced without leader bottlenecks and has a higher throughput. The client can choose the nearest replica to provide service, reducing latency in cross-available-zone (AZ) and cross-region scenarios.

Unlike Paxos or Raft, all instance numbers are sorted in advance. Then, the value of each instance is agreed on. Rather than determining the order of instances in advance, EPaxos dynamically determines the order of instances during runtime. EPaxos not only agrees on the value of each instance, but also the relative order among the instances. EPaxos regards the relative order of different instances as a consistency problem, and reaches an agreement among the replicas. In this way, each replica can concurrently propose a proposal in its own instance. After the values and relative order of these instances are agreed on, they are reordered based on the relative order and finally applied to the state machine in the new order.

From the perspective of graph theory, the log is a node of the graph, and the order between the logs is the edge of the graph. EPaxos reaches an agreement on nodes and edges respectively, and then uses topological sorting to determine the order of logs. Given that a loop can incur in the figure, EPaxos needs to handle the circular dependency problem.

EPaxos introduces the concept of log conflict, which is similar to parallel Raft but not the same concept as concurrency conflict. If no conflict exists between logs (such as accessing different keys), their relative order is irrelevant. Therefore, EPaxos only handles the relative order between conflicting logs.

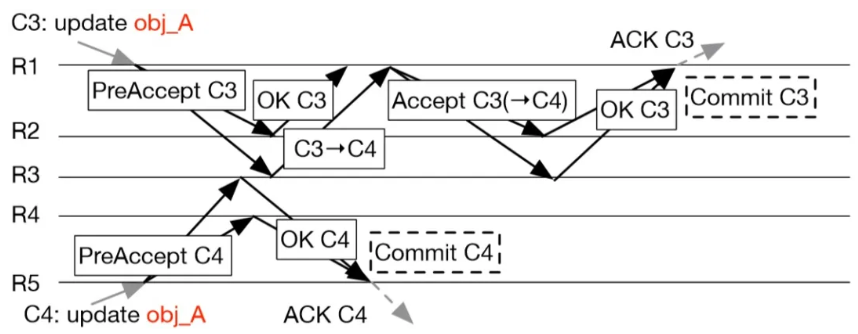

If no conflict exists among concurrent proposed logs, EPaxos only needs to go through the pre-acceptance phase (request to pre-accept) to commit (resulting in a fast path). Otherwise, it needs to go through the acceptance phase to commit (resulting in a slow path).

In the pre-acceptance phase, EPaxos attempts to reach an agreement on the relative order between the log and other logs and maintain the conflict relationship between the log and other logs. If no conflicts are found between the log and other concurrently proposed logs after the pre-acceptance phase ends, the relative order of the logs is agreed on, and EPaxos can directly send an asynchronous commit message for submission. If conflicts are found between the log and other concurrent proposed logs, the relative order of the logs is not agreed on. As a result, EPaxos must go through the log acceptance phase to reach a majority of the conflicting dependencies and then sends a commit message to commit.

The fast path quorum of EPaxos is 2F and can be optimized to F+[(F+1)/2]. It is consistent with that of Paxos and Raft with three and five replicas. The slow path relates to the Paxos acceptance phase, and the quorum value is fixed to F+1.

EPaxos also has an active learning algorithm, which can be used to catch up with logs during recovery. This article does not introduce the algorithm in detail. If you are interested, read the relevant thesis.

From Paxos to Raft and then to EPaxos, a comparison among the algorithms can be conducted to present the evolution. The following section mainly compares and analyzes the algorithms in terms of understandability, efficiency, availability, and applicable scenarios.

As we all know, Paxos is notoriously obscure. It is not only difficult to understand, but also difficult to implement. On the other hand, Raft, however, aims at improved understandability and easy implementation. It greatly reduces the threshold of using distributed consensus and makes distributed consensus more popular. After Raft was proposed, it quickly gained popularity and greatly promoted the engineering application of distributed consensus.

EPaxos was put forward even earlier than Raft, but attained little attention for a long time. One of the reasons is that EPaxos is difficult to understand. EPaxos is based on Paxos, but is more difficult to understand than Paxos, which greatly hinders the engineering application of EPaxos. However, gold always glows. Due to its unique advantages, EPaxos has gained popularity and is quite prospective.

Has any efficiency improvement been achieved from Paxos to Raft and then to EPaxos? The following sections compare Multi-Paxos, Raft, and EPaxos in terms of load balancing performance, message complexity, pipelining, and concurrent processing.

The load of Multi-Paxos and Raft leaders is higher than that of EPaxos, and the load among the replicas is unbalanced, so the leader is easy to become a bottleneck. However, EPaxos does not require a leader, and the load among replicas is fully balanced.

After a leader is elected by Multi-Paxos and Raft, only one network round trip is required to submit a log in most cases. However, Multi-Paxos introduces additional asynchronous commit message submission. Raft only needs to push forward the local commit index without using additional messages. EPaxos requires one or two network round trips based on the log conflict. Therefore, Raft has the lowest message complexity, followed by Paxos. EPaxos has the highest.

We divide pipelines into sequential pipelines and disordered pipelines. Multi-Paxos and EPaxos support disordered pipelines, whereas Raft only supports sequential pipelines due to the log continuity assumption. However, Raft can also realize disordered pipelines. It only needs to maintain a TCP-like sliding window for each follower on the leader and a receiving window corresponding to each follower. The log of the receiving window is allowed to be discontinuous, and there are continuous logs outside the window. Once the log is continuous, the window slides forward, and the pipeline in the window can be disordered.

Multi-Paxos adopts the Paxos policy. Once a concurrency conflict is detected, the system rolls back and retries until it succeeds. Raft uses the strong leader to avoid concurrency conflicts, so the follower does not compete with the leader, avoiding concurrent conflicts. EPaxos directly handles concurrency conflicts and treats conflicting dependencies as a consistency problem to resolve concurrency conflicts. In short, Paxos adopts conflict fallback, Raft adopts conflict avoidance, and EPaxos adopts conflict resolution. Logs for Paxos and Raft are linear. Logs for EPaxos are graph-like. Therefore, EPaxos features better parallelism and higher throughput.

Any replica in EPaxos can provide services. When a replica becomes unavailable, the service can be immediately switched to another replica, with a minor impact on availability. However, Multi-Paxos and Raft depend on the leader. If the leader becomes unavailable, leader reelection is required. As a result, the service is unavailable until a new leader is elected. Obviously, EPaxos has better availability than Multi-Paxos and Raft. Between Multi-Paxos and Raft, which has better availability than the other?

Raft adopts the strong leader. The follower must wait for the lease of the old leader to expire before launching an election. Multi-Paxos adopts the weak leader. The follower can run for the leader at any time. Although Multi-Paxos might compromise the efficiency, it can restore services quicker when the leader fails. Therefore, Multi-Paxos has better availability than Raft.

EPaxos is more applicable to cross-AZ and cross-region scenarios. In scenarios with demanding usability requirements, the leader is prone to creating a bottleneck. Multi-Paxos and Raft have similar application scenarios, such as the internal network. For general high-availability scenarios, the leader is less prone to creating a bottleneck.

6 posts | 2 followers

FollowXiangguang - August 31, 2021

Xiangguang - January 28, 2021

Alibaba Cloud Native - October 12, 2024

Alibaba Clouder - April 11, 2019

Alibaba Cloud_Academy - September 9, 2022

Wei Kuo - August 30, 2019

6 posts | 2 followers

Follow Apsara Stack

Apsara Stack

Apsara Stack is a full-stack cloud solution created by Alibaba Cloud for medium- and large-size enterprise-class customers.

Learn More ECS(Elastic Compute Service)

ECS(Elastic Compute Service)

Elastic and secure virtual cloud servers to cater all your cloud hosting needs.

Learn More Super Computing Cluster

Super Computing Cluster

Super Computing Service provides ultimate computing performance and parallel computing cluster services for high-performance computing through high-speed RDMA network and heterogeneous accelerators such as GPU.

Learn More Elastic High Performance Computing

Elastic High Performance Computing

A HPCaaS cloud platform providing an all-in-one high-performance public computing service

Learn MoreMore Posts by Xiangguang