This article describes how to synchronize data from databases (DBs) to Elasticsearch in real time.

Released by ELK Geek

In the article "Combining Elasticsearch with DBs: Application System Scenarios ", we described multiple types of model mappings used in combination scenarios, and discussed how an application business system uses Elasticsearch to address the limitations of DBs.

This article describes how to synchronize database (DB) data to Elasticsearch in real time.

Background

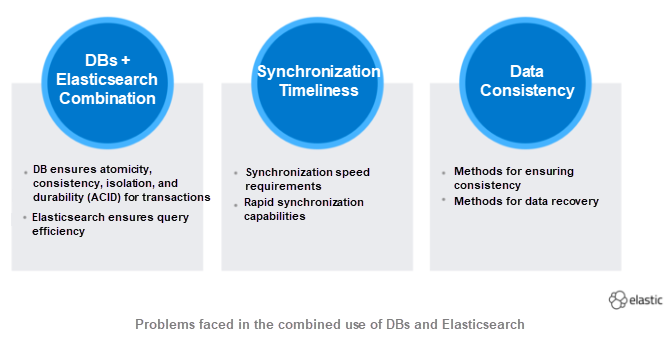

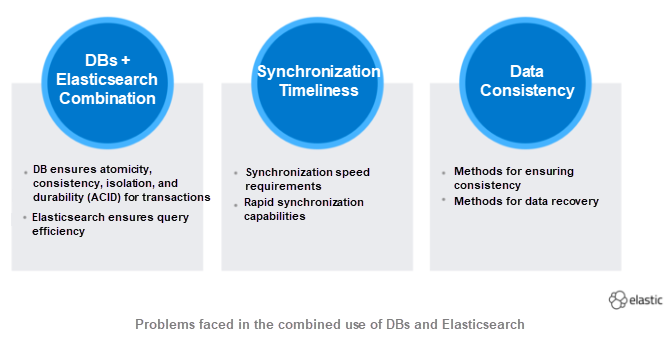

Databases (DBs) and Elasticsearch are essentially database products designed for different application fields. When we use DBs and Elasticsearch together, we are confronted with two types of problems:

-

Synchronization timeliness: How long does it take to synchronize a DB data update to Elasticsearch? What is the maximum wait time acceptable to an application system? Generally, we must keep the synchronization time under one second. If it takes more than one minute, the process is considered offline synchronization.

-

Data consistency: When DB data is frequently changed and modified, how can we ensure data consistency between Elasticsearch and the DBs? Within an allowed time range, data obtained by the application system through a query is valid and acceptable. When the DB data is overwritten due to a change, the obtained data becomes invalid and unacceptable to the application system.

Synchronization Modes

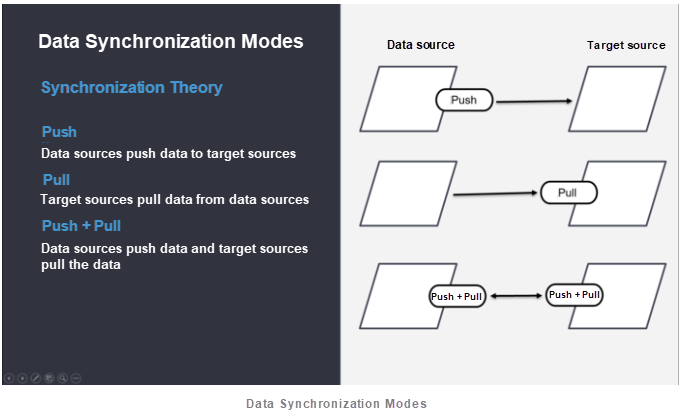

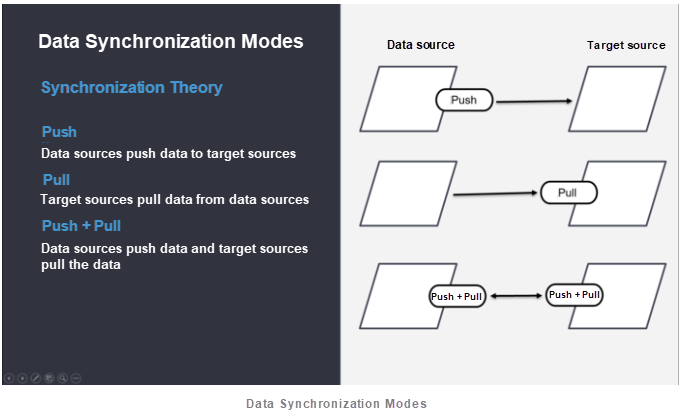

Three data synchronization modes are available:

-

Push: The data source pushes change data to the target source. For example, in a RabbitMQ-based architecture, a server actively sends a message queue (MQ) to a client.

-

Pull: The target source regularly pulls change data from the data source. For example, according to the mechanism of data synchronization between primary and secondary MySQL databases, the primary database pulls change data from the secondary database.

-

Push and pull: Data is both pushed by the data source and pulled by the target source. This mode is usually implemented through intermediate media. For example, in a Kafka-based log application, the data source (collection side) sends log data to the Kafka cluster, and the target source regularly pulls data from the Kafka cluster for updates.

Technical Solutions

Technically, there are multiple ways to synchronize DB data to Elasticsearch.

-

Synchronous double-write: Elasticsearch is updated synchronously when the DB is updated. This technical solution is the simplest, but it faces the largest number of problems, including data conflicts, data overwriting, and data loss. Make your choice carefully.

-

Asynchronous double-write: When the DB is updated, an MQ is recorded and used to notify the consumer. This allows the consumer to backward query DB data so that the data is ultimately updated to Elasticsearch. This technical solution is highly coupled with business systems. Therefore, you need to compile programs specific to the requirements of each business. As a result, rapid response is not possible.

-

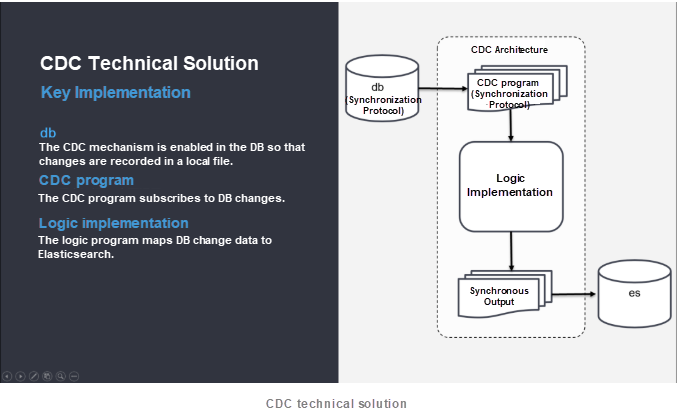

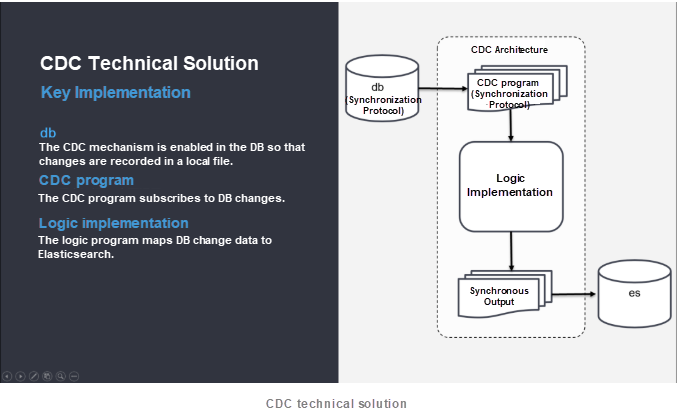

Change Data Capture (CDC): Change data is captured from the DB, pushed to an intermediate program, and synchronously pushed to Elasticsearch by using the logic of the intermediate program. Based on the CDC mechanism, accurate data is returned at an extremely fast speed in response to queries. This solution is less coupled to application programs. Therefore, it can be abstracted and separated from business systems, making it suitable for large-scale use. This is illustrated in the following figure.

The CDC mechanism was originally designed to synchronize data between databases of the same type. It is used for synchronization with high availability between primary and secondary databases. Therefore, data synchronization between databases of the same type is very easy to implement, This approach is naturally supported by database vendors and has proved to be efficient and reliable over years of practical application. On the contrary, data synchronization between heterogeneous databases is complex to implement due to long data pipelines and the number of technical issues involved, making each step critical. The following section describes how to implement the technology stack used by our company and some key technical issues.

Case Study: Synchronize Data from MySQL to Elasticsearch

Synchronizing data from MySQL to Elasticsearch involves the following key technical issues:

- Binlog mechanism

- Canal middleware

- Kafka middleware

- Synchronization application (or middleware in business-oriented cases)

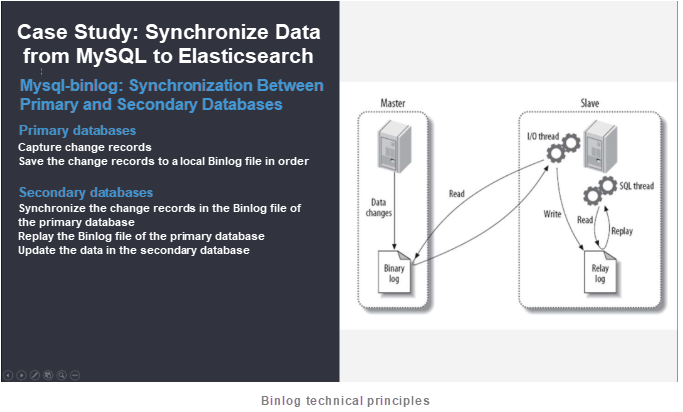

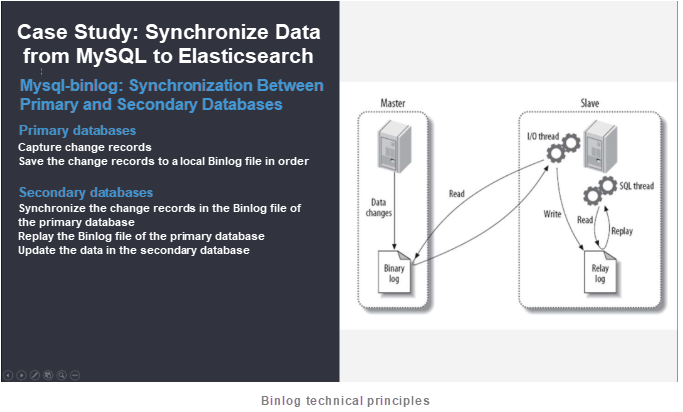

Binlog Mechanism

Binlog is a functional mechanism provided by MySQL. It was originally designed for synchronization between primary and secondary databases.

- The primary database writes data to a Binlog file when the Binlog mechanism is enabled.

- The secondary database pulls Binlog data from the primary database, replays the Binlog data, and updates data in the secondary database.

- When you enable Binlog, note that:

- A primary database is usually subscribed to by multiple secondary databases and must support real-time changes to the business system, resulting in a server resource bottleneck.

- Tables to be synchronized must have primary keys.

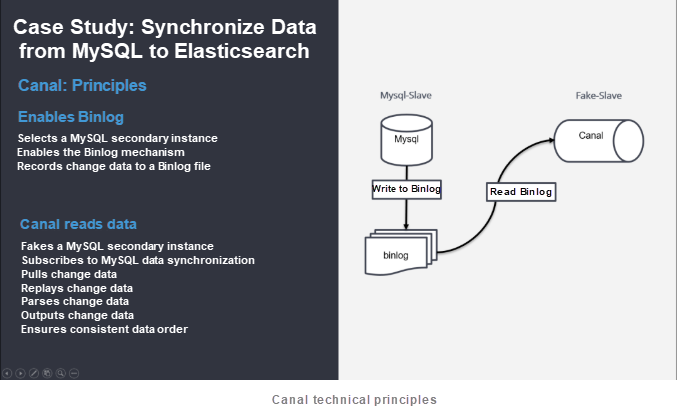

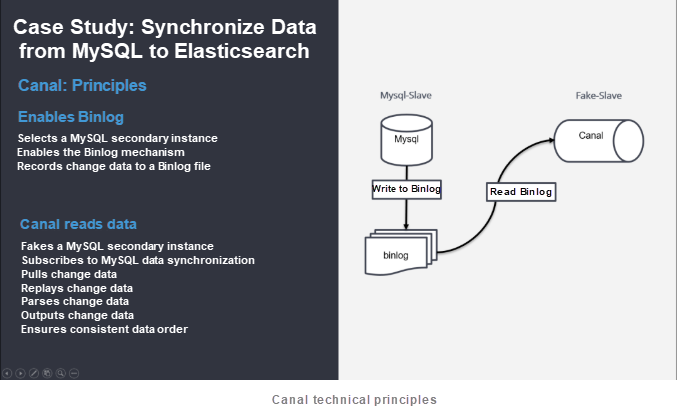

Canal Middleware

Canal is a MySQL middleware. It is specially designed for data synchronization.

- Canal fakes a secondary database.

- It pulls Binlog data.

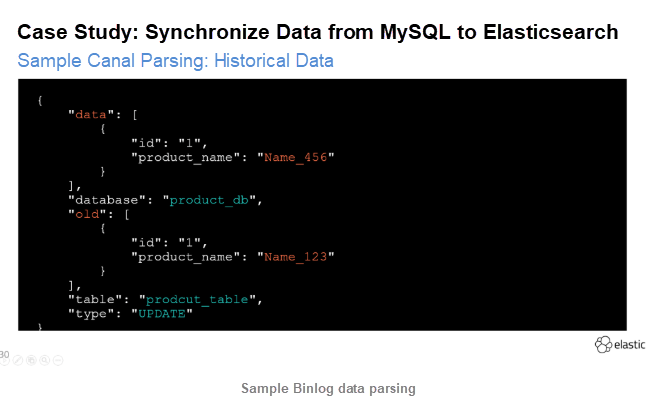

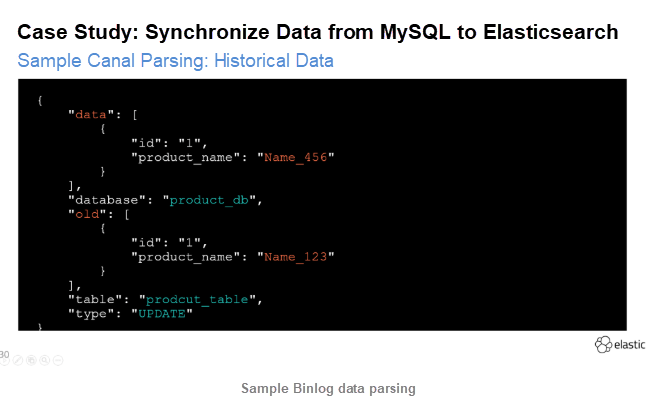

- It replays the Binlog data.

- It parses the Binlog data to a JSON file, using a message to record the previous data and new data, information about the database and table, and the type of change.

- It outputs the data according to the order of changes. Canal supports multiple destination sources and usually outputs data to Kafka middleware.

- When you configure Canal, note that:

- Configure Canal in cluster mode. Canal runs on Java Virtual Machines (JVMs) and its data processing capability is not as good as that of MySQL. One set of Canal clusters cannot support too many transactional database instances.

- Modify the Canal code when you implement horizontal sharding in the database. Otherwise, Canal cannot be identified as a data source.

- Make Canal subscribe to a secondary instance. This is because the primary business database handles too many business system responsibilities.

- Enable Global Transaction ID (GTID) for Binlog mode, so that you can switch to another database based on GTID if the database subscribed to by Canal fails.

- Write data to Kafka. If sharding is implemented in the database, modify the Canal code.

- Set one Kafka partition key to ensure a consistent data change order.

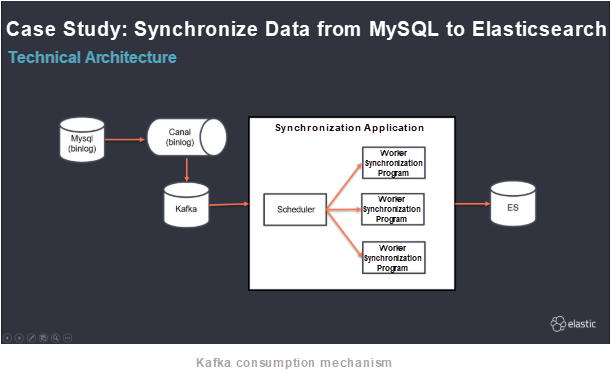

Kafka Middleware

Use Kafka as an intermediate cache based on the following considerations:

-

Partitioning: Kafka supports partitioning, has good concurrency performance, and can handle a greater data throughput than MySQL. Therefore, performance is not a bottleneck.

-

Ordered partition storage: Kafka stores data in order. Primary keys are set to ensure consistency with the order of Binlog data changes.

-

Ordered consumption: A client consumes Kafka data in a certain order based on the Offset mechanism. This ensures consistency with the order of Binlog data changes.

-

Consumer groups: Strictly speaking, Kafka is generally more concerned with message flows than MQs. According to the data model mapping requirements discussed in the previous article, one table can be mapped to multiple indexes. Therefore, you need to set up different consumer groups in such a way that they do not conflict with or overwrite each other while ensuring change data is repeatedly consumed.

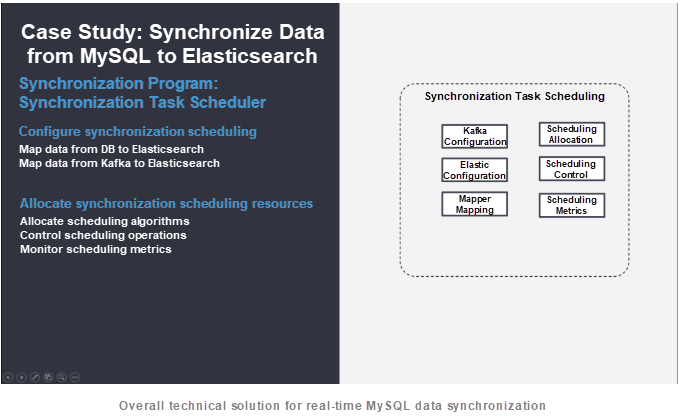

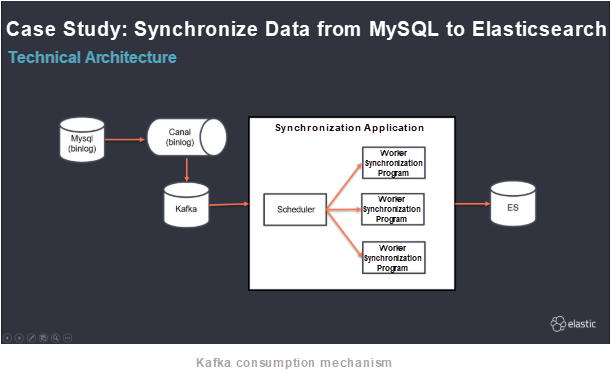

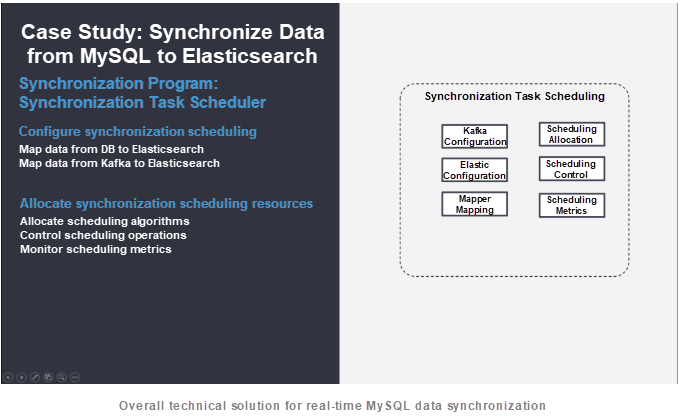

Synchronization Program

Synchronization programs are independently developed based on Java. Popular synchronization tools do not support a high degree of customization. The following two types of synchronization programs are available:

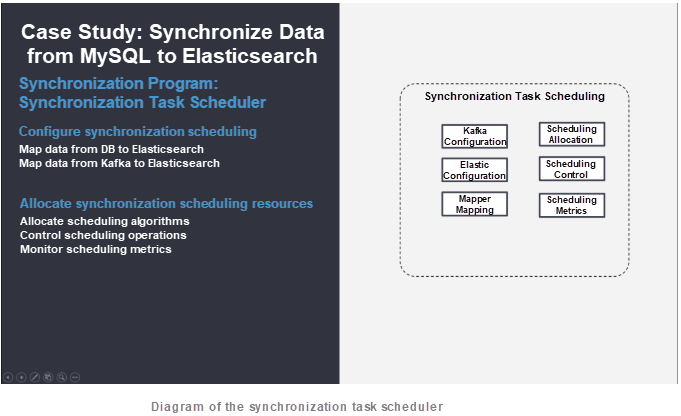

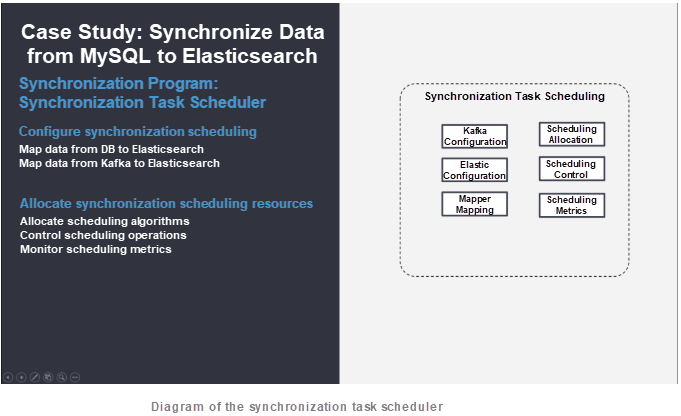

Synchronization task scheduler

- The schedule is used to configure synchronization scheduling, synchronization tasks, and synchronous mapping relationships, including mapping from DBs to Elasticsearch and mapping from Kafka to Elasticsearch.

- It allocates synchronization scheduling resources, controls synchronization task operations such as start, stop, and reset, allocates synchronization tasks, and specifies the degree of parallelism.

Synchronization task executor

- The executor executes tasks for mapping data from Kafka to Elasticsearch. A synchronization task executor consists of four modules.

- The Kafka module pulls consumption data and records consumption locations.

- The Mapper module executes mapping from tables to indexes and mapping from table fields to index fields and generates the specified data in JSON format.

- The Elastic module submits the JSON data generated by the Mapper module to Elasticsearch. If the submission succeeds, the consumption record location is submitted. Otherwise, another logic is used.

- The Schedule module executes synchronization tasks at the thread level. It supports operations, such as start and pause, and summarizes metric data for synchronization tasks in real time

Overview of the Data Synchronization Process

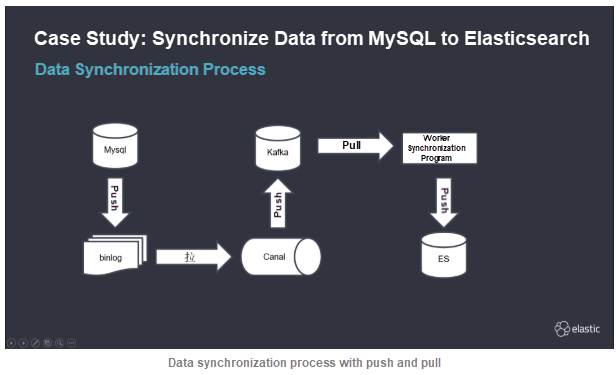

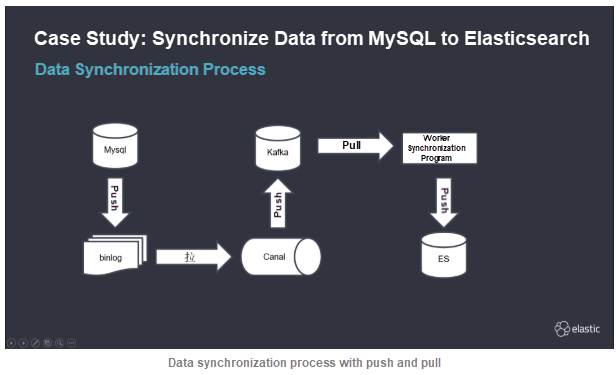

Updating data from DBs to Elasticsearch involves multiple stages and multiple synchronization modes, including pull, push, and push and pull.

- MySQL pushes data to a local Binlog file.

- Canal pulls data from the Binlog file, and then pushes the data to Kafka.

- The Worker synchronization program pulls data from Kafka, processes the data, and then pushes the data to Elasticsearch.

Considerations

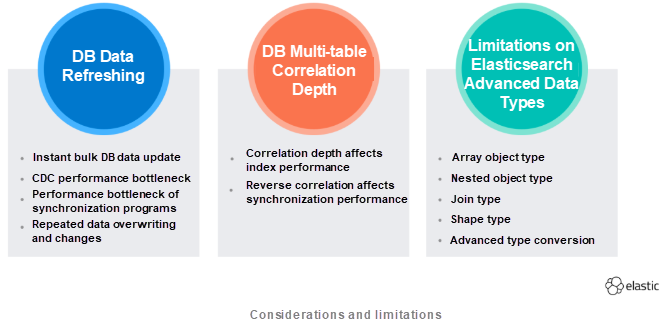

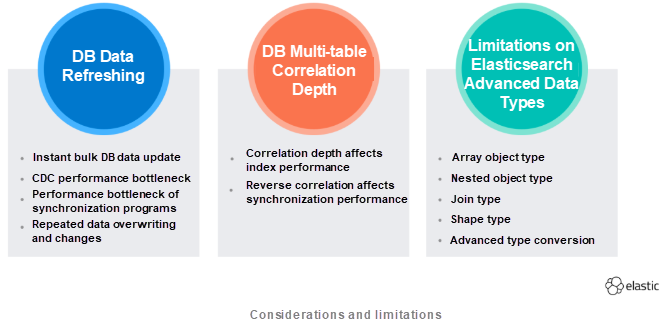

Real-time synchronization from DBs to Elasticsearch involves a long pipeline and many technical issues. Any environment may cause some problems. Therefore, you must pay special attention to the following aspects:

- DB data refreshing: DB data is updated in bulk. This leads to performance bottlenecks in subsequent technical nodes.

- DB multi-table correlation depth: The one-to-one mode is preferred for the correlation of multiple DB tables. In this way, major Elasticsearch mappings can be updated based on primary key correlations, without the need for reverse queries.

- Limitations on Elasticsearch advanced data types: Elasticsearch supports many advanced data types. However, it is better not to use these advanced data types in synchronization programs.

Unresolved Issues

This project encountered many issues. Some of them have been solved, while other issues have only received a partial solution or no solution at all.

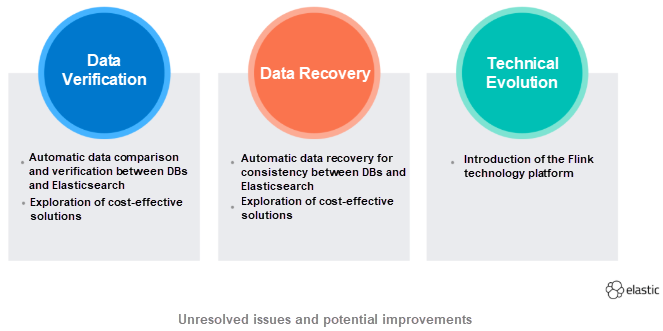

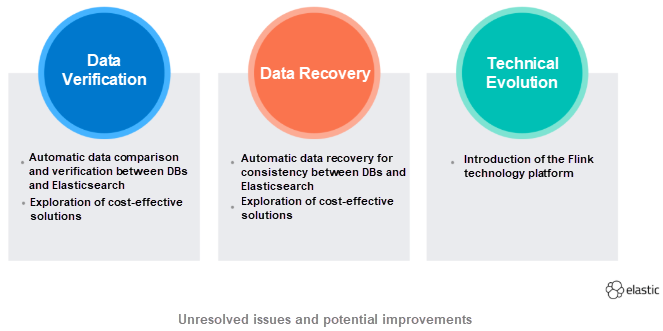

- Data verification: Although DB data can be synchronized to Elasticsearch, there is no effective way to verify its correctness. The traditional method of verification is to compare random queries on both sides, which is very inefficient. A better method of data comparison needs to be explored.

- Data recovery: Automatic data recovery is needed when data is found to be incorrect. However, due to the low efficiency of data verification, the accuracy of data recovery is not very good.

- Technical evolution: Data synchronization programs are independently developed based on Java. However, a lot of effort has gone into non-business practices. The programs handle heavy scheduling work in a traditional way. Now we are considering introducing the Flink platform to schedule underlying resources so that the upper layer only needs to configure synchronous mapping. This is currently being tested.

Summary: Lessons Learned

The solution for real-time data synchronization from DBs to Elasticsearch is the result of a long development process and several technical upgrades. There is still much room for improvement.

We ultimately selected the CDC solution based on the experience shared by the MFW Tech Blog. We adopt the same design idea but different technical implementations.

The overall implementation of data synchronization technologies involves many intermediate steps. You must thoroughly understand every technical detail to prevent fatal mistakes. This requires collaboration throughout the team.

CDC is not a new concept and is supported by almost all database products, such as the following:

- PostgreSQL: Logical Decoding

- SQL Server: Change Data Capture and Change Tracking

- Oralce: Redo Log and Oralce Golden Gate

- MongoDB: Replication Sets

- Elasticsearch: Translog

We recommend that you consider the CDC technical solution first when you encounter similar real-time data synchronization requirements. We also invite you to discuss more powerful real-time data exchange platforms.

About the Author

Li Meng is an Elasticsearch Stack user and a certified Elasticsearch engineer. Since his first explorations into Elasticsearch in 2012, he has gained in-depth experience in the development, architecture, and operation and maintenance (O&M) of the Elastic Stack and has carried out a variety of large and mid-sized projects. He provides enterprises with Elastic Stack consulting, training, and tuning services. He has years of practical experience and is an expert in various technical fields, such as big data, machine learning, and system architecture.

Declaration: This article is reproduced with authorization from Li Meng, the original author. The author reserves the right to hold users legally liable in the case of unauthorized use.

ApsaraDB for HBase

ApsaraDB for HBase

Big Data Consulting for Data Technology Solution

Big Data Consulting for Data Technology Solution

Big Data Consulting Services for Retail Solution

Big Data Consulting Services for Retail Solution

ApsaraDB for MyBase

ApsaraDB for MyBase