By Tingshuai Yan (Hanting)

Large language models are making AI capabilities broadly accessible, but they also pose significant challenges for developers. As models continue to evolve, their computational demands increase, resulting in longer inference times. To address inference latency for large language models, the industry has introduced solutions such as TensorRT, FasterTransformer, and vLLM. To help users accelerate large language model inference in cloud-native systems, the cloud-native AI Suite has integrated FasterTransformer as an inference acceleration solution.

This article demonstrates how to use FasterTransformer to accelerate inference on the ACK container service, using the Bloom7B1 model as an example. The following components will be utilized in this example:

• Arena

Arena is a lightweight machine-learning solution based on Kubernetes. It supports the complete lifecycle of data preparation, model development, model training, and model prediction, improving the work efficiency of data scientists. Arena is deeply integrated with Alibaba Cloud's basic services, including GPU sharing and CPFS (Cloud Paralleled File System). It can run deep learning frameworks optimized by Alibaba Cloud, maximizing the performance and cost benefits of Alibaba Cloud's heterogeneous devices. For more information about Arena, refer to the Developer Guide for Cloud-native AI Suite [1].

• Triton Server

Triton Server is a machine learning inference engine provided by NVIDIA that supports TensorFlow, PyTorch, TensorRT, and FasterTransformer backends. The cloud-native AI Suite has integrated Triton Server into Arena, allowing users to easily deploy, operate, maintain, and monitor the Triton Server service in the cloud-native system using simple command lines or SDKs. For more information about using Triton Server in the AI Suite, see Deploy a PyTorch Model as an Inference Service [2].

• FasterTransformer

FasterTransformer is an inference acceleration solution specifically designed for Transformer models, including encoder-only and decoder-only models. It offers multiple optimization techniques such as kernel fusion, memory reuse, KV cache, and quantization. Additionally, it provides two distributed inference solutions: tensor parallelism and pipeline parallelism. This article explains how to use FasterTransformer in the cloud-native AI Suite to accelerate model inference.

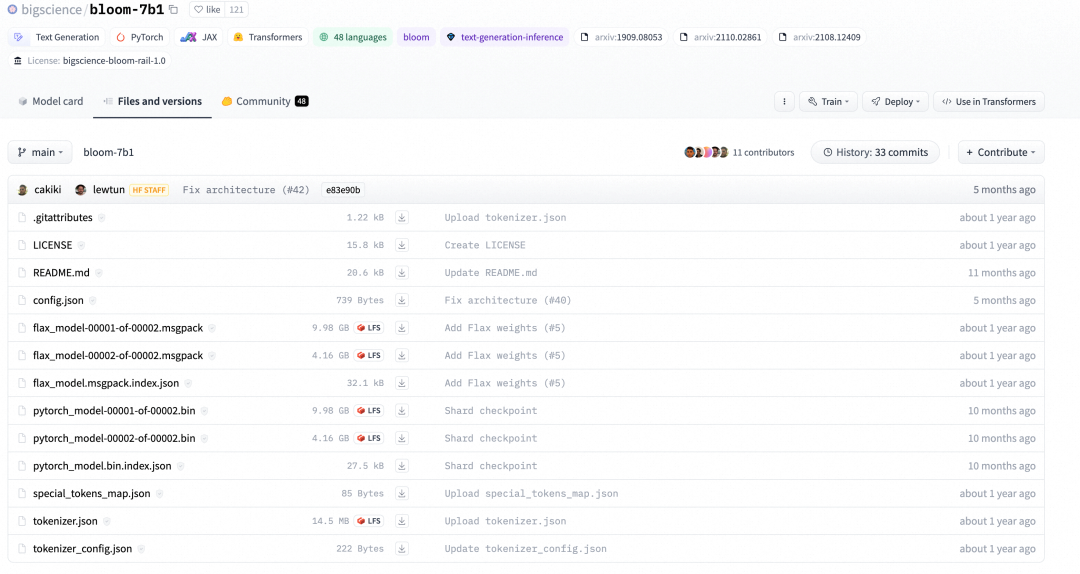

The environment preparation is divided into two parts. The first part is creating a Kubernetes cluster that contains GPUs [3] and installing the cloud-native AI suite [4]. The second part is downloading the bloom-7b1 model from the official Hugging Face website.

Run the following command to download the model:

git lfs install
git clone git@hf.co:bigscience/bloom-7b1

The preceding commands download the files in the Hugging Face repo to your local computer.

After the download is complete, upload the bloom-7b1 folder to OSS as shared storage for inference. For more information about how to use OSS, see Get Started with OSS [5].

After the folder is uploaded to OSS, create a PV and a PVC named bloom7b1-pv and bloom7b1-pvc to mount to the container of the inference service. For more information, see Mount a Statically Provisioned OSS Volume [6].

FasterTransformer is essentially a rewrite of the model. It reimplements the Transformer model structure with CUDA, cuDNN, and cuBLAS, so it has its own description of the model structure and model parameters. Models are generally produced by training frameworks such as PyTorch, TensorFlow, Megatron, and Hugging Face, each of which has its own model structure and parameter format. Therefore, when using FasterTransformer, you need to convert the original checkpoint of the model into the FasterTransformer format.
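To make the idea of converting a checkpoint for tensor parallelism more concrete, here is a minimal sketch of how parameters might be sharded across GPU ranks. It is an illustration only and not the logic of the actual conversion script: the helper `split_evenly` is hypothetical, and the hidden size of 4096 and tensor-parallel size of 2 are taken from the conversion log in this article. Parameters that are split end up with one shard per rank, which is consistent with the per-rank shapes (8192,) and (3, 2048) reported in that log.

```python
# Illustrative sketch only: NOT the logic of huggingface_bloom_convert.py.
# `split_evenly` is a hypothetical helper introduced for this example.

def split_evenly(values, tensor_para_size):
    """Split a 1-D list into equal contiguous shards, one per GPU rank."""
    if len(values) % tensor_para_size != 0:
        raise ValueError("length must be divisible by tensor_para_size")
    shard = len(values) // tensor_para_size
    return [values[r * shard:(r + 1) * shard] for r in range(tensor_para_size)]


hidden_size = 4096      # matches the (4096,) shapes in the conversion log
tensor_para_size = 2    # corresponds to the -tp 2 argument

# The h_to_4h bias has 4 * hidden_size entries; each rank keeps one half.
mlp_bias = [0.0] * (4 * hidden_size)
mlp_shards = split_evenly(mlp_bias, tensor_para_size)
print([len(s) for s in mlp_shards])  # [8192, 8192]

# The fused QKV bias has 3 rows (query, key, value); each row is split per rank.
qkv_bias = [[0.0] * hidden_size for _ in range(3)]
qkv_shards = [
    [split_evenly(row, tensor_para_size)[rank] for row in qkv_bias]
    for rank in range(tensor_para_size)
]
print((len(qkv_shards[0]), len(qkv_shards[0][0])))  # (3, 2048)
```

Parameters such as the layer-norm weights are small and appear in the log with their full (4096,) shape, which suggests they are stored once rather than split.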

FasterTransformer provides several conversion scripts. Here we use examples/pytorch/gpt/utils/huggingface_bloom_convert.py from the FasterTransformer repository.

The cloud-native AI suite has integrated this conversion logic, so you can run the following command to convert the model.

arena submit pytorchjob \
  --gpus=1 \
  --image ai-studio-registry.cn-beijing.cr.aliyuncs.com/kube-ai/fastertransformer:torch-0.0.1 \
  --name convert-bloom \
  --workers 1 \
  --namespace default-group \
  --data bloom7b1-pvc:/mnt \
  'python /FasterTransformer/examples/pytorch/gpt/utils/huggingface_bloom_convert.py -i /mnt/model/bloom-7b1 -o /mnt/model/bloom-7b1-ft-fp16 -tp 2 -dt fp16 -p 64 -v'

Use the arena logs command to view the conversion logs:

$arena logs -n default-group convert-bloom

======================= Arguments =======================

- input_dir...........: /mnt/model/bloom-7b1

- output_dir..........: /mnt/model/bloom-7b1-ft-fp16

- tensor_para_size....: 2

- data_type...........: fp16

- processes...........: 64

- verbose.............: True

- by_shard............: False

=========================================================

loading from pytorch bin format

model file num: 2

- model.pre_decoder_layernorm.bias................: shape (4096,) | saved at /mnt/model/bloom-7b1-ft-fp16/2-gpu/model.pre_decoder_layernorm.bias.bin

- model.layers.0.input_layernorm.weight...........: shape (4096,) | saved at /mnt/model/bloom-7b1-ft-fp16/2-gpu/model.layers.0.input_layernorm.weight.bin

- model.layers.0.attention.dense.bias.............: shape (4096,) | saved at /mnt/model/bloom-7b1-ft-fp16/2-gpu/model.layers.0.attention.dense.bias.bin

- model.layers.0.input_layernorm.bias.............: shape (4096,) | saved at /mnt/model/bloom-7b1-ft-fp16/2-gpu/model.layers.0.input_layernorm.bias.bin

- model.layers.0.attention.query_key_value.bias...: shape (3, 2048) s | saved at /mnt/model/bloom-7b1-ft-fp16/2-gpu/model.layers.0.attention.query_key_value.bias.0.bin (0/2)

- model.layers.0.post_attention_layernorm.weight..: shape (4096,) | saved at /mnt/model/bloom-7b1-ft-fp16/2-gpu/model.layers.0.post_attention_layernorm.weight.bin

- model.layers.0.post_attention_layernorm.bias....: shape (4096,) | saved at /mnt/model/bloom-7b1-ft-fp16/2-gpu/model.layers.0.post_attention_layernorm.bias.bin

- model.layers.0.mlp.dense_4h_to_h.bias...........: shape (4096,) | saved at /mnt/model/bloom-7b1-ft-fp16/2-gpu/model.layers.0.mlp.dense_4h_to_h.bias.bin

- model.layers.0.mlp.dense_h_to_4h.bias...........: shape (8192,) s | saved at /mnt/model/bloom-7b1-ft-fp16/2-gpu/model.layers.0.mlp.dense_h_to_4h.bias.0.bin (0/2)

- model.layers.0.attention.query_key_value.bias...: shape (3, 2048) s | saved at /mnt/model/bloom-7b1-ft-fp16/2-gpu/model.layers.0.attention.query_key_value.bias.1.bin (1/2)

Run the arena list command to check whether the conversion is complete:

NAME           STATUS     TRAINER     DURATION  GPU(Requested)  GPU(Allocated)  NODE
convert-bloom  SUCCEEDED  PYTORCHJOB  3m        1               N/A             192.168.123.35

After the conversion, a folder named model/arena/bloom-7b1-ft-fp16 is created in OSS. The FasterTransformer checkpoint is stored in this folder.

At this point, we have two bloom-7b1 checkpoints on OSS. One is the bloom-7b1 folder, which stores the native Hugging Face checkpoint, and the other is the bloom-7b1-ft-fp16 folder, which stores the converted FasterTransformer checkpoint. We will use these two checkpoints to compare performance and see whether FasterTransformer speeds up inference.

The performance comparison uses examples/pytorch/gpt/bloom_lambada.py provided by FasterTransformer, which we have integrated into the AI suite. Here we submit two performance evaluation jobs. Command for the Hugging Face Bloom-7b1 evaluation:

arena submit pytorchjob \
  --gpus=2 \
  --image ai-studio-registry.cn-beijing.cr.aliyuncs.com/kube-ai/fastertransformer:torch-0.0.1 \
  --name perf-hf-bloom \
  --workers 1 \
  --namespace default-group \
  --data bloom7b1-pvc:/mnt \
  'python /FasterTransformer/examples/pytorch/gpt/bloom_lambada.py \
  --tokenizer-path /mnt/model/bloom-7b1 \
  --dataset-path /mnt/data/lambada/lambada_test.jsonl \
  --batch-size 16 \
  --test-hf \
  --show-progress'

Check the results of Hugging Face:

$ arena -n default-group logs -t 5 perf-hf-bloom
Accuracy: 57.5587% (2966/5153) (elapsed time: 173.2149 sec)

Command for the FasterTransformer Bloom-7b1 evaluation:

arena submit pytorchjob \
  --gpus=2 \
  --image ai-studio-registry.cn-beijing.cr.aliyuncs.com/kube-ai/fastertransformer:torch-0.0.1 \
  --name perf-ft-bloom \
  --workers 1 \
  --namespace default-group \
  --data bloom7b1-pvc:/mnt \
  'mpirun --allow-run-as-root -n 2 python /FasterTransformer/examples/pytorch/gpt/bloom_lambada.py \
  --lib-path /FasterTransformer/build/lib/libth_transformer.so \
  --checkpoint-path /mnt/model/2-gpu \
  --batch-size 16 \
  --tokenizer-path /mnt/model/bloom-7b1 \
  --dataset-path /mnt/data/lambada/lambada_test.jsonl \
  --show-progress'

Check the results of FasterTransformer. The evaluation finishes about 2.5 times faster than the Hugging Face run.

$ arena -n default-group logs -t 5 perf-ft-bloom
Accuracy: 57.6363% (2970/5153) (elapsed time: 68.7818 sec)

Comparing the two results shows that FasterTransformer is significantly faster than native Hugging Face, while accuracy remains essentially unchanged.
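The speedup can be checked directly from the two log lines reported above. The following short sketch parses both lines and computes the ratio of elapsed times; the regular expression and the `parse_result` helper are written for this example and assume the log format shown in this article.

```python
import re

# Log lines copied from the two evaluation runs above.
hf_log = "Accuracy: 57.5587% (2966/5153) (elapsed time: 173.2149 sec)"
ft_log = "Accuracy: 57.6363% (2970/5153) (elapsed time: 68.7818 sec)"

PATTERN = re.compile(
    r"Accuracy: ([\d.]+)% \((\d+)/(\d+)\) \(elapsed time: ([\d.]+) sec\)"
)


def parse_result(line):
    """Extract accuracy, counts, and elapsed time from one result line."""
    match = PATTERN.search(line)
    if match is None:
        raise ValueError(f"unrecognized log line: {line!r}")
    return {
        "accuracy": float(match.group(1)),
        "correct": int(match.group(2)),
        "total": int(match.group(3)),
        "elapsed_sec": float(match.group(4)),
    }


hf = parse_result(hf_log)
ft = parse_result(ft_log)

speedup = hf["elapsed_sec"] / ft["elapsed_sec"]
print(f"speedup: {speedup:.2f}x")  # speedup: 2.52x
print(f"extra correct answers: {ft['correct'] - hf['correct']} of {ft['total']}")
```

Both runs use the same 5,153 test samples, so the elapsed times are directly comparable.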

In this section, we use Triton Server to deploy FasterTransformer. Triton Server does not natively support FasterTransformer, so we use the FasterTransformer backend provided by NVIDIA. With the FasterTransformer backend, Triton Server no longer allocates GPU resources itself. Instead, the backend determines which GPUs are available based on CUDA_VISIBLE_DEVICES and assigns them to the corresponding ranks to perform distributed inference.
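The idea of assigning visible GPUs to ranks can be sketched in a few lines. This is a simplified illustration and not the backend's actual implementation, which is written in C++: the function `assign_devices_to_ranks` is hypothetical, and the one-GPU-per-rank mapping is an assumption made for this example.

```python
import os


def assign_devices_to_ranks(world_size, env=None):
    """Map each rank to one visible GPU (hypothetical helper, illustration only)."""
    env = os.environ if env is None else env
    raw = env.get("CUDA_VISIBLE_DEVICES", "")
    visible = [d.strip() for d in raw.split(",") if d.strip()]
    if len(visible) < world_size:
        raise RuntimeError(
            f"need {world_size} GPUs but only {len(visible)} are visible"
        )
    # Assumption: rank i uses the i-th visible device.
    return {rank: visible[rank] for rank in range(world_size)}


# Two GPUs are visible, and tensor parallelism uses two ranks.
mapping = assign_devices_to_ranks(2, env={"CUDA_VISIBLE_DEVICES": "0,1"})
print(mapping)  # {0: '0', 1: '1'}
```

If fewer GPUs are visible than the number of ranks, the sketch raises an error instead of silently sharing a device.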

The model repository directory of FasterTransformer is as follows:

├── model_repo
│   └── fastertransformer
│       ├── 1
│       │   └── config.ini
│       └── config.pbtxt

Run the following Arena command to start FasterTransformer:

arena serve triton \
  --namespace=default-group \
  --version=1 \
  --data=bloom7b1-pvc:/mnt \
  --name=ft-triton-bloom \
  --allow-metrics \
  --gpus=2 \
  --replicas=1 \
  --image=ai-studio-registry.cn-beijing.cr.aliyuncs.com/kube-ai/triton_with_ft:22.03-main-2edb257e-transformers \
  --model-repository=/mnt/triton_repo

You can view the deployment logs of Triton Server with kubectl logs. The logs show that Triton Server has started with two GPUs for distributed inference.

I0721 08:57:28.116291 1 pinned_memory_manager.cc:240] Pinned memory pool is created at '0x7fd264000000' with size 268435456

I0721 08:57:28.118393 1 cuda_memory_manager.cc:105] CUDA memory pool is created on device 0 with size 67108864

I0721 08:57:28.118403 1 cuda_memory_manager.cc:105] CUDA memory pool is created on device 1 with size 67108864

I0721 08:57:28.443529 1 model_lifecycle.cc:459] loading: fastertransformer:1

I0721 08:57:28.625253 1 libfastertransformer.cc:1828] TRITONBACKEND_Initialize: fastertransformer

I0721 08:57:28.625307 1 libfastertransformer.cc:1838] Triton TRITONBACKEND API version: 1.10

I0721 08:57:28.625315 1 libfastertransformer.cc:1844] 'fastertransformer' TRITONBACKEND API version: 1.10

I0721 08:57:28.627137 1 libfastertransformer.cc:1876] TRITONBACKEND_ModelInitialize: fastertransformer (version 1)

I0721 08:57:28.628304 1 libfastertransformer.cc:372] Instance group type: KIND_CPU count: 1

I0721 08:57:28.628326 1 libfastertransformer.cc:402] Sequence Batching: disabled

I0721 08:57:28.628334 1 libfastertransformer.cc:412] Dynamic Batching: disabled

I0721 08:57:28.661657 1 libfastertransformer.cc:438] Before Loading Weights:

+-------------------+-----------------------------------------------------------------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Backend | Path | Config |

+-------------------+-----------------------------------------------------------------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------------+

| fastertransformer | /opt/tritonserver/backends/fastertransformer/libtriton_fastertransformer.so | {"cmdline":{"auto-complete-config":"true","min-compute-capability":"6.000000","backend-directory":"/opt/tritonserver/backends","default-max-batch-size":"4"}} |

+-------------------+-----------------------------------------------------------------------------+---------------------------------------------------------------------------------------------------------------------------------------------------------------+

I0721 09:01:19.653743 1 server.cc:633]

+-------------------+---------+--------+

| Model | Version | Status |

after allocation : free: 7.47 GB, total: 15.78 GB, used: 8.31 GB

+-------------------+---------+--------+

| fastertransformer | 1 | READY |

+-------------------+---------+--------+

I0721 09:01:19.668137 1 metrics.cc:864] Collecting metrics for GPU 0: Tesla V100-SXM2-16GB

I0721 09:01:19.668167 1 metrics.cc:864] Collecting metrics for GPU 1: Tesla V100-SXM2-16GB

I0721 09:01:19.669954 1 metrics.cc:757] Collecting CPU metrics

I0721 09:01:19.670150 1 tritonserver.cc:2264]

+----------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Option | Value |

+----------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| server_id | triton |

| server_version | 2.29.0 |

| server_extensions | classification sequence model_repository model_repository(unload_dependents) schedule_policy model_configuration system_shared_memory cuda_shared_memory binary_tensor_data statistics trace logging |

| model_repository_path[0] | /mnt/triton_repo |

| model_control_mode | MODE_NONE |

| strict_model_config | 0 |

| rate_limit | OFF |

| pinned_memory_pool_byte_size | 268435456 |

| cuda_memory_pool_byte_size{0} | 67108864 |

| cuda_memory_pool_byte_size{1} | 67108864 |

| response_cache_byte_size | 0 |

| min_supported_compute_capability | 6.0 |

| strict_readiness | 1 |

| exit_timeout | 30 |

+----------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

I0721 09:01:19.672326 1 grpc_server.cc:4819] Started GRPCInferenceService at 0.0.0.0:8001

I0721 09:01:19.672597 1 http_server.cc:3477] Started HTTPService at 0.0.0.0:8000

I0721 09:01:19.714356 1 http_server.cc:184] Started Metrics Service at 0.0.0.0:8002

Start port forwarding for verification:

# Use kubectl to start port-forward.
kubectl -n default-group port-forward svc/ft-triton-bloom-1-tritoninferenceserver 8001:8001

Here, we use a script written with the Python SDK provided by Triton Server to send a request to Triton Server. The script performs three main tasks:

• Utilize the tokenizer from Hugging Face for the bloom-7b1 model to perform word segmentation and token transformation on the query.

• Initiate a request to Triton Server using the Triton Server SDK.

• Transform the output tokens using the tokenizer to obtain the final result.

import argparse
import sys
import time

import numpy as np
import torch
import tritonclient.grpc as grpcclient
from transformers import AutoTokenizer

# Create the tokenizer and use the EOS token for padding.
tokenizer = AutoTokenizer.from_pretrained('/mnt/model/bloom-7b1', padding_side='right')
tokenizer.pad_token_id = tokenizer.eos_token_id


def tokenize(query):
    """Encode the query and return (input_ids, input_lengths) as uint32 arrays."""
    encoded_inputs = tokenizer(query, padding=True, return_tensors='pt')
    input_token_ids = encoded_inputs['input_ids'].int()
    input_lengths = encoded_inputs['attention_mask'].sum(
        dim=-1, dtype=torch.int32).view(-1, 1)
    return input_token_ids.numpy().astype('uint32'), input_lengths.numpy().astype('uint32')


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("--model_name",
                        type=str,
                        required=False,
                        default="fastertransformer",
                        help="Model name")
    parser.add_argument("--url",
                        type=str,
                        required=False,
                        default="localhost:8001",
                        help="Inference server URL. Default is localhost:8001.")
    parser.add_argument('-v',
                        "--verbose",
                        action="store_true",
                        required=False,
                        default=False,
                        help='Enable verbose output')
    args = parser.parse_args()

    # 1) Create a client
    try:
        triton_client = grpcclient.InferenceServerClient(
            url=args.url, verbose=args.verbose)
    except Exception as e:
        print("channel creation failed: " + str(e))
        sys.exit(1)

    # 2) Set the inputs
    inputs = []

    ## 2.1) input_ids
    query = "deepspeed is"
    input_ids, input_lengths = tokenize(query)
    inputs.append(grpcclient.InferInput("input_ids", input_ids.shape, "UINT32"))
    inputs[0].set_data_from_numpy(input_ids)

    ## 2.2) input_lengths
    inputs.append(grpcclient.InferInput("input_lengths", input_lengths.shape, "UINT32"))
    inputs[1].set_data_from_numpy(input_lengths)

    ## 2.3) output length
    output_len = 32
    output_len_np = np.array([[output_len]], dtype=np.uintc)
    inputs.append(grpcclient.InferInput("request_output_len", output_len_np.shape, "UINT32"))
    inputs[2].set_data_from_numpy(output_len_np)

    # 3) Set the output
    outputs = []
    outputs.append(grpcclient.InferRequestedOutput("output_ids"))

    # 4) Send the request
    start_time = time.time()
    results = triton_client.infer(model_name=args.model_name, inputs=inputs, outputs=outputs)
    latency = time.time() - start_time  # end-to-end request latency in seconds

    # 5) Result processing: convert the result to a numpy array and decode the tokens
    output0_data = results.as_numpy("output_ids")
    print(output0_data.shape)
    result = tokenizer.batch_decode(output0_data[0])
    print(result)

Run the following command to send a client request:

$ python3 bloom_7b_client.py
(1, 1, 36)
['deepspeed is the speed of the ship at the time of the collision, and the\ndeepspeed of the other ship is the speed of the other ship at the time']

In this article, we demonstrated how to accelerate large language model inference using FasterTransformer in the cloud-native AI suite, with the Bloom-7b1 model as an example. Compared with the Hugging Face version, FasterTransformer ran the evaluation about 2.5 times faster.

[1] Developer Guide for Cloud-native AI Suite

https://www.alibabacloud.com/help/en/ack/cloud-native-ai-suite/getting-started/cloud-native-ai-component-set-user-guide

[2] Deploy a PyTorch Model as an Inference Service

https://www.alibabacloud.com/help/en/ack/cloud-native-ai-suite/user-guide/deploy-a-pytorch-model-as-an-inference-service?spm=a2c4g.11186623.0.0.2267225carYzgA

[3] Create a Kubernetes Cluster Containing GPUs

https://www.alibabacloud.com/help/en/ack/ack-managed-and-ack-dedicated/user-guide/use-gpu-scheduling-in-ack-clusters#task-1664343

[4] Install the Cloud-native AI Suite

https://www.alibabacloud.com/help/en/ack/cloud-native-ai-suite/user-guide/deploy-the-cloud-native-ai-suite

[5] Get Started with OSS

https://www.alibabacloud.com/help/en/oss/getting-started/getting-started-with-oss

[6] Mount a Statically Provisioned OSS Volume

https://www.alibabacloud.com/help/en/ack/ack-managed-and-ack-dedicated/user-guide/mount-statically-provisioned-oss-volumes
