We will first introduce the business background of Alibaba Mama's advertising platform, then explore the design and evolution of its real-time advertising system and data lake architecture.

Summary: This article is adapted from a presentation by Chen Liang, a data technology expert at Alibaba Mama, in the streaming lakehouse session of Flink Forward Asia 2024.

Business Background and Challenges

Introduction to Alibaba Mama's Advertising Business

I. Introduction to Alibaba Mama and its Ecosystem

Alibaba Mama is a digital marketing platform under Alibaba Group, primarily offering advertising placement, data analysis, and optimization tools to merchants. Its main products include:

- Taobao Alliance: Provides merchants with opportunities to collaborate with online promoters (such as influencers and bloggers), attracting more traffic through commission-based incentives.

- Search Advertising: Helps merchants increase exposure in search results, attracting potential customers.

- Display Advertising: Displays ads on various websites and applications to reach a broader user base.

- Data Analysis: Offers comprehensive data analysis tools to help merchants understand ad performance and optimize placement strategies.

How the Advertising Ecosystem Operates

To understand these products, it's essential to understand the broader ecosystem in which they function. The process begins with advertisers, who fund the campaigns. They create and set up advertising plans on platforms like a DSP (Demand-Side Platform), defining the target audience, region, timing, and budget. Advertisers select suitable ad materials and creative content to attract users and achieve their campaign goals. Once configured, this information is synchronized with the ad delivery engine.

The core function of the delivery engine is processing user traffic. Traffic sources cover both Alibaba's own applications (e.g., Taobao, Tmall, Gaode) and external media ecosystems (e.g., ByteDance, Kuaishou, Zhihu). The engine enables precise ad distribution based on user interests.

The user journey generates a stream of data:

- Exposure Data: Generated when a user searches or browses and an ad is displayed.

- Click Data: Produced when a user clicks on an ad.

- Conversion Data: In e-commerce, a click is not the final goal. Users are guided to perform actions like collecting items, adding to a cart, or placing an order. This information is collected for conversion analysis.

This data is then used for attribution analysis to evaluate ad effectiveness, using industry models such as linear, last-touch, U-shaped, and MTA (multi-touch attribution).
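To make the rule-based models concrete, here is a minimal Python sketch that splits the credit for one conversion across the ad touchpoints that preceded it. The function name, the touchpoint representation, and the 40/20/40 weighting for the U-shaped model (a common convention) are assumptions for illustration, not Alibaba Mama's implementation. Data-driven MTA is omitted because it needs a trained model.

```python
from typing import Dict, List

def attribute(touchpoints: List[str], model: str) -> Dict[str, float]:
    """Split one conversion's credit across the ad touchpoints that preceded it.

    touchpoints: ad IDs in chronological order (first touch first).
    Returns ad_id -> share of credit; the shares sum to 1.0.
    """
    n = len(touchpoints)
    if n == 0:
        return {}
    if model == "last_touch":
        weights = [0.0] * (n - 1) + [1.0]
    elif model == "linear":
        weights = [1.0 / n] * n
    elif model == "u_shaped":
        # Assumed convention: 40% first touch, 40% last touch, 20% split over the middle.
        if n == 1:
            weights = [1.0]
        elif n == 2:
            weights = [0.5, 0.5]
        else:
            weights = [0.4] + [0.2 / (n - 2)] * (n - 2) + [0.4]
    else:
        raise ValueError(f"unknown attribution model: {model}")

    credit: Dict[str, float] = {}
    for ad_id, w in zip(touchpoints, weights):
        # The same ad can appear more than once in a path; accumulate its share.
        credit[ad_id] = credit.get(ad_id, 0.0) + w
    return credit

path = ["display_ad", "feed_ad", "search_ad"]  # hypothetical ad IDs
for model in ("last_touch", "linear", "u_shaped"):
    print(model, attribute(path, model))
```

For this path, last-touch gives search_ad all of the credit, while the U-shaped model gives display_ad the same 0.4 share as search_ad, so the choice of model directly changes how each channel is judged.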

Crucial Challenges in the Ecosystem

This complex environment presents two critical operational challenges:

- Anti-Cheating: Ad billing, which relies on exposure and click behavior, is vulnerable to fraudulent attacks. A robust system with both real-time and offline anti-cheating data links is essential to protect the platform and its clients from financial losses.

- Budget Control: Ad spending must be strictly controlled to stay within the advertiser's budget. Over-delivery is not compensated, so precise control over ad volume is mandatory.
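A minimal sketch of the budget-control idea, assuming a hypothetical in-memory `Campaign` record and a known cost per billable event: delivery is paused as soon as the next charge would exceed the budget minus a safety margin. The margin stands in for spend that is still in flight because billing data arrives with some delay; the fresher the real-time spend data, the smaller that margin can be. This is an illustration, not the platform's actual pacing algorithm.

```python
from dataclasses import dataclass

@dataclass
class Campaign:
    """Hypothetical in-memory view of one campaign's daily budget."""
    campaign_id: str
    daily_budget: float   # currency units
    spent: float = 0.0
    paused: bool = False

def try_charge(c: Campaign, cost: float, safety_margin: float = 0.0) -> bool:
    """Charge one billable event if it fits; otherwise pause the campaign.

    Over-delivery is not compensated, so the guard pauses delivery before
    spend would cross (budget - safety_margin).
    """
    if c.paused:
        return False
    limit = c.daily_budget - safety_margin
    if c.spent + cost > limit:
        c.paused = True   # signal the delivery engine to stop serving this campaign
        return False
    c.spent += cost
    return True

campaign = Campaign("c_demo", daily_budget=10.0)
charged = [try_charge(campaign, 4.0) for _ in range(3)]
print(charged, campaign.spent, campaign.paused)
```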

The entire advertising ecosystem is highly complex, with long, interconnected links. Data must flow back to advertisers accurately and promptly so they can devise effective strategies and adjust budgets.

II. Introduction to Alibaba Mama SDS (Strategic Data Solutions)

To manage the complexities of this ecosystem and deliver value, Alibaba Mama's Strategic Data Solutions (SDS) team provides the following key capabilities:

- Effect Evaluation and Optimization: Provides real-time monitoring and analysis of marketing campaign effectiveness, aiding businesses in continuously optimizing their marketing ROI.

- Personalized Marketing: Utilizes user data for precise audience targeting, enhancing ad effectiveness and conversion rates.

- Data-Driven Growth Strategy: Supports merchants and platforms with growth strategies through data analysis, making their decisions more scientific and effective.

- Technical Services: Offers technical support for marketing insights, strategy formulation, value quantification, and effect attribution to all Alibaba Mama advertising clients.

III. The Importance of Real-Time in the Advertising Business

Real-time data is the lifeblood of an efficient advertising business. Its importance is highlighted by:

- Quick Decision-Making: Real-time data allows advertisers to swiftly understand ad performance and adjust strategies promptly to enhance placement effectiveness.

- Budget Optimization: Real-time monitoring helps advertisers allocate budgets wisely, investing in better-performing channels and ads to maximize ROI.

- Market Response: Real-time analysis enables advertisers to comprehend market changes and consumer behavior, allowing them to grasp trends and enhance competitiveness.

- Personalized Marketing: Based on real-time insights, advertisers can achieve more precise targeting, improving user experience and conversion rates.

IV. Characteristics and Challenges of Alibaba Mama's Real-Time Business

Alibaba Mama's real-time business is defined by two primary characteristics, which also represent its core challenges:

- High Availability: Both internal operations and external customers rely on real-time data for critical decision-making. Services like algorithmic real-time pricing (ODL) demand exceptional timeliness and stability.

- Extreme Complexity: The ad placement process is intricate, involving multiple interconnected systems (e.g., BP, engines, media, risk control, and settlement) with long upstream and downstream dependencies. This complexity manifests in several ways:

  - Massive Data Volume and Numerous Sources: The system must handle enormous volumes of data from a wide variety of sources.

  - Complex Processing Logic: It requires sophisticated, real-time processing for attribution, anti-cheating, and budget control.

  - Multi-System Coordination: It demands seamless and stable data flow across numerous independent but interdependent systems.

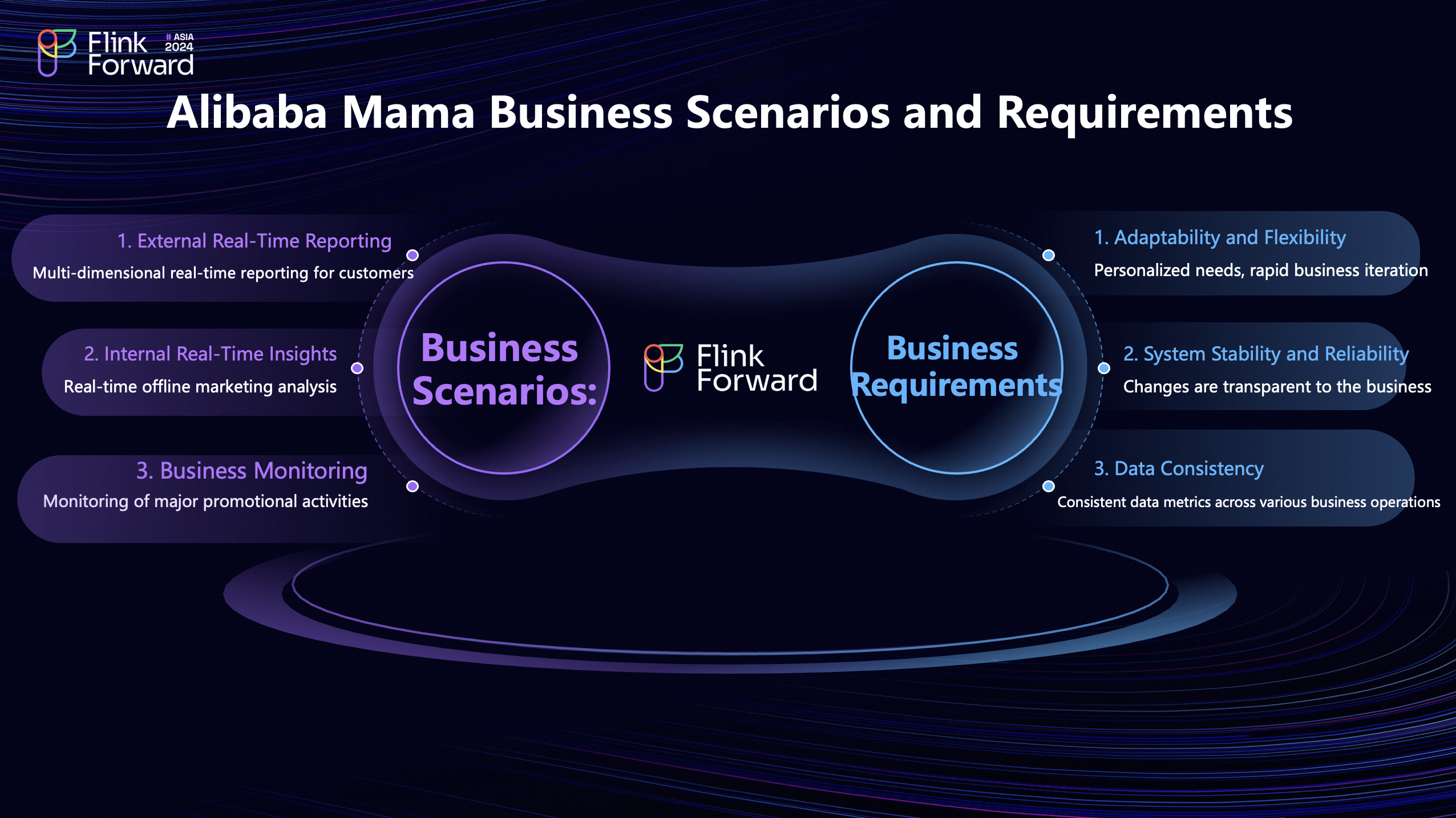

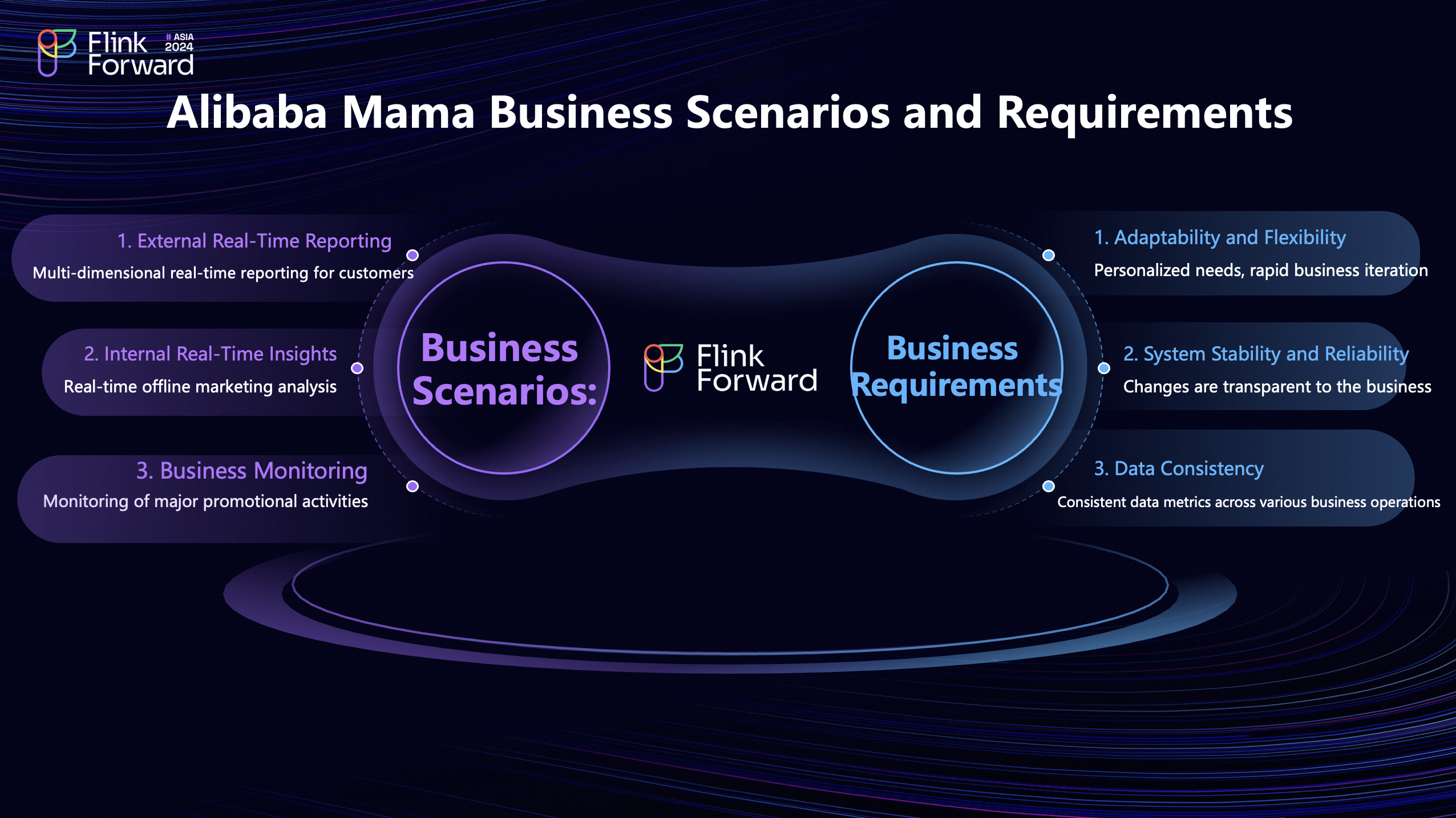

Alibaba Mama's Data Business Scenarios and Demands

Against this business background, here are the business scenarios and demands of Mama SDS.

(1) Business Scenarios:

- External Real-Time Reports: Advertisers need to view real-time data across various dimensions, such as exposure volume, click-through rate (CTR), and return on investment (ROI), to understand ad effectiveness in real time and decide whether to increase the budget or stop the campaign.

- Internal Real-Time Analysis Needs: Internal operations staff need to evaluate ad delivery; analyze specific audiences, products, and scenarios; and, after delivery, review audience effects, regional delivery conditions, and other potential issues. Both real-time and offline analyses are therefore required internally.

- Business Monitoring: During key promotional events such as 618, Double Eleven, and Double Twelve, we need to closely monitor business changes, including whether traffic meets targets, whether the budget is sufficient, and whether the results are satisfactory. Real-time data is essential for flexibly adjusting operational and delivery strategies.
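The report metrics above are ratios, which has a practical consequence for aggregation. In the sketch below, CTR is clicks divided by impressions and ROI is taken as attributed GMV divided by ad spend (a common e-commerce convention, assumed here); the `ReportRow` type is hypothetical. Counters are summed across time windows and the ratios recomputed, since averaging per-window ratios gives the wrong answer.

```python
from dataclasses import dataclass

@dataclass
class ReportRow:
    """Hypothetical additive counters behind one report row."""
    impressions: int
    clicks: int
    cost: float   # ad spend
    gmv: float    # attributed transaction amount

def ctr(r: ReportRow) -> float:
    return r.clicks / r.impressions if r.impressions else 0.0

def roi(r: ReportRow) -> float:
    return r.gmv / r.cost if r.cost else 0.0

def merge(a: ReportRow, b: ReportRow) -> ReportRow:
    """Combine two windows by summing counters; ratios are never summed or averaged."""
    return ReportRow(a.impressions + b.impressions, a.clicks + b.clicks,
                     a.cost + b.cost, a.gmv + b.gmv)

w1 = ReportRow(impressions=1000, clicks=20, cost=50.0, gmv=200.0)
w2 = ReportRow(impressions=3000, clicks=30, cost=50.0, gmv=100.0)
total = merge(w1, w2)
print(f"CTR {ctr(total):.2%}, ROI {roi(total):.1f}")
```

Averaging the two windows' CTRs (2% and 1%) would report 1.5%, while the correct combined CTR is 50 / 4000 = 1.25%.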

(2) Business Demands:

- Flexibility and Variability: Alibaba Mama SDS interfaces with numerous downstream businesses, each with specific needs, undergoing rapid changes and frequent iterations.

- Stability and Reliability: The system serves real-time data to many consumers and must not suffer interruptions or update delays. Advertisers notice any delay immediately and may complain or pause their ads; a serious incident can even trend on social media within 15 minutes.

- Data Consistency: Advertisers can view data across multiple systems, making data consistency crucial; otherwise, they may question the accuracy of the data.

Technical Goals for Alibaba Mama's Data Warehouse Construction

- Data Timeliness: Advertisers want data to be as real-time as possible, ideally with no delay at all. Currently, we guarantee minute-level data updates.

- System Throughput: Alibaba Mama handles an enormous volume of traffic, with daily volumes in the trillions of records and TPS in the tens of millions, which poses significant read/write challenges. The system must also be able to replay data quickly in specific scenarios, for example refreshing data rapidly after a new business launches, so fast replay is a fundamental requirement.

- Stability and Reliability: System availability should be ≥99.9%. Quick recovery from failures is essential to "stop the bleeding." Support for gray releases is crucial because faults often occur during releases or changes; when a gray release goes wrong, the system must roll back quickly so that the fault's impact neither persists nor spreads.

- Cost Efficiency: As the saying goes, we want "the horse to run without feeding it": we aim to support more business with minimal resources, while reducing the development and maintenance burden so we can focus on more meaningful business work.
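A quick back-of-the-envelope check of the throughput figures, assuming "trillions" means on the order of 10^12 records per day (the exact volume is not given): an even spread over 86,400 seconds already implies an average of roughly 11.6 million records per second, and replaying a backlog quickly multiplies that rate.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

def average_tps(records_per_day: float) -> float:
    """Average records per second if a day's volume arrived evenly."""
    return records_per_day / SECONDS_PER_DAY

def required_replay_tps(backlog_records: float, target_hours: float) -> float:
    """Sustained rate needed to replay a backlog within target_hours."""
    return backlog_records / (target_hours * 3600)

daily_records = 1e12  # assumed order of magnitude for "trillions per day"
print(f"average: {average_tps(daily_records):,.0f} records/s")
print(f"replay one day in 2 h: {required_replay_tps(daily_records, 2):,.0f} records/s")
```

Replaying a full day in two hours needs 12 times the average rate, which is why fast replay drives capacity planning as much as steady-state traffic does.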

Architecture Design

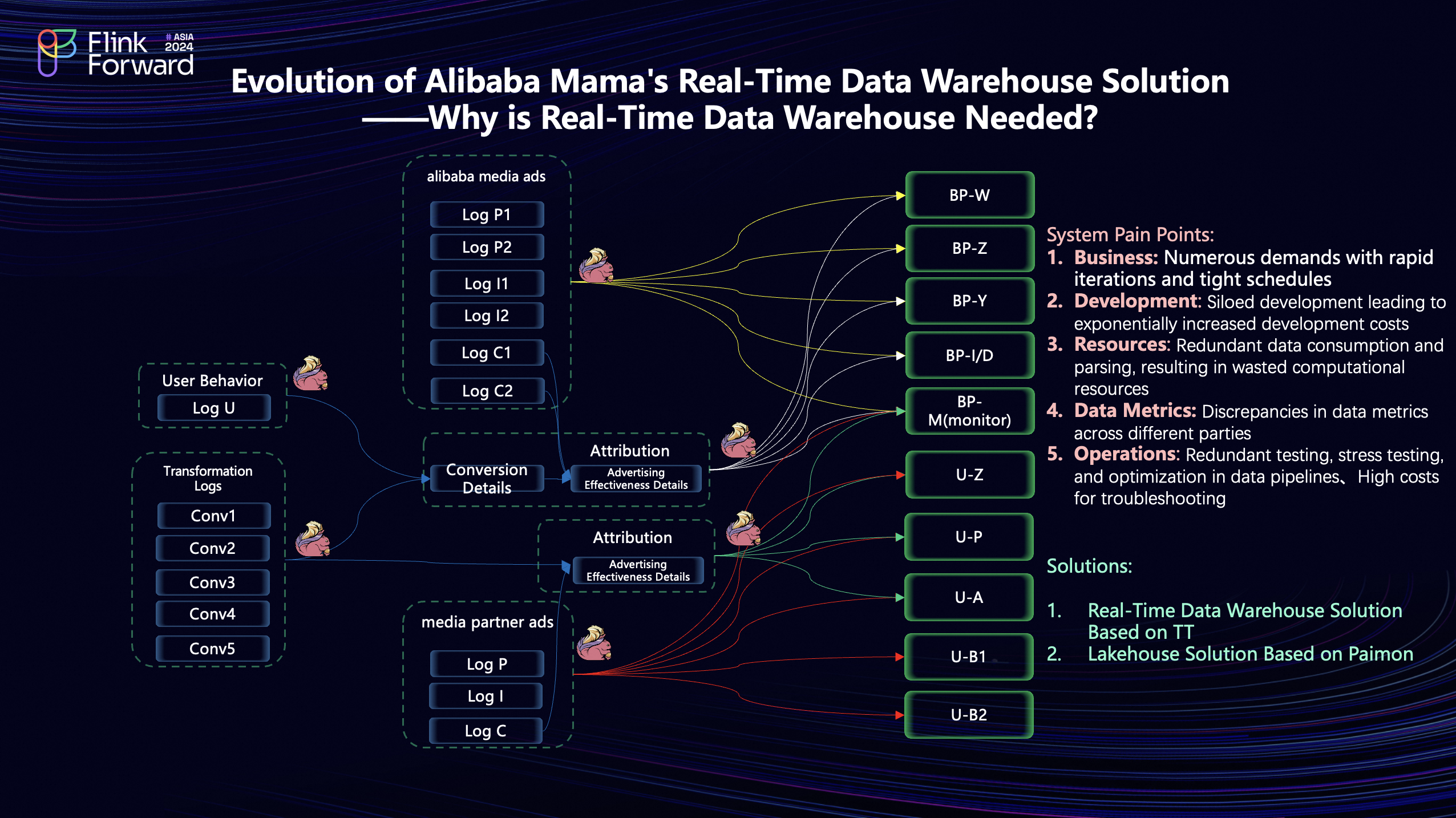

Having introduced the business, let's now look at our architecture and how it evolved, starting with a simplified diagram of the system links.

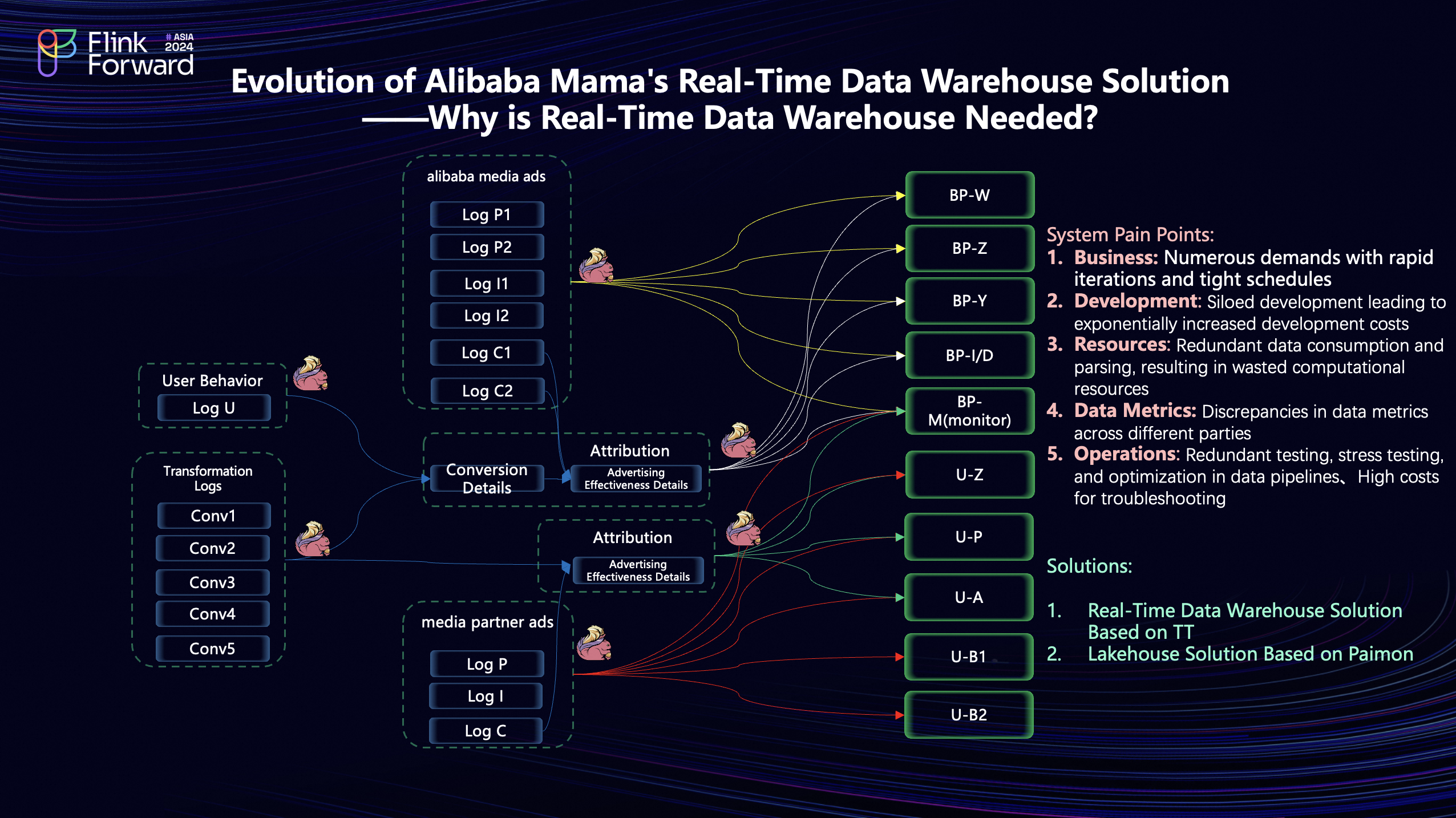

Why is Real-Time Data Warehousing Needed?

As the diagram shows, even the simplified link is quite complex. When we first built the real-time system, our goal was speed: the system had to cover business needs quickly. To achieve this, we used Flink to consume the data and wrote the results into business systems through message queues such as Kafka, which enabled rapid business launches. This is a common approach. The diagram also shows how the same data is presented across different business lines: each link implements one business's logic with its own code and set of jobs, yet all of them parse and consume identical data.

While this approach offers some apparent benefits, it's crucial to address the following issues:

- Flexibility and Variability: First, at the business level, every new business type adds a link that must be developed and maintained, which hurts flexibility and efficiency.

- Development Costs: Second, this model resembles a silo (chimney) approach: it lacks reusability and leads to redundant work.

- Resource Waste: Third, every link parses and consumes all of the data, which wastes resources, because some data is never used yet is still parsed and processed.

- Data Consistency: Fourth, if a change to a metric definition is missed or not applied in time in one business system, that system outputs inconsistent data.

- Maintenance Costs: Lastly, we must handle all maintenance ourselves, including stress testing, performance tuning, and troubleshooting, which demands significant time and cost.
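The resource-waste point can be illustrated by counting parse calls. In this hypothetical sketch, raw logs are JSON strings and each "link" is just a filter. In the silo model every link parses every record, while a shared DWD layer parses each record once and lets all links reuse the result, so with N links the silo model does N times the parsing work for the same output.

```python
import json
from typing import Callable, Dict, List

Filter = Callable[[dict], bool]

class CountingParser:
    """Wraps a parse function and counts how often it runs."""
    def __init__(self, parse: Callable[[str], dict]) -> None:
        self.parse = parse
        self.calls = 0

    def __call__(self, raw: str) -> dict:
        self.calls += 1
        return self.parse(raw)

def silo_links(raw_logs: List[str], links: Dict[str, Filter], parser: CountingParser) -> Dict[str, int]:
    """Silo model: every link parses every raw record on its own."""
    return {name: sum(1 for raw in raw_logs if wants(parser(raw)))
            for name, wants in links.items()}

def shared_dwd(raw_logs: List[str], links: Dict[str, Filter], parser: CountingParser) -> Dict[str, int]:
    """Layered model: parse once into a shared DWD layer, then fan out."""
    dwd = [parser(raw) for raw in raw_logs]
    return {name: sum(1 for rec in dwd if wants(rec)) for name, wants in links.items()}

logs = ['{"type": "click"}', '{"type": "imp"}', '{"type": "click"}', '{"type": "order"}']
links: Dict[str, Filter] = {          # hypothetical downstream links
    "report": lambda r: r["type"] == "click",
    "bi": lambda r: True,
    "algo": lambda r: r["type"] != "imp",
}
silo, layered = CountingParser(json.loads), CountingParser(json.loads)
assert silo_links(logs, links, silo) == shared_dwd(logs, links, layered)
print(silo.calls, layered.calls)
```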

To address these issues, we propose two solutions.

Evolution of Alibaba Mama's Real-Time Data Warehouse Solution

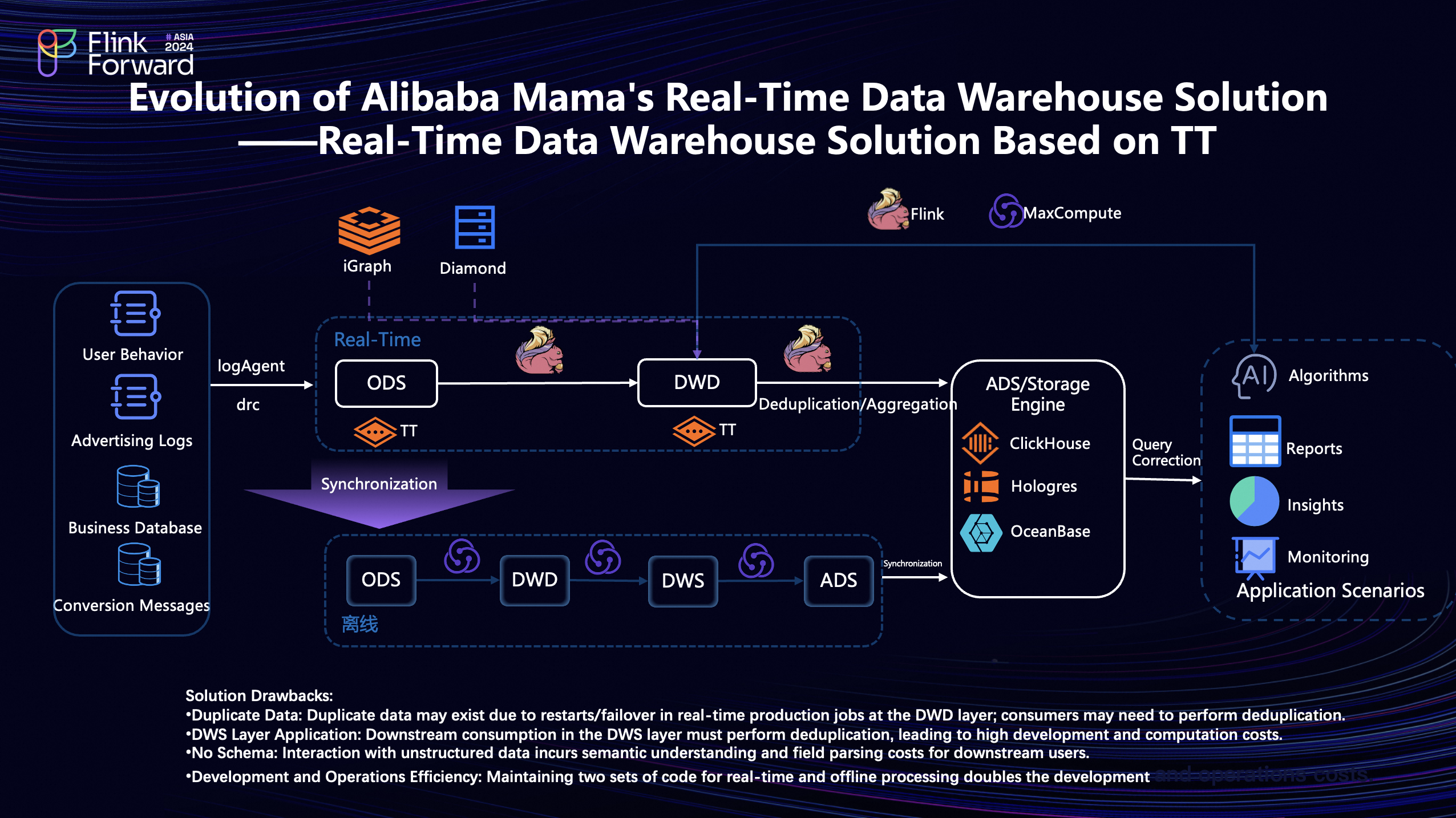

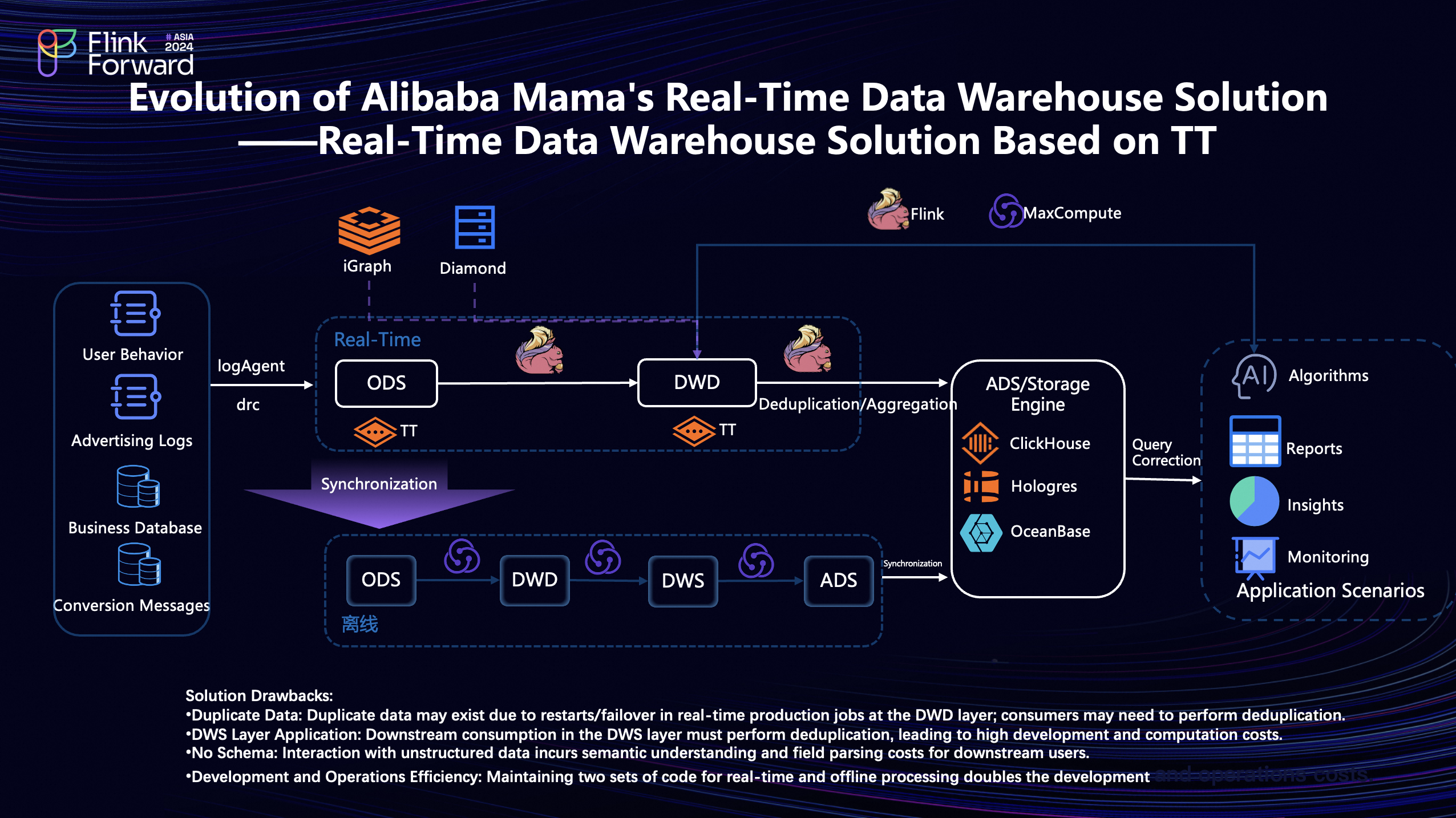

Real-Time Data Warehouse Solution Based on TT

a. Data Warehouse Layering

The real-time data warehouse solution based on TT (a message queue similar to Kafka) includes ODS, DWD, and online dimension tables.

- ODS Layer: This layer comprises the logs required by the business, including user behavior logs, ad logs, and conversion messages from business databases, such as collections, orders, and transactions. These are collected into TT via log collection and DRC systems and serve as the ODS layer of the real-time system.

- DWD Layer: In the real-time link, Flink consumes TT data and produces the DWD layer.

- Online Dimension Tables: The ODS layer lacks some common dimension fields, which change infrequently, typically daily. We designed a dimension table system (iGraph) to supplement these fields, and we use a configuration system (diamond) to add further dimension-like information to the DWD layer.
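A minimal sketch of the enrichment step, with plain dictionaries standing in for iGraph and diamond (their real client APIs are not shown, and all field names are hypothetical). Missing dimension values become `None` rather than causing the event to be dropped; that choice is an assumption, made because behavior records drive billing and should not be lost when dimension data lags.

```python
from typing import Dict, Optional

# Hypothetical stand-ins: a dict for the iGraph dimension table and one for diamond config.
AD_DIM: Dict[str, Dict[str, str]] = {
    "ad_1": {"campaign_id": "c_100", "industry": "apparel"},
    "ad_2": {"campaign_id": "c_200", "industry": "beauty"},
}
SCENE_CONFIG: Dict[str, str] = {"scene_101": "search", "scene_102": "feed"}

def to_dwd(event: Dict[str, str],
           ad_dim: Dict[str, Dict[str, str]],
           scene_config: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Build a DWD record from an ODS event plus dimension and config lookups."""
    row: Dict[str, Optional[str]] = dict(event)
    attrs = ad_dim.get(event["ad_id"], {})
    # Missing dimension values become None instead of dropping the event.
    row["campaign_id"] = attrs.get("campaign_id")
    row["industry"] = attrs.get("industry")
    row["scene_name"] = scene_config.get(event.get("scene_id", ""))
    return row

print(to_dwd({"ad_id": "ad_1", "scene_id": "scene_101", "action": "click"}, AD_DIM, SCENE_CONFIG))
```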

b. Solution Drawbacks

- Data Duplication: Downstream consumers of the DWD layer may see duplicate data caused by business iterations or by failovers of ODS and DWD jobs. Whether to deduplicate in the DWD layer depends on business needs, but it is usually essential: undeduplicated data can, for example, show up as sudden year-on-year growth that advertisers notice and complain about.

- Missing DWS Layer: Because aggregation logic is often shared, a DWS layer between the DWD and application layers would be valuable. However, TT lacks upsert capability, so a DWS layer cannot overwrite an earlier aggregation result with the latest one. For instance, with a 5-minute window, the result aggregated at 10:05 is written to TT; the result aggregated at 10:10 should overwrite it, but TT retains both records. Every downstream consumer of TT must then deduplicate on its own, which is costly: in our tests, resource consumption at least doubled, and large data volumes made jobs unstable. Without a DWS layer, aggregated results are written directly to downstream online storage systems, including OceanBase, Hologres, and ClickHouse. Moreover, the real-time link only addresses timeliness: even with real-time anti-cheating, its precision is insufficient, and deduplication logic is still needed in the business context.

- No Schema: In the real-time link from ODS to DWD, the data is unstructured and has no fixed schema after parsing. Downstream users such as algorithm and BI teams must parse and compute it themselves, which increases costs and wastes resources.

- Resource and Efficiency: Advertisers want data that is both fast and accurate, so we also deployed an offline link, forming a typical lambda architecture: ODS data is synchronized to MaxCompute, processed by ETL into the DWD layer, aggregated into the ADS layer, and finally synchronized to online storage systems. Maintaining separate offline and real-time code bases doubles development and maintenance effort.
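The two deduplication problems above can be sketched in a few lines. The first function drops records replayed after a failover by keeping the first record per event ID; the second is the workaround every TT consumer needs for the 10:05/10:10 example, keeping only the newest result per key. Field names are hypothetical, and the example assumes the DWS result is a running per-day count re-emitted every 5 minutes.

```python
from typing import Dict, Iterable, List, Tuple

def dedup_by_event_id(records: Iterable[dict]) -> List[dict]:
    """Keep the first record per event_id, dropping replays caused by failover."""
    seen = set()  # in a streaming job this would be keyed state with a TTL
    out: List[dict] = []
    for r in records:
        if r["event_id"] not in seen:
            seen.add(r["event_id"])
            out.append(r)
    return out

def latest_per_key(log: Iterable[dict], key_fields: Tuple[str, ...]) -> Dict[tuple, dict]:
    """Keep the newest aggregation result per key from an append-only log."""
    latest: Dict[tuple, dict] = {}
    for r in log:
        k = tuple(r[f] for f in key_fields)
        # emit_time is zero-padded "HH:MM", so string order is chronological.
        if k not in latest or r["emit_time"] >= latest[k]["emit_time"]:
            latest[k] = r
    return latest

# Append-only TT topic: the 10:10 result does not replace the 10:05 result.
tt_log = [
    {"ad_id": "a", "day": "d1", "clicks": 100, "emit_time": "10:05"},
    {"ad_id": "a", "day": "d1", "clicks": 130, "emit_time": "10:10"},
    {"ad_id": "b", "day": "d1", "clicks": 7, "emit_time": "10:05"},
]
current = latest_per_key(tt_log, ("ad_id", "day"))
print(current[("a", "d1")]["clicks"])
```

Naively summing the raw TT log for ad `a` would report 230 clicks instead of 130, exactly the kind of inflated number that draws advertiser complaints.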

Lakehouse Solution Based on Paimon

How can we address the issues of the TT-based real-time data warehouse? In 2023 we turned to the Paimon technology stack: we began researching it in June 2023 and have gradually rolled it out across the business.

Solution Advantages:

- Primary Key Table: In this solution, the ODS layer stays the same as before, stored in TT with an identical ingestion process. However, the DWD layer replaces the original storage with Paimon, and a new DWS layer is introduced. Because Paimon supports upserts, the DWD and DWS layers are deduplicated through upsert writes, and downstream jobs consume their changelogs.

- Schema: Because Paimon tables have a schema, the algorithm, BI, and operations data needs mentioned earlier can now be served by querying the DWD and DWS layers directly. Previously these users needed our support; now the tables are already parsed and the data is ready for both real-time and offline queries.

- Development Efficiency: A unified schema for real-time and offline processing eliminates extra parsing, development, and computation overhead, and allows code to be reused across real-time and offline jobs.
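A toy in-memory model of what primary-key upserts plus changelog consumption buy downstream. It is not Paimon's implementation or API: it only mimics the contract that writing an existing key replaces the row, with changes exposed as Flink-style row kinds (+I, -U, +U, -D). In Paimon itself, how the changelog is produced is controlled by the `changelog-producer` table option. The summing consumer shows why retractions matter: downstream aggregates stay correct without any deduplication of their own.

```python
from typing import Dict, List, Tuple

Change = Tuple[str, dict]  # (row kind, row)

class PrimaryKeyTable:
    """Toy primary-key table: upserts replace rows and emit a changelog."""

    def __init__(self) -> None:
        self.rows: Dict[tuple, dict] = {}
        self.changelog: List[Change] = []

    def upsert(self, key: tuple, row: dict) -> None:
        old = self.rows.get(key)
        if old is None:
            self.changelog.append(("+I", row))
        else:
            # An update is exposed as a retraction of the old row plus the new row.
            self.changelog.append(("-U", old))
            self.changelog.append(("+U", row))
        self.rows[key] = row

    def delete(self, key: tuple) -> None:
        old = self.rows.pop(key, None)
        if old is not None:
            self.changelog.append(("-D", old))

def sum_from_changelog(changelog: List[Change], field: str) -> int:
    """Downstream consumer: maintain a SUM by adding +I/+U and subtracting -U/-D."""
    total = 0
    for kind, row in changelog:
        total += row[field] if kind in ("+I", "+U") else -row[field]
    return total

table = PrimaryKeyTable()
table.upsert(("a", "d1"), {"clicks": 100})   # 10:05 result
table.upsert(("a", "d1"), {"clicks": 130})   # 10:10 result overwrites it
table.upsert(("b", "d1"), {"clicks": 7})
print(table.rows, sum_from_changelog(table.changelog, "clicks"))
```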

Why does the offline link still need to synchronize ODS data? Mainly because the anti-cheating mechanism runs offline on batch data, which makes this step indispensable. The step has another advantage: it serves as a backup link. After the ODS data is parsed and corrected offline, it is written back into Paimon so that downstream queries can access the corrected data: if a corrected result exists, it is returned; otherwise the real-time data is queried directly. Once the whole process is complete, the data is synchronized to ClickHouse to support high-frequency downstream queries.
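The query-time rule described here (corrected data if it exists, real-time data otherwise) amounts to an override merge keyed by the table's primary key. A sketch assuming hypothetical `(ad_id, hour)` keys, with plain dicts standing in for the Paimon tables:

```python
from typing import Dict, Optional, Tuple

Key = Tuple[str, str]  # hypothetical (ad_id, hour) key

def serve(key: Key, realtime: Dict[Key, dict], corrected: Dict[Key, dict]) -> Optional[dict]:
    """Return the corrected row when the offline link has produced one, else the real-time row."""
    if key in corrected:
        return corrected[key]
    return realtime.get(key)

def merged_view(realtime: Dict[Key, dict], corrected: Dict[Key, dict]) -> Dict[Key, dict]:
    """Full merged snapshot: corrected rows override real-time rows with the same key."""
    view = dict(realtime)  # copy so the real-time data itself is left untouched
    view.update(corrected)
    return view

rt = {("a", "h10"): {"clicks": 130}, ("a", "h11"): {"clicks": 90}}
fixed = {("a", "h10"): {"clicks": 120}}  # e.g. after offline anti-cheating removed 10 clicks
print(merged_view(rt, fixed))
```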

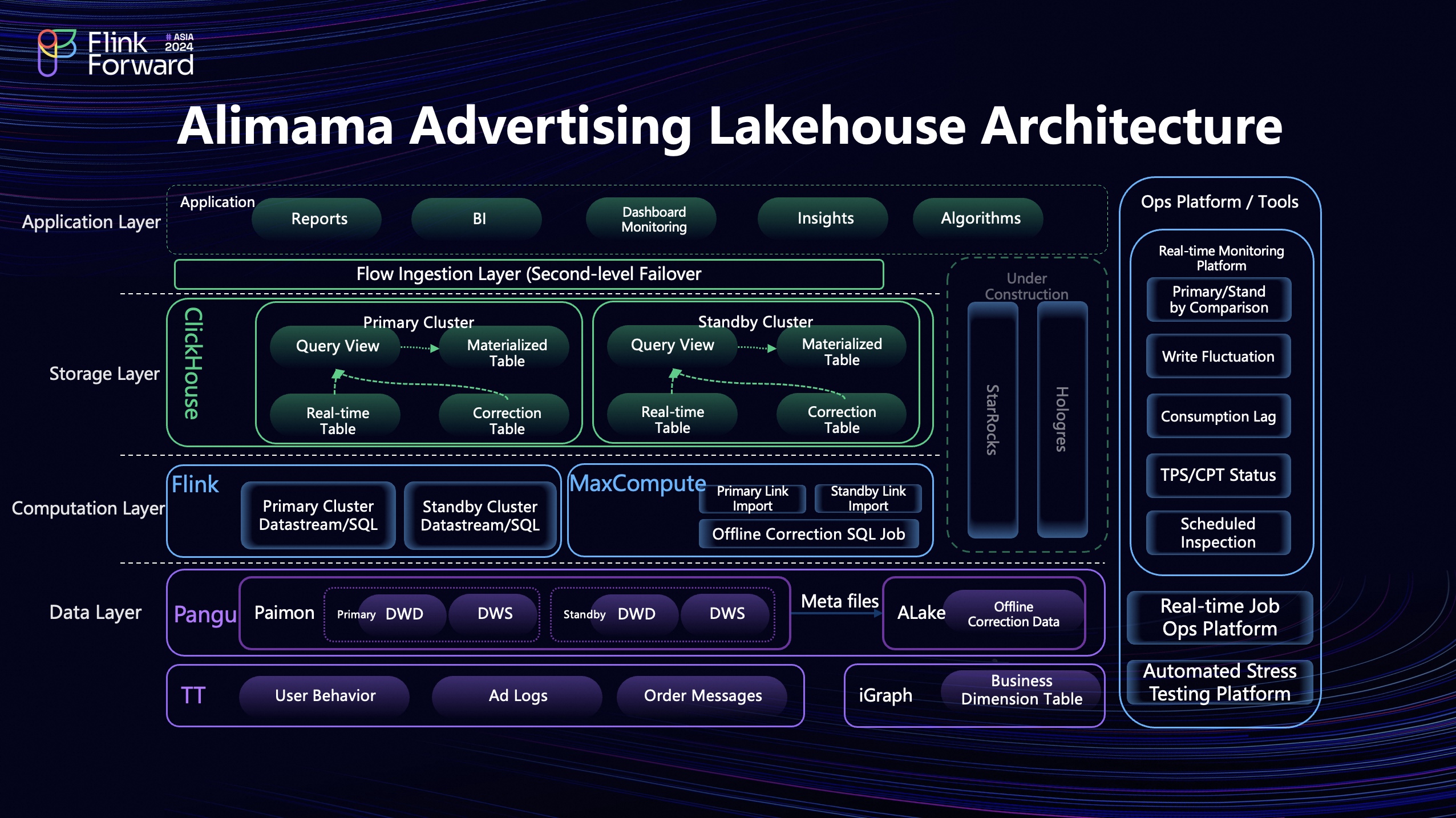

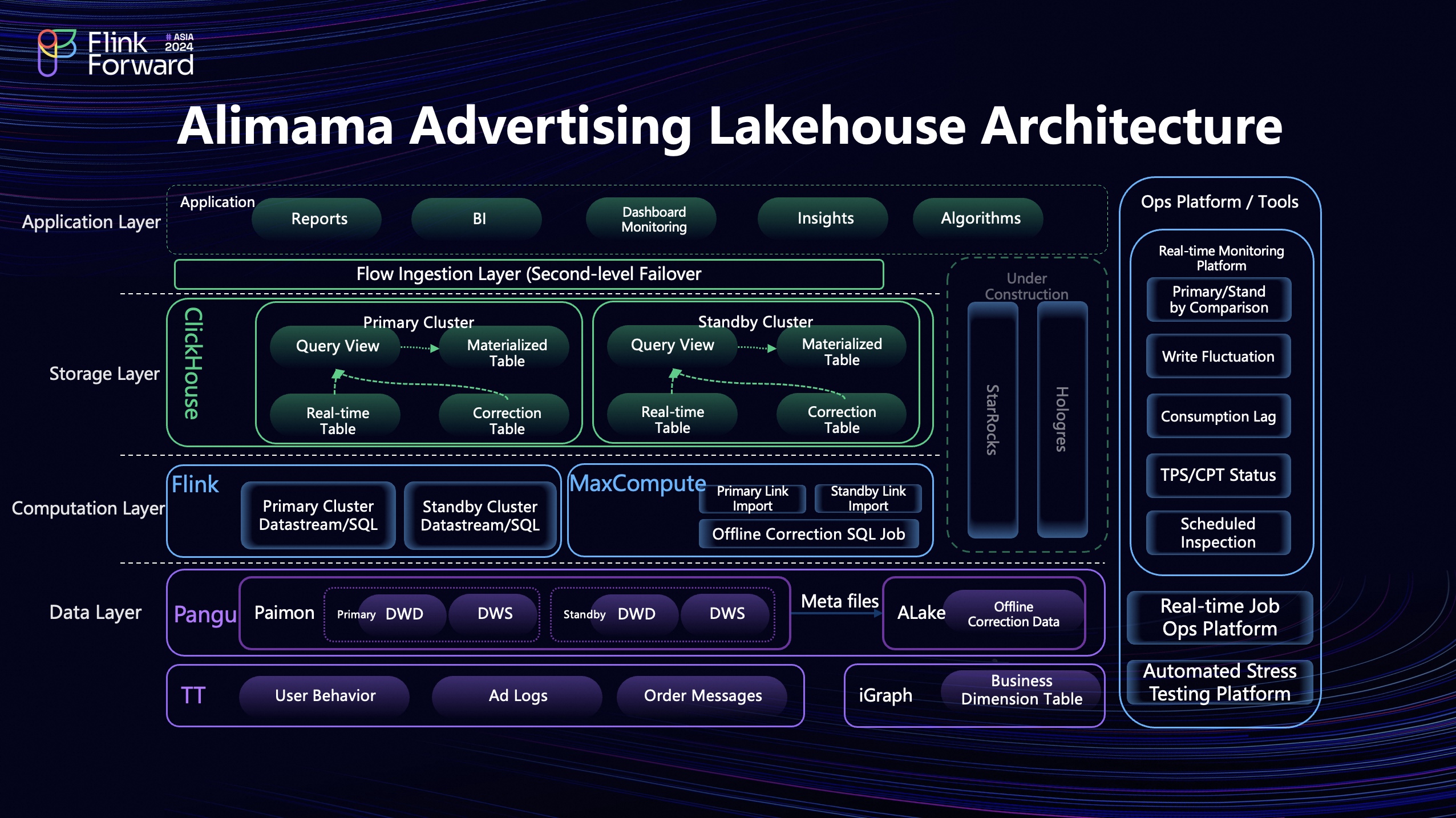

Alibaba Mama Advertising Lakehouse Architecture

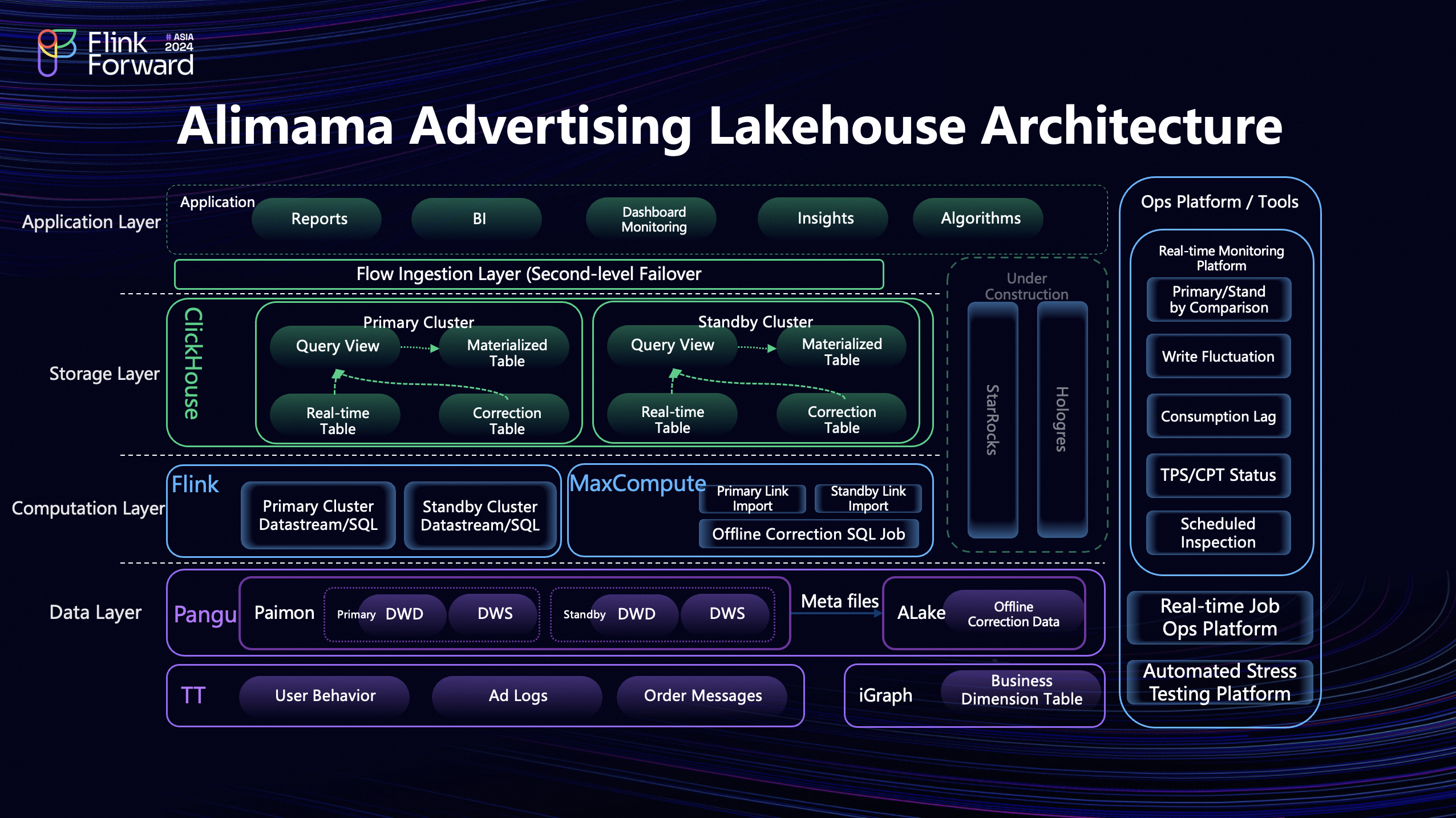

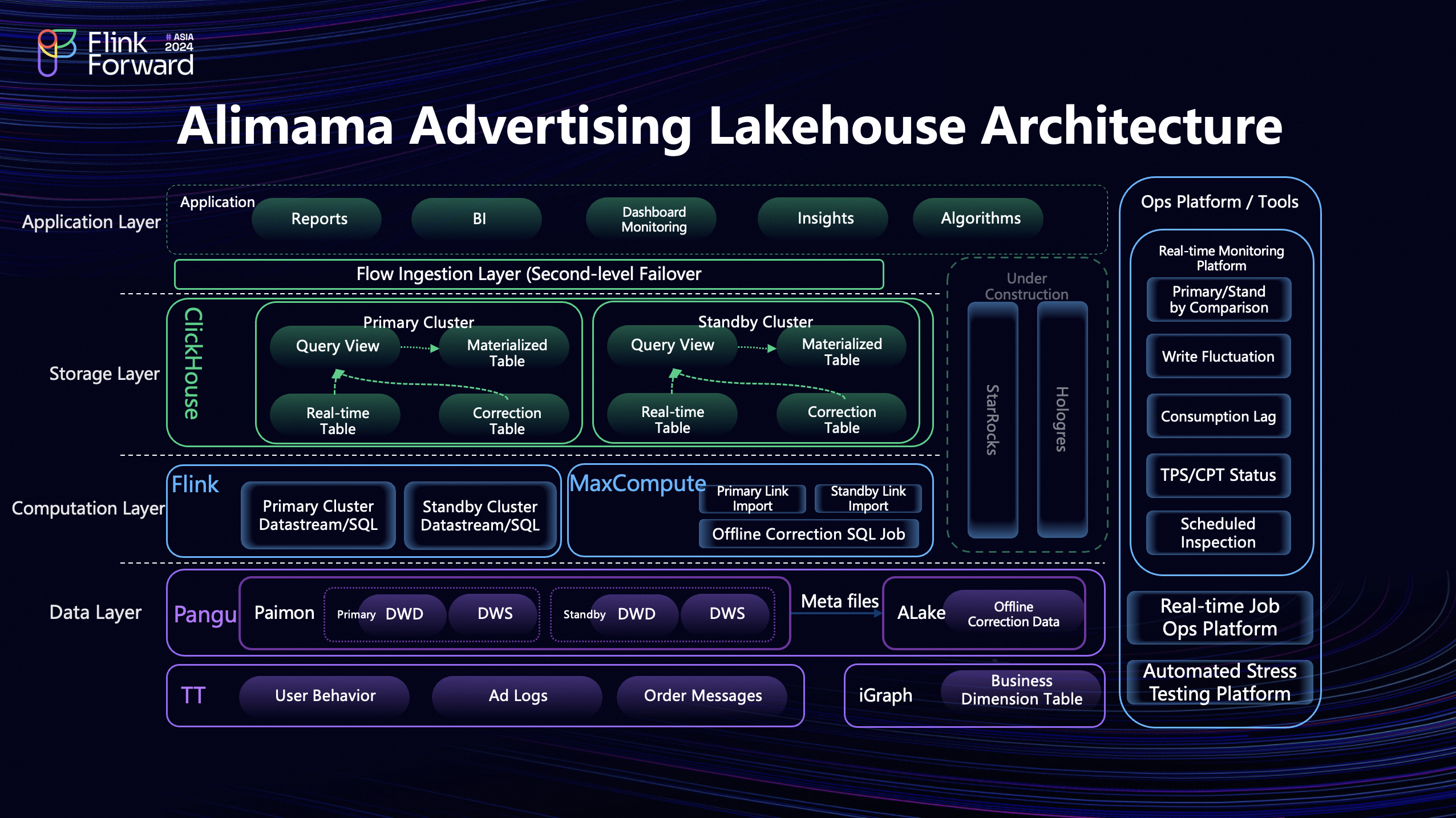

The figure illustrates Alibaba Mama's current advertising lakehouse architecture, which comprises four layers and an operations platform.

- Data Layer: Data still originates in TT; the entire system depends on TT, so this part remains unchanged. TT contains behavioral data, advertising data, and transaction conversion data. Dimension data from business databases is incrementally synchronized to TT, parsed, and written into iGraph. Flink performs ETL on the TT data and imports it into our data lake, namely Paimon tables for the DWD and DWS layers. Storage is duplicated across two systems, which provides remote disaster recovery, high availability, and gray release capabilities. The real-time data is served to consumers, while a separate offline link provides offline data correction; the two sets of data are ultimately merged.

- Compute Layer: All ETL processing, including writes to the final data stores, uses the Flink and MaxCompute computing engines.

- Storage Layer: Configured as dual systems, with primary and backup links each running their own synchronization tasks to keep synchronization reliable and independent.

- Application Layer: Comprises applications such as Mama reports, BI, algorithms, and Damo Disk.

- Operations Platform/Tools: Includes system monitoring, system operations, and automated stress testing.

This design effectively forms a three-link architecture, with two real-time links and one offline link, and we can switch between them flexibly in daily operation. The offline link backs up the entire system: if Flink runs into problems, offline data is still available as a safeguard. We hope never to need it, but the architecture must be prepared. It also serves as a backup plan for large-scale promotions.

For high-frequency, large-scale queries, we currently use ClickHouse, which can handle applications with TPS in the tens of thousands. For low-traffic scenarios or more flexible queries, MaxCompute is available, though its timeliness may be at the hourly level. We are also exploring StarRocks and Hologres for flexible point queries and analysis. The upper-layer applications are mainly reporting, BI monitoring, algorithms, and real-time and batch data insights. Together, these make up the four layers.

On the far right are our operations tools and platform. Because our jobs run at a large scale, we need extensive monitoring, such as inter-group latency and issue detection: write issues, consumption issues, issues with intermediate process state, and problems on specific nodes must all be caught, since monitoring has to cover the whole process globally. We also built a real-time operations platform and a stress testing platform, because stress testing is essential in big data scenarios: traffic and business change significantly every year, and skipping stress tests can lead to all kinds of issues.
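Switching between the three links can be pictured as a priority-ordered fallback. The sketch below is a hypothetical illustration, not the team's switching logic: the `Link` fields, the freshness threshold, and the rule that the offline link is acceptable whenever it is healthy are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Link:
    """Health view of one serving link (names and fields are hypothetical)."""
    name: str
    healthy: bool
    lag_seconds: float

def pick_link(links: List[Link], max_lag_seconds: float) -> Optional[Link]:
    """Choose a link in priority order: real-time links first, offline last.

    A real-time link is used only if it is healthy and fresh enough. The last
    (offline) link is accepted whenever it is healthy, however stale, because
    correct but delayed data is better than none.
    """
    if not links:
        return None
    for link in links[:-1]:
        if link.healthy and link.lag_seconds <= max_lag_seconds:
            return link
    fallback = links[-1]
    return fallback if fallback.healthy else None

links = [
    Link("realtime_primary", healthy=False, lag_seconds=30),
    Link("realtime_backup", healthy=True, lag_seconds=45),
    Link("offline", healthy=True, lag_seconds=3600),
]
chosen = pick_link(links, max_lag_seconds=300)
print(chosen.name if chosen else None)
```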

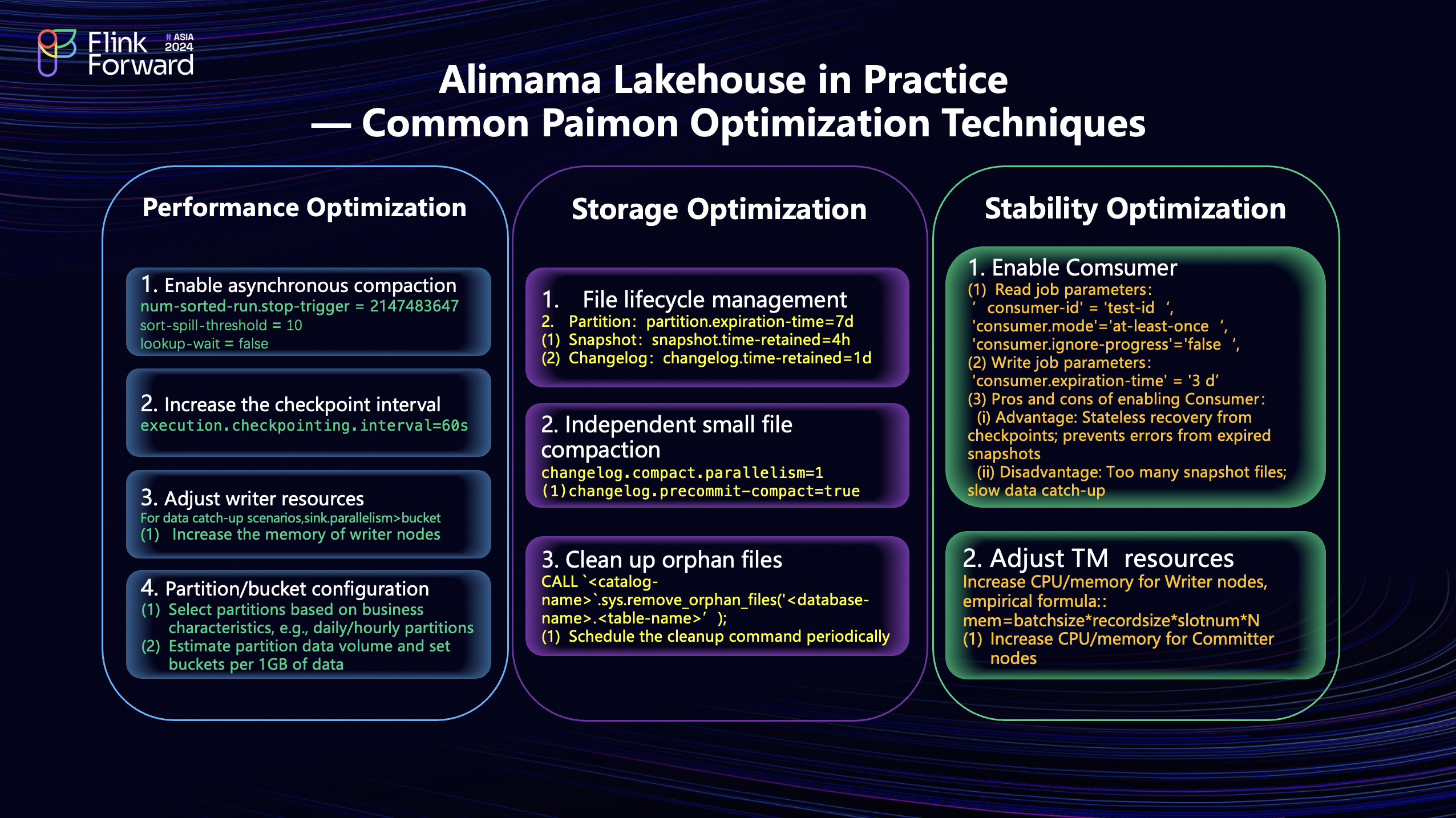

Common Optimization Techniques for Paimon

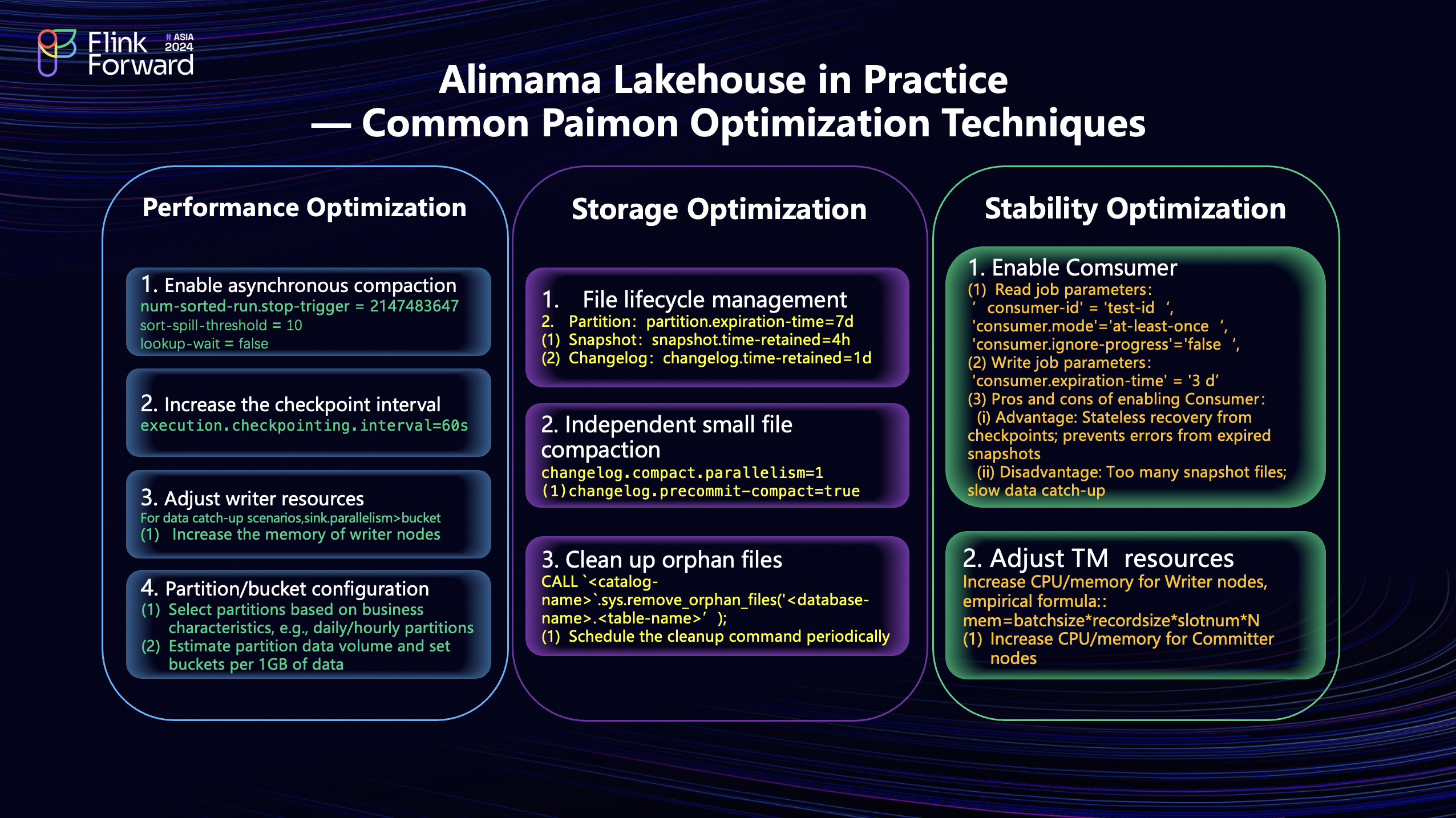

Earlier, I introduced the evolution of our entire lakehouse architecture and our vision for the next architecture. Here, I'd like to share some common optimization strategies we employ when building a lakehouse.

Performance Optimization:

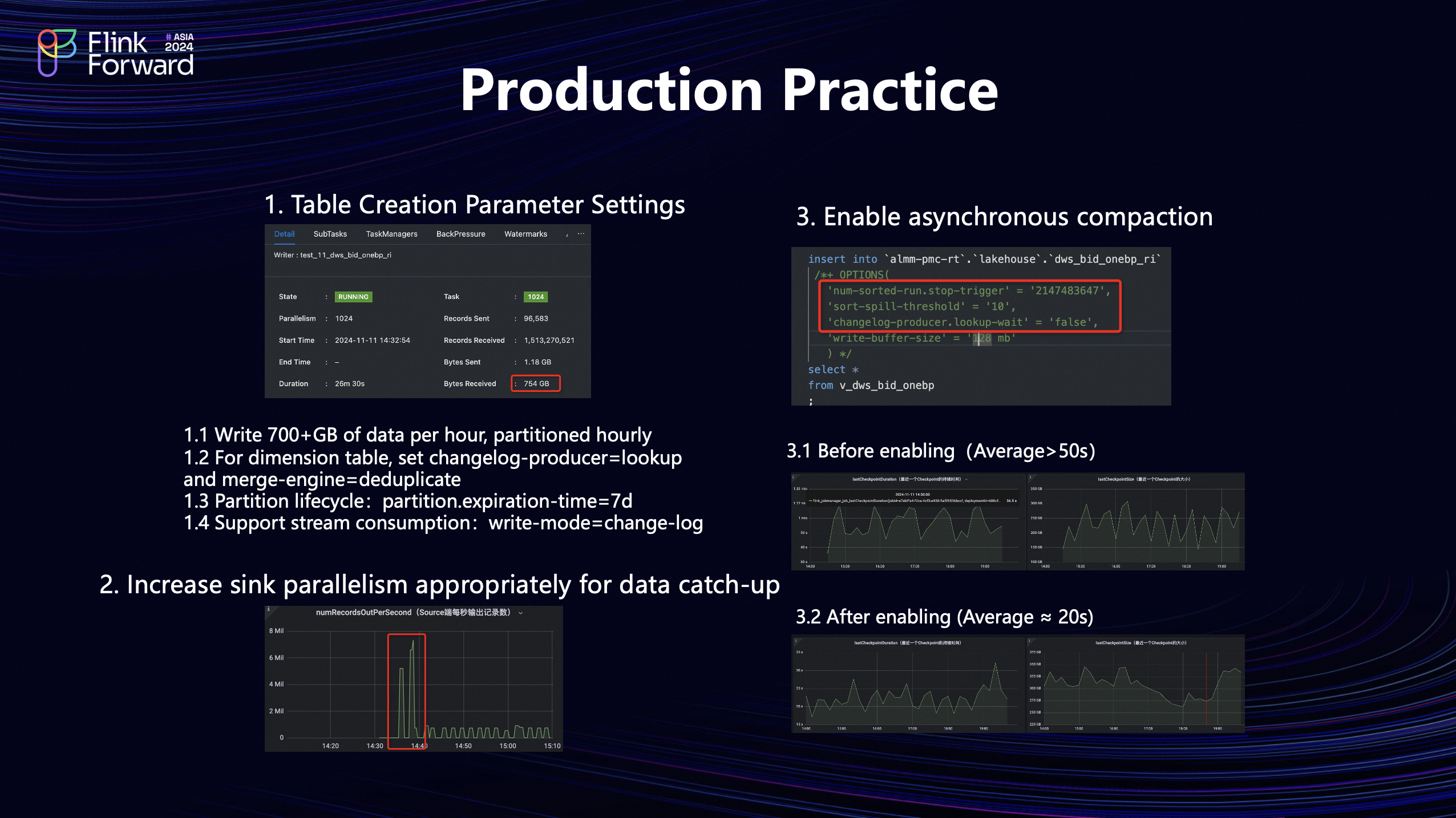

- Enabling asynchronous compaction, where the workload allows, is highly recommended: it significantly improves system throughput, including single-table write throughput, as well as stability.

- Adjusting the Checkpoint interval is crucial and depends on traffic. A system whose TPS is in the hundreds of thousands may not need adjustment, but systems at millions or tens of millions of TPS must lengthen the Checkpoint interval to avoid bottlenecks and the backpressure caused by frequent checkpoints.

- Writer node resources also need traffic-based estimation; for example, set parallelism according to the bucket count, which matters especially when replaying historical data or backfilling. Writer node memory should likewise be tuned to the scenario; high-traffic scenarios typically need adjustment.

- Finally, choose partitioning and bucket counts according to business characteristics. Once a Paimon table is created, changing its partitioning or bucket count requires a rescale, which may require stopping the job and is relatively costly. Therefore, plan for peak scenarios up front and evaluate whether performance will meet demand.
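Two of these tuning points, sketched in Python. `writer_parallelism` is a hypothetical helper encoding one heuristic: in fixed-bucket mode each bucket is handled by a single writer subtask, so parallelism above the bucket count leaves subtasks idle, and a divisor of the bucket count spreads buckets evenly. The option names in `ASYNC_COMPACTION_OPTIONS` are the ones the Paimon documentation gives for asynchronous compaction as we understand it; verify them against the documentation for your Paimon version, and treat the rendered WITH clause as illustrative.

```python
from typing import Dict

# Options the Paimon docs list for asynchronous compaction (verify for your version):
# writers no longer stall when sorted runs pile up, and compaction catches up later.
ASYNC_COMPACTION_OPTIONS: Dict[str, str] = {
    "num-sorted-run.stop-trigger": "2147483647",
    "sort-spill-threshold": "10",
}

def writer_parallelism(bucket_count: int, desired: int) -> int:
    """Largest parallelism <= min(desired, bucket_count) that divides bucket_count."""
    p = max(1, min(desired, bucket_count))
    while bucket_count % p:
        p -= 1
    return p

def with_clause(options: Dict[str, str]) -> str:
    """Render table options as a Flink SQL WITH (...) clause."""
    body = ",\n".join(f"  '{k}' = '{v}'" for k, v in options.items())
    return f"WITH (\n{body}\n)"

print(writer_parallelism(512, 300))
print(with_clause({"bucket": "512", **ASYNC_COMPACTION_OPTIONS}))
```

With 512 buckets and a desired parallelism of 300, the helper settles on 256 so that every writer subtask owns exactly two buckets instead of a mix of one and two.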

Storage Optimization:

- File Lifecycle Management: Every checkpoint generates new small files, so the longer the retention periods, the more small files accumulate. Although compaction merges files, lifecycle management cannot be ignored in high-traffic scenarios. This covers the lifecycle management of partitions, snapshots, and changelogs.

- Small File Merging: Files can be merged by running independent compaction jobs. Small file merging is a current focus area, and the community may propose new solutions, so stay tuned.

- Cleaning Obsolete Files: Early on, immature performance tuning or instability may cause frequent job failures, which leave behind orphan files that must be cleaned up. The community provides general-purpose commands that can be used to write custom cleaning scripts and schedule them. Note that storage optimization primarily addresses the number of files rather than their size.
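A minimal sketch of time-based partition expiration for hourly partitions. Paimon provides this natively through table options such as `partition.expiration-time` and `partition.timestamp-formatter`; the function below only illustrates the rule (parse the partition value as a timestamp and drop anything older than the retention window) and assumes partition values formatted as `yyyyMMddHH`.

```python
from datetime import datetime, timedelta
from typing import List

def expired_partitions(partitions: List[str], now: datetime, retention: timedelta,
                       fmt: str = "%Y%m%d%H") -> List[str]:
    """Return partitions whose timestamp is older than now - retention."""
    cutoff = now - retention
    return [p for p in partitions if datetime.strptime(p, fmt) < cutoff]

now = datetime(2024, 11, 11, 12)  # hypothetical current time
hourly = ["2024110312", "2024110411", "2024110412", "2024111111"]
print(expired_partitions(hourly, now, timedelta(days=7)))
```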

Stability Optimization: Stability issues are particularly pronounced under high traffic and can trigger failures. For example, unreasonable memory settings or underestimated peak traffic can leave a job unable to recover. And because we set expiration times for data and snapshot files, a job that stays down past those times may become unrecoverable once the files it needs have expired.

- Enabling Consumer: To address this, Paimon offers consumers: snapshots not yet read by a marked consumer ID are kept from expiring within a certain timeframe, so the job can recover after a failure. Note, however, that enabling a consumer increases the number of files because of the extended expiration. When the job catches up, it reads the retained snapshots one by one, so there is more data to replay and catching up is slower.

- Adjusting TM Resources: TM CPU and memory should be adjusted to the specific circumstances. The Paimon team provides empirical formulas for this, such as estimating memory requirements from a table's update frequency, record size, and bucket count. Pay particular attention to Paimon's final Commit node, which ensures eventual data consistency: when there are many partitions, giving it more CPU and memory keeps the system running smoothly.
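The trade-off of enabling consumers can be sketched as follows. This is a simplified, count-based model, not Paimon's expiration code (which also honors time-based settings such as `snapshot.time-retained`): the newest snapshots are always kept, and any active consumer pins every snapshot from its next-to-read position onward, so a job registered with a consumer ID can resume after a failure. Consumers idle longer than a TTL, analogous to Paimon's `consumer.expiration-time`, stop protecting snapshots. The data shapes are hypothetical.

```python
from typing import Dict, List, Tuple

def expirable_snapshots(snapshot_ids: List[int], retain_min: int,
                        consumers: Dict[str, Tuple[int, float]],
                        consumer_ttl_hours: float) -> List[int]:
    """Return snapshot IDs that may be deleted.

    consumers maps consumer ID -> (next snapshot it will read, hours since it last advanced).
    """
    ids = sorted(snapshot_ids)
    # The newest retain_min snapshots are always kept.
    candidates = ids[:-retain_min] if retain_min > 0 else ids
    # Consumers idle beyond the TTL are treated as expired and no longer pin snapshots.
    active = [nxt for nxt, idle in consumers.values() if idle <= consumer_ttl_hours]
    if active:
        # Everything from the slowest active consumer's position onward must survive.
        floor = min(active)
        candidates = [s for s in candidates if s < floor]
    return candidates

snapshots = list(range(1, 101))
print(len(expirable_snapshots(snapshots, 10, {}, 24)))
print(len(expirable_snapshots(snapshots, 10, {"report_job": (40, 2.0)}, 24)))
```

Without a consumer, 90 snapshots can be deleted; with a consumer stopped at snapshot 40, only 39 can, and the restarted job must replay 61 snapshots (40 through 100) to catch up, which is the slower catch-up described above.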

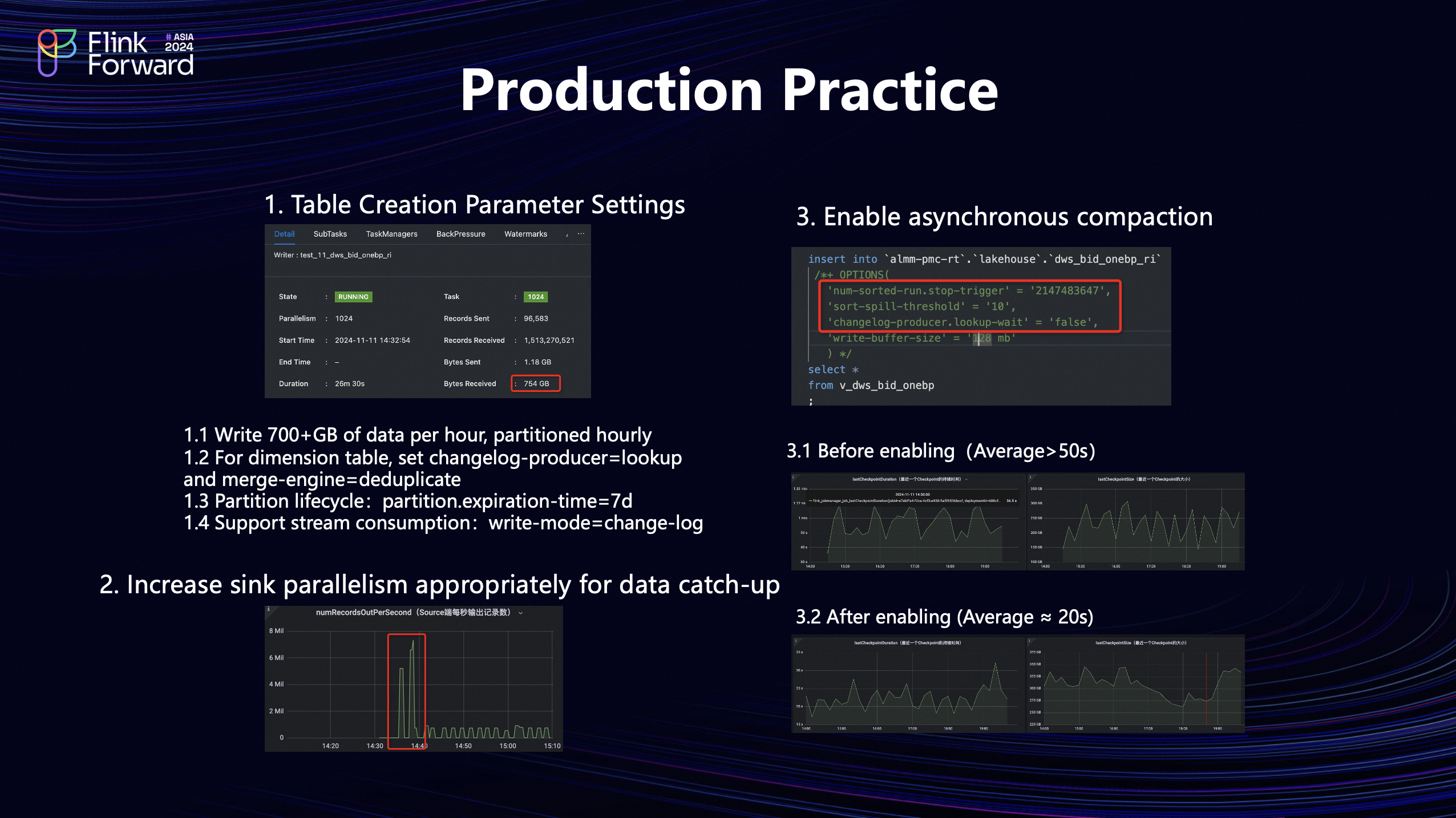

Here's an optimization example: a job that writes over 700 GB of data per hour. For this job, we set 512 buckets per partition, with one partition per hour and a seven-day lifecycle, and the table supports real-time streaming reads and writes. With these settings, TPS (transactions per second) reaches five to six million. Before asynchronous compaction was enabled, the average node switching time exceeded 50 seconds; after enabling it, this dropped to 20 seconds.
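Working through the numbers in this example helps explain the settings. A sketch assuming decimal gigabytes and an even spread of data across buckets (no key skew): 700 GB per hour over 512 buckets is roughly 1.37 GB per bucket per hour (about 194 MB/s overall), and seven days of hourly partitions means 168 partitions and 86,016 bucket directories to manage, which is why file lifecycle and compaction settings matter at this scale.

```python
def sizing(hourly_gb: float, buckets: int, retention_days: int,
           partitions_per_day: int = 24) -> dict:
    """Back-of-the-envelope numbers for an hourly-partitioned, fixed-bucket table."""
    retained_partitions = partitions_per_day * retention_days
    return {
        # Assumes data spreads evenly over buckets (no key skew).
        "gb_per_bucket_per_hour": hourly_gb / buckets,
        # Decimal units: 1 GB = 1000 MB.
        "write_mb_per_s": hourly_gb * 1000 / 3600,
        "retained_partitions": retained_partitions,
        # Each bucket is its own directory of data files, so this is roughly how
        # many bucket directories exist at steady state.
        "retained_buckets": retained_partitions * buckets,
    }

print(sizing(hourly_gb=700, buckets=512, retention_days=7))
```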

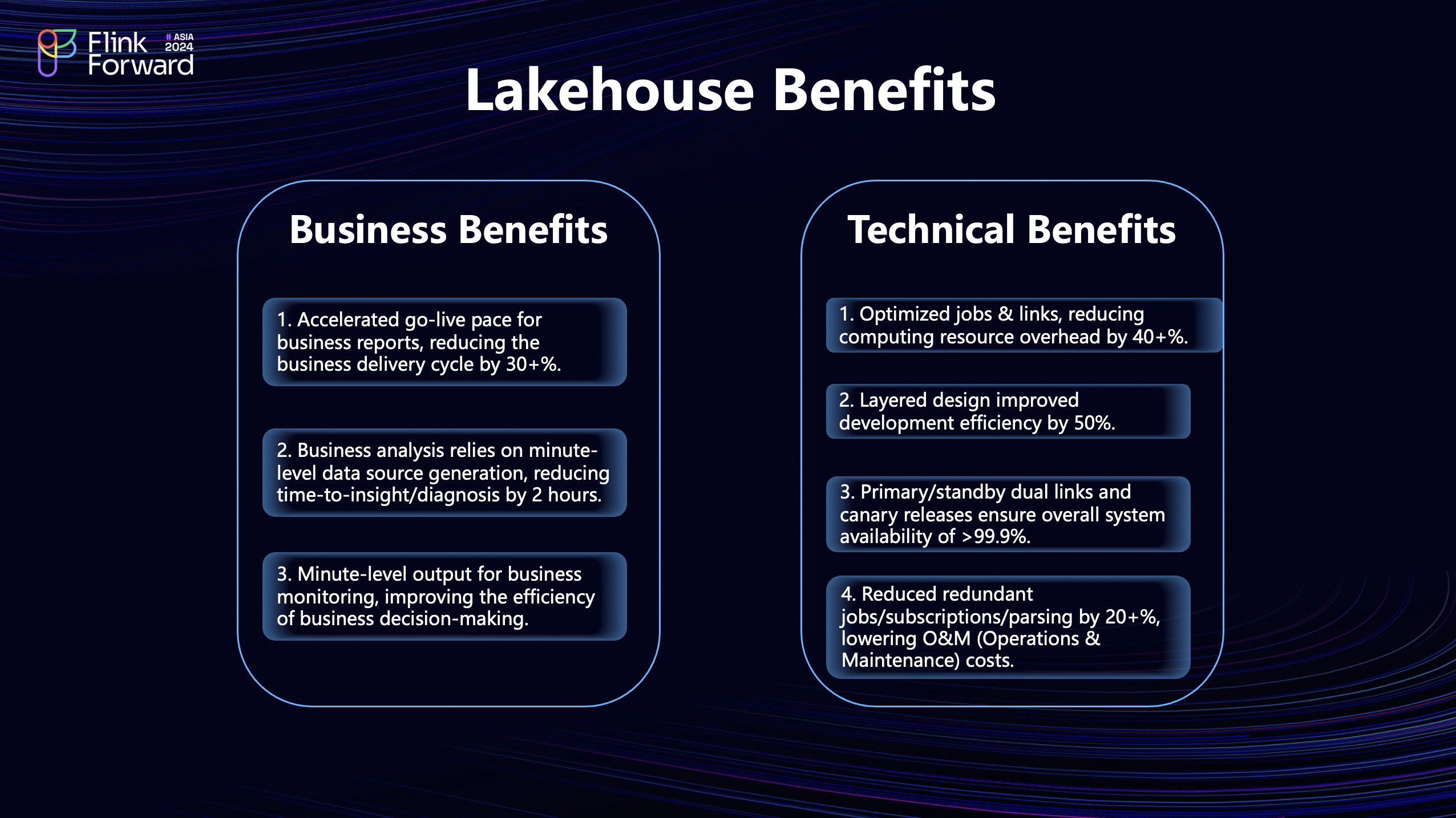

Lakehouse Benefits

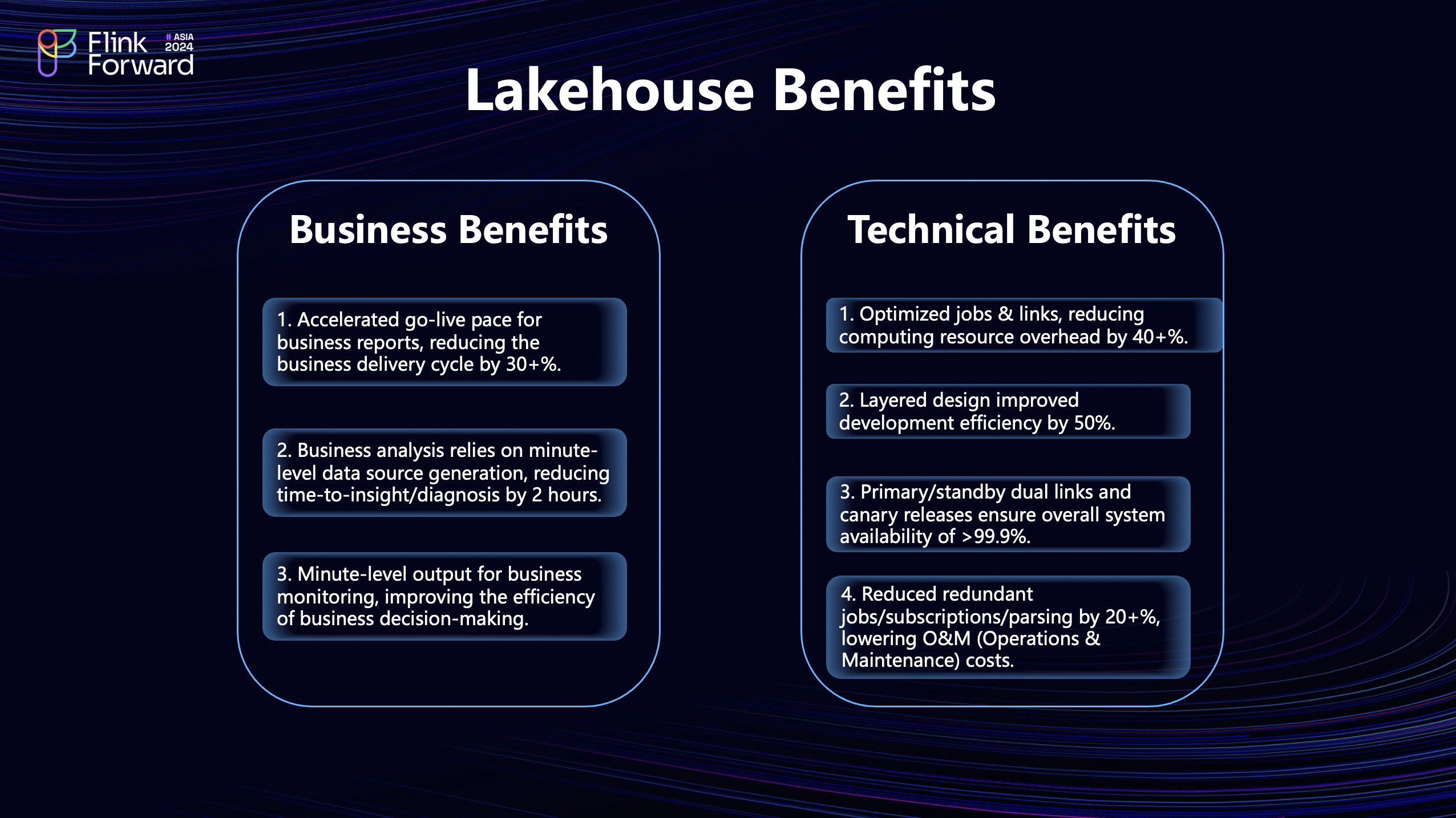

Next, I'd like to share the business and technical benefits brought about by these optimizations.

Business Benefits:

- The layered architecture simplifies many downstream iteration tasks: a new requirement often needs only a snippet of code for a Group By or Join, which significantly shortens the delivery cycle. Overall, iteration cycles have been shortened by roughly 30% or more.

- Real-time data from the DWD and DWS layers can be used for insight scenarios without offline processing, typically producing a batch of data within 15 minutes. Overall, data latency has been cut by up to about two and a half hours, two hours on average.

- Real-time data monitoring has greatly improved business operation efficiency. Previously, business operators felt anxious when data updates exceeded two hours, uncertain whether to increase the budget or halt campaigns. Now, with minute-level data output, operators can accurately assess campaign progress and whether budgets meet expectations.

Technical Benefits:

- The optimization primarily eased our computational resource burden. By eliminating duplicate consumption links, overall resource expenditure was reduced by over 40%.

- The layered design improved business efficiency, reducing development workload by 50%.

- The dual-link design described in the architecture meets the high availability requirement of three nines, i.e., 99.9% uptime. Over a year (365 days), this allows at most about 8.76 hours of downtime, that is, less than 9 hours. In reality, we experienced almost no prolonged downtime throughout the year; if we had, you would surely have seen us trending on social media.

- Lastly, redundant job subscriptions were eliminated. Subscribing to TT incurs costs, so duplicate consumption meant duplicate charges, and our Flink jobs also consume substantial, costly resources. Removing jobs therefore lowers these costs and, with fewer jobs to monitor constantly, reduces operational costs as well.
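The three-nines arithmetic, spelled out: the allowed downtime is (1 - availability) × period. A small sketch, with a hypothetical helper name, assuming a 365-day year:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760

def downtime_budget_hours(availability: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Maximum downtime allowed over a period for a given availability target."""
    return (1.0 - availability) * period_hours

for target in (0.999, 0.9999):
    hours = downtime_budget_hours(target)
    print(f"{target:.2%} -> {hours:.2f} h/year ({hours * 60:.0f} min)")
```

At 99.9% the budget is 8.76 hours per year; each additional nine divides it by ten.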
