By Wang Xining, Senior Alibaba Cloud Technical Expert

Istio is an open-source service mesh that provides the basic runtime and management elements required by a distributed microservices model. As organizations increasingly adopt cloud platforms, developers must use microservices models to achieve portability, and administrators must manage large distributed applications that are deployed in hybrid cloud or multi-cloud environments. Istio protects, connects, and monitors microservices in a consistent way, making microservice deployment easier to manage.

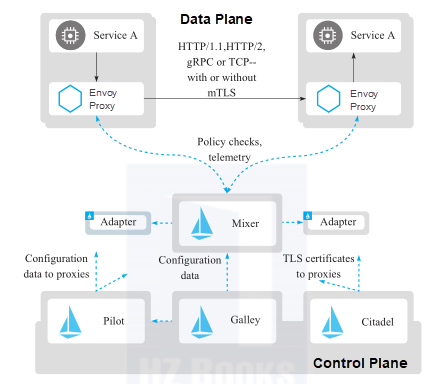

As for architecture design, the Istio service mesh is logically divided into the control plane and data plane. The control plane Pilot manages and configures the proxies for traffic routing and configures Mixer to implement policies and collect telemetry data. The data plane consists of a group of intelligent proxies, which are called Envoy, deployed in sidecar mode. These proxies regulate and control the network communication between microservices and Mixer.

Envoy is an excellent proxy for the service mesh. To maximize the value of Envoy, we need to use it in conjunction with the underlying infrastructure and components. The service mesh's data plane is formed by Envoy, and its control plane is built by using Istio's supporting components.

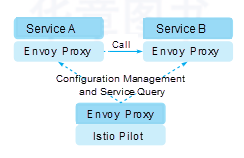

Envoy allows you to configure a group of service proxies by using a static configuration file or a group of discovery services. Then, the service proxies can be used to discover listeners, endpoints, and clusters at runtime. Istio Pilot implements Envoy's xDS APIs.

Envoy's discovery services rely on a service registry to discover service endpoints. Istio Pilot implements the xDS APIs and abstracts Envoy from any specific service registration. When Istio is deployed in Kubernetes, it uses Kubernetes' service registry to discover services. Other registries are used in the same way as HashiCorp Consul. The Envoy data plane is unaffected by these implementation details.

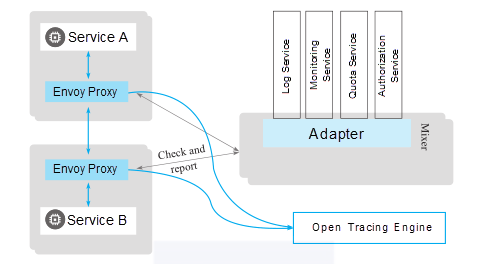

Envoy can send a lot of metrics and telemetry data. The data destination depends on the Envoy configuration. Istio provides the telemetry data receiver Mixer as part of its control plane. Envoy can send telemetry data to Mixer. Envoy sends distributed tracing data to open tracing engines, which comply with the OpenTracing API. Istio supports compatible open tracing engines and configures Envoy to send its tracing data to the specified engine.

The Istio control plane and the Envoy data plane constitute an attractive service mesh implementation. Istio and Envoy are developed in active communities and oriented to the next-generation service architecture. Istio is platform-independent and can run in various environments, including cross-cloud and internal environments, as well as Kubernetes and Mesos. You can deploy Istio in Kubernetes or in Nomad with Consul. Istio supports the services that are registered with Consul and the services that are deployed in Kubernetes or on virtual machines (VMs).

The control plane consists of four components: Pilot, Mixer, Citadel, and Galley. For more information, see the Istio architecture diagram.

Istio Pilot is used to manage traffic and control inter-service traffic flows and API calls. It allows you to better perceive the traffic status and identify potential problems. This improves the call stability and network robustness and helps keep applications stable even under unfavorable circumstances. With Istio Pilot, you can configure service-level properties such as circuit breakers, timeout, and retry. You can also configure continuous deployment tasks, such as canary release, A/B testing, and phased release through percentage-based traffic splitting. Pilot provides discovery services to Envoy and provides a traffic management function for intelligent routing and elastic capabilities, such as circuit breakers, timeout, and retry. Pilot converts advanced routing rules that control traffic behavior to Envoy-specific configurations and propagates them to Envoy at runtime. Istio provides powerful out-of-the-box fault recovery features, including timeout, retry mechanisms that support timeout budgeting and variable jitter, limits on the number of concurrent connections and requests destined for upstream services, periodic and active health checks for each member in a load balancing pool, and passive health checks.

Pilot abstracts the platform-specific service discovery mechanisms into a standard format, which is applicable to all sidecar proxies that conform to the data plane APIs. This loose coupling enables Istio to run in multiple environments, such as hnetes, Consul, and Nomad, while maintaining the same operation interface for traffic management.

Istio Mixer supports policy control and telemetry data collection. It isolates Istio's other components from the implementation details of all infrastructure backends. Mixer, a platform-independent component, is used to implement the access control and usage policies in the service mesh and collect telemetry data from Envoy and other services. Envoy extracts request-level properties and sends them to Mixer for evaluation.

Mixer includes a flexible plug-in model that enables Istio to interface with a variety of host environments and infrastructure backends. Then, Istio abstracts Envoy and Istio-managed services from these details. With Mixer, you can accurately control all interactions between the service mesh and infrastructure backends.

Unlike sidecar proxies that must minimize memory usage, Mixer runs independently, so it can use a fairly large cache and output buffer. They can be used as a highly scalable and available secondary cache for sidecar proxies.

Mixer is designed to provide high availability for each instance. Its local cache and buffer help reduce latency and shield infrastructure backends from failures, even when the backends are not responding.

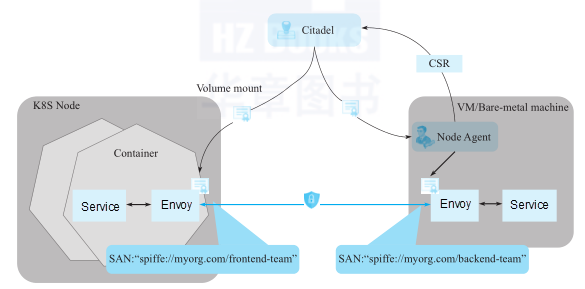

Istio Citadel provides powerful security features, including authentication, security policies, transparent Transport Layer Security (TLS) encryption, and authentication, authorization, and accounting (AAA) tools for service and data protection. Envoy can terminate or initiate TLS traffic to services in the service mesh. To implement these security features, Citadel must support the creation, signing, and rotation of certificates. Istio Citadel provides application-specific certificates that can be used to establish bidirectional TLS to protect traffic between services.

Istio Citadel ensures that services containing sensitive data can only be accessed from strictly authenticated and authorized clients. Citadel provides powerful inter-service and end-user authentication through built-in identity and credential management. Citadel can be used to upgrade unencrypted traffic in the service mesh. It allows O&M personnel to implement policies based on service identification instead of network control. Istio provides a configuration policy used to configure platform authentication on the server-side. This policy is not mandatory on the client-side. You can specify authentication requirements for specific services. Istio's key management system can automatically generate, distribute, rotate, and revoke keys and certificates.

Istio Role-based Access Control (RBAC) provides namespace-level, service-level, and method-level access control for services in the Istio service mesh. It features (1) role-based semantics, which are simple and easy to use; (2) service-to-service and end user-to-service authorization; and (3) flexibility through custom property support in roles and role binding.

Istio improves the security of microservices and their communications, including service-to-service communication and end user-to-service communication, without the need to modify service code. Istio provides a powerful role-based identity mechanism for each service to implement cross-cluster and cross-cloud interoperability.

Galley is used to verify the Istio API configurations written by users. Over time, Galley will take over Istio's top-level responsibilities for retrieving configurations and processing and distributing components. Galley isolates other Istio components from the retrieval of user configurations from underlying platforms, such as Kubernetes.

In short, Istio Pilot helps you simplify traffic management as your deployment scale gradually grows. Mixer provides robust and easy-to-use monitoring capabilities, allowing you to locate and fix problems quickly and effectively. Citadel reduces security workloads and allows you to focus on critical tasks during development.

Istio's architecture design has several key goals, which are critical to the system's response to large traffic volumes and high-performance service processing.

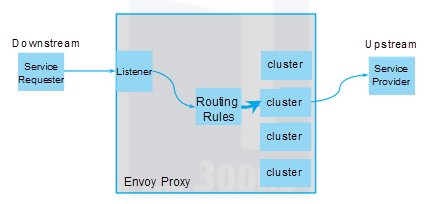

In the section that introduced the concept of service mesh, we learned about service proxies and how to use a proxy to create a service mesh in order to regulate the network communication between microservices. Istio uses Envoy as its default out-of-the-box service proxy. Envoy runs with all application instances in the service mesh, but they are not in the same container process. This forms the data plane of the service mesh. Applications can communicate with other services through Envoy. Envoy is a key component of the data plane and of the service mesh architecture as a whole.

Envoy was originally developed by Lyft to solve complex network problems during distributed system development. Envoy was made available as an open-source project in September 2016 and added to the Cloud Native Computing Foundation (CNCF) a year later. Compiled in C++, Envoy has high performance and runs stably and reliably even under high loads. Networks are generally transparent to applications, making it easy to identify the root causes of problems that occur on networks and applications. Based on this concept, Envoy is designed as a service architecture-oriented Layer-7 proxy and communication bus.

To better understand Envoy, we must be familiar with the following concepts:

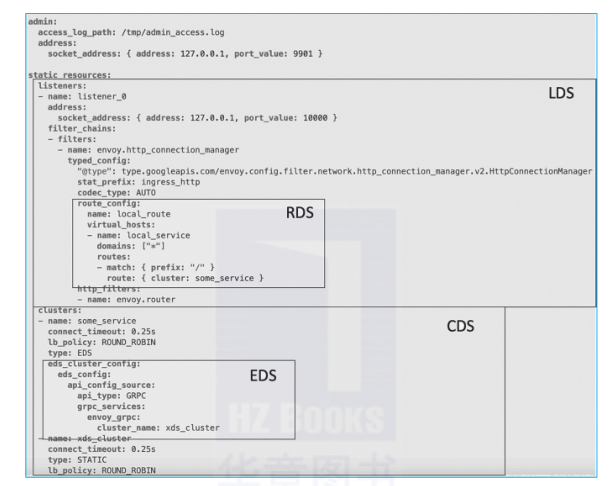

Listener Discovery Service (LDS), Route Discovery Service (RDS), Endpoint Discovery Service (EDS), and Secret Discovery Service (SDS).

Envoy provides many functions for inter-service communication. For example, Envoy can expose one or more listeners to connect to the downstream host and can expose listeners to external applications through ports. Envoy can also process listener-transmitted traffic by defining routing rules and direct the traffic to the target cluster. The roles and usage of these discovery services in Istio will be explained later.

Now that you understand these concepts related to Envoy, you probably want to understand the role of Envoy.

Envoy is a proxy that assumes an intermediary role in the network architecture. It can provide additional traffic management features, such as security, privacy protection, and policies. During inter-service calls, Envoy can shield clients from the service backend's topology details, simplify interactions, and prevent backend services from overloading. A backend service is a group of identical instances in the running state. Each instance processes a certain load.

A cluster is essentially a group of Envoy-connected and logically identical upstream hosts. How does a client know which instance or IP address to use while interacting with a backend service? Envoy provides the routing function. Through the SDS, Envoy discovers all members in a cluster, and actively checks each member's health status. Then, Envoy implements a load balancing policy to route requests to healthy members. Clients do not need to know the actual deployment details when Envoy implements load balancing across service instances.

Currently, Envoy provides two API versions: V1 and V2. The latter version was released in Envoy 1.5.0. To ensure smooth migration to API version 2, Envoy provides the --v2-config-only parameter upon startup. This parameter specifies the use of API version 2 in Envoy. The API version 2 is a superset of version 1 and compatible with it. In Istio 1.0 and later versions, Envoy explicitly supports API version 2. If you view the container startup command that uses Envoy as a sidecar proxy, you can see the following startup parameters, of which the --v2-config-only parameter is specified:

$ /usr/local/bin/envoy -c

/etc/istio/proxy/envoy-rev0.json --restart-epoch 0 --drain-time-s 45

--parent-shutdown-time-s 60 --service-cluster ratings --service-node

sidecar~172.33.14.2~ratings-v1-8558d4458d-ld8x9.default~default.svc.cluster.local

--max-obj-name-len 189 --allow-unknown-fields -l warn --v2-config-onlyThe -c parameter specifies the path of the bootstrap configuration file based on API version 2. This parameter is in JSON format. Other formats, such as YAML and Proto3, are also supported. The bootstrap configuration file is parsed based on API version 2. If parsing fails, the system determines whether to parse it as a JSON configuration file based on API version 1 by checking the [--v2-config-only] parameter. The other parameters are explained below to help you understand the Envoy startup configuration:

Envoy is an intelligent proxy driven by configuration files in JSON or YAML format. Envoy provides different configuration versions. The initial version 1 provides the original method for configuring Envoy upon startup. This version was deprecated. Currently, Envoy configuration v2 is supported. For more information about the differences between API versions 1 and 2, see https://www.envoyproxy.io/docs

This article only introduces API version 2 because it is the latest version and is currently used by Istio.

The Envoy API version 2 configuration is based on gRPC. These APIs can be called to reduce the time it takes Envoy to aggregate configurations through the streaming function. This removes API polling and allows the server to push updates to Envoy, without periodic Envoy polling.

The Envoy architecture makes it possible to use different types of configuration management methods. You can select a deployment method as needed. Simple deployment can be implemented through fully static configuration. Complex deployment can be implemented by incrementally adding complex dynamic configurations. The following configuration methods are available:

You can use the Envoy configuration file to specify a listener, routing rules, and cluster. The following example provides a simple Envoy configuration file:

static_resources:

listeners:

- name: httpbin-demo

address:

socket_address: { address: 0.0.0.0, port_value: 15001 }

filter_chains:

- filters:

- name: envoy.http_connection_manager

config:

stat_prefix: egress_http

route_config:

name: httpbin_local_route

virtual_hosts:

- name: httpbin_local_service

domains: ["*"]

routes:

- match: { prefix: "/"

}

route:

auto_host_rewrite: true

cluster: httpbin_service

http_filters:

- name: envoy.router

clusters:

- name: httpbin_service

connect_timeout: 5s

type: LOGICAL_DNS

# Comment out the following line to test on v6 networks

dns_lookup_family: V4_ONLY

lb_policy: ROUND_ROBIN

hosts: [{ socket_address: { address: httpbin, port_value: 8000 }}]This Envoy configuration file declares a listener that enables a socket on port 15001 and attaches a filter chain to it. The http_connection_manager filter uses routing instructions in the Envoy configuration file and routes all traffic to the httpbin_service cluster. In this example, the simple routing instructions are wildcards that match all virtual hosts. The last part of the configuration file defines the connection properties of the httpbin_service cluster. In this example, the EDS is set to LOGICAL_DNS. The load balancing algorithm ROUND_ROBIN is used to communicate with the upstream service httpbin.

This simple configuration file is used to create incoming traffic from a listener and route all traffic to the httpbin cluster. It also specifies the load balancing algorithm and connection timeout configuration.

Many configuration items are specified, such as the listener, routing rules, and destination cluster for traffic routing. This is an example of a fully static configuration file.

For more information about the parameters, see the Envoy document at www.envoyproxy.io/docs/envoy/latest/intro/arch_overview/service_discovery#logical-dns

In the preceding section, we mentioned that we could dynamically configure Envoy. The following section explains the concept of dynamic configuration for Envoy and how to use the xDS APIs for dynamic configuration.

Envoy can use a set of APIs to update configurations, without any downtime or restart. Envoy only needs a simple bootstrap configuration file, which directs configurations to the proper discovery service API. Other settings are dynamically configured. Envoy's dynamic configuration APIs are called xDS services and they include:

You can use one or more xDS services for configuration. Envoy's xDS APIs are designed for eventual consistency, and proper configurations are eventually converged. For example, Envoy may eventually use a new route to retrieve RDS updates, and this route may forward traffic to clusters that have not yet been updated in the CDS. As a result, the routing process may produce routing errors until the CDS is updated. Envoy introduces the ADS to solve this problem. Istio also implements the ADS, which can be used to modify proxy configurations.

For example, you can use the following configuration to allow Envoy to dynamically discover listeners:

dynamic_resources:

lds_config:

api_config_source:

api_type: GRPC

grpc_services:

- envoy_grpc:

cluster_name: xds_cluster

clusters:

- name: xds_cluster

connect_timeout: 0.25s

type: STATIC

lb_policy: ROUND_ROBIN

http2_protocol_options: {}

hosts: [{ socket_address: { address: 127.0.0.3, port_value: 5678 }}]In this way, you do not need to explicitly configure each listener in the configuration file. Envoy is instructed to find the correct listener configuration value at runtime by using the LDS API. The preceding configuration also defines a cluster named xds_cluster where the LDS API is located.

Through static configuration, you can intuitively view the information provided by each discovery service.

This article is adapted from the book "Istio in Action" and reproduced with the consent of the publisher. This book was written by Alibaba Cloud senior technical expert Wang Xining and introduces Istio's basic principles and development practices. It contains a large number of selected cases and a great deal of reference code. You can download it to quickly get started with Istio development. Gartner believes that the service mesh will become the standard technology of all leading container management systems in 2020. If you are interested in microservices and cloud native, you will find a lot of interesting information in the book.

How to Manage Multi-cluster Deployment with Istio: Multiple Control Planes

56 posts | 8 followers

FollowAlibaba Clouder - February 14, 2020

DavidZhang - December 30, 2020

Alibaba Cloud Native - November 3, 2022

Alibaba Developer - January 29, 2021

Alibaba Cloud Native Community - April 6, 2023

Xi Ning Wang(王夕宁) - July 1, 2021

56 posts | 8 followers

Follow ApsaraDB for MyBase

ApsaraDB for MyBase

ApsaraDB Dedicated Cluster provided by Alibaba Cloud is a dedicated service for managing databases on the cloud.

Learn More Microservices Engine (MSE)

Microservices Engine (MSE)

MSE provides a fully managed registration and configuration center, and gateway and microservices governance capabilities.

Learn More Server Load Balancer

Server Load Balancer

Respond to sudden traffic spikes and minimize response time with Server Load Balancer

Learn More ACK One

ACK One

Provides a control plane to allow users to manage Kubernetes clusters that run based on different infrastructure resources

Learn MoreMore Posts by Xi Ning Wang(王夕宁)