By Sai Sarath Chandra, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

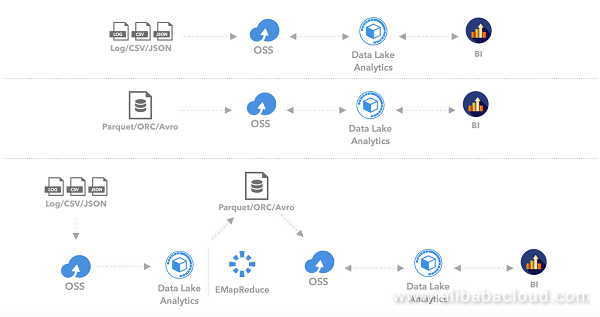

In this tutorial, we will analyze raw data with Tableau using Data Lake Analytics. We will analyze the files that are available in Object Storage Service (OSS).

We will be performing the following steps in sequence:

Firstly, you have to activate Alibaba Cloud Data Lake Analytics using the Console or through the product page. After successful activation, you will receive a mail containing the credentials for accessing Data Lake Analytics.

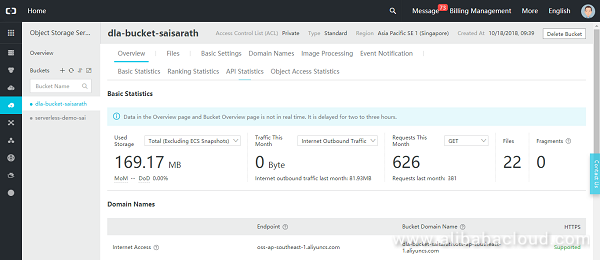

You have to create a bucket in OSS console/OSS Browser in the same region. So assuming if you are using Data Lake Analytics outside China, you can use the Singapore region. This means that the data has to be stored in the OSS Bucket in Singapore. The choice of Availability Zone does not matter.

Grant permissions to Data Lake Analytics to access OSS in the RAM Console or by clicking the below link:

Upload the respective files into the bucket, either by console or browser.

The data which I used for this article is available in the GitHub link below:

https://github.com/saichandu415/Public-Datasets

We use Data Lake Analytics SQL to create the schema and tables. All the information related to the SQL are found here: https://www.alibabacloud.com/help/doc-detail/72005.htm

We will create a new schema named 'ecomm'

CREATE SCHEMA ecomm WITH DBPROPERTIES(

LOCATION = 'oss://<Bucket-Name>/brazilian-ecommerce/',

catalog='oss'

);The schema creation is different, you need to specify the LOCATION of where data files are located or in which bucket they are in. Make sure It should be ending with '/' and don't forget to mention the catalog section. By default we can create 100 schemas, 4096 tables under each schema, and 990 columns in each table

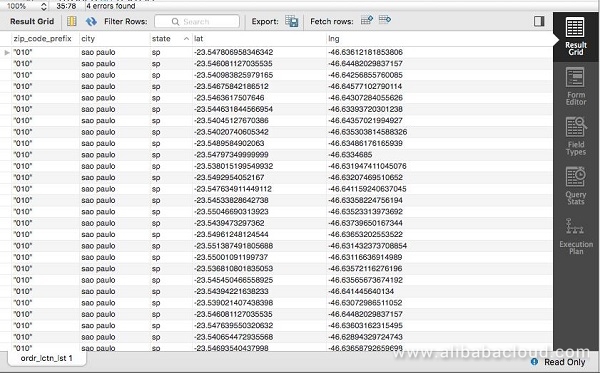

USE ecomm;We will import the file(geolocation_olist_public_dataset.csv) data contains all the locations of all the orders, by creating a table under the schema by using the following command

CREATE EXTERNAL TABLE ordr_lctn_lst(

zip_code_prefix STRING,

city STRING,

state STRING,

lat STRING,

lng STRING

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

STORED AS TEXTFILE

LOCATION 'oss://<bucket-name>/brazilian-ecommerce/geolocation_olist_public_dataset.csv'

TBLPROPERTIES ("skip.header.line.count"="1");All the SQL data types are available and will soon be updated in the documentation, since all the .csv files are updated with the Header data in the first entry of data. We can ignore that by using the detail by using the TBLPROPERTIES with skip.header.line.count

You can query the data by running following command and verify the data

select * from ecomm.ordr_lctn_lst;

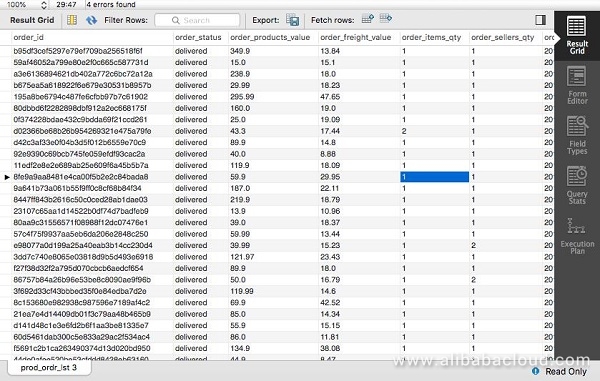

We also have file(olist_public_dataset_v2.csv) that consists of all the order details with all the below columns.

CREATE EXTERNAL TABLE prod_ordr_lst(

order_id STRING,

order_status STRING,

order_products_value DOUBLE,

order_freight_value DOUBLE,

order_items_qty INT,

order_sellers_qty INT,

order_purchase_timestamp TIMESTAMP,

order_aproved_at TIMESTAMP,

order_estimated_delivery_date TIMESTAMP,

order_delivered_customer_date TIMESTAMP,

customer_id STRING,

customer_city STRING,

customer_state STRING,

customer_zip_code_prefix INT,

product_category_name STRING,

product_name_length INT,

product_description_length INT,

product_photos_qty INT,

product_id STRING,

review_id STRING,

review_score INT,

review_comment_title STRING,

review_comment_message STRING,

review_creation_date TIMESTAMP,

review_answer_timestamp TIMESTAMP

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

STORED AS TEXTFILE

LOCATION 'oss://bucket-name/brazilian-ecommerce/olist_public_dataset_v2.csv'

TBLPROPERTIES ("skip.header.line.count"="1");TBLPROPRTIES("skip.header.line.count") can also be used to skip multiple lines, the logic of the skip.header.line.count is as below:

Suppose x is the value of skip.header.line.count; n is the number of lines in the data file:

You can verify the data from by executing,

Select * from ecomm.prod_ordr_lst

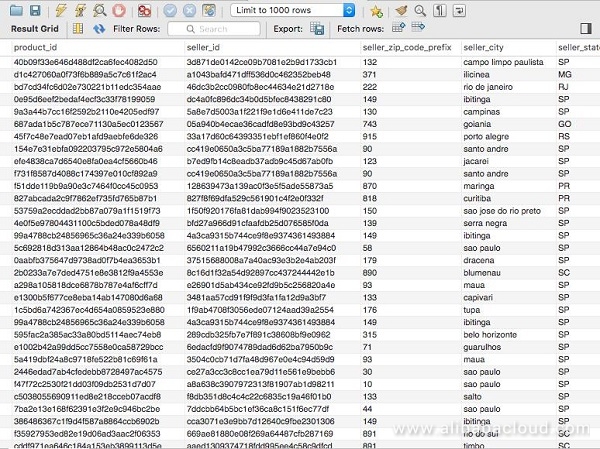

We need to import seller order list by using the following command

CREATE EXTERNAL TABLE slr_ordr_lst(

order_id STRING,

product_id STRING,

seller_id STRING,

seller_zip_code_prefix INT,

seller_city STRING,

seller_state STRING

)

ROW FORMAT DELIMITED FIELDS TERMINATED BY ','

STORED AS TEXTFILE

LOCATION 'oss://dla-bucket-saisarath/brazilian-ecommerce/sellers_olist_public_dataset_.csv'

TBLPROPERTIES ("skip.header.line.count"="1");You can verify the data by running a select command

SELECT * FROM slr_ordr_lst;

Install latest version of Tableau.

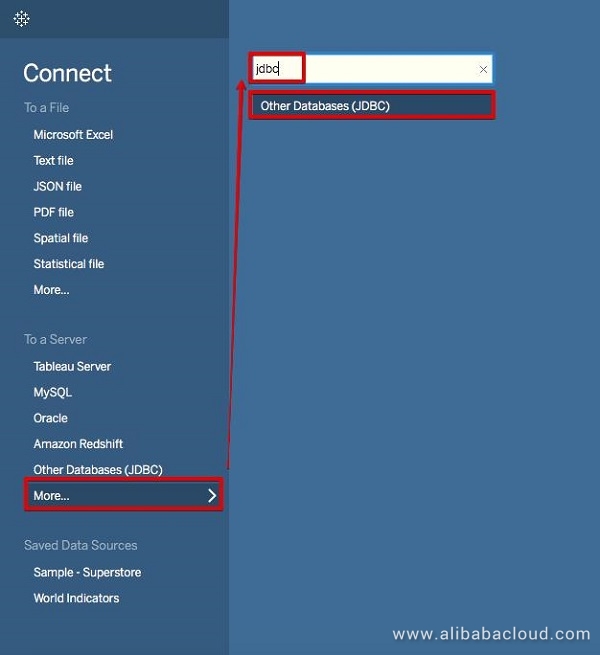

Data Lake Analytics currently support only JDBC Connection to other analysis tools, you can select the 'JDBC'

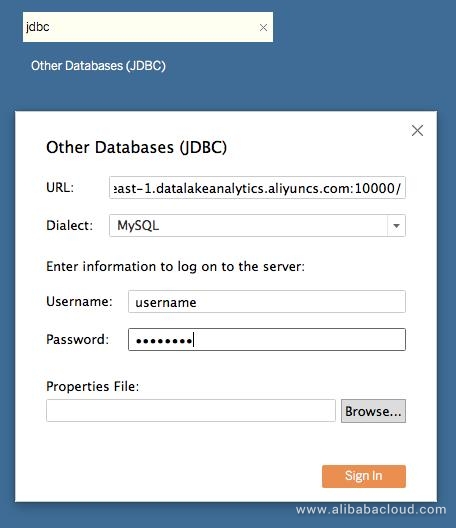

The only database connection string is below, since Data Lake Analytics is only supported in Singapore.

jdbc:mysql://service.ap-southeast-1.datalakeanalytics.aliyuncs.com:10000/You need to create a JDBC connection in Tableau. Once after connection you will be able to see the below dashboard

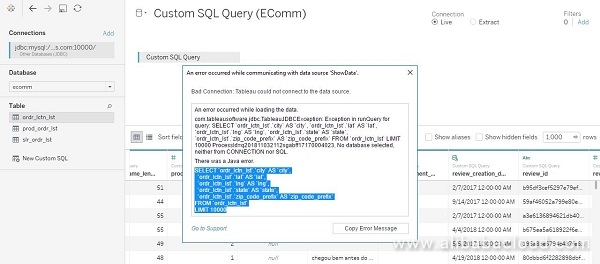

For some reason the Data Lake Analytics Data source, double clicking the table didn't show the data. This is a simple fix. You can copy the SQL statement from the error and can update by adding "ecomm"

SELECT `ordr_lctn_lst`.`city` AS `city`,

`ordr_lctn_lst`.`lat` AS `lat`,

`ordr_lctn_lst`.`lng` AS `lng`,

`ordr_lctn_lst`.`state` AS `state`,

`ordr_lctn_lst`.`zip_code_prefix` AS `zip_code_prefix`

FROM `ecomm`.`ordr_lctn_lst`

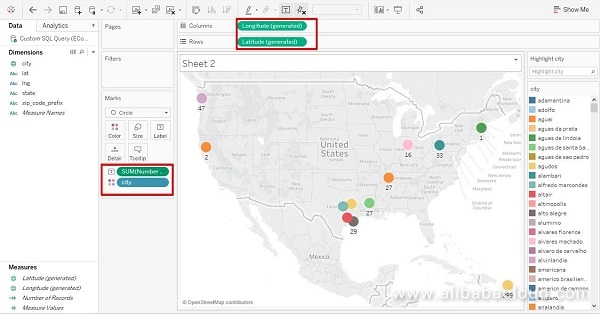

LIMIT 100000After copying, you need to add the table name highlighted above. Switch to sheet and select map type.

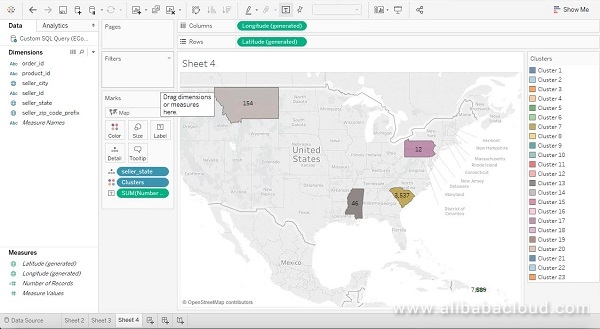

You need to choose "Longitude" to the columns and "Latitude" to the rows and select the "Number of Records" & "City" to the marks section.

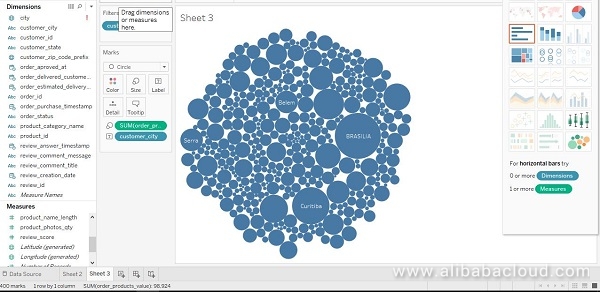

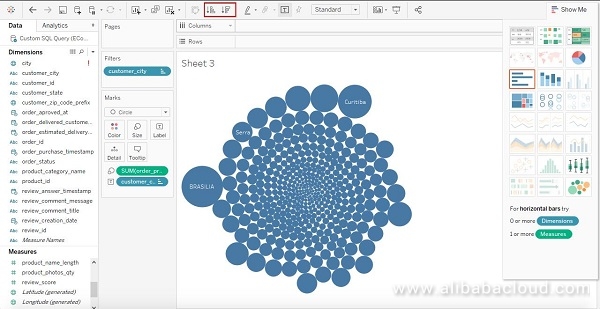

Now we analyze the orderlist, using the prod_ordr_lst. We analyze the data using the "packed bubbles" type. Both the products value and the city

We can do some interesting things like representing the data by sorting we can use ascending format.

We can also analyze the seller order list(slr_ordr_lst) and find out how many products are being sold from each state as below, the custom SQL query is

SELECT `slr_ordr_lst`.`order_id` AS `order_id`,

`slr_ordr_lst`.`product_id` AS `product_id`,

`slr_ordr_lst`.`seller_city` AS `seller_city`,

`slr_ordr_lst`.`seller_id` AS `seller_id`,

`slr_ordr_lst`.`seller_state` AS `seller_state`,

`slr_ordr_lst`.`seller_zip_code_prefix` AS `seller_zip_code_prefix`

FROM `ecomm`.`slr_ordr_lst`

LIMIT 10000

Important Observations and Tips:

To learn more about Alibaba Cloud Data Lake Analytics, visit www.alibabacloud.com/products/data-lake-analytics

Shape Alibaba Cloud's Future: New Round of Alibaba Cloud MVP Nomination for December 2018 Opens Now!

2,605 posts | 747 followers

FollowAlibaba Clouder - August 7, 2020

Alibaba Clouder - September 24, 2019

Alibaba Cloud Indonesia - August 28, 2020

Alibaba Cloud Indonesia - September 4, 2020

Alibaba Cloud New Products - January 19, 2021

ApsaraDB - February 20, 2021

While creating the schema i'm getting the exception of nopreviledgeaccess access denied error even though I have admin role in RAM

2,605 posts | 747 followers

Follow MaxCompute

MaxCompute

Conduct large-scale data warehousing with MaxCompute

Learn More Quick BI

Quick BI

A new generation of business Intelligence services on the cloud

Learn More OSS(Object Storage Service)

OSS(Object Storage Service)

An encrypted and secure cloud storage service which stores, processes and accesses massive amounts of data from anywhere in the world

Learn MoreMore Posts by Alibaba Clouder

Raja_KT March 1, 2019 at 8:10 am

Good one . But not sure of the performance with Big data.