Over the last year, Alibaba Cloud has developed a wide range of advanced features for the Application Load Balancer (ALB) Ingress controller. These features are designed to facilitate configuration management, intelligently block errors, implement disaster recovery, improve user experience, and safeguard customer businesses.

• To support more ALB Ingress features and improve user experience, ALB Ingresses now support AScript, the slow start mode, and connection draining.

• To facilitate configuration migration, ALB provides the ingress2albconfig tool, which helps you efficiently migrate configurations from NGINX Ingresses to ALB Ingresses.

• To facilitate configuration verification, ALB Ingresses integrate a webhook service that blocks YAML configuration errors. The webhook detects configuration errors quickly and at a finer granularity, which reduces reconciliation failures in the cluster.

• To enhance disaster recovery capabilities, ALB Ingresses can be deployed as multi-cluster gateways, which provide more comprehensive disaster recovery.

ALB supports AScript, which allows you to create scripts to flexibly customize features. When the standard ALB configurations cannot meet your business requirements, you can use AScript to precisely control and adjust traffic management policies.

AScript runs custom scripts that are triggered at different stages of request processing, so that traffic is handled according to your own logic. AScript is applicable to scenarios such as content delivery, security hardening, and performance optimization, where it can implement custom load balancing policies. The new version of the ALB Ingress controller allows you to configure AScript in AlbConfigs for finer-grained traffic management, which helps you maintain service performance and user experience in complex and changing business environments. The following procedure shows how to use AScript to customize scripts:

1. In the ascript_configmap.yaml file, create a ConfigMap for the script. Sample code:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ascript-rule
  namespace: default
data:
  # AScript source: if the client IP matches the pattern, add a debug
  # response header and return HTTP 403.
  scriptContent: |
    if match($remote_addr, '127.0.0.1|10.0.0.1') {
      add_rsp_header('X-IPBLOCK-DEBUG', 'hit')
      exit(403)
    }
```

2. In the YAML file of the AlbConfig, specify the position and parameters for the script. Sample code:

```yaml
apiVersion: alibabacloud.com/v1
kind: AlbConfig
metadata:
  name: default
spec:
  config:
    name: xiaosha-alb-test-1
    addressType: Intranet
  listeners:
    - port: 80
      protocol: HTTP
      aScriptConfig:
        - aScriptName: ascript-rule     # same name as the ConfigMap in step 1
          enabled: true
          position: RequestFoot         # stage of request processing at which the script runs
          configMapNamespace: default   # namespace of the ConfigMap
          extAttributeEnabled: true
          extAttributes:
            - attributeKey: EsDebug
              attributeValue: test1
```

For more information about AScript, visit https://www.alibabacloud.com/help/en/slb/application-load-balancer/user-guide/programmable-script/
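The decision logic of the ascript-rule ConfigMap can be reasoned about offline. The following Python sketch mimics that logic; it is not AScript, and it assumes that AScript's `match` behaves like an unanchored regular-expression search, which you should confirm in the AScript reference. Under that assumption, the pattern `127.0.0.1|10.0.0.1` would also match addresses such as `10.0.0.100`, because `.` matches any character and the pattern is not anchored. The sketch compares the original pattern with an anchored, escaped variant; the names `evaluate`, `LOOSE_PATTERN`, and `STRICT_PATTERN` are hypothetical.

```python
import re

# Hypothetical emulation of the ascript-rule logic; NOT the AScript runtime.
# Assumption: AScript's match() behaves like Python's unanchored re.search().
LOOSE_PATTERN = "127.0.0.1|10.0.0.1"

# Anchored variant: escape the dots and require the whole address to match.
BLOCKED_IPS = ["127.0.0.1", "10.0.0.1"]
STRICT_PATTERN = "^(?:" + "|".join(re.escape(ip) for ip in BLOCKED_IPS) + ")$"


def evaluate(remote_addr: str, pattern: str):
    """Return (status, extra_response_headers) as the rule would decide."""
    if re.search(pattern, remote_addr):
        # Mirrors add_rsp_header('X-IPBLOCK-DEBUG', 'hit') followed by exit(403).
        return 403, {"X-IPBLOCK-DEBUG": "hit"}
    # The rule does not fire; the request continues to normal forwarding.
    return None, {}


if __name__ == "__main__":
    for addr in ["127.0.0.1", "10.0.0.100", "192.168.1.5"]:
        loose_status = evaluate(addr, LOOSE_PATTERN)[0]
        strict_status = evaluate(addr, STRICT_PATTERN)[0]
        print(addr, loose_status, strict_status)
```

If exact IP matching is what you intend, anchoring the pattern and escaping the dots avoids accidentally blocking neighboring addresses.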

If the ALB Ingress sends network traffic to a new pod immediately after the pod is added to a Service, the pod's CPU or memory usage may spike and cause access errors. The slow start mode prevents this: the ALB Ingress gradually increases the traffic sent to the new pod instead, which avoids errors caused by traffic spikes. Configuration example:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    alb.ingress.kubernetes.io/slow-start-enabled: "true"
    # Slow start period, in seconds.
    alb.ingress.kubernetes.io/slow-start-duration: "100"
  name: alb-ingress
spec:
  ingressClassName: alb
  rules:
    - host: alb.ingress.alibaba.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: tea-svc
                port:
                  number: 80
```

When a pod enters the Terminating state, the ALB Ingress removes the pod from the backend server group, but existing connections on the pod may still be processing requests. If the ALB Ingress closed all of these connections immediately, those requests would fail. With connection draining enabled, the ALB Ingress keeps the connections open for a specified period after the pod is removed, so that in-flight requests can complete before the connections are closed.
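The slow start and connection draining behaviors described above can be modeled with two small functions. The following Python sketch is a simplified model, not ALB's implementation: it assumes a linear slow-start ramp (the exact ramp curve is not described here) and treats the `slow-start-duration` and `connection-drain-timeout` annotation values as seconds. The function names are hypothetical.

```python
# Hypothetical model of slow start and connection draining; NOT ALB's implementation.
# Assumptions: the slow-start ramp is linear, and both annotation values
# (slow-start-duration and connection-drain-timeout) are in seconds.


def slow_start_weight(full_weight: int, seconds_since_added: float, duration: float) -> int:
    """Effective weight of a newly added pod during the slow-start window."""
    if duration <= 0 or seconds_since_added >= duration:
        return full_weight  # ramp finished (or slow start disabled): full share
    fraction = max(seconds_since_added, 0.0) / duration
    # Keep at least weight 1 so the new pod still receives a trickle of traffic.
    return max(1, int(full_weight * fraction))


def connection_state(removed_at: float, now: float, drain_timeout: float) -> str:
    """State of an existing connection on a pod removed from the server group at removed_at."""
    if now < removed_at:
        return "active"  # pod is still in the backend server group
    if now - removed_at < drain_timeout:
        return "draining"  # existing connection keeps working; no new requests arrive
    return "closed"  # draining period over: remaining connections are closed


if __name__ == "__main__":
    # Values from this article's examples: slow start "100", draining timeout "199".
    for t in (0, 25, 50, 100):
        print(t, slow_start_weight(100, t, 100))
    for t in (0, 150, 199):
        print(t, connection_state(0, t, 199))
```

Under these assumptions, a pod added with a 100-second slow start receives about a quarter of its full weight after 25 seconds, and a connection on a removed pod stays usable for up to 199 seconds.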

During the connection draining period, the ALB Ingress no longer sends new requests to the removed pod, but keeps transmitting data over the pod's existing connections until the period ends, so that the connections are closed smoothly. Configuration example:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    alb.ingress.kubernetes.io/connection-drain-enabled: "true"
    # Connection draining period, in seconds.
    alb.ingress.kubernetes.io/connection-drain-timeout: "199"
  name: alb-ingress
spec:
  ingressClassName: alb
  rules:
    - host: alb.ingress.alibaba.com
      http:
        paths:
          - path: /test
            pathType: Prefix
            backend:
              service:
                name: tea-svc
                port:
                  number: 80
```

ALB Ingresses keep gaining features designed for different scenarios. As a fully managed service, they require no manual O&M and are highly scalable. However, Ingress configurations that have been maintained over a long period can become highly complex, and converting them into ALB Ingress configurations by hand is laborious. Some customers have chosen not to use ALB Ingresses for this reason.

To simplify Ingress configuration management, the ALB Ingress team developed a tool that helps O&M engineers and developers convert NGINX Ingress configurations to ALB Ingress configurations. With the converted configurations, you can migrate workloads to ALB Ingresses through canary releases without affecting existing traffic. The following figure shows how an ALB Ingress works.

Traffic is shifted to the ALB instance gradually through a DNS-based canary release, so the existing NGINX Ingress remains available as a fallback during the migration. The tool image is available in the Alibaba Cloud container image repository. You can run the following Job to perform a one-time conversion, or create a tool container for long-term use. Support for more usage methods is planned.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress2albconfig
  namespace: default
spec:
  template:
    spec:
      containers:
        - name: ingress2albconfig
          image: registry.cn-hangzhou.aliyuncs.com/acs/ingress2albconfig:latest
          command: ["/bin/ingress2albconfig", "print"]
      restartPolicy: Never
      serviceAccount: ingress2albconfig
      serviceAccountName: ingress2albconfig
  backoffLimit: 4
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:ingress2albconfig
rules:
  # Read-only access to Ingresses and IngressClasses across the cluster.
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress2albconfig
  namespace: default
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: system:ingress2albconfig
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:ingress2albconfig
subjects:
  - kind: ServiceAccount
    name: ingress2albconfig
    namespace: default
```

The preceding one-time task runs the print command of the Alibaba Cloud tool image. Because the command reads Ingresses from within the cluster, it requires role-based access control (RBAC) permissions. In this example, the ClusterRole grants read-only access (get, list, and watch) to all Ingresses and IngressClasses. The generated resources are in the same namespace as the existing resources, and their names start with the from_ prefix. Sample output:

```yaml
apiVersion: alibabacloud.com/v1
kind: AlbConfig
metadata:
  creationTimestamp: null
  name: from_nginx
spec:
  config:
    accessLogConfig: {}
    edition: Standard
    name: from_nginx
    tags:
      - key: converted/ingress2albconfig
        value: "true"
    zoneMappings:
      - vSwitchId: vsw-xxx # Replace the value with the ID of a vSwitch in the VPC.
      - vSwitchId: vsw-xxx # Replace the value with the ID of a vSwitch in the VPC.
  listeners:
    - port: 80
      protocol: HTTP
---
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  creationTimestamp: null
  name: from_nginx
spec:
  controller: ingress.k8s.alibabacloud/alb
  parameters:
    apiGroup: alibabacloud.com
    kind: AlbConfig
    name: from_nginx
    scope: null
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP":80}]'
  creationTimestamp: null
  name: from_ingress1
  namespace: default
spec:
  ingressClassName: from_nginx
  rules:
    - http:
        paths:
          - backend:
              service:
                name: nginx-svc-g1msr
                port:
                  number: 80
            path: /
            pathType: Prefix
status:
  loadBalancer: {}
```

The preceding resources generated by the conversion task are not ready to use. You must specify the IDs of vSwitches in your VPC, because ALB instances rely on zones and use vSwitch IP addresses to provide services. We recommend that you plan network resources based on your business requirements. Also check the resource names: Kubernetes object names must be valid DNS subdomain names, which cannot contain underscores, so names such as from_nginx, and the references to them, must be changed (for example, to from-nginx) before the resources can be applied.
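Before applying converted output, it can help to scan it for the vSwitch placeholders mentioned above and for object names that Kubernetes would reject (names must be DNS-1123 subdomains, so underscores, as in from_nginx and from_ingress1, are not allowed). The following Python sketch is a hypothetical pre-apply check, not part of ingress2albconfig; it assumes the manifests have already been parsed into dictionaries, for example with a YAML library, which is not shown.

```python
import re

# Hypothetical pre-apply check for converted manifests that have already been
# parsed into dicts; NOT part of ingress2albconfig.
# Kubernetes object names must be DNS-1123 subdomains: at most 253 characters of
# lowercase alphanumerics, '-' and '.', starting and ending with an alphanumeric.
DNS1123_SUBDOMAIN = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*")
PLACEHOLDER_VSWITCH = "vsw-xxx"  # placeholder used in the sample output


def is_valid_name(name: str) -> bool:
    return len(name) <= 253 and DNS1123_SUBDOMAIN.fullmatch(name) is not None


def check_manifest(doc: dict) -> list:
    """Return a list of human-readable problems found in one converted resource."""
    problems = []
    kind = doc.get("kind", "?")
    name = doc.get("metadata", {}).get("name", "")
    if not is_valid_name(name):
        problems.append(f"{kind} name {name!r} is not a valid Kubernetes object name")
    spec = doc.get("spec", {})
    if kind == "AlbConfig":
        for i, zone in enumerate(spec.get("config", {}).get("zoneMappings", [])):
            if zone.get("vSwitchId") in (None, "", PLACEHOLDER_VSWITCH):
                problems.append(f"zoneMappings[{i}].vSwitchId still needs a real vSwitch ID")
    if kind == "Ingress" and "ingressClassName" in spec:
        if not is_valid_name(spec["ingressClassName"]):
            problems.append(f"ingressClassName {spec['ingressClassName']!r} is not a valid name")
    return problems


if __name__ == "__main__":
    sample = {
        "kind": "AlbConfig",
        "metadata": {"name": "from_nginx"},
        "spec": {"config": {"zoneMappings": [{"vSwitchId": "vsw-xxx"}, {"vSwitchId": "vsw-xxx"}]}},
    }
    for problem in check_manifest(sample):
        print(problem)
```

An empty result means only that these particular checks passed; the ALB Ingress controller and the API server still perform their own validation.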

The ingress2albconfig tool is still at an early stage, and more conversion features will be added in future iterations. If you require features that are not yet supported, submit your feedback. Features requested by customers are developed first.
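The DNS-based canary shift used during the migration can be planned as a series of weight steps. The following Python sketch is a hypothetical planning aid: the step percentages, the "nginx" and "alb" endpoint labels, and the simulation are illustrative assumptions. Real resolvers cache DNS answers, so observed traffic shares lag behind the configured weights.

```python
import random

# Hypothetical plan for shifting traffic from an NGINX Ingress endpoint to an ALB
# endpoint with weighted DNS records. Labels and step sizes are illustrative;
# configure the real weights in your DNS service.


def canary_steps(alb_percentages):
    """Yield (nginx_weight, alb_weight) pairs whose weights sum to 100."""
    for alb in alb_percentages:
        if not 0 <= alb <= 100:
            raise ValueError(f"invalid ALB percentage: {alb}")
        yield 100 - alb, alb


def simulate(nginx_weight, alb_weight, queries, seed=0):
    """Estimate how many weighted DNS answers point at each endpoint."""
    rng = random.Random(seed)
    hits = {"nginx": 0, "alb": 0}
    for _ in range(queries):
        target = rng.choices(["nginx", "alb"], weights=[nginx_weight, alb_weight])[0]
        hits[target] += 1
    return hits


if __name__ == "__main__":
    for nginx_w, alb_w in canary_steps([5, 20, 50, 100]):
        print(nginx_w, alb_w, simulate(nginx_w, alb_w, 1000))
```

Holding each step long enough to check error rates and latency on the ALB side, and keeping the NGINX record until the final step, preserves a rollback path.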

ALB Ingress controller reconciliation failures can be caused by fields with invalid formats or types in an AlbConfig or Ingress. Until such an error is corrected, reconciliation keeps failing and the AlbConfig or Ingress configurations cannot be converted into ALB configurations. In addition, on earlier controller versions, an AlbConfig with an invalid format can crash the controller pod, which causes subsequent configuration changes to fail.

Starting from Kubernetes 1.9, dynamic admission control supports admission webhooks, which allow you to implement admission control for requests sent to the API server. The API server intercepts a request after it is authenticated and authorized but before the object is persisted, and calls the webhooks to decide whether to admit it. ALB Ingress controller 2.15.0 supports webhook verification, which offers the following advantages over detecting errors and reporting failures during reconciliation:

• Early blocking: The webhook rejects errors and invalid configurations before the API server persists them, which keeps invalid objects out of the cluster state. Compared with detecting errors and reporting failures during reconciliation by the Ingress controller, this method greatly reduces resource waste because resource scheduling and network configuration steps are never performed for invalid configurations.

• Decoupled logic: The verification logic is built into the webhook, so the Ingress controller does not need to verify the validity of configurations and can focus on deploying and managing resources. This design follows the single-responsibility principle, which divides the system into modules that are easy to manage and scale.

• Security hardening: The YAML verification logic runs in an independent webhook service that controls resource requests sent to the cluster, which reduces the risk of malicious requests and configuration errors.

• Configuration error detection: When the webhook detects an invalid parameter, it rejects the request and returns a detailed error message. Instead of leaving you to dig through reconciliation failure events caused by parameter errors, this method pinpoints the invalid parameter and the cause of the error.

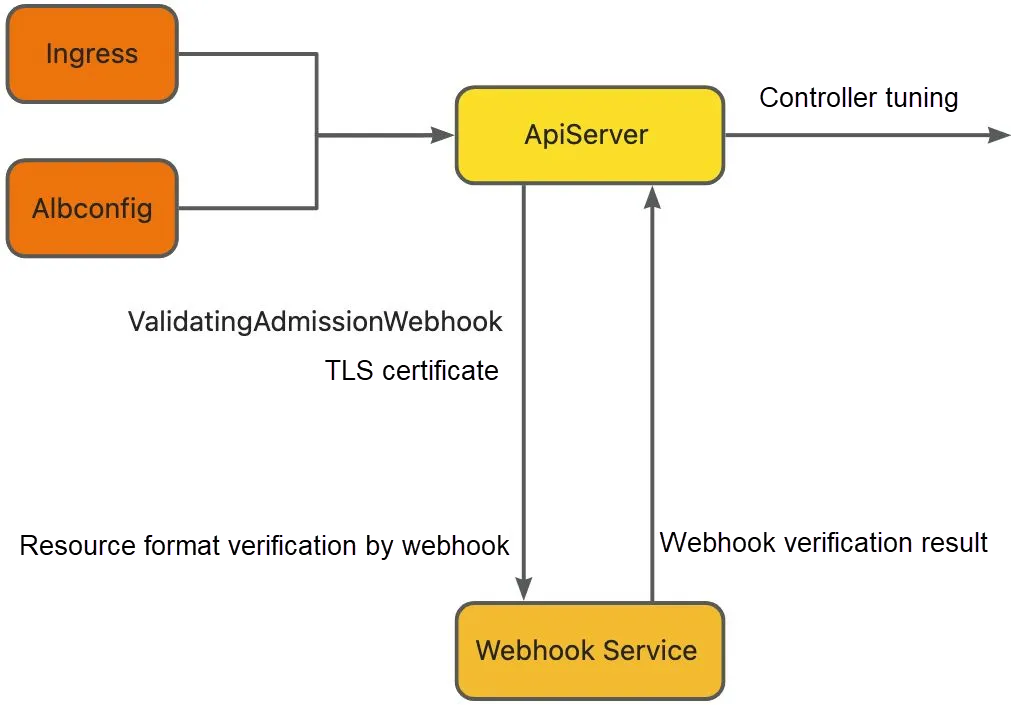

Main webhook components

ValidatingAdmissionWebhook: a ValidatingWebhookConfiguration that tells the Kubernetes API server which webhook URL to call when it receives a resource creation or update request, and which operations on which resources trigger webhook verification. This component is deployed along with the ALB Ingress controller.

TLS certificate: Network communication between the API server and webhook service must be encrypted over TLS.

Webhook service: The webhook service can parse AdmissionReview requests, verify Ingress resource definitions, and generate admission responses.
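The role of the webhook service can be illustrated with a small handler. The following Python sketch is hypothetical and is not the ALB Ingress controller's implementation: it checks only the `alb.ingress.kubernetes.io/listen-ports` annotation, assumes the set of accepted protocols and the expected JSON shape (taken from the `'[{"HTTP":80}]'` value used earlier), and takes an already decoded `admission.k8s.io/v1` AdmissionReview request. Serving it over HTTPS with the TLS certificate described above is omitted.

```python
import json

# Hypothetical validating-webhook logic; NOT the ALB Ingress controller's code.
# It validates one annotation and builds an admission.k8s.io/v1 AdmissionReview
# response. Serving it over HTTPS (the TLS requirement above) is omitted.
LISTEN_PORTS = "alb.ingress.kubernetes.io/listen-ports"
ALLOWED_PROTOCOLS = {"HTTP", "HTTPS", "QUIC"}  # assumption for illustration


def validate_listen_ports(value: str):
    """Return an error message, or None if the annotation value looks valid."""
    try:
        entries = json.loads(value)
    except json.JSONDecodeError as exc:
        return f"{LISTEN_PORTS} is not valid JSON: {exc.msg}"
    if not isinstance(entries, list) or not entries:
        return f'{LISTEN_PORTS} must be a non-empty JSON array such as [{{"HTTP":80}}]'
    for entry in entries:
        if not isinstance(entry, dict) or len(entry) != 1:
            return f"each {LISTEN_PORTS} entry must be a single protocol-to-port pair"
        protocol, port = next(iter(entry.items()))
        if protocol not in ALLOWED_PROTOCOLS:
            return f"unsupported protocol {protocol!r} in {LISTEN_PORTS}"
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(port, bool) or not isinstance(port, int) or not 1 <= port <= 65535:
            return f"invalid port {port!r} in {LISTEN_PORTS}"
    return None


def review(admission_review: dict) -> dict:
    """Turn an AdmissionReview request for an Ingress into an AdmissionReview response."""
    request = admission_review["request"]
    obj = request.get("object") or {}
    annotations = obj.get("metadata", {}).get("annotations") or {}
    error = None
    if LISTEN_PORTS in annotations:
        error = validate_listen_ports(annotations[LISTEN_PORTS])
    response = {"uid": request["uid"], "allowed": error is None}
    if error is not None:
        # A rejected request is not persisted; the message is returned to the client.
        response["status"] = {"code": 400, "message": error}
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview", "response": response}
```

Echoing the request `uid` in the response is required by the AdmissionReview protocol; the API server uses it to match the response to the request.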

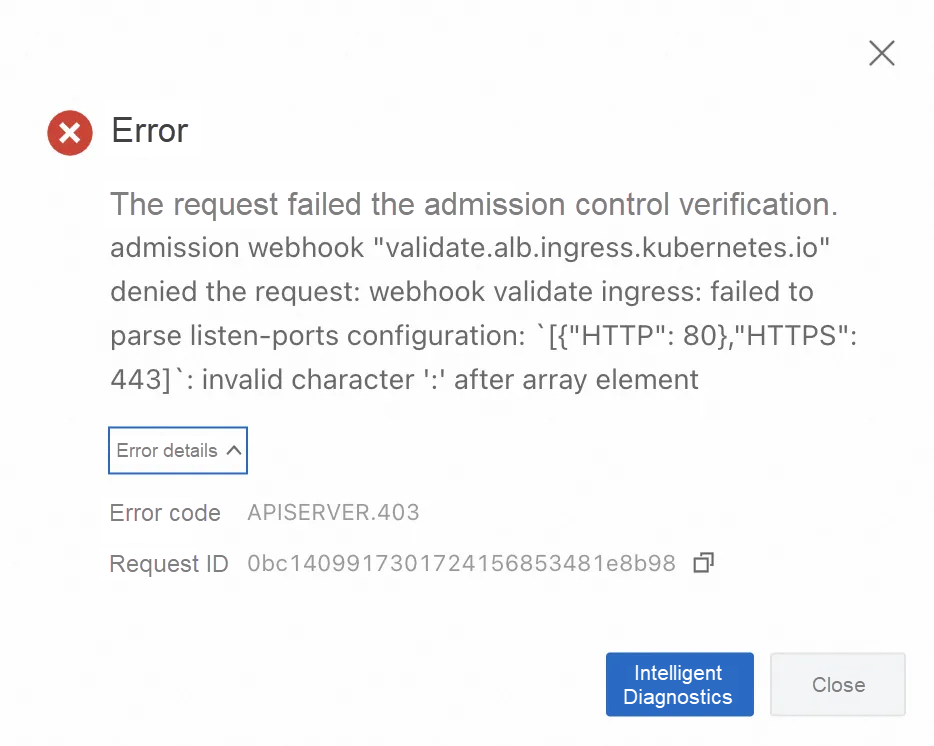

As shown in the following figure, when a user configures an Ingress with an invalid listen-ports annotation in the Container Service for Kubernetes (ACK) console, the webhook verification service detects the error and returns the error message directly to the user, who can then fix the error right away.

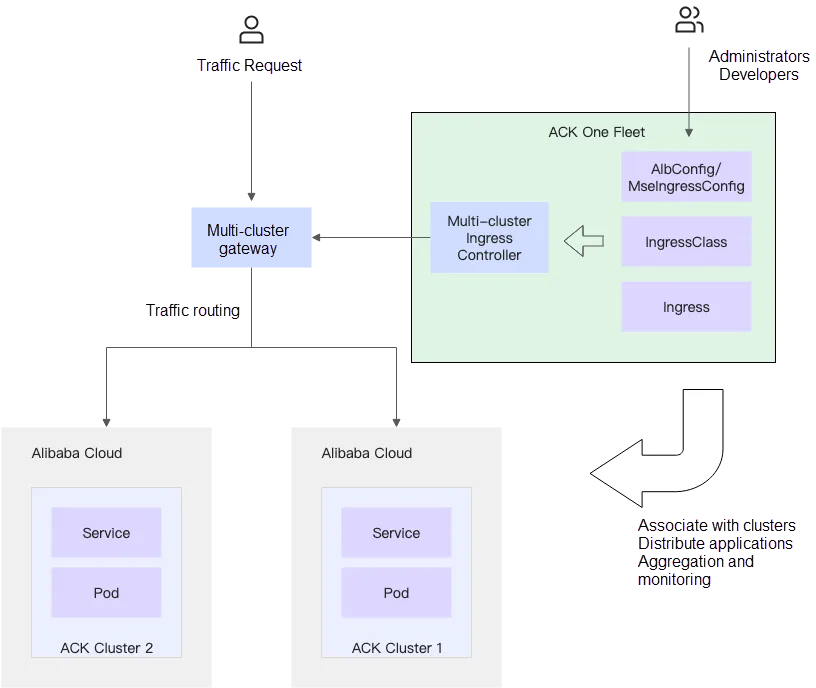

As cloud-native technology is adopted in more fields, a single cluster is increasingly insufficient for disaster recovery and high availability: if an upgrade of a basic cluster component fails or a zone-level failure occurs, services are difficult to restore quickly. To implement disaster recovery and high availability, you can deploy ALB Ingresses as multi-cluster gateways on Distributed Cloud Container Platform for Kubernetes (ACK One) Fleet instances. ACK One Fleet instances are managed by ACK, can manage Kubernetes clusters in any environment, and provide enterprises with a consistent cloud-native application management experience.

ALB multi-cluster gateways are a solution provided by Alibaba Cloud for application disaster recovery and north-south traffic management in hybrid cloud or multi-cluster environments. This solution helps you quickly implement zone-disaster recovery or geo-disaster recovery for hybrid cloud and multi-cluster applications, and facilitates traffic management and governance.

Multi-cluster gateways have the following benefits:

• Fully managed, O&M-free gateways.

• Fewer gateways and lower costs: multi-cluster global Ingresses can manage layer-7 north-south traffic at the region level.

• Simpler traffic management in multi-cluster environments: you configure forwarding rules for multi-cluster Ingresses on the Fleet instance instead of in each cluster.

• Cross-zone high availability by design.

• Millisecond-level fallback: if a backend server error occurs in a cluster, multi-cluster gateways smoothly redirect traffic to other backend servers.
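The fallback behavior in the last benefit can be pictured with a small routing function. The following Python sketch is a simplification under stated assumptions: it models an active-standby preference order between clusters and takes backend health as an input, while the actual gateway behavior (health checking, traffic distribution across clusters) is not modeled. The function name, cluster names, and IP addresses are hypothetical.

```python
# Hypothetical model of multi-cluster fallback; NOT the ALB gateway's algorithm.
# Assumption: an active-standby preference order between clusters, with health
# state supplied by the caller (for example, from health checks).


def route(clusters: dict, healthy: set, preference: list):
    """Return (cluster_name, healthy_backends) for the first preferred cluster
    that still has at least one healthy backend, or (None, []) if none does."""
    for name in preference:
        alive = [backend for backend in clusters.get(name, []) if backend in healthy]
        if alive:
            return name, alive
    return None, []


if __name__ == "__main__":
    clusters = {"cluster-hz-a": ["10.1.0.11", "10.1.0.12"], "cluster-hz-b": ["10.2.0.21"]}
    order = ["cluster-hz-a", "cluster-hz-b"]
    print(route(clusters, {"10.1.0.11", "10.2.0.21"}, order))  # ('cluster-hz-a', ['10.1.0.11'])
    print(route(clusters, {"10.2.0.21"}, order))               # ('cluster-hz-b', ['10.2.0.21'])
```

Because the gateway already holds the backend lists of every cluster, switching clusters is a local routing decision rather than a DNS change, which is what makes fast fallback possible.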

ALB multi-cluster gateways improve architecture availability for both zone-disaster recovery systems and geo-disaster recovery systems.

For more information, visit https://www.alibabacloud.com/help/en/ack/distributed-cloud-container-platform-for-kubernetes/use-cases/implementation-of-same-city-disaster-recovery-with-ack-one-alb-multi-cluster-gateway

Over the last year, ALB Ingresses have provided customers in various fields with flexible deployment solutions that make errors easier to identify and network environments more secure and reliable. Alibaba Cloud will continue to focus on customer requirements and iterate quickly to provide more secure and reliable cloud-native gateways for more scenarios.

You are welcome to follow updates to ALB Ingresses and share your suggestions.

Disclaimer: The views expressed herein are for reference only and don't necessarily represent the official views of Alibaba Cloud.
