By Zhang Junquan (Senior DBA of Akulaku Database Team)

Cloud databases that separate compute from storage let each scale independently, offer high availability and flexibility, and support pay-as-you-go billing for better cost control. However, every cloud database has its own characteristics and bottlenecks. For example, traditional MySQL-style cloud database services struggle to change tables holding hundreds of millions or even billions of rows, which can slow down business iteration. While investigating this problem, our company researched and put into practice Alibaba Cloud's cloud-native database, PolarDB. We hope this article provides a useful reference for the industry.

Akulaku is a leading FinTech company providing digital financial solutions for the Southeast Asian market. As the company grows rapidly and business volume keeps increasing, some traditional database services can no longer keep up. These businesses depend on hundreds of databases, and as the database fleet expands and data changes frequently under high load, maintenance costs rise rapidly. We therefore invested resources in researching a solution to this problem.

The current problems faced by traditional cloud databases mainly include the following aspects:

After collecting business pain points and evaluating database architectures, we migrated this part of the business to PolarDB for the following reasons:

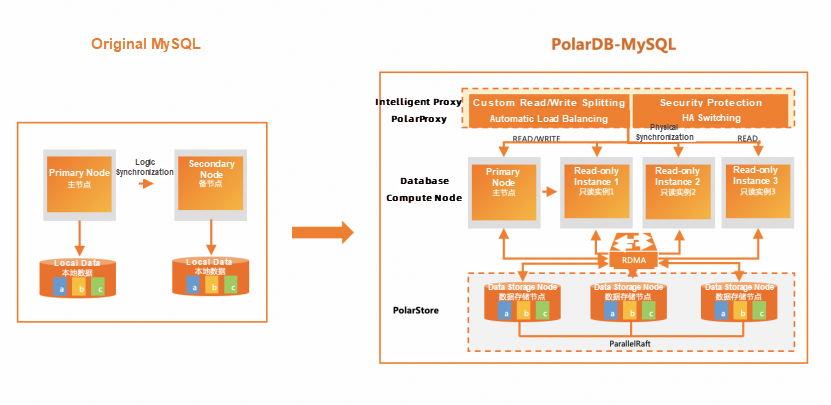

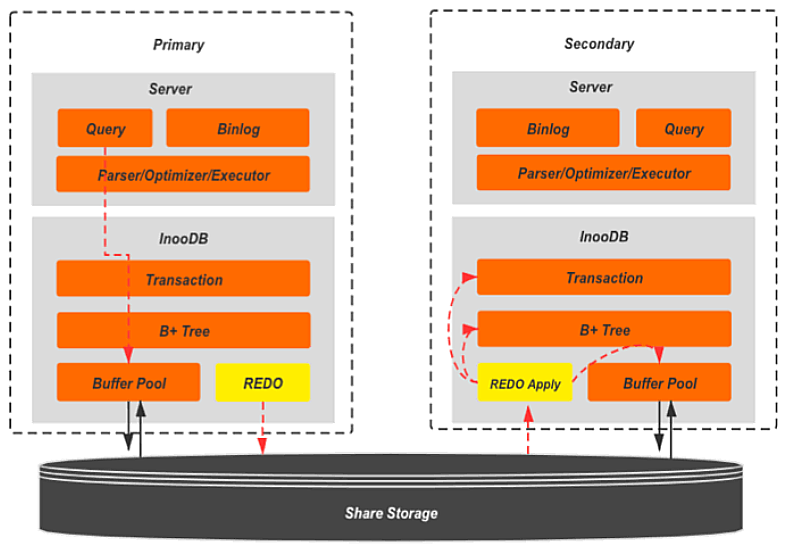

PolarDB separates its compute nodes from the shared storage, which makes rapid scale-out easy. Its underlying replication uses physical logs, so primary-secondary synchronization latency is in the millisecond range. It also supports up to 100 TB of storage, so the business no longer needs to worry about disk capacity. In addition, PolarDB performed well in our performance and disaster recovery tests.

The architecture change is shown below:

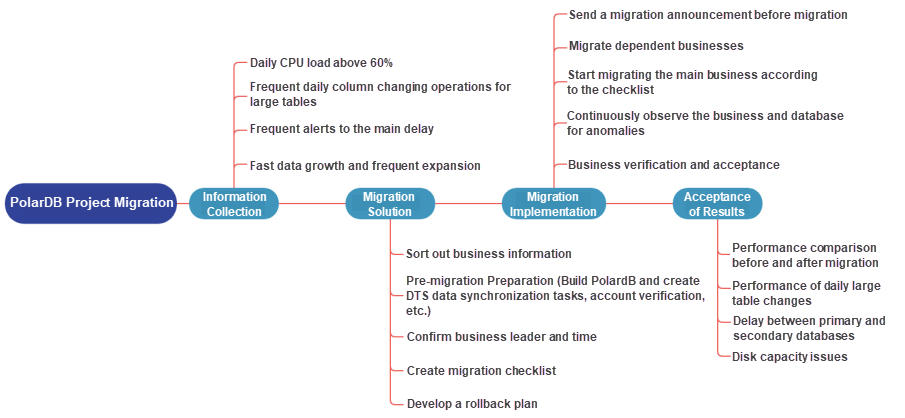

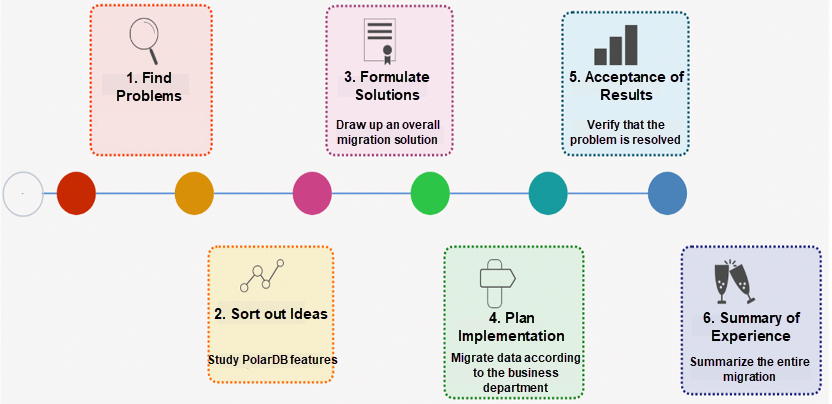

The following section describes how to migrate data from the traditional cloud database to PolarDB. The entire migration consists of four main phases:

Collect the database instances to be migrated. We focused on instances with frequent daily changes and those with the greatest need for performance optimization. The migration plan must be based on an investigation of each instance's actual situation: consider not only the main business but also its upstream and downstream businesses, such as data analysis platforms, data warehouse reports, and Canal synchronization.

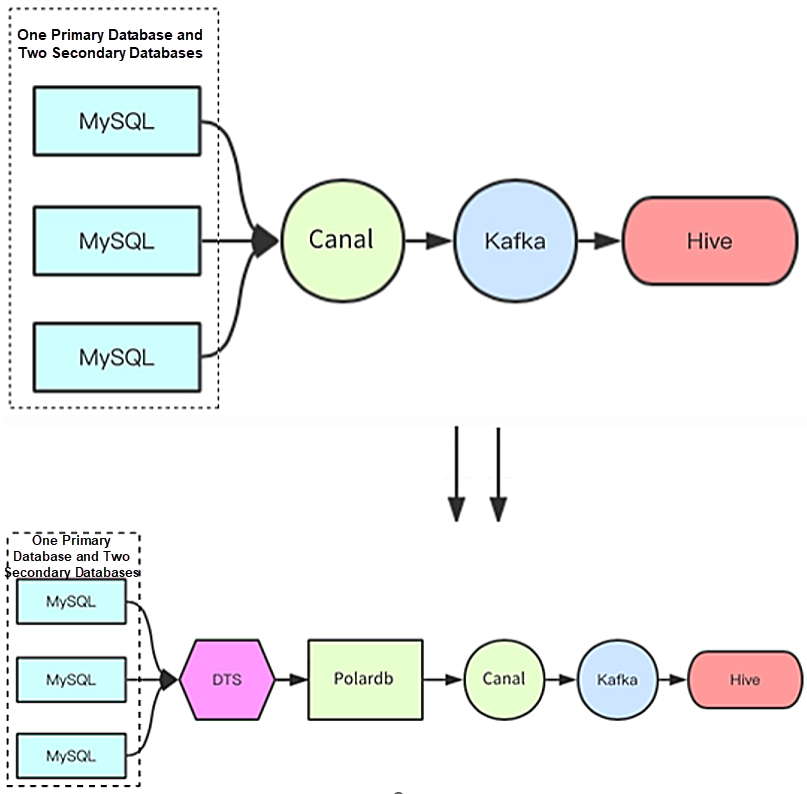

Dependent businesses must be migrated to PolarDB before the main business. Otherwise, once the main business is switched over, the dependent businesses would lose data. Pay particular attention to heterogeneous data source scenarios. Canal migration is used as an example here:

Canal must be switched to PolarDB in advance. We should pay attention to two issues:

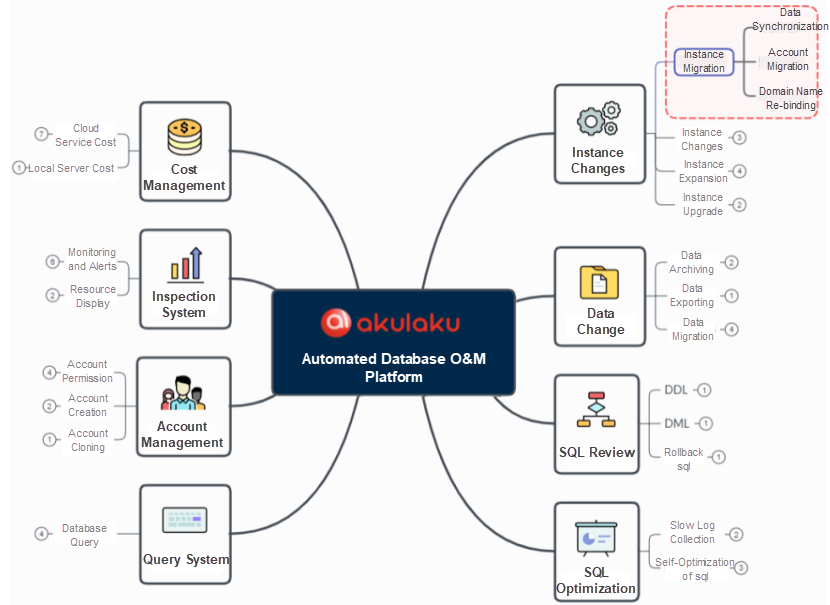
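The ordering rule above (switch dependent consumers such as Canal before the main business) amounts to computing a dependency-ordered migration plan. The minimal sketch below does this with Python's standard `graphlib` module; the service names and the `SWITCH_AFTER` dependency map are hypothetical examples, not Akulaku's actual systems.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each key may be switched to PolarDB only after
# every service in its value set has already been switched. Downstream
# consumers (Canal sync, reports, analytics) go first, the main business last.
SWITCH_AFTER: dict[str, set[str]] = {
    "main_business_db": {"canal_sync", "dw_reports", "analytics_platform"},
    "canal_sync": set(),
    "dw_reports": set(),
    "analytics_platform": {"canal_sync"},
}


def migration_order(switch_after: dict[str, set[str]]) -> list[str]:
    """Return an order in which every service is switched after its prerequisites.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(switch_after).static_order())


if __name__ == "__main__":
    for step, service in enumerate(migration_order(SWITCH_AFTER), start=1):
        print(step, service)
```

Services with no ordering constraint between them may appear in any relative order; only the prerequisite relationships are guaranteed, and a circular dependency is reported instead of silently producing an unsafe plan.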

Because of the large volume of daily business changes, Akulaku developed its own automated database O&M platform, which integrates functions such as instance migration, SQL review, and system inspection. This migration relied mainly on the platform's instance migration function, which also helped avoid business impact caused by manual misoperations. After the switchover, the platform's resource display and monitoring functions let you view PolarDB's performance in real time.

The platform's data synchronization function works mainly by calling the DTS (Data Transmission Service) interface to synchronize data in real time; you only need to fill in the configuration on the platform. The subsequent account migration and domain name change are also performed through the platform with one click.

There are several things to note:

This part describes the situation after migration and discusses the features of PolarDB.

As business volume grows, the benefits of the migration are most visible during e-commerce promotions. For example, one of our databases handles hundreds of thousands of QPS. Previously, this database struggled to support the services, and some services sent both reads and writes to the primary database, increasing its load.

Because PolarDB separates compute nodes from storage nodes, compute nodes can be scaled out rapidly to handle emergencies. With its built-in proxy, PolarDB supports read/write splitting, business splitting, and load balancing, so it copes better with high-load environments.

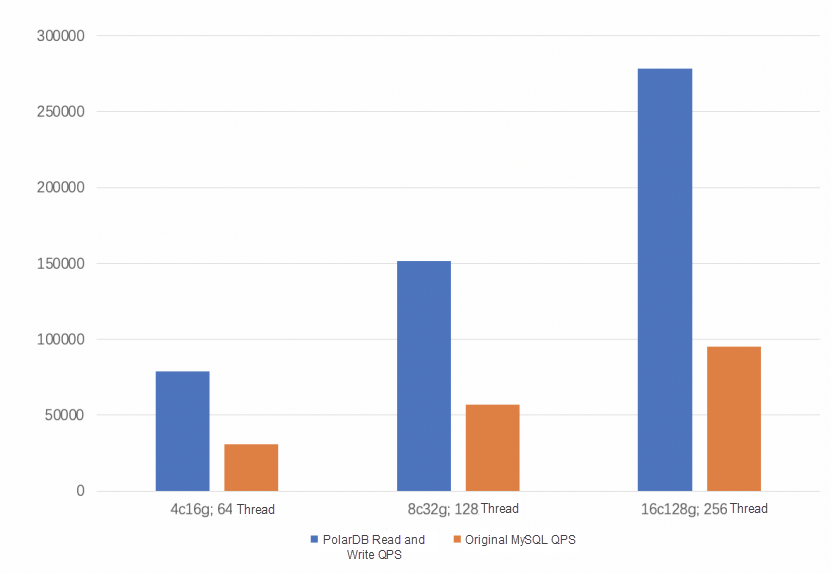

We collected PolarDB QPS data under high load and compared it with our previous databases. PolarDB performed significantly better: with the same configuration, the higher the database load, the larger PolarDB's advantage, both in performance and in database stability.

Because our business does not implement read/write splitting itself, connections often go directly to the primary database. With PolarDB, the application only needs the cluster address: while guaranteeing read/write consistency, the proxy automatically sends write requests to the primary node and distributes read requests across the primary and secondary nodes. In addition, with the load-based scheduling policy, PolarDB schedules requests according to the number of active connections on each node to balance the load across multiple nodes.
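To make the routing behavior above concrete, here is a minimal Python model of a proxy that combines read/write splitting, a simple form of session consistency, and least-active-connections load balancing. It assumes each node exposes the redo LSN it has applied, and that a session may read from any node that has applied its latest write. This is an illustrative assumption, not PolarDB's actual proxy algorithm; `ToyProxy`, `Node`, and `Session` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    is_primary: bool
    applied_lsn: int = 0          # highest redo LSN this node has applied
    active_connections: int = 0   # current number of active connections


@dataclass
class Session:
    last_write_lsn: int = 0  # LSN produced by this session's most recent write


class ToyProxy:
    """Hypothetical read/write-splitting proxy, for illustration only."""

    def __init__(self, nodes: list[Node]) -> None:
        self.nodes = nodes
        self.primary = next(n for n in nodes if n.is_primary)

    def route_write(self, session: Session) -> Node:
        # Writes always go to the primary; each write advances the primary's LSN.
        self.primary.applied_lsn += 1
        session.last_write_lsn = self.primary.applied_lsn
        return self.primary

    def route_read(self, session: Session) -> Node:
        # Session consistency: only nodes that have applied this session's
        # latest write are eligible (the primary always is).
        eligible = [n for n in self.nodes if n.applied_lsn >= session.last_write_lsn]
        # Load balancing: pick the eligible node with the fewest active connections.
        return min(eligible, key=lambda n: n.active_connections)


if __name__ == "__main__":
    primary = Node("primary", is_primary=True, active_connections=50)
    ro1 = Node("ro1", is_primary=False, active_connections=10)
    ro2 = Node("ro2", is_primary=False, active_connections=5)
    proxy = ToyProxy([primary, ro1, ro2])
    session = Session()
    print(proxy.route_read(session).name)   # ro2: least loaded, nothing written yet
    proxy.route_write(session)              # primary now at LSN 1, replicas at 0
    print(proxy.route_read(session).name)   # primary: only node at LSN >= 1
    ro1.applied_lsn = 1                     # ro1 catches up
    print(proxy.route_read(session).name)   # ro1: caught up and less loaded
```

The design choice worth noting is that consistency filters the candidate set before load balancing picks from it, so a lagging but idle replica is never chosen for a session that has just written.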

During big promotions, load on some secondary databases can spike suddenly, slowing down service queries. PolarDB can add secondary nodes quickly in this situation: because all nodes share the same storage, adding a node does not require copying data. And because compute and storage are separated, adding secondary nodes does not affect the operation of the rest of the cluster.

A traditional MySQL service typically runs on a single server, where data written to storage passes through the OS cache (involving interaction between the OS kernel and user data). PolarDB instead uses ChunkServers interconnected by a high-speed RDMA network to provide block device services to the compute nodes above them. By moving away from the traditional I/O path, PolarDB achieves much higher read/write performance, with QPS exceeding 500,000.

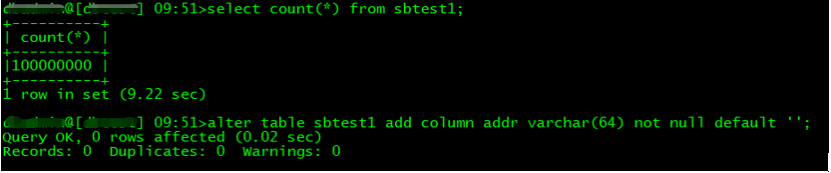

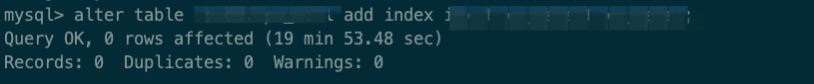

Adding columns to large tables is a recurring challenge, especially for businesses that feed data analysis platforms: their tables are particularly large, and many contain hundreds of millions of rows. In the past, we generally used pt-online-schema-change or gh-ost to change large tables in MySQL with minimal business impact. However, these changes took a long time and were often interrupted by network issues or high load. After migrating to PolarDB, we can run DDL changes directly, such as adding columns to large tables through the automated platform, by relying on PolarDB's new features.

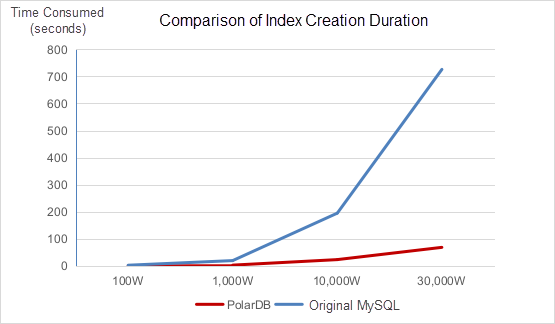

PolarDB for MySQL 5.7 provides Instant Add Column, a feature originally introduced in MySQL 8.0. Instant Add Column uses the INSTANT algorithm, so adding a column no longer requires rebuilding the whole table: the operation only briefly acquires a metadata lock (MDL) and records the new column's basic information in the table's metadata. For adding indexes, PolarDB supports parallel DDL and DDL physical replication optimization.

As shown in the preceding figure, adding a column to a table with hundreds of millions of rows takes less than one second, because only the table definition changes and existing data is not modified. This feature significantly reduces the time and risk of business changes, greatly helps our daily maintenance, and improves the database SLA.
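The idea behind Instant Add Column, changing only metadata while leaving existing rows untouched, can be illustrated with a toy in-memory table. In this sketch, which is a simplified assumption and not InnoDB's on-disk row format, each stored row keeps only the columns that existed when it was written; adding a column appends it to the schema together with a default value, and reads fill in that default for older rows. `ToyTable`, `Column`, and `add_column_instant` are hypothetical names, and the model only supports appending columns at the end of the schema.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Column:
    name: str
    default: Any = None


@dataclass
class ToyTable:
    columns: list[Column] = field(default_factory=list)
    # Each stored row holds only the columns that existed when it was written.
    rows: list[tuple] = field(default_factory=list)

    def insert(self, *values: Any) -> None:
        if len(values) != len(self.columns):
            raise ValueError("value count does not match column count")
        self.rows.append(tuple(values))

    def add_column_instant(self, name: str, default: Any = None) -> None:
        # Metadata-only change: no existing row is read or rewritten,
        # so the cost does not depend on the number of rows.
        self.columns.append(Column(name, default))

    def read(self, index: int) -> dict[str, Any]:
        stored = self.rows[index]
        result = {}
        for pos, col in enumerate(self.columns):
            # Columns added after this row was written come from the default.
            result[col.name] = stored[pos] if pos < len(stored) else col.default
        return result


if __name__ == "__main__":
    t = ToyTable([Column("id"), Column("amount")])
    t.insert(1, 100)
    t.add_column_instant("currency", default="IDR")  # old row is not touched
    t.insert(2, 200, "USD")
    print(t.rows[0])   # (1, 100): stored row unchanged
    print(t.read(0))   # {'id': 1, 'amount': 100, 'currency': 'IDR'}
```

Because `add_column_instant` never iterates over `rows`, its cost stays constant as the table grows, which mirrors why the column addition in the figure stays under one second regardless of table size.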

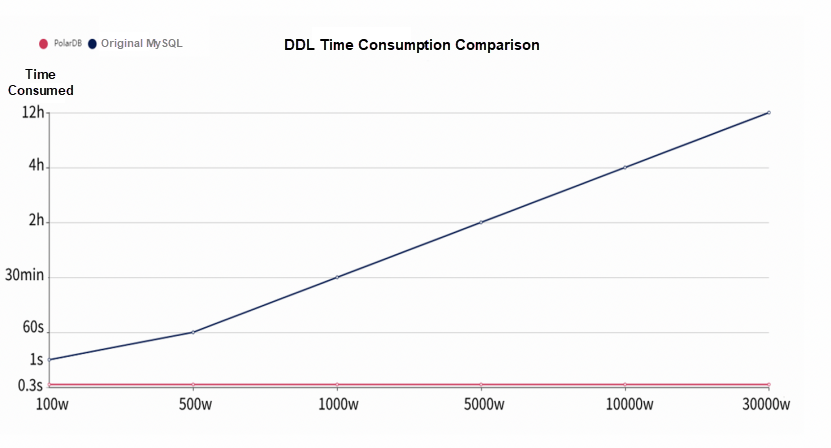

The preceding figure shows that PolarDB consistently adds columns in under one second, whereas the time taken by the original MySQL database grows with data volume: for tables with hundreds of millions of rows, it takes more than ten hours. PolarDB's execution efficiency represents a qualitative leap over the original database.

To use Instant Add Column on a PolarDB for MySQL 5.7 cluster, you must enable the following parameter:

innodb_support_instant_add_column

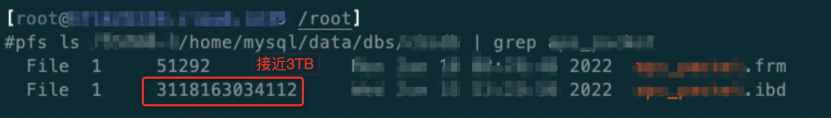

As shown in the preceding figure, creating an index in parallel on a table with 440 million rows and nearly 3 TB of data takes less than 20 minutes, which significantly reduces the time required to index large tables.

As shown in the preceding figure, PolarDB's advantage over the original MySQL in index creation becomes more pronounced as table size increases.
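A common way to build a secondary index in parallel is to split the clustered-index scan into ranges, have workers extract and sort (index key, primary key) pairs for each range, and then merge the sorted runs before bulk-loading the index. The sketch below shows that scan-sort-merge pattern using Python's `concurrent.futures` and `heapq.merge`. It is a generic illustration under that assumption, not PolarDB's actual parallel DDL implementation, and `build_index_parallel` is a hypothetical name.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor
from typing import Any


def _sort_run(rows: list[tuple], key_pos: int) -> list[tuple[Any, Any]]:
    """Extract (index_key, primary_key) pairs from one chunk and sort them."""
    return sorted((row[key_pos], row[0]) for row in rows)


def build_index_parallel(rows: list[tuple], key_pos: int, workers: int = 4) -> list[tuple]:
    """Build sorted secondary-index entries via parallel scan+sort and a k-way merge.

    rows: clustered-index rows, each a tuple whose element 0 is the primary key.
    key_pos: position of the column being indexed.
    Threads are used here for simplicity; a database engine would use native
    worker threads so the sort phase actually runs on multiple cores.
    """
    chunk = max(1, -(-len(rows) // workers))  # ceiling division
    chunks = [rows[i:i + chunk] for i in range(0, len(rows), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        runs = list(pool.map(_sort_run, chunks, [key_pos] * len(chunks)))
    # Merge the sorted runs into one ordered stream for the index bulk load.
    return list(heapq.merge(*runs))


if __name__ == "__main__":
    # Hypothetical rows: (primary_key, user_id)
    table = [(pk, (pk * 37) % 11) for pk in range(1, 21)]
    index = build_index_parallel(table, key_pos=1, workers=4)
    print(index[:5])
```

The sort phase scales with the number of workers while the final merge is a single sequential pass, which is one reason parallel index builds pay off more as tables get larger.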

Some business scenarios at Akulaku have particularly strict requirements on primary-secondary replication delay. With the original MySQL database, replication delay often triggered business alerts, which was a persistent headache for us. After migrating to PolarDB, primary-secondary synchronization became significantly more efficient.

PolarDB uses physical logs for synchronization. Because storage is shared, the primary node writes updates to the shared storage in real time over the RDMA network. The other compute nodes read the redo logs in real time over the same high-performance RDMA network, apply them to the pages in their buffer pools, and synchronize B+tree and transaction information. Unlike logical replication, which works top-down, physical replication works bottom-up, which keeps primary-secondary delay at the millisecond level.

As shown in the architecture diagram, the primary node and replica nodes share the same PolarStore File System (PFS), so data files and log files are reused rather than copied. A replica (RO) node reads the redo log directly from PFS, parses it, and applies it to the pages in its own buffer pool, so a user request that reaches a replica node can access the latest data. Meanwhile, the replica node maintains RPC communication with the primary node to synchronize information such as the apply position of its current log and the ReadView.
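The replica-side flow described above can be modeled in a few lines. In this simplified sketch, the primary appends redo records (LSN, page ID, new page content) to a log on shared storage, and each replica applies the records after its last applied LSN to the pages held in its buffer pool, then returns its applied LSN, which would be reported back to the primary. Real redo records describe byte-level page changes, and `SharedRedoLog`, `Replica`, and `min_applied_lsn` are hypothetical names, not PolarDB internals.

```python
from dataclasses import dataclass, field


@dataclass
class RedoRecord:
    lsn: int
    page_id: int
    value: str  # simplified: the new content of the page


@dataclass
class SharedRedoLog:
    """Redo log on shared storage, visible to every compute node."""
    records: list[RedoRecord] = field(default_factory=list)

    def append(self, page_id: int, value: str) -> int:
        # Called by the primary for each page change; LSNs are 1-based and contiguous.
        lsn = len(self.records) + 1
        self.records.append(RedoRecord(lsn, page_id, value))
        return lsn

    def read_after(self, lsn: int) -> list[RedoRecord]:
        # Records after `lsn` start at list index `lsn`.
        return self.records[lsn:]


@dataclass
class Replica:
    log: SharedRedoLog
    buffer_pool: dict[int, str] = field(default_factory=dict)
    applied_lsn: int = 0

    def apply_redo(self) -> int:
        """Apply new redo records to cached pages and return the new applied LSN."""
        for rec in self.log.read_after(self.applied_lsn):
            # This model only updates pages already cached; a real engine must
            # also bring pages later read from storage up to the applied LSN.
            if rec.page_id in self.buffer_pool:
                self.buffer_pool[rec.page_id] = rec.value
            self.applied_lsn = rec.lsn
        return self.applied_lsn  # reported to the primary, e.g. over RPC


def min_applied_lsn(replicas: list[Replica]) -> int:
    # The primary can track the slowest replica, e.g. to avoid recycling
    # redo that some replica has not applied yet.
    return min(r.applied_lsn for r in replicas)


if __name__ == "__main__":
    log = SharedRedoLog()
    ro = Replica(log, buffer_pool={1: "a", 2: "b"})
    log.append(1, "a2")
    log.append(3, "c")
    print(ro.apply_redo(), ro.buffer_pool)  # 2 {1: 'a2', 2: 'b'}
```

Because the replica consumes the primary's physical log directly instead of re-executing SQL, its apply cost per change is small and does not depend on how expensive the original statement was.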

As business volume increased, the disks of the original MySQL databases had to be expanded frequently. When a region ran short of remaining disk capacity, the instance had to be switched over, which seriously affected the business. Businesses related to the data analysis platform store terabytes of data or more, and PolarDB supports up to 100 TB of storage, which alleviates this problem. Optimizations in its underlying architecture also significantly improve I/O performance.

How does PolarDB's storage achieve high fault tolerance, large capacity, and fast loading? Our research showed that PolarFS plays a key role. If you are interested, refer to the following paper:

https://www.vldb.org/pvldb/vol11/p1849-cao.pdf

By preliminary count, this migration involved dozens of systems across multiple business lines and took several months from initial research to official launch. After more than half a year in production, PolarDB's stability, compatibility, and performance meet our current development needs. We hope Alibaba Cloud will continue to launch excellent products that help us meet future challenges together.
