By Zhaofeng Zhou (Muluo)

InfluxDB ranks first among time series databases on DB-Engines, and deservedly so. Its feature set, usability, and underlying implementation all have notable strengths, which makes it worthy of an in-depth analysis.

First, I'll briefly summarize several important attributes:

The following sections mainly analyze the basic concepts, the TSM storage engine, continuous queries and TimeSeries indexes in detail.

First, let's look at some basic concepts in InfluxDB through a concrete example:

INSERT machine_metric,cluster=Cluster-A,hostname=host-a cpu=10 1501554197019201823

This command writes one data entry into InfluxDB. The components of this entry are as follows:

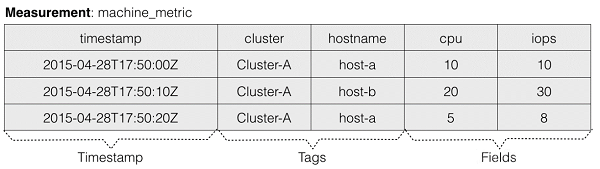
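As a rough illustration of how the entry above breaks down, the following Go sketch splits the line (without the leading INSERT keyword) into a measurement, tags, fields, and a timestamp. The `Point` type and the `parsePoint` and `splitPairs` helpers are hypothetical names introduced here, not InfluxDB's own parser, and the sketch assumes no escaping, no quoted strings, and float-valued fields only.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// Point is a hypothetical, simplified view of one written entry.
type Point struct {
	Measurement string
	Tags        map[string]string
	Fields      map[string]float64
	Timestamp   int64 // nanoseconds since the Unix epoch
}

// splitPairs turns "k1=v1,k2=v2" into a map. Escaping is not handled.
func splitPairs(s string) (map[string]string, error) {
	out := map[string]string{}
	if s == "" {
		return out, nil
	}
	for _, kv := range strings.Split(s, ",") {
		parts := strings.SplitN(kv, "=", 2)
		if len(parts) != 2 {
			return nil, fmt.Errorf("malformed pair %q", kv)
		}
		out[parts[0]] = parts[1]
	}
	return out, nil
}

// parsePoint parses "measurement,tags fields timestamp".
func parsePoint(line string) (Point, error) {
	sections := strings.Fields(line)
	if len(sections) != 3 {
		return Point{}, fmt.Errorf("want 3 sections, got %d", len(sections))
	}
	// Section 1: measurement name followed by optional tags.
	head := strings.SplitN(sections[0], ",", 2)
	tagPart := ""
	if len(head) == 2 {
		tagPart = head[1]
	}
	tags, err := splitPairs(tagPart)
	if err != nil {
		return Point{}, err
	}
	// Section 2: fields, assumed to be floats in this sketch.
	rawFields, err := splitPairs(sections[1])
	if err != nil {
		return Point{}, err
	}
	fields := make(map[string]float64, len(rawFields))
	for k, v := range rawFields {
		f, err := strconv.ParseFloat(v, 64)
		if err != nil {
			return Point{}, fmt.Errorf("field %q: %v", k, err)
		}
		fields[k] = f
	}
	// Section 3: the timestamp.
	ts, err := strconv.ParseInt(sections[2], 10, 64)
	if err != nil {
		return Point{}, err
	}
	return Point{Measurement: head[0], Tags: tags, Fields: fields, Timestamp: ts}, nil
}

func main() {
	p, err := parsePoint("machine_metric,cluster=Cluster-A,hostname=host-a cpu=10 1501554197019201823")
	if err != nil {
		panic(err)
	}
	fmt.Println(p.Measurement, p.Tags, p.Fields, p.Timestamp)
}
```

In this example, `machine_metric` is the measurement, `cluster` and `hostname` are tags, `cpu` is a field, and the trailing number is the timestamp.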

Finally, the data in each measurement is logically organized into a large data table, as shown in the following figure:

When querying, InfluxDB supports filtering on any dimension of a measurement: you can specify any tag or field as a query condition. Using the data above, you can construct queries such as the following:

SELECT * FROM "machine_metric" WHERE time > now() - 1h;

SELECT * FROM "machine_metric" WHERE "cluster" = 'Cluster-A' AND time > now() - 1h;

SELECT * FROM "machine_metric" WHERE "cluster" = 'Cluster-A' AND cpu > 5 AND time > now() - 1h;

From the perspective of the data model and the query conditions, tags and fields look the same. In terms of semantics, a tag describes the measurement, while a field describes the value. In terms of internal implementation, tags are fully indexed while fields are not, so a query that filters on tags is much more efficient than one that filters on fields.

InfluxDB's underlying storage engine evolved from LevelDB to BoltDB, and then to the self-developed TSM. The reasoning behind each selection and migration is described in the documents on the official website and is worth studying. The thinking behind technology selection and migration is often more instructive than a plain description of a product's attributes.

Here is a brief summary of how the storage engine was selected and changed. The first stage was LevelDB. The main reason for selecting LevelDB was that its underlying data structure is an LSM tree, which is very write-friendly, provides high write throughput, and matches the characteristics of time series data well. In LevelDB, data is stored as key-value pairs sorted by key. The key used by InfluxDB is a combination of SeriesKey + Timestamp, so the data of the same SeriesKey is stored in timestamp order, which makes scans over a time range very efficient.
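The effect of a SeriesKey + Timestamp key on range scans can be sketched in Go as follows. This is only an illustration of the idea, not InfluxDB's or LevelDB's actual key encoding: the `encodeKey` layout (series key, a zero byte, then a big-endian timestamp), the helper names, and the assumption of non-negative timestamps are all hypothetical. A sorted slice stands in for the sorted key-value store.

```go
package main

import (
	"encoding/binary"
	"fmt"
	"sort"
)

// encodeKey builds a hypothetical storage key. The big-endian timestamp
// makes byte-wise ordering agree with time ordering within one series.
func encodeKey(seriesKey string, ts uint64) string {
	buf := make([]byte, 0, len(seriesKey)+9)
	buf = append(buf, seriesKey...)
	buf = append(buf, 0) // separator; assumes series keys contain no zero byte
	var t [8]byte
	binary.BigEndian.PutUint64(t[:], ts)
	return string(append(buf, t[:]...))
}

// decodeTS reads the timestamp back from the last 8 bytes of a key.
func decodeTS(key string) uint64 {
	return binary.BigEndian.Uint64([]byte(key[len(key)-8:]))
}

// sortedKeys returns a sorted copy, standing in for the sorted store.
func sortedKeys(keys []string) []string {
	out := append([]string(nil), keys...)
	sort.Strings(out)
	return out
}

// scan returns the keys of one series with from <= ts < to. Because the
// keys are sorted, the result is a single contiguous slice found with two
// binary searches.
func scan(sorted []string, seriesKey string, from, to uint64) []string {
	lo := sort.SearchStrings(sorted, encodeKey(seriesKey, from))
	hi := sort.SearchStrings(sorted, encodeKey(seriesKey, to))
	return sorted[lo:hi]
}

func main() {
	keys := sortedKeys([]string{
		encodeKey("cpu,host=b", 15),
		encodeKey("cpu,host=a", 30),
		encodeKey("cpu,host=a", 10),
		encodeKey("cpu,host=a", 20),
		encodeKey("cpu,host=a", 40),
	})
	for _, k := range scan(keys, "cpu,host=a", 20, 40) {
		fmt.Println(decodeTS(k))
	}
}
```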

However, one of the biggest problems with LevelDB is that InfluxDB supports automatic deletion of historical data (Retention Policy). In time series scenarios, automatic deletion usually means deleting large blocks of historical data that cover continuous time periods. LevelDB does not support Range Delete or TTL, so data can only be deleted one key at a time, which generates a large amount of delete traffic. Moreover, in an LSM structure, physical deletion is not immediate: the data is only actually removed when compaction runs. The practice of deleting data for various TSDBs can be broadly divided into two categories:

InfluxDB adopts the first policy: it divides the data into multiple shards, each covering a 7-day period, and each shard is an independent database instance. The number of shards grows as the system keeps running. Because each shard is backed by its own LevelDB storage engine, and each engine opens many files, the number of file handles opened by the process soon reaches the upper limit as shards accumulate. LevelDB uses the level compaction policy internally, which is one of the reasons for the large number of files. In fact, the level compaction policy is not well suited to writing time series data, although InfluxDB doesn't explain why.
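The idea of dividing data into 7-day shards and expiring whole shards at once can be sketched in Go as follows. This is a minimal illustration under stated assumptions, not InfluxDB's implementation: the `store` type, the `shardIndex` and `dropExpired` names, and the use of a plain slice of timestamps in place of an independent database instance are all hypothetical, and timestamps are assumed to be non-negative nanoseconds.

```go
package main

import (
	"fmt"
	"time"
)

const day = int64(24 * time.Hour) // nanoseconds in one day
const shardNanos = 7 * day        // each shard covers a 7-day window

// store maps a shard index to the timestamps written into that shard.
// The slice is a stand-in for an independent database instance.
type store struct {
	shards map[int64][]int64
}

func newStore() *store {
	return &store{shards: map[int64][]int64{}}
}

// shardIndex picks the 7-day window that a timestamp falls into.
func shardIndex(ts int64) int64 {
	return ts / shardNanos
}

func (s *store) write(ts int64) {
	idx := shardIndex(ts)
	s.shards[idx] = append(s.shards[idx], ts)
}

// dropExpired removes every shard whose window ends at or before cutoff.
// Whole shards are dropped in one step; no per-key deletes are issued.
func (s *store) dropExpired(cutoff int64) int {
	dropped := 0
	for idx := range s.shards {
		end := (idx + 1) * shardNanos
		if end <= cutoff {
			delete(s.shards, idx)
			dropped++
		}
	}
	return dropped
}

func main() {
	s := newStore()
	s.write(1 * day)
	s.write(8 * day)
	s.write(15 * day)
	fmt.Println("dropped:", s.dropExpired(14*day), "remaining:", len(s.shards))
}
```

The trade-off the paragraph describes is visible here: deletion becomes cheap, but the number of live shards, and with it the number of open files, grows with the retention period.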

Because of significant customer feedback about excessive file handles, InfluxDB chose BoltDB to replace LevelDB as its new storage engine. BoltDB's underlying data structure is an mmap-based B+ tree. The reasons for choosing it include: 1. Its API has the same semantics as LevelDB's; 2. It is a pure Go implementation, which makes integration and cross-platform support easy; 3. A single database uses only one file, which solves the problem of excessive file handle consumption. This was the most important reason for choosing BoltDB. However, BoltDB's B+ tree cannot match LSM in write capability, because a B+ tree generates a large number of random writes. As a result, InfluxDB quickly ran into IOPS problems after adopting BoltDB: once a database reached several GB in size, it often hit an IOPS bottleneck that severely limited write throughput. InfluxDB later adopted some write optimizations, such as adding a WAL layer in front of BoltDB so that data is written to the WAL first, which ensures that data reaches the disk sequentially. Even so, the eventual writes to BoltDB still consumed a large amount of IOPS.

After several minor releases based on BoltDB, the team finally decided to develop TSM in-house for InfluxDB. TSM had two design goals: the first was to solve the excessive file handle problem seen with LevelDB, and the second was to solve the write performance problem seen with BoltDB. TSM is short for Time-Structured Merge Tree. Its idea is similar to LSM, but it adds optimizations specific to the characteristics of time series data. The following are some important components of TSM:

InfluxDB recommends two approaches for pre-aggregating and downsampling data. One is to use Kapacitor, InfluxDB's data computation engine, and the other is to use continuous queries, which are built into InfluxDB.

CREATE CONTINUOUS QUERY "mean_cpu" ON "machine_metric_db"
BEGIN
    SELECT mean("cpu") INTO "average_machine_cpu_5m" FROM "machine_metric" GROUP BY time(5m),cluster,hostname
END

The statement above configures a simple continuous query. It makes InfluxDB start a scheduled task that, every 5 minutes, aggregates all the data in the measurement "machine_metric" by cluster + hostname, computes the average of the field "cpu", and writes the result into the new measurement "average_machine_cpu_5m".
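To make the aggregation concrete, here is a small Go sketch of what such a query computes: a mean of `cpu` per 5-minute window, grouped by cluster and hostname. It is an illustration only and not how InfluxDB executes continuous queries. The `sample` and `groupKey` types and the `meanCPU` function are hypothetical, and timestamps are assumed to be non-negative Unix seconds.

```go
package main

import "fmt"

// sample is one hypothetical row of the "machine_metric" measurement.
type sample struct {
	cluster, hostname string
	cpu               float64
	ts                int64 // Unix seconds
}

// groupKey identifies one output row: a time window plus the two tags.
type groupKey struct {
	windowStart       int64
	cluster, hostname string
}

// meanCPU groups samples into fixed windows of windowSecs seconds and
// returns the mean cpu value for each (window, cluster, hostname) group.
func meanCPU(samples []sample, windowSecs int64) map[groupKey]float64 {
	type acc struct {
		sum float64
		n   int
	}
	accs := map[groupKey]*acc{}
	for _, s := range samples {
		// Round the timestamp down to the start of its window.
		k := groupKey{s.ts - s.ts%windowSecs, s.cluster, s.hostname}
		a, ok := accs[k]
		if !ok {
			a = &acc{}
			accs[k] = a
		}
		a.sum += s.cpu
		a.n++
	}
	out := make(map[groupKey]float64, len(accs))
	for k, a := range accs {
		out[k] = a.sum / float64(a.n)
	}
	return out
}

func main() {
	rows := []sample{
		{"Cluster-A", "host-a", 10, 0},
		{"Cluster-A", "host-a", 20, 60},
		{"Cluster-A", "host-a", 30, 300},
		{"Cluster-A", "host-b", 5, 10},
	}
	for k, v := range meanCPU(rows, 300) {
		fmt.Println(k.windowStart, k.cluster, k.hostname, v)
	}
}
```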

InfluxDB's continuous queries are similar to the auto-rollup function of KairosDB: both are scheduled on a single node. The aggregation is delayed batch computation rather than real-time stream computing, and the storage is under significant read pressure while it runs.

In addition to supporting the storage and computation of time series data, time series databases also need to be able to provide multi-dimensional queries. InfluxDB indexes TimeSeries for faster multi-dimensional queries. Regarding data and indexes, InfluxDB is described as follows:

InfluxDB actually looks like two databases in one: a time series data store and an inverted index for the measurement, tag, and field metadata.

Prior to InfluxDB 1.3, TimeSeries indexes (hereinafter referred to as TSI) supported only the memory-based method, that is, all TimeSeries indexes were stored in memory. This has benefits but also causes many problems. The latest InfluxDB 1.3 provides an additional indexing method to choose from. The new method stores the indexes on disk, which is less efficient than the memory-based index but solves many of its problems.

// Measurement represents a collection of time series in a database. It also
// contains in memory structures for indexing tags. Exported functions are
// goroutine safe while un-exported functions assume the caller will use the
// appropriate locks.
type Measurement struct {
    database string
    Name     string `json:"name,omitempty"`
    name     []byte // cached version as []byte

    mu         sync.RWMutex
    fieldNames map[string]struct{}

    // in-memory index fields
    seriesByID          map[uint64]*Series              // lookup table for series by their id
    seriesByTagKeyValue map[string]map[string]SeriesIDs // map from tag key to value to sorted set of series ids

    // lazily created sorted series IDs
    sortedSeriesIDs SeriesIDs // sorted list of series IDs in this measurement
}

// Series belong to a Measurement and represent unique time series in a database.
type Series struct {
    mu          sync.RWMutex
    Key         string
    tags        models.Tags
    ID          uint64
    measurement *Measurement
    shardIDs    map[uint64]struct{} // shards that have this series defined
}

The above is the definition of the memory-based index data structure in the source code of InfluxDB 1.3. It is mainly composed of two important data structures:

Series: It corresponds to a TimeSeries, and stores some basic attributes of the TimeSeries and the shards to which it belongs.

Measurement: Each measurement corresponds to a Measurement structure in memory, which holds several indexes to speed up queries.
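How a map from tag key to tag value to sorted series IDs answers a multi-dimensional query can be sketched in Go as follows. The field name `seriesByTagKeyValue` and the type name `SeriesIDs` mirror the struct above, but the `tagIndex` type and the `add`, `lookup`, and `intersect` functions are hypothetical and written only for this illustration. They are not InfluxDB's code.

```go
package main

import (
	"fmt"
	"sort"
)

// SeriesIDs is a sorted list of series IDs.
type SeriesIDs []uint64

// intersect merges two sorted ID lists and keeps the IDs present in both.
func intersect(a, b SeriesIDs) SeriesIDs {
	out := SeriesIDs{}
	i, j := 0, 0
	for i < len(a) && j < len(b) {
		switch {
		case a[i] < b[j]:
			i++
		case a[i] > b[j]:
			j++
		default:
			out = append(out, a[i])
			i++
			j++
		}
	}
	return out
}

// tagIndex is a hypothetical stand-in for the in-memory inverted index.
type tagIndex struct {
	seriesByTagKeyValue map[string]map[string]SeriesIDs
}

func newTagIndex() *tagIndex {
	return &tagIndex{seriesByTagKeyValue: map[string]map[string]SeriesIDs{}}
}

// add registers a series under every one of its tag key/value pairs.
func (ix *tagIndex) add(id uint64, tags map[string]string) {
	for k, v := range tags {
		if ix.seriesByTagKeyValue[k] == nil {
			ix.seriesByTagKeyValue[k] = map[string]SeriesIDs{}
		}
		ids := append(ix.seriesByTagKeyValue[k][v], id)
		sort.Slice(ids, func(i, j int) bool { return ids[i] < ids[j] })
		ix.seriesByTagKeyValue[k][v] = ids
	}
}

// lookup returns the series that match all tag filters (a logical AND).
func (ix *tagIndex) lookup(filters map[string]string) SeriesIDs {
	var result SeriesIDs
	first := true
	for k, v := range filters {
		ids := ix.seriesByTagKeyValue[k][v]
		if first {
			result = append(SeriesIDs{}, ids...)
			first = false
		} else {
			result = intersect(result, ids)
		}
	}
	return result
}

func main() {
	ix := newTagIndex()
	ix.add(1, map[string]string{"cluster": "Cluster-A", "hostname": "host-a"})
	ix.add(2, map[string]string{"cluster": "Cluster-A", "hostname": "host-b"})
	ix.add(3, map[string]string{"cluster": "Cluster-B", "hostname": "host-a"})
	fmt.Println(ix.lookup(map[string]string{"cluster": "Cluster-A", "hostname": "host-a"}))
}
```

Keeping each ID list sorted is what allows the intersection to run as a linear merge instead of a nested loop.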

The advantage of the full memory-based index structure is that it can provide highly efficient multi-dimensional queries, but there are also some problems:

To address the problems of the fully memory-based index, the latest InfluxDB 1.3 provides an additional index implementation. Thanks to the good extensibility of the code design, both the index module and the storage engine module are pluggable, and you can choose which index to use in the configuration.

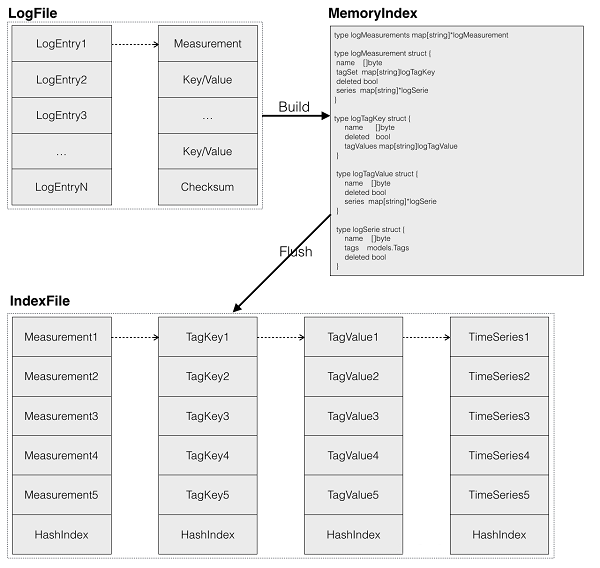

In InfluxDB, a special storage engine is implemented to store index data. Its structure is also similar to that of LSM. As shown in the figure above, it is a disk-based index structure. For details, see Design Documents.

The index data is first written to the Write-Ahead Log (WAL). The data in the WAL is organized into LogEntry records; each LogEntry corresponds to a TimeSeries and contains the measurement, the tags, and a checksum. After being successfully written to the WAL, the data is added to a memory-based index structure. Once the WAL grows to a certain size, the LogFile is flushed into an IndexFile. The logical structure of the IndexFile matches that of the memory-based index: it maps measurements to tag keys, tag keys to tag values, and tag values to TimeSeries. InfluxDB accesses the file through mmap, and a HashIndex is stored for each map in the file to speed up queries.

When the number of IndexFiles reaches a specified threshold, InfluxDB runs a compaction mechanism that merges multiple IndexFiles into one, saving storage space and speeding up queries.

All InfluxDB components are self-developed. The advantage of self-development is that each component can be designed around the characteristics of time series data to maximize performance. The community is also very active, but major changes happen often, such as changing the storage format or the index implementation, which is quite inconvenient for users. Overall, I am optimistic about InfluxDB's development. Unfortunately, the cluster version of InfluxDB is not open source.

