This is the first part of the blog - Low-Latency Distributed Messaging with RocketMQ. In this blog, we discuss the history of the Alibaba Cloud message engine family, ways of reducing latency, and the advantages and disadvantages of using the page cache. We then look at the development of RocketMQ and how it eliminates latency.

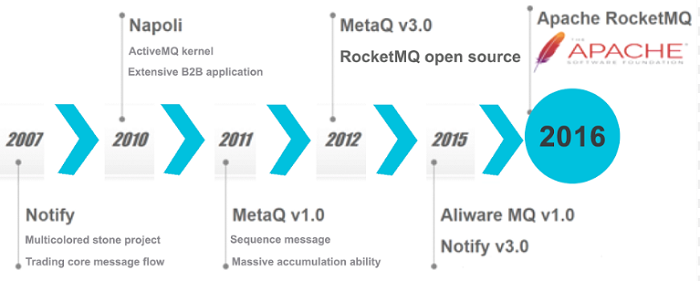

The Alibaba Cloud Message Queue engine has gone through three generations so far. The first generation used push mode and stored data in relational databases. Its message delivery had very low latency, and it was widely used, especially for high-frequency transactions at Alibaba and Taobao.

The second generation, also known as pull mode, was built on a proprietary message storage system developed by Alibaba Cloud. Its throughput was comparable to that of Kafka. However, given Taobao's application scenarios, especially those requiring high reliability such as transaction chains, the messaging engine prioritizes stability and reliability over throughput. Because it pulls messages over persistent connections, its real-time delivery performance remains superior to that of push mode.

After the first two generations had gone live and been enhanced over many years, the team at Alibaba Cloud developed RocketMQ, a high-performance, low-latency messaging engine. Developed in 2011, it combines the pull and push modes. RocketMQ became open source in 2012. It has since withstood six years of testing on the core transaction chains of Alibaba's Double Eleven (Singles' Day) event.

Alibaba Cloud donated RocketMQ to the Apache Software Foundation (ASF). Since then, RocketMQ's popularity has grown, and it is becoming one of the most important distributed messaging engines in the Apache community, after ActiveMQ and Kafka. So far, RocketMQ has served thousands of applications within the Alibaba Group, and it has played a decisive role in the stability of the group's terabyte-scale message flow.

Figure 1.

As the Java ecosystem has matured and JVM performance has improved, C and C++ are no longer the only choices for low-latency scenarios. This section describes some explorations into RocketMQ's availability and low latency.

The performance of an application is usually measured by throughput and latency. Throughput is the number of requests that a program can process over a period of time. Latency is the end-to-end response time.

Low latency has different definitions under different circumstances. For example, in a chat application, we can define low latency as within 200ms, and in a transaction system, we can define it as within 10ms. Compared with throughput, latency is susceptible to the effects of numerous factors, such as the CPU, network, memory, and operating system.

According to Little's law, when latency becomes too high, the number of requests residing in the distributed system increases sharply, which can render some nodes unavailable. The unavailability can then spread to other nodes until the entire system loses its service capacity, a fault known as an avalanche. Therefore, to improve the availability of the overall distributed system, we need to build low-latency applications.
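To make the relationship concrete, here is a minimal sketch of Little's law, L = λW, where L is the mean number of requests in flight, λ is the arrival rate, and W is the mean latency. The arrival rate of 10,000 requests per second is a hypothetical figure chosen for illustration; the two latencies echo the 1 ms and 500 ms values mentioned elsewhere in this post.

```java
/** Little's law: L = lambda * W (requests in flight = arrival rate x mean latency). */
final class LittlesLawExample {

    /** Returns the mean number of in-flight requests for a given rate and latency. */
    static double inFlight(double requestsPerSecond, double latencySeconds) {
        return requestsPerSecond * latencySeconds;
    }

    public static void main(String[] args) {
        double rate = 10_000.0; // hypothetical arrival rate, requests per second
        System.out.printf("latency   1 ms: %.0f requests in flight%n", inFlight(rate, 0.001));
        System.out.printf("latency 500 ms: %.0f requests in flight%n", inFlight(rate, 0.500));
    }
}
```

At the same arrival rate, a 500-fold increase in latency means 500 times as many requests held in the system at once, which is how a latency spike turns into resource exhaustion.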

The most important role of RocketMQ as a messaging engine is asynchronous decoupling, which enables peak shaving and load shifting. Distributed applications that use RocketMQ for asynchronous decoupling can scale up and down freely. When traffic peaks arrive, a large quantity of messages accumulates in RocketMQ, and the backend programs read the data at their own consumption rates. It is therefore imperative to keep latency low on the path along which RocketMQ writes messages.

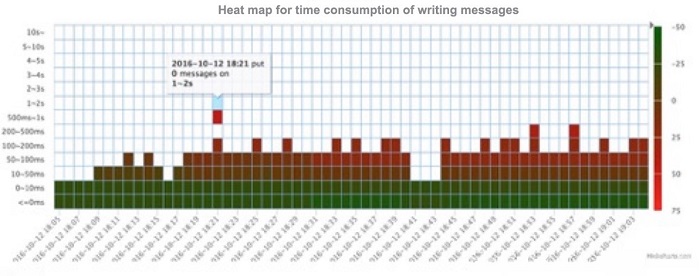

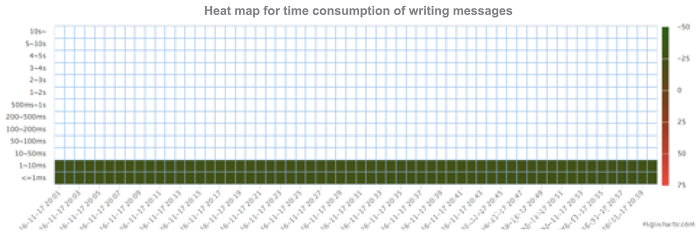

In 2016, Tmall launched a new way of playing its 'Red Envelope' game for Singles' Day. The game was very sensitive to latency and could tolerate at most 50 ms. During the initial stage of load testing, RocketMQ showed latencies of 50 to 500 ms when writing messages, which caused several failures at peak usage of the Red Envelope game and extensively affected the front-end business. The picture below is a heat map of message write latency in the Red Envelope cluster during load testing.

Figure 2.

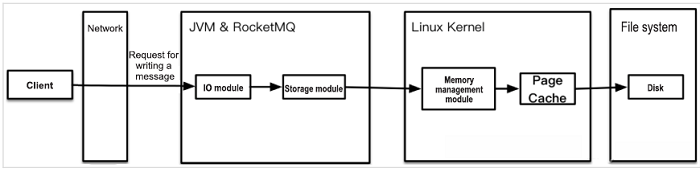

RocketMQ is a messaging engine written in pure Java, and its self-developed storage component relies on the page cache for acceleration and for buffering message backlogs. This means that the JVM, GC, the kernel, Linux memory management, and file I/O all affect its performance. As shown below, latency risks exist at every step from the client sending a message to its final persistence on disk. Observations of production data show occasional latencies of several seconds on the RocketMQ message write path.

Figure 3.

For other JVM pauses, the JVM can write pause times to the GC log with -XX:+PrintGCApplicationStoppedTime. The specific reason for each pause can be printed with -XX:+PrintSafepointStatistics -XX:PrintSafepointStatisticsCount=1, after which targeted optimizations can be made. If many RevokeBias pauses appear in RocketMQ, the best option is to turn off biased locking with -XX:-UseBiasedLocking. In addition, writing the GC log itself produces file I/O and sometimes leads to unwanted pauses. GC logs can be written to tmpfs (an in-memory file system), but tmpfs occupies memory; to avoid wasting it, you can rotate the GC logs with -XX:+UseGCLogFileRotation. Besides GC logs, the JVM also writes the statistics requested by the jstat command to a directory under /tmp (hsperfdata), which again produces file I/O. You can turn this feature off with -XX:+PerfDisableSharedMem and use JMX in place of jstat.
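As a rough illustration of reading GC statistics over JMX rather than through jstat, the sketch below queries the standard GarbageCollectorMXBean interface from inside the running JVM. The class and method names (GcStatsViaJmx, describeCollectors) are made up for this example, and this is not taken from RocketMQ's code.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.List;

final class GcStatsViaJmx {

    /** Formats one line per collector: name, collection count, accumulated time. */
    static String describeCollectors() {
        StringBuilder out = new StringBuilder();
        List<GarbageCollectorMXBean> beans = ManagementFactory.getGarbageCollectorMXBeans();
        for (GarbageCollectorMXBean gc : beans) {
            // Both getters return -1 when the collector does not report the value.
            out.append(String.format("%s: count=%d, timeMs=%d%n",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime()));
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.print(describeCollectors());
    }
}
```

The same beans are reachable from a remote JMX client, so monitoring does not have to depend on the hsperfdata files.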

Developers widely use locks to protect critical sections in multi-threaded applications. However, locking is a double-edged sword: excessive or incorrect use of locks degrades the performance of multi-threaded applications.

Locks in Java are non-fair by default. A thread acquiring a non-fair lock does not check the wait queue first; it tries to take the lock directly and joins the queue only if that attempt fails. Non-fair locks can therefore cause long waits for some threads and higher latencies. Fair locks avoid this, but they often cost the application more in overall performance.
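For reference, this is how the two policies are selected with java.util.concurrent.locks.ReentrantLock. The helper names (newLock, incrementUnder) are illustrative only; the constructor argument and isFair() are part of the standard library.

```java
import java.util.concurrent.locks.ReentrantLock;

final class LockFairnessExample {

    /** Creates a lock with the requested policy; `true` means FIFO hand-off to waiters. */
    static ReentrantLock newLock(boolean fair) {
        return new ReentrantLock(fair);
    }

    /** Increments the counter under the lock, using the usual lock/try/finally shape. */
    static int incrementUnder(ReentrantLock lock, int counter) {
        lock.lock();
        try {
            return counter + 1;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) {
        ReentrantLock byDefault = new ReentrantLock(); // no-arg constructor is non-fair
        ReentrantLock fair = newLock(true);
        System.out.println("default lock is fair: " + byDefault.isFair());
        System.out.println("fair lock is fair:    " + fair.isFair());
        System.out.println("counter after increment: " + incrementUnder(fair, 0));
    }
}
```

The synchronized keyword offers no fairness option at all, so code that needs FIFO ordering has to use an explicit lock like the one above.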

Synchronization also leads to context switches, which carry a cost. A context switch usually takes microseconds, but with too many threads and heavy contention, the cost can reach tens of milliseconds. We can use LockSupport.park to simulate context switches for testing.
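One possible way to run such a test is a ping-pong between two threads, where each hand-off forces a park and an unpark. The sketch below is an assumption about how the measurement could be set up, not the authors' test harness; ParkPingPong and meanRoundTripNanos are hypothetical names, and the number it prints depends heavily on the machine and its load.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.LockSupport;

final class ParkPingPong {
    private static final int MAIN = 0;
    private static final int PARTNER = 1;

    /** Runs `rounds` hand-offs between two threads; returns mean round-trip time in ns. */
    static long meanRoundTripNanos(int rounds) throws InterruptedException {
        if (rounds <= 0) {
            throw new IllegalArgumentException("rounds must be positive");
        }
        Thread main = Thread.currentThread();
        AtomicInteger turn = new AtomicInteger(MAIN);

        Thread partner = new Thread(() -> {
            for (int i = 0; i < rounds; i++) {
                // park() may return spuriously, so always re-check the condition.
                while (turn.get() != PARTNER) {
                    LockSupport.park();
                }
                turn.set(MAIN);
                LockSupport.unpark(main);
            }
        });
        partner.start();

        long start = System.nanoTime();
        for (int i = 0; i < rounds; i++) {
            turn.set(PARTNER);           // publish the state first...
            LockSupport.unpark(partner); // ...then wake the other side
            while (turn.get() != MAIN) {
                LockSupport.park();
            }
        }
        long elapsed = System.nanoTime() - start;
        partner.join();
        return elapsed / rounds;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("mean round trip (ns): " + meanRoundTripNanos(100_000));
    }
}
```

Setting the turn before calling unpark, and checking it before calling park, is what keeps a wake-up from being lost between the two threads.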

To avoid the latency that results from locking, RocketMQ uses CAS primitives to make its core path lock-free, which reduces latency and significantly improves throughput.
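The general idea behind replacing a lock with CAS can be sketched with a lock-free offset allocator: writers reserve a region of a buffer with compareAndSet and retry on conflict instead of blocking. This is an illustrative example under that assumption, not RocketMQ's actual implementation, and the class name CasOffsetAllocator is made up.

```java
import java.util.concurrent.atomic.AtomicLong;

final class CasOffsetAllocator {
    private final AtomicLong writeOffset = new AtomicLong(0);
    private final long capacity;

    CasOffsetAllocator(long capacity) {
        this.capacity = capacity;
    }

    /** Reserves `size` bytes and returns the start offset, or -1 if there is no room. */
    long reserve(int size) {
        while (true) {
            long current = writeOffset.get();
            long next = current + size;
            if (next > capacity) {
                return -1;
            }
            if (writeOffset.compareAndSet(current, next)) {
                return current; // this thread now owns [current, next)
            }
            // Another thread advanced the offset first; retry with the new value.
        }
    }

    long position() {
        return writeOffset.get();
    }

    public static void main(String[] args) throws InterruptedException {
        CasOffsetAllocator allocator = new CasOffsetAllocator(1L << 20);
        Thread[] writers = new Thread[4];
        for (int t = 0; t < writers.length; t++) {
            writers[t] = new Thread(() -> {
                for (int i = 0; i < 10_000; i++) {
                    allocator.reserve(16);
                }
            });
            writers[t].start();
        }
        for (Thread writer : writers) {
            writer.join();
        }
        // 4 threads x 10,000 reservations x 16 bytes, with no lock taken.
        System.out.println("final offset: " + allocator.position());
    }
}
```

No thread is ever parked here, so a slow or descheduled writer cannot hold up the others the way a lock holder can.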

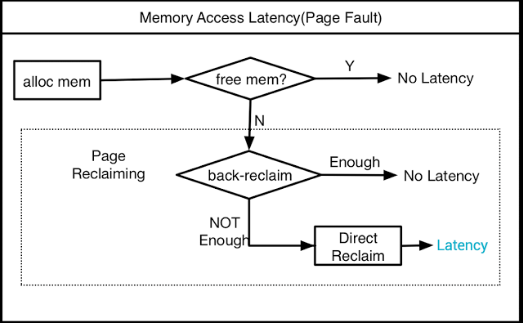

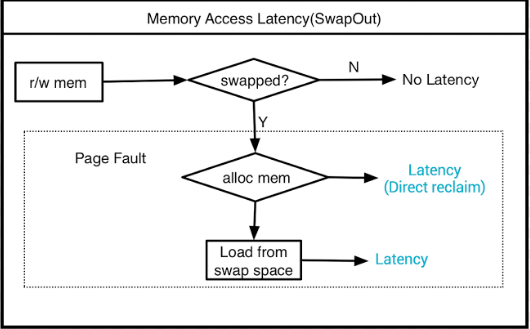

Memory in Linux mainly consists of anonymous memory and the page cache. Because of how Linux manages memory, applications may experience high latency when they access it. Linux uses as much memory as possible as cache, so a server usually has little free memory. When free memory runs short, an application that requests or touches a new memory page triggers memory reclamation. If background reclamation is slower than allocation, the kernel enters direct reclaim, and the application blocks until reclamation finishes, which results in huge latencies, as shown below.

Figure 4.

The kernel also reclaims anonymous memory pages. After an anonymous page has been swapped out, the next access to it triggers file I/O, which increases latency, as shown below.

Figure 5.

The latencies in both cases can be avoided by tuning kernel parameters (vm.extra_free_kbytes and vm.swappiness).

Linux manages memory in pages of 4 KB each. Latency arises when reads and writes contend for the same page. In such cases, applications need to adjust their memory access patterns to avoid these latencies.

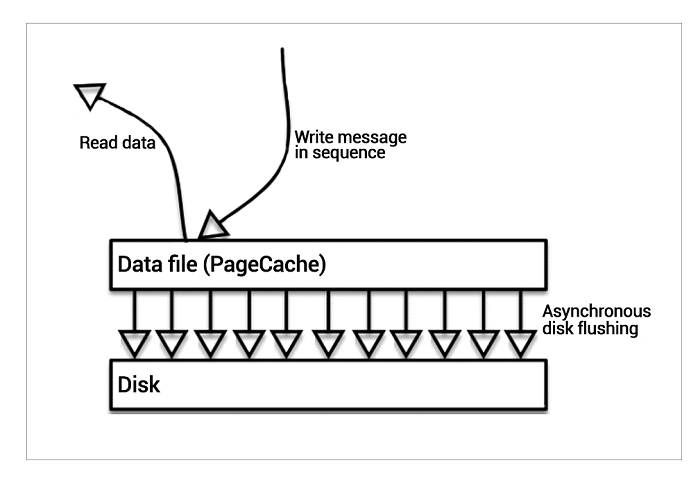

Page caches are file caches that accelerate file reads and writes, and they give RocketMQ a greater capacity to buffer message backlogs. RocketMQ maps data files into memory. When writing a message, it first writes to the page cache and then persists the message through asynchronous flush-to-disk (synchronous flush-to-disk is also supported). Messages can be read directly from the page cache. This is also the mode commonly used by distributed storage products in the industry, as shown below:
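The write path described above can be approximated with Java's standard memory-mapped file API. The sketch below is a simplified illustration, not RocketMQ's code: it maps a file, writes a message into the mapping (which lands in the page cache), and then calls force() to flush synchronously. The class and method names are hypothetical.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

final class MappedFileWriteExample {

    /** Maps `size` bytes of the file, writes the message at offset 0, then flushes. */
    static void writeAndFlush(Path file, byte[] message, int size) throws IOException {
        try (FileChannel channel = FileChannel.open(file,
                StandardOpenOption.CREATE, StandardOpenOption.READ, StandardOpenOption.WRITE)) {
            MappedByteBuffer buffer = channel.map(FileChannel.MapMode.READ_WRITE, 0, size);
            buffer.put(message); // reaches the page cache; not yet guaranteed on disk
            buffer.force();      // synchronous flush; an async design would defer this call
        }
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("mapped-write", ".dat");
        file.toFile().deleteOnExit();
        byte[] message = "hello".getBytes(StandardCharsets.UTF_8);
        writeAndFlush(file, message, 4096);
        byte[] onDisk = Files.readAllBytes(file);
        System.out.println("file size: " + onDisk.length);
        System.out.println("content:   " + new String(onDisk, 0, message.length, StandardCharsets.UTF_8));
    }
}
```

In an asynchronous flush design, the put would return to the caller immediately and a background thread would call force() later, trading a small durability window for lower write latency.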

Figure 6.

In most cases, reads and writes in this mode are fast. However, when the operating system writes back dirty pages, reclaims memory, or swaps memory in and out, large read-write latencies emerge, and the storage engine occasionally falls into a high-latency state.

In response, RocketMQ uses a variety of optimization techniques, such as memory preallocation, file prewarming, mlock system calls, and read/write splitting, to eliminate the latency caused by the page cache while keeping its advantages.
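File prewarming can be sketched as touching one byte in every page of a freshly mapped file, so that page faults are taken up front rather than on the message write path. The example below assumes the 4 KB page size mentioned earlier and a newly created file (it writes zeros, so it would overwrite existing data). It is an illustration rather than RocketMQ's implementation, and it does not call mlock, which plain Java cannot do without a native binding.

```java
import java.io.IOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;

final class MappedFilePrewarm {
    static final int PAGE_SIZE = 4096; // assumed page size, per the 4 KB figure above

    /** Creates (or extends) the file to `size` bytes and maps it read-write. */
    static MappedByteBuffer mapNewFile(Path file, int size) throws IOException {
        try (FileChannel channel = FileChannel.open(file,
                StandardOpenOption.CREATE, StandardOpenOption.READ, StandardOpenOption.WRITE)) {
            // The mapping stays valid after the channel is closed.
            return channel.map(FileChannel.MapMode.READ_WRITE, 0, size);
        }
    }

    /** Touches one byte per page so each page is faulted in; returns pages touched. */
    static int prewarm(MappedByteBuffer buffer) {
        int pages = 0;
        for (int pos = 0; pos < buffer.capacity(); pos += PAGE_SIZE) {
            buffer.put(pos, (byte) 0); // writing a single byte faults in the whole page
            pages++;
        }
        buffer.force(); // push the touched pages to disk once, ahead of real traffic
        return pages;
    }

    public static void main(String[] args) throws IOException {
        Path file = Files.createTempFile("prewarm", ".dat");
        file.toFile().deleteOnExit();
        MappedByteBuffer buffer = mapNewFile(file, 1024 * 1024);
        System.out.println("pages touched: " + prewarm(buffer));
    }
}
```

Prewarming alone does not stop the kernel from reclaiming those pages later, which is why it is typically paired with locking the pages in memory.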

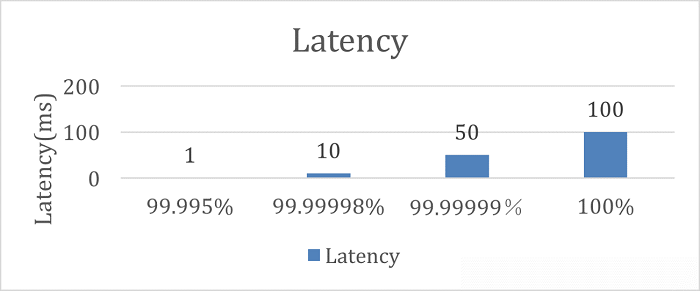

With the optimizations above, RocketMQ eliminates high latency when writing messages, and it has been tested by Singles' Day. Below is the diagram of message write durations after optimization.

After optimization, 99.995% of RocketMQ message writes complete within 1 ms, and 100% within 100 ms, as shown below.

Figure 7.

Latency is crucial to both IT systems and user experience. Many factors can cause high latency, and there are several ways to investigate them. With the optimizations described here, RocketMQ eliminates high latency when writing messages. In the second part of this blog, we will discuss the keys to ensuring capacity along with the highly available architecture of RocketMQ.


Raja_KT February 14, 2019 at 6:50 am

I am new to RocketMQ. Does it have GUI for publish , subscribe and broker system?