By Harshit Khandelwal, Alibaba Cloud Community Blog author.

Data in the real world can be messy or incomplete, often riddled with errors. Finding insights or solving problems with such data can be extremely tedious and difficult. Therefore, it is important that data is converted into a format that is understandable before it can be processed. This is essentially what data preprocessing is. It is an important piece of the puzzle because it helps in removing various kinds of inconsistencies from the data.

Some ways that data can be handled during preprocessing include:

This tutorial discusses the preprocessing stage of the machine learning pipeline, covering the ways in which data is handled and paying special attention to the techniques of standardization, normalization, feature selection and extraction, and data reduction. Finally, it goes over how you can implement these techniques in a simple way.

This is part of a series of blog tutorials that discuss the basics of machine learning.

Generally speaking, standardization is the process of implementing or developing standards based on a consensus. These standards help to maximize compatibility, repeatability, and quality, among other things. In machine learning, standardization serves as a means of putting a data metric on the same scale throughout the data. This technique can be applied to various kinds of data, such as pixel values for images or, more specifically, medical data. Many machine learning algorithms, such as support-vector machines and k-nearest neighbors, rely on standardization to rescale the data.
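As a rough illustration, the sketch below assumes the common z-score form of standardization (subtract the mean, divide by the standard deviation), which the text above does not spell out. The `standardize` helper and the `pixels` values are hypothetical and exist only for this example.

```python
import statistics

def standardize(values):
    """Rescale values to zero mean and unit (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        # A constant feature has no spread, so map every value to 0.0.
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]

# Hypothetical feature column, for example pixel intensities of an image.
pixels = [0, 64, 128, 192, 255]
z_scores = standardize(pixels)
print([round(z, 3) for z in z_scores])
```

After this step the column has a mean of 0 and a standard deviation of 1, so features measured on very different scales become directly comparable.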

Normalization is the process of reducing unwanted variation either within or between arrays of data. Min-max scaling is a very common normalization technique in data preprocessing. This approach bounds a data feature to a range, usually either between 0 and 1 or between -1 and 1. This creates a standardized range, which makes comparisons easier and more objective. Consider the following example: you need to compare temperatures from cities around the world. One issue is that temperatures recorded in NYC are in Fahrenheit, but those in Poland are listed in Celsius. At the most fundamental level, the normalization process takes the data from each city and converts the temperatures to use the same unit of measure. Compared to standardization, normalization produces smaller standard deviations because of the bounded range, which can work to suppress the effect of outliers.
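The temperature example can be sketched in a few lines: first bring every reading to the same unit, then apply min-max scaling. The readings, variable names, and helper functions below are hypothetical and exist only for illustration.

```python
def fahrenheit_to_celsius(temp_f):
    """Convert a Fahrenheit reading to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0

def min_max_scale(values, new_min=0.0, new_max=1.0):
    """Linearly map values so the smallest becomes new_min and the largest new_max."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant feature cannot be spread over a range; pin it to new_min.
        return [new_min for _ in values]
    return [new_min + (v - lo) * (new_max - new_min) / (hi - lo) for v in values]

nyc_f = [32.0, 50.0, 68.0, 86.0]     # hypothetical NYC readings in Fahrenheit
poland_c = [-5.0, 5.0, 15.0, 25.0]   # hypothetical Poland readings in Celsius

# Step 1: use one unit of measure for every city.
nyc_c = [fahrenheit_to_celsius(t) for t in nyc_f]
all_c = nyc_c + poland_c

# Step 2: bound the combined feature to [0, 1] or to [-1, 1].
scaled_01 = min_max_scale(all_c)
scaled_sym = min_max_scale(all_c, -1.0, 1.0)
print([round(v, 3) for v in scaled_01])
```

Scaling the combined list, rather than each city separately, keeps the relative differences between the cities intact.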

Feature selection and extraction are techniques in which either important new features are built from the existing features (extraction) or the most relevant features are chosen from a pool of variables (selection).

The techniques of extraction and selection are quite different from each other. Extraction creates new features from other features, whereas selection returns a subset of the most relevant features from many features. The selection technique is generally used for scenarios where there are many features, whereas the extraction technique is used when there are few features. A very important use of feature selection is in text analysis, where there are many features but very few samples (these samples are the records themselves), and an important use of feature extraction is in image processing.
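To make the contrast concrete, here is a minimal sketch on a made-up table. Selection is illustrated with a simple variance filter (one of many possible criteria, chosen here only for brevity), and extraction is illustrated by deriving a body mass index column from two existing columns. All names and values are hypothetical.

```python
import statistics

# Hypothetical records: (height_m, weight_kg, constant_flag).
names = ["height_m", "weight_kg", "constant_flag"]
rows = [
    (1.60, 55.0, 1.0),
    (1.75, 72.0, 1.0),
    (1.82, 90.0, 1.0),
    (1.68, 60.0, 1.0),
]

def select_by_variance(rows, names, threshold=0.0):
    """Selection: keep only the columns whose variance exceeds the threshold."""
    columns = list(zip(*rows))
    keep = [i for i, col in enumerate(columns)
            if statistics.pvariance(col) > threshold]
    kept_names = [names[i] for i in keep]
    kept_rows = [tuple(row[i] for i in keep) for row in rows]
    return kept_names, kept_rows

def extract_bmi(rows):
    """Extraction: build a new feature from two existing ones."""
    return [weight / height ** 2 for height, weight, _ in rows]

kept_names, kept_rows = select_by_variance(rows, names)
bmi = extract_bmi(rows)
print(kept_names)                   # the constant column is dropped
print([round(b, 1) for b in bmi])   # one new value per record
```

Selection returns a subset of the original columns unchanged, while extraction returns a column that did not exist before.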

These techniques are mostly used because they offer the following benefits:

Developing a machine learning model on large amounts of data can be a computationally heavy and tedious task, so data reduction is a crucial step in data preprocessing. Data reduction can also make analyzing and mining huge amounts of data easier and simpler. It is an important process no matter which machine learning algorithm is used.

Data reduction is sometimes thought to have a negative impact on the final results of machine learning algorithms, but this is simply not true. The process of data reduction does not remove data, but rather converts the data format from one type to another for better performance. The following are some ways in which data is reduced:

This section shows how you can apply some of these preprocessing techniques on Alibaba Cloud.

Several rescaling and feature manipulation methods can be applied directly to each record of the data, but doing so manually is tiring and extremely difficult. So let's look at how these methods work in action to give you an easier and simpler workflow.

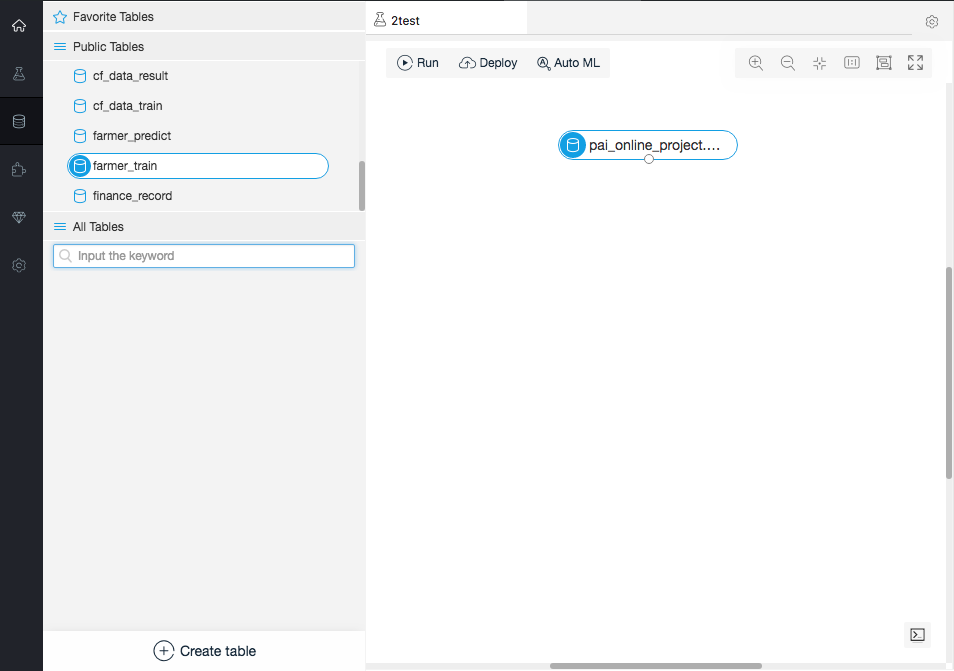

First, let's start by getting a dataset that's already present in the cloud. The data used in this example is farmer data:

So the data is as follows:

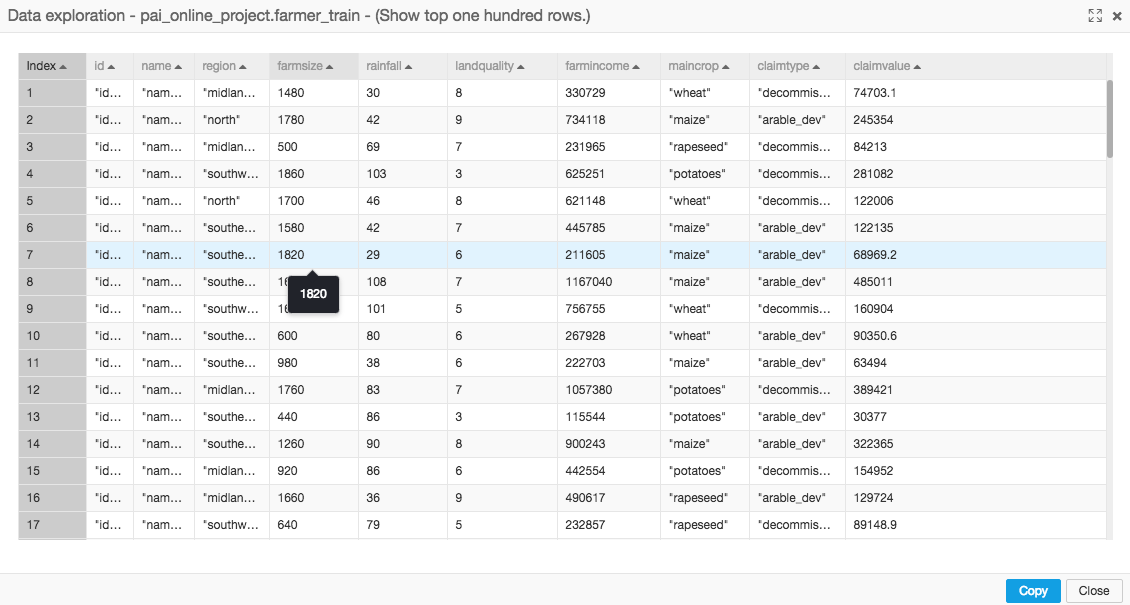

Next, you can use the normalization node, which is under the category Data Preprocessing:

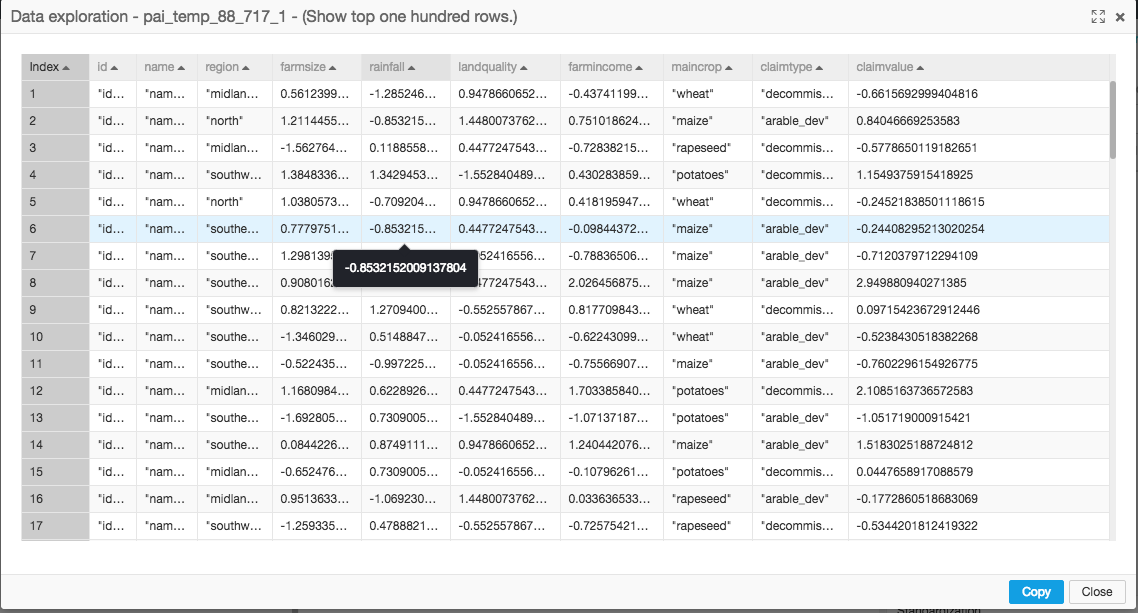

After applying normalization, the data gets converted as shown below:

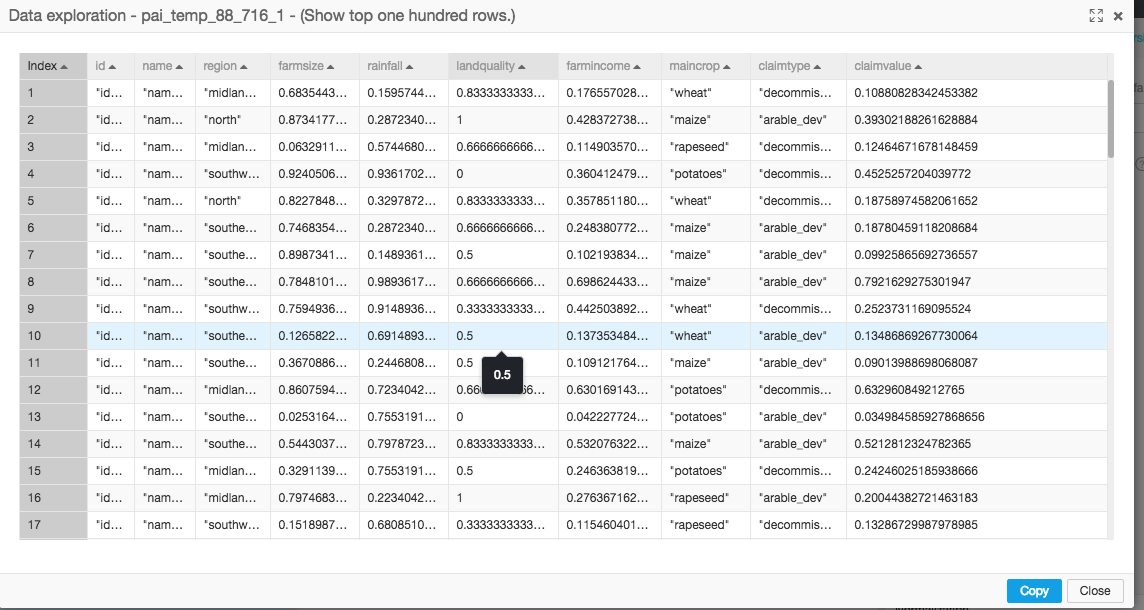

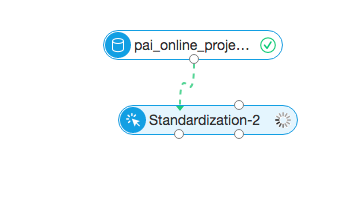

So let's see what kind of results standardization produces:

The results are as follows:

The difference between the results of the above output and the normalization output can be clearly seen. The data is much more regular.

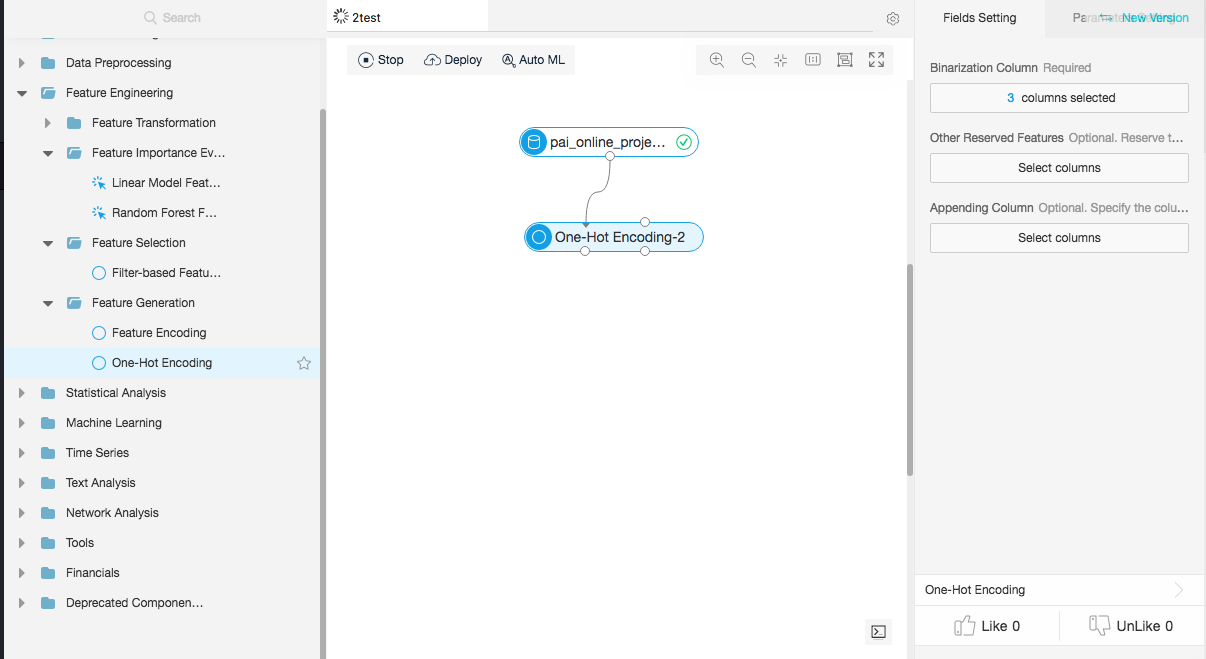

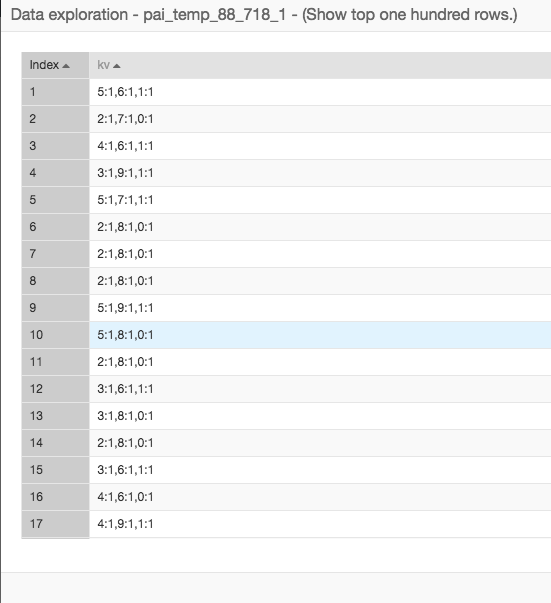

Now let's move on to a very useful feature extraction technique: one-hot encoding. This technique creates a separate column for each category in the data (it only works for categorical data). It does this through binary encoding when creating the new columns, meaning that it puts 1 where the value exists and 0 elsewhere.
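Outside the platform, the same idea can be sketched in plain Python. The `one_hot_encode` helper and the `crops` column are hypothetical and only illustrate the encoding; they are not the platform's implementation.

```python
def one_hot_encode(values):
    """Return the sorted categories and one 0/1 row per input value."""
    categories = sorted(set(values))
    encoded = [[1 if value == category else 0 for category in categories]
               for value in values]
    return categories, encoded

# Hypothetical categorical column.
crops = ["wheat", "rice", "wheat", "corn"]
categories, encoded = one_hot_encode(crops)

print(categories)
for value, row in zip(crops, encoded):
    print(value, row)
```

Each row contains exactly one 1, in the column that matches the record's category.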

The data produced is as follows:

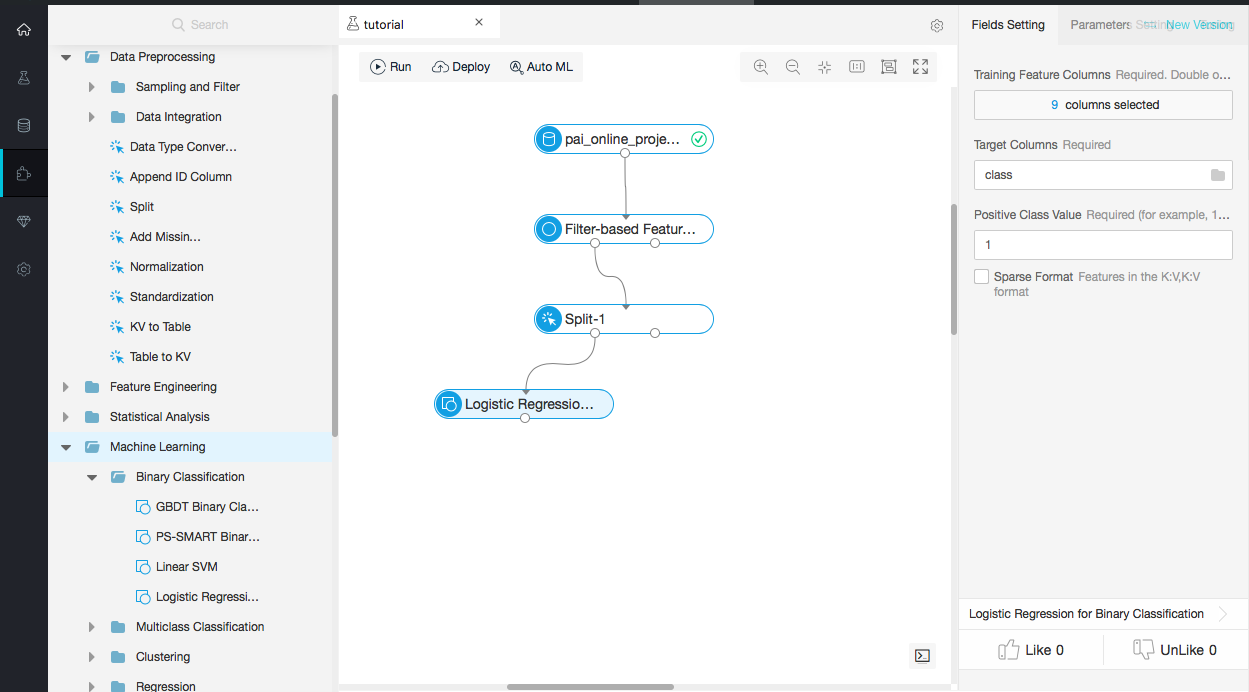

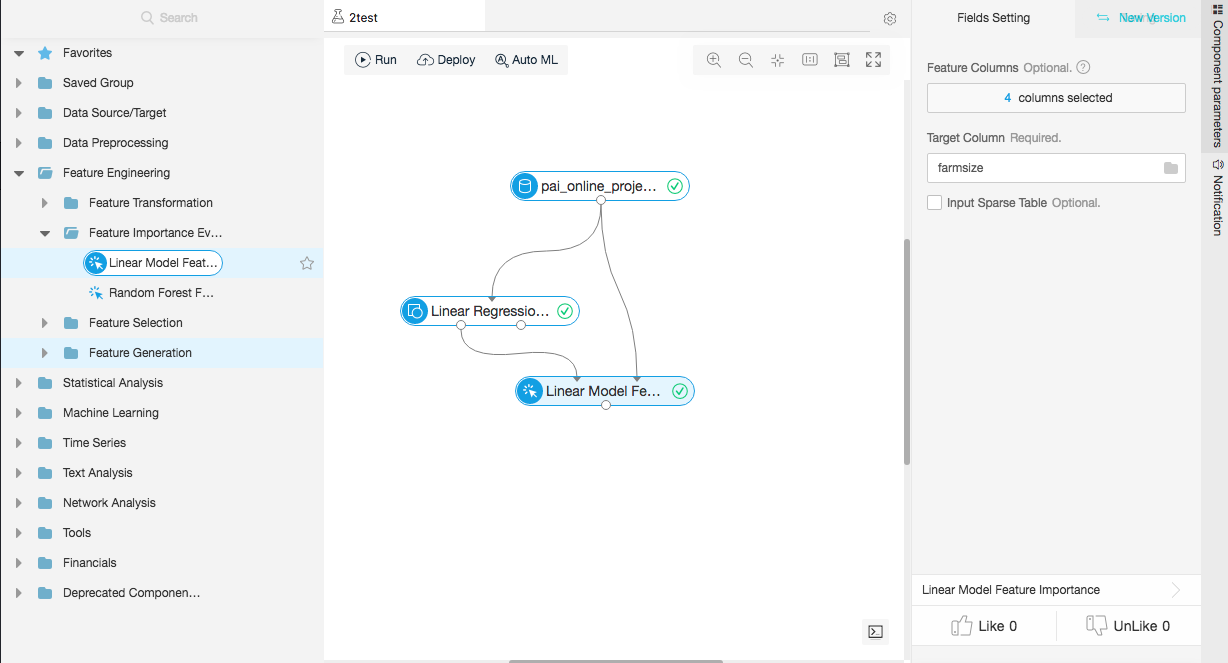

Next, you want to select features for the model you want to develop. Below, a linear regression model is used to generate feature importance (that is, to determine which features are most important). It gives a value for each column that indicates how much that column contributes to the accuracy of the model.
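One way to approximate this idea by hand is to fit a linear regression on standardized features and rank the features by the absolute size of their coefficients. This is an assumption about how importance can be scored, not a description of what the platform's node computes. The data, feature names, and helpers below are hypothetical, and the model is fitted with plain gradient descent to keep the sketch dependency-free.

```python
import statistics

def standardize_columns(rows):
    """Z-score each column so that coefficient sizes are comparable."""
    scaled_cols = []
    for col in zip(*rows):
        mean, std = statistics.fmean(col), statistics.pstdev(col)
        scaled_cols.append([(v - mean) / std for v in col])
    return [list(row) for row in zip(*scaled_cols)]

def fit_linear_regression(rows, targets, lr=0.1, epochs=2000):
    """Fit weights and bias by batch gradient descent on mean squared error."""
    n, d = len(rows), len(rows[0])
    weights, bias = [0.0] * d, 0.0
    for _ in range(epochs):
        errors = [sum(w * x for w, x in zip(weights, row)) + bias - y
                  for row, y in zip(rows, targets)]
        grads = [2.0 / n * sum(e * row[j] for e, row in zip(errors, rows))
                 for j in range(d)]
        weights = [w - lr * g for w, g in zip(weights, grads)]
        bias -= lr * 2.0 / n * sum(errors)
    return weights, bias

# Hypothetical data: the target depends strongly on rainfall, weakly on
# fertilizer, and not at all on plot_id.
names = ["rainfall", "fertilizer", "plot_id"]
raw = [(1, 5, 2), (2, 3, 6), (3, 6, 1), (4, 1, 5), (5, 4, 3), (6, 2, 4)]
targets = [4.0 * r + 1.0 * f for r, f, _ in raw]

weights, bias = fit_linear_regression(standardize_columns(raw), targets)
ranked = sorted(zip(names, (abs(w) for w in weights)),
                key=lambda pair: pair[1], reverse=True)
for name, score in ranked:
    print(f"{name}: {score:.3f}")
```

Standardizing first matters here: without it, a feature measured in large units would get a small coefficient and look unimportant regardless of its real effect.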

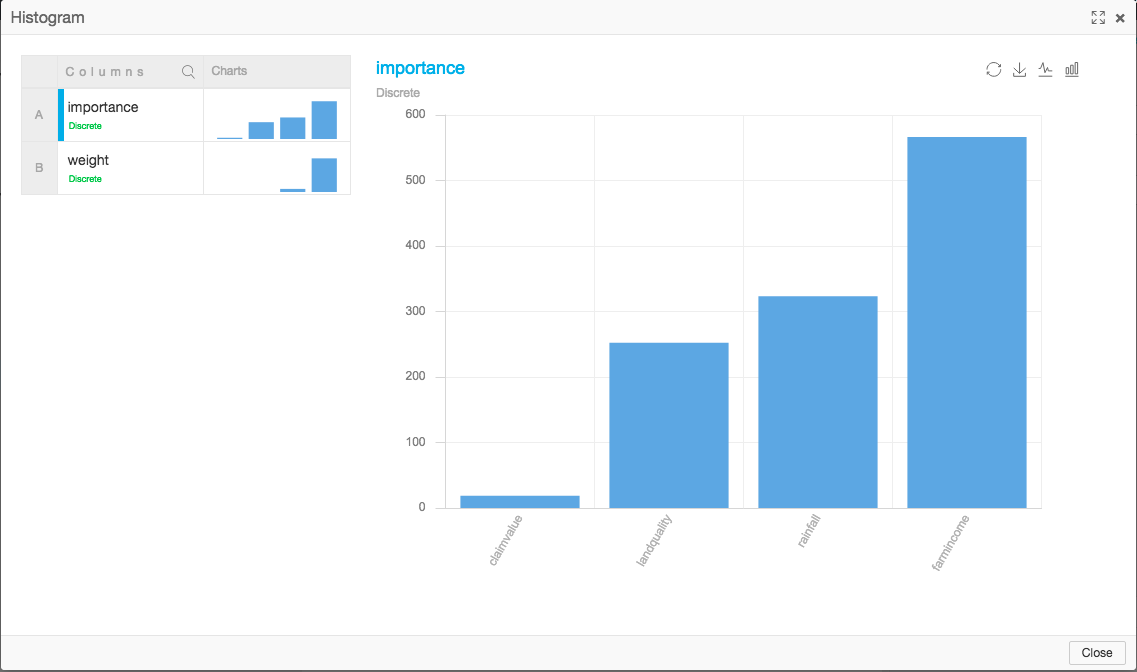

The result we got from linear model feature importance is depicted in the histogram below:

In the preprocessing aspect of the machine learning pipeline, some important techniques are standardization, normalization, feature selection and extraction, and data reduction. Of these, standardization and normalization are techniques that help bring data within a well-defined range. Next, the techniques of feature selection and extraction help to reduce the dimensionality of the dataset while also creating new features out of old features.
