By Bi Ran

Machine learning projects inherit the complexity of software development and add challenges of their own, because they are data-driven. These challenges include long workflows, inconsistent data versions, difficulty tracing experiments and reproducing their results, and high model iteration costs. To address these issues, many enterprises have built internal machine learning platforms to manage the machine learning lifecycle, such as Google TensorFlow Extended (TFX), Facebook FBLearner Flow, and Uber Michelangelo.

However, these platforms depend on each enterprise's internal infrastructure, so they cannot be fully open-sourced. At their core, these platforms are built on a machine learning workflow framework. This framework lets data scientists define their own machine learning workflows flexibly and reuse existing data processing and model training capabilities to manage the machine learning lifecycle.

Google has substantial experience in building machine learning workflow platforms. TFX is a production-scale machine learning platform that supports Google's core businesses, including search, translation, and video. More importantly, TFX makes building machine learning projects more efficient.

The Kubeflow team at Google fully open-sourced Kubeflow Pipelines (KFP) at the end of 2018. KFP adopts the design of TFX. The main difference between them is that KFP runs on Kubernetes, whereas TFX runs on Borg.

The Kubeflow Pipelines platform consists of the following components:

You can use Kubeflow Pipelines to achieve the following goals:

After learning about these features, you may want to get started with Kubeflow Pipelines. However, you must first overcome the following challenges:

The Alibaba Cloud Container Service team provides a Kustomize-based Kubeflow Pipelines deployment solution for users in China. Unlike the basic Kubeflow services, Kubeflow Pipelines depends on stateful services such as MySQL and MinIO, so their data must be persisted and backed up. In this example, Alibaba Cloud SSD cloud disks store the MySQL and MinIO data.

With this solution, you can deploy the latest version of Kubeflow Pipelines on Alibaba Cloud on its own, without deploying the other Kubeflow services.

First, install Kustomize. If your operating system is Linux or macOS, run the following commands:

```shell
opsys=linux # or darwin on macOS
curl -s https://api.github.com/repos/kubernetes-sigs/kustomize/releases/latest |\
grep browser_download |\
grep "$opsys" |\
cut -d '"' -f 4 |\
xargs curl -O -L
mv kustomize_*_${opsys}_amd64 /usr/bin/kustomize
chmod u+x /usr/bin/kustomize
```

If your operating system is Windows, download kustomize_2.0.3_windows_amd64.exe.
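Before continuing, you may want to confirm that the tools this procedure calls directly are available on the machine where you run the deployment commands. The following POSIX shell function is a minimal sketch; its name, `require_tools`, is hypothetical and is not part of Kustomize or the kubeflow-aliyun repository.

```shell
#!/bin/sh
# Hypothetical preflight helper (not part of kubeflow-aliyun):
# prints each tool that is not on PATH and returns non-zero if any is missing.
require_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: check for the two tools that the later steps call directly.
# require_tools kustomize kubectl
```

git, openssl, and htpasswd are installed with yum in the steps that follow, so they are left out of the example call.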

1. Connect to the Kubernetes cluster through SSH. For more information, click here.

2. Download the source code.

```shell
yum install -y git
git clone --recursive https://github.com/aliyunContainerService/kubeflow-aliyun
```

3. Configure security settings.

3.1 Configure a TLS certificate. If you do not have a TLS certificate, run the following commands to generate one:

```shell
yum install -y openssl
domain="pipelines.kubeflow.org"
openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
  -keyout kubeflow-aliyun/overlays/ack-auto-clouddisk/tls.key \
  -out kubeflow-aliyun/overlays/ack-auto-clouddisk/tls.crt \
  -subj "/CN=$domain/O=$domain"
```

If you have a TLS certificate, upload the private key and the certificate to kubeflow-aliyun/overlays/ack-auto-clouddisk/tls.key and kubeflow-aliyun/overlays/ack-auto-clouddisk/tls.crt, respectively.
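Whether you generated the certificate or uploaded your own, a key that does not belong to the certificate is a common cause of TLS errors. The sketch below uses a standard OpenSSL check: it compares the RSA modulus of the certificate with that of the private key and, if they match, prints the certificate subject and expiry date. The function name `check_tls_pair` is hypothetical, and the check assumes an RSA key such as the one created by the command above.

```shell
#!/bin/sh
# Hypothetical helper: verify that a certificate and an RSA private key
# belong together by comparing their moduli, then print subject and expiry.
check_tls_pair() {
  crt="$1"; key="$2"
  crt_mod=$(openssl x509 -noout -modulus -in "$crt") || return 1
  key_mod=$(openssl rsa -noout -modulus -in "$key" 2>/dev/null) || return 1
  if [ "$crt_mod" != "$key_mod" ]; then
    echo "certificate and key do not match" >&2
    return 1
  fi
  # Both files match: show who the certificate is for and when it expires.
  openssl x509 -noout -subject -enddate -in "$crt"
}

# Usage:
# check_tls_pair kubeflow-aliyun/overlays/ack-auto-clouddisk/tls.crt \
#                kubeflow-aliyun/overlays/ack-auto-clouddisk/tls.key
```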

3.2 Set a password for the admin account.

```shell
yum install -y httpd-tools
htpasswd -c kubeflow-aliyun/overlays/ack-auto-clouddisk/auth admin
```

Enter and confirm the password when prompted:

```text
New password:
Re-type new password:
Adding password for user admin
```

4. Use Kustomize to generate a YAML configuration file.

```shell
cd kubeflow-aliyun/
kustomize build overlays/ack-auto-clouddisk > /tmp/ack-auto-clouddisk.yaml
```

5. Check the region and zone of your Kubernetes cluster, and replace the default region ID and zone ID in the configuration file with those of your cluster. For example, if your cluster is in the cn-hangzhou-g zone, run the following commands:

```shell
sed -i.bak 's/regionid: cn-beijing/regionid: cn-hangzhou/g' \
  /tmp/ack-auto-clouddisk.yaml
sed -i.bak 's/zoneid: cn-beijing-e/zoneid: cn-hangzhou-g/g' \
  /tmp/ack-auto-clouddisk.yaml
```

We recommend that you check whether the /tmp/ack-auto-clouddisk.yaml configuration file is updated.
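One way to act on this recommendation is to confirm that no regionid or zoneid entry still carries the default cn-beijing values. The following is a minimal sketch; `verify_zone` is a hypothetical helper, and it assumes the entries appear in the same `regionid: <value>` and `zoneid: <value>` form that the sed commands above match.

```shell
#!/bin/sh
# Hypothetical helper: confirm that every regionid/zoneid entry in the
# generated manifest now uses the cluster's region and zone.
# Assumes entries of the form "regionid: <value>" and "zoneid: <value>".
verify_zone() {
  file="$1"; region="$2"; zone="$3"
  # Fail early if the manifest has no region/zone entries at all.
  if ! grep -qE 'regionid:|zoneid:' "$file"; then
    echo "no regionid/zoneid entries found in $file" >&2
    return 1
  fi
  # Count entries whose value is not the expected region or zone.
  stale=$(grep -E 'regionid:|zoneid:' "$file" \
    | grep -cvE "regionid: ${region}\$|zoneid: ${zone}\$")
  if [ "$stale" -ne 0 ]; then
    echo "$stale regionid/zoneid entries were not updated" >&2
    return 1
  fi
  echo "all regionid/zoneid entries use $region / $zone"
}

# Usage (values from the cn-hangzhou-g example):
# verify_zone /tmp/ack-auto-clouddisk.yaml cn-hangzhou cn-hangzhou-g
```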

6. Change the container image address from gcr.io to registry.aliyuncs.com.

```shell
# Escape the dot so that only the literal string "gcr.io" is replaced.
sed -i.bak 's/gcr\.io/registry.aliyuncs.com/g' \
  /tmp/ack-auto-clouddisk.yaml
```

We recommend that you check whether the /tmp/ack-auto-clouddisk.yaml configuration file is updated.
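Similarly, you can confirm that no image still references gcr.io and list the distinct images that the cluster will pull. This is a minimal sketch; `check_images` is a hypothetical helper, and it assumes each image reference sits on its own `image:` line in the generated YAML.

```shell
#!/bin/sh
# Hypothetical helper: fail if any gcr.io reference remains in the manifest,
# otherwise print each distinct image reference once.
check_images() {
  file="$1"
  # Show any leftover gcr.io lines (with line numbers) on stderr.
  if grep -n 'gcr\.io' "$file" >&2; then
    echo "gcr.io references remain in $file" >&2
    return 1
  fi
  # Strip the "image:" key (also in "- image:" list items) and any quotes.
  sed -n 's/^[[:space:]-]*image:[[:space:]]*//p' "$file" | tr -d '"' | sort -u
}

# Usage:
# check_images /tmp/ack-auto-clouddisk.yaml
```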

7. Set the disk size, for example, to 200 GiB.

```shell
sed -i.bak 's/storage: 100Gi/storage: 200Gi/g' \
  /tmp/ack-auto-clouddisk.yaml
```

8. Verify the Kubeflow Pipelines YAML configuration file.

```shell
kubectl create --validate=true --dry-run=client -f /tmp/ack-auto-clouddisk.yaml
```

On kubectl versions earlier than 1.18, use --dry-run=true instead.

9. Use kubectl to deploy the Kubeflow Pipelines service.

```shell
kubectl create -f /tmp/ack-auto-clouddisk.yaml
```

10. Query the Ingress to obtain the connection information of the Kubeflow Pipelines service. In this example, the IP address of the Kubeflow Pipelines service is 112.124.193.271, so the URL of the Kubeflow Pipelines console is https://112.124.193.271/pipeline/.

```shell
kubectl get ing -n kubeflow
```

```text
NAME             HOSTS   ADDRESS           PORTS     AGE
ml-pipeline-ui   *       112.124.193.271   80, 443   11m
```

11. Log on to the Kubeflow Pipelines console.

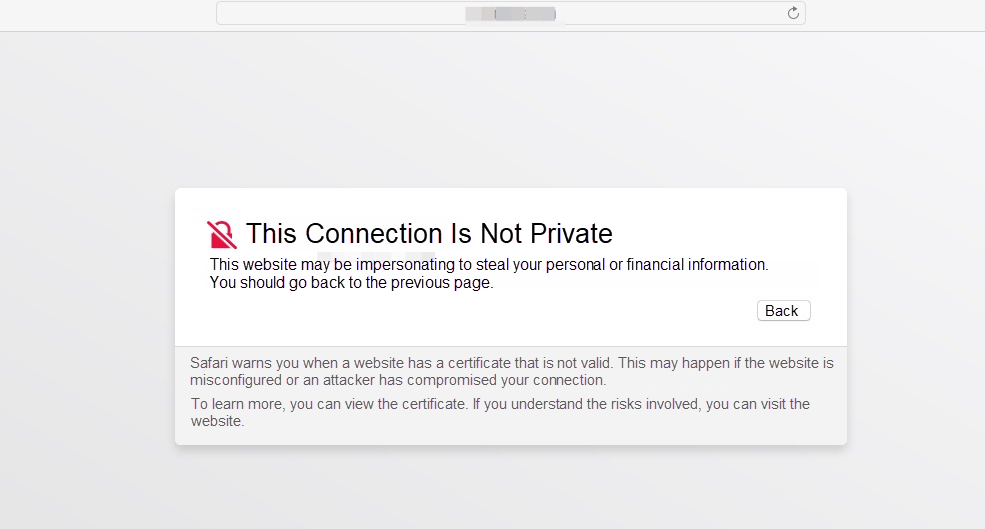

If you use a self-signed certificate, the browser warns that the connection is not private. Click Advanced, and then click the link to proceed to the website.

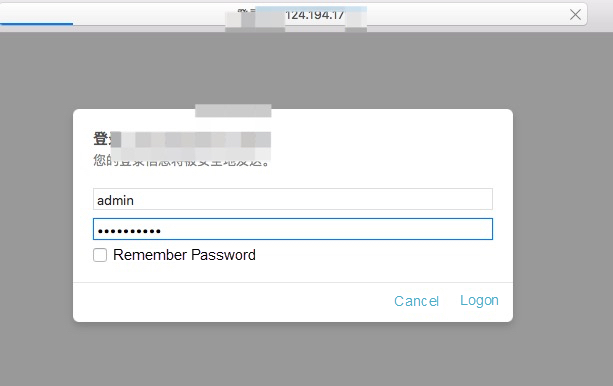

Enter the username admin and the password that you set in step 3.2.

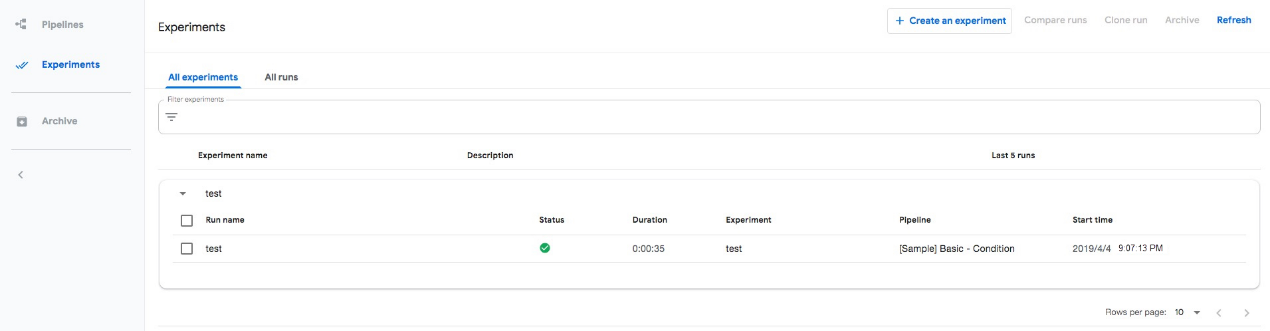

You can then manage and run training tasks in the Kubeflow Pipelines console.
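If the console does not load or a run stays pending, some Kubeflow Pipelines components may still be starting. The sketch below filters the output of `kubectl get pods -n kubeflow --no-headers` and prints the pods that are not fully ready. The function name `not_ready_pods` is hypothetical, and the sketch assumes kubectl's default column order (NAME, READY, STATUS, RESTARTS, AGE).

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods --no-headers` output on stdin
# and print the name and status of every pod that is not fully ready.
# Pods in the Completed state (finished jobs) are not reported.
not_ready_pods() {
  awk '{
    split($2, r, "/")   # READY column, for example "1/2"
    if ($3 != "Completed" && (r[1] != r[2] || $3 != "Running"))
      print $1, $3
  }'
}

# Usage:
# kubectl get pods -n kubeflow --no-headers | not_ready_pods
```

An empty result means every pod reports all of its containers as ready.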

1. Why are Alibaba Cloud SSD cloud disks used in this example?

With Alibaba Cloud SSD cloud disks, you can periodically back up data to prevent Kubeflow Pipelines metadata loss.

2. How can I back up a cloud disk?

To back up the data stored in a cloud disk, you can manually create snapshots of the cloud disk, or apply an automatic snapshot policy to it so that snapshots are created on a schedule.

3. How can I remove the Kubeflow Pipelines deployment?

Complete the following tasks to remove the Kubeflow Pipelines deployment:

```shell
kubectl delete -f /tmp/ack-auto-clouddisk.yaml
```

4. How can I use an existing cloud disk to store data if I do not want the system to automatically create a cloud disk for me?

For more information, click here.

This document introduced the background of Kubeflow Pipelines, the major issues that Kubeflow Pipelines resolves, and how to use Kustomize to deploy Kubeflow Pipelines for machine learning on Alibaba Cloud. To learn more, see the document about how to use Kubeflow Pipelines to develop a machine learning workflow.
