Join us at the Alibaba Cloud ACtivate Online Conference on March 5-6 to challenge assumptions, exchange ideas, and explore what is possible through digital transformation.

By Hitesh Jethva, Alibaba Cloud Tech Share Author. Tech Share is Alibaba Cloud's incentive program to encourage the sharing of technical knowledge and best practices within the cloud community.

Patroni is an open source tool that can be used for creating and managing your own customized, high-availability cluster for PostgreSQL with Python. It can be used to handle tasks such as replication, backups and restorations. Patroni also provides an HAProxy configuration, giving your application a single endpoint for connecting to the cluster's leader. If you are looking to quickly deploy HA PostgreSQL cluster in the data center, then Patroni is definitely worth considering.

In this tutorial, we will be configuring a highly available PostgreSQL cluster using Patroni on Alibaba Cloud Elastic Compute Service (ECS) with Ubuntu 16.04. We will need four ECS instances; we will use Instance1 as a master and Instance2 as a slave, configure replication from master to slaves, and configure automatically failover to the slave if the master goes down.

First, log in to your Alibaba Cloud ECS Console. Create a new ECS instance, choosing Ubuntu 16.04 as the operating system with at least 2GB RAM. Connect to your ECS instance and log in as the root user.

Once you are logged into your Ubuntu 16.04 instance, run the following command to update your base system with the latest available packages.

apt-get update -yIn this tutorial, we will be using the following setup:

| Instance | Application | IP Address |

| Instance1 | Postgres, Patroni | 192.168.0.105 |

| Instance2 | Postgres, Patroni | 192.168.0.104 |

| Instance3 | etcd | 192.168.0.103 |

| Instance4 | HAProxy | 192.168.0.102 |

First, you will need to install PostgreSQL on Instance1 and Instance2. By default, PostgreSQL is available in the Ubuntu 16.04 repository. You can install it by just running the following command:

apt-get install postgresql -yOnce the installation is completed, verify the status of PostgreSQL using the following command:

systemctl status postgresqlOutput:

● postgresql.service - PostgreSQL RDBMS

Loaded: loaded (/lib/systemd/system/postgresql.service; enabled; vendor preset: enabled)

Active: active (exited) since Fri 2018-09-21 20:03:04 IST; 1min 7s ago

Main PID: 3994 (code=exited, status=0/SUCCESS)

CGroup: /system.slice/postgresql.service

Sep 21 20:03:04 Node1 systemd[1]: Starting PostgreSQL RDBMS...

Sep 21 20:03:04 Node1 systemd[1]: Started PostgreSQL RDBMS.

Sep 21 20:03:24 Node1 systemd[1]: Started PostgreSQL RDBMS.Next, stop the PostgreSQL service so that Patroni can manage it:

systemctl stop postgresqlNext, you will need to create a symlinks from /usr/lib/postgresql/9.5/bin. Because, Patroni uses some tools that comes with PostgreSQL. You can create symlink with the following command:

ln -s /usr/lib/postgresql/9.5/bin/* /usr/sbin/You will need to install Patroni on Instance1 and Instance2.

Before installing Patroni, you will need to install Python and Python-pip to your server. You can install them with the following command:

apt-get install python3-pip python3-dev libpq-dev

sudo -H pip3 install --upgrade pipNext, install Patroni using the pip command:

pip install patroni

pip install python-etcdEtcd is a distributed key value store that provides a reliable way to store data across a cluster of machines. Here, we will use Etcd to store the state of the Postgres cluster. So, both Postgres nodes make use of etcd to keep the Postgres cluster up and running.

You can install Etcd on Instance3 with the following command:

apt-get install etcd -yHAProxy is free, open source software that provides a high availability load balancer and proxy server for TCP and HTTP-based applications that spreads requests across multiple servers.

Here, we will use HAProxy to forwards the connection Master or Slave node which is currently the master and online.

You can install HAProxy on Instance4 with the following command:

apt-get install haproxy -yEtcd default configuration file is located at /etc/default directory. You will need to make some changes in etcd file.

nano /etc/default/etcdAdd the following lines:

ETCD_LISTEN_PEER_URLS="http://192.168.0.103:2380,http://127.0.0.1:7001"

ETCD_LISTEN_CLIENT_URLS="http://127.0.0.1:2379, http://192.168.0.103:2379"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.0.103:2380"

ETCD_INITIAL_CLUSTER="etcd0=http://192.168.0.103:2380,"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.0.103:2379"

ETCD_INITIAL_CLUSTER_TOKEN="cluster1"

ETCD_INITIAL_CLUSTER_STATE="new"Save and close the file, then restart Etcd service with the following command:

systemctl restart etcdYou can now check the status of Etcd with the following command:

systemctl status etcdOutput:

● etcd.service - etcd - highly-available key value store

Loaded: loaded (/lib/systemd/system/etcd.service; enabled; vendor preset: enabled)

Active: active (running) since Fri 2018-09-21 22:27:47 IST; 5s ago

Docs: https://github.com/coreos/etcd

man:etcd

Main PID: 4504 (etcd)

CGroup: /system.slice/etcd.service

└─4504 /usr/bin/etcd

Sep 21 22:27:47 Node2 etcd[4504]: starting server... [version: 2.2.5, cluster version: to_be_decided]

Sep 21 22:27:47 Node2 systemd[1]: Started etcd - highly-available key value store.

Sep 21 22:27:47 Node2 etcd[4504]: added local member ce2a822cea30bfca [http://localhost:2380 http://localhost:7001] to cluster 7e27652122e8b2ae

Sep 21 22:27:47 Node2 etcd[4504]: set the initial cluster version to 2.2

Sep 21 22:27:48 Node2 etcd[4504]: ce2a822cea30bfca is starting a new election at term 5

Sep 21 22:27:48 Node2 etcd[4504]: ce2a822cea30bfca became candidate at term 6

Sep 21 22:27:48 Node2 etcd[4504]: ce2a822cea30bfca received vote from ce2a822cea30bfca at term 6

Sep 21 22:27:48 Node2 etcd[4504]: ce2a822cea30bfca became leader at term 6

Sep 21 22:27:48 Node2 etcd[4504]: raft.node: ce2a822cea30bfca elected leader ce2a822cea30bfca at term 6

Sep 21 22:27:48 Node2 etcd[4504]: published {Name:hostname ClientURLs:[http://192.168.0.103:2379]} to cluster 7e27652122e8b2aePatroni uses YAML to store their configuration. So, you will need to create a configuration file for Patroni on Instance1 and Instance2:

nano /etc/patroni.ymlAdd the following lines:

scope: postgres

namespace: /db/

name: postgresql0

restapi:

listen: 192.168.0.105:8008

connect_address: 192.168.0.105:8008

etcd:

host: 192.168.0.103:2379

bootstrap:

dcs:

ttl: 30

loop_wait: 10

retry_timeout: 10

maximum_lag_on_failover: 1048576

postgresql:

use_pg_rewind: true

initdb:

- encoding: UTF8

- data-checksums

pg_hba:

- host replication replicator 127.0.0.1/32 md5

- host replication replicator 192.168.0.105/0 md5

- host replication replicator 192.168.0.104/0 md5

- host all all 0.0.0.0/0 md5

users:

admin:

password: admin

options:

- createrole

- createdb

postgresql:

listen: 192.168.0.105:5432

connect_address: 192.168.0.105:5432

data_dir: /data/patroni

pgpass: /tmp/pgpass

authentication:

replication:

username: replicator

password: password

superuser:

username: postgres

password: password

parameters:

unix_socket_directories: '.'

tags:

nofailover: false

noloadbalance: false

clonefrom: false

nosync: falseSave and close the file, when you are finished.

Next, create a data directory for Patroni on Instance1 and Instance2:

mkdir -p /data/patroniNext, change the ownership and permissions of the data directory:

chown postgres:postgres /data/patroni

chmod 700 /data/patroniNext, you will need to create a startup script for Patroni to manage and monitor Patroni. You can do this with the following command:

nano /etc/systemd/system/patroni.serviceAdd the following lines:

[Unit]

Description=Runners to orchestrate a high-availability PostgreSQL

After=syslog.target network.target

[Service]

Type=simple

User=postgres

Group=postgres

ExecStart=/usr/local/bin/patroni /etc/patroni.yml

KillMode=process

TimeoutSec=30

Restart=no

[Install]

WantedBy=multi-user.targSave and close the file. Then start PostgreSQL and Patroni service on both Instance1 and Instance2 with the following command:

systemctl start patroni

systemctl start postgresqlNext, check the status of Patroni using the following command:

systemctl status patroniOutput:

● patroni.service - Runners to orchestrate a high-availability PostgreSQL

Loaded: loaded (/etc/systemd/system/patroni.service; disabled; vendor preset: enabled)

Active: active (running) since Fri 2018-09-21 22:22:22 IST; 3min 17s ago

Main PID: 3286 (patroni)

CGroup: /system.slice/patroni.service

├─3286 /usr/bin/python3 /usr/local/bin/patroni /etc/patroni.yml

├─3305 postgres -D /data/patroni --config-file=/data/patroni/postgresql.conf --max_worker_processes=8 --max_locks_per_transaction=64

├─3308 postgres: postgres: checkpointer process

├─3309 postgres: postgres: writer process

├─3310 postgres: postgres: stats collector process

├─3315 postgres: postgres: postgres postgres 192.168.0.105(54472) idle

├─3320 postgres: postgres: wal writer process

└─3321 postgres: postgres: autovacuum launcher process

Sep 21 22:24:52 Node1 patroni[3286]: 2018-09-21 22:24:52,329 INFO: Lock owner: postgresql0; I am postgresql0

Sep 21 22:24:52 Node1 patroni[3286]: 2018-09-21 22:24:52,391 INFO: no action. i am the leader with the lockNote: Repeat all the above steps on both Instance1 and Instance2.

PostgreSQL cluster is now up and running. It's time to configure HAProxy to forward connection receive from PostgreSQL client to the Master node.

You can configure HAProxy by editing /etc/haproxy/haproxy.cfg file:

nano /etc/haproxy/haproxy.cfgAdd the following lines:

global

maxconn 100

defaults

log global

mode tcp

retries 2

timeout client 30m

timeout connect 4s

timeout server 30m

timeout check 5s

listen stats

mode http

bind *:7000

stats enable

stats uri /

listen postgres

bind *:5000

option httpchk

http-check expect status 200

default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions

server postgresql_192.168.0.105_5432 192.0.2.11:5432 maxconn 100 check port 8008

server postgresql_192.168.0.104_5432 192.0.2.12:5432 maxconn 100 check port 8008Save and close the file. Then, restart HAProxy with the following command:

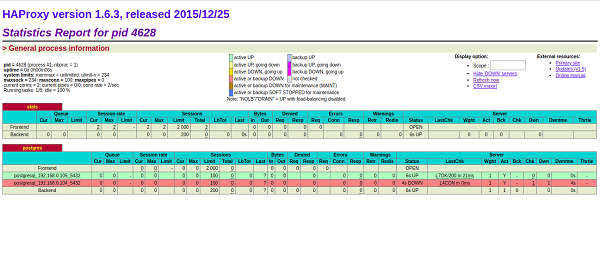

systemctl restart haproxyNow, open your web browser and type the URL http://192.168.0.102:7000 (HAProxy Instance4 IP address). You will be redirected to the HAProxy dashboard as shown below:

In the above image, the postgresql_192.168.0.105_5432 row is highlighted in green. That means 192.168.0.105 is currently acting as the master.

If you shut down the primary node (Instance1), 192.168.0.104 (Instance2) should be acting as the master.

When you restart the Instance1, it will rejoin the cluster as a slave and sync up with the master.

2,593 posts | 793 followers

FollowAlibaba Clouder - March 15, 2019

Alibaba Clouder - July 6, 2018

Alibaba Clouder - June 4, 2019

Alibaba Clouder - May 22, 2019

Alibaba Clouder - June 10, 2019

Alibaba Clouder - December 26, 2018

Patroni uses DCS (Distributed Configuration Store) to attain consensus. Only the node that holds the leader lock can be the master and the leader lock is obtained via DCS. If the master node doesn’t hold the leader lock, then it will be demoted immediately by Patroni to run as a standby. This way, at any point in time, there can only be one master running in the system.

We have tried the above installation process in which failover is working but replication is not working. Any changes we have to do or any suggestions?

2,593 posts | 793 followers

Follow ECS(Elastic Compute Service)

ECS(Elastic Compute Service)

Elastic and secure virtual cloud servers to cater all your cloud hosting needs.

Learn More ApsaraDB RDS for PostgreSQL

ApsaraDB RDS for PostgreSQL

An on-demand database hosting service for PostgreSQL with automated monitoring, backup and disaster recovery capabilities

Learn More Marketplace

Marketplace

Marketplace is an online market for users to search and quickly use the software as image for Alibaba Cloud products.

Learn MoreMore Posts by Alibaba Clouder

5848363536543818 July 19, 2019 at 11:43 am

How does the two postgresql instances sync with each other?