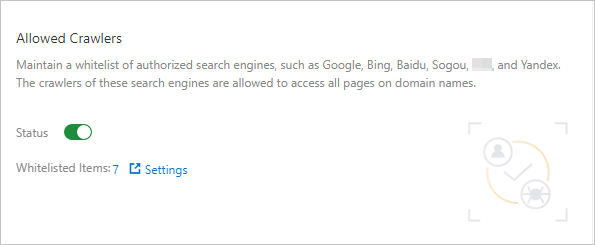

The Allowed Crawlers function maintains a whitelist of authorized search engines, such as Google, Bing, Baidu, Sogou, and Yandex. The crawlers of these search engines are allowed to access all pages of your protected domain names.

Background information

Rules defined in the Allowed Crawlers function allow requests from specific crawlers to the protected domain name based on the Alibaba Cloud crawler library. The library is built by analyzing the network traffic that flows through Alibaba Cloud and capturing the characteristics of requests initiated by crawlers. It is updated dynamically in real time and contains the crawler IP addresses of mainstream search engines, including Google, Baidu, Sogou, Bing, and Yandex.
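To illustrate how an IP-based crawler library can attribute a request to a search engine, here is a minimal sketch in Python. It assumes the library is a mapping from search engine name to CIDR ranges; the names `CRAWLER_LIBRARY` and `identify_crawler` are hypothetical, and the ranges are RFC 5737 documentation networks used as placeholders, not the real crawler addresses held in the Alibaba Cloud crawler library.

```python
import ipaddress
from typing import Optional

# Hypothetical crawler library: search engine name -> IP ranges.
# These are RFC 5737 documentation networks used as placeholders,
# NOT the real addresses of any search engine's crawlers.
CRAWLER_LIBRARY = {
    "Google": ["192.0.2.0/26"],
    "Bing": ["192.0.2.64/26"],
    "Baidu": ["198.51.100.0/25"],
    "Sogou": ["198.51.100.128/25"],
    "Yandex": ["203.0.113.0/24"],
}

# Parse the CIDR strings once so each lookup does not re-parse them.
_NETWORKS = {
    engine: [ipaddress.ip_network(cidr) for cidr in cidrs]
    for engine, cidrs in CRAWLER_LIBRARY.items()
}


def identify_crawler(client_ip: str) -> Optional[str]:
    """Return the engine whose ranges contain client_ip, or None if no range matches."""
    addr = ipaddress.ip_address(client_ip)
    for engine, networks in _NETWORKS.items():
        if any(addr in net for net in networks):
            return engine
    return None


if __name__ == "__main__":
    print(identify_crawler("192.0.2.10"))   # Google (placeholder range)
    print(identify_crawler("203.0.113.7"))  # Yandex (placeholder range)
    print(identify_crawler("10.0.0.1"))     # None: not in the library
```

A real library is updated continuously, so in practice the ranges would be refreshed from a maintained source rather than hard-coded as they are in this sketch.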

Prerequisites

- Subscription WAF instance: The instance runs the Pro, Business, or Enterprise edition, and the Bot Management module is enabled.

- Your website is added to WAF. For more information, see Tutorials.

Procedure

Log on to the Web Application Firewall (WAF) console. In the top menu bar, select the resource group and region for your WAF instance: Chinese Mainland or Outside Chinese Mainland.

In the left navigation pane, choose .

On the Website Protection page, switch to the domain name to configure.

- Click the Bot Management tab, find the Allowed Crawlers section. Then, turn on Status and click Settings.

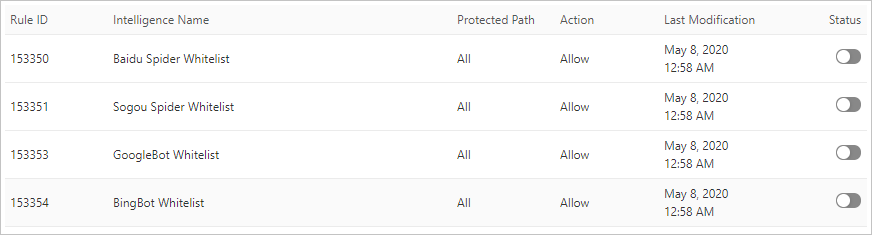

- In the Allowed Crawlers list, find the target rule by Intelligence Name, and turn on Status.

The default rules allow only crawler requests from the following search engines: Google, Bing, Baidu, Sogou, and Yandex. To allow requests from the crawlers of all search engines, enable the Legit Crawling Bots rule.
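The relationship between the per-engine default rules and the Legit Crawling Bots rule can be sketched as a simple decision function. This is a hypothetical model, not the WAF implementation: it assumes each enabled rule is identified by a name (one per search engine, plus `Legit Crawling Bots`), that the requesting engine has already been identified from the crawler library, and `ExampleSearch` is an invented engine name used only to show a crawler outside the default five. The sketch models only whether the allowlist matches.

```python
from typing import Optional, Set

# Engines covered by the default rules, as listed in the text above.
DEFAULT_ENGINES = {"Google", "Bing", "Baidu", "Sogou", "Yandex"}
# Rule name from the console; treating it as a catch-all is the assumption modeled here.
LEGIT_CRAWLING_BOTS = "Legit Crawling Bots"


def is_allowed(engine: Optional[str], enabled_rules: Set[str]) -> bool:
    """Return True if a request from `engine` matches an enabled allowlist rule.

    engine: search engine matched in the crawler library, or None if the
            source matched no known crawler.
    enabled_rules: hypothetical names of rules whose Status is turned on.
    """
    if engine is None:
        return False  # not a known crawler, so the allowlist does not apply
    if LEGIT_CRAWLING_BOTS in enabled_rules:
        return True  # covers crawlers of all search engines in the library
    return engine in enabled_rules


defaults = set(DEFAULT_ENGINES)
print(is_allowed("Google", defaults))                                 # True
print(is_allowed("ExampleSearch", defaults))                          # False
print(is_allowed("ExampleSearch", defaults | {LEGIT_CRAWLING_BOTS}))  # True
print(is_allowed(None, defaults | {LEGIT_CRAWLING_BOTS}))             # False
```

Keeping the Legit Crawling Bots check separate from the per-engine lookup mirrors the choice described above: leave the default rules as they are for the five listed engines, or enable one broader rule for every search engine crawler.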

The default rules only allow crawler requests from the following search engines: Google, Bing, Baidu, Sogou and Yandex. You can enable the Legit Crawling Bots rule to allow requests from all search engine crawlers.