You can enable the stub_status module (ngx_http_stub_status_module) of NGINX to expose a dedicated status page that displays key metrics of your NGINX server in real time. The metrics include Active connections, Reading, Writing, and Waiting. You can use Logtail plug-ins to collect these metrics from the status page as logs, and then query and analyze the logs in Simple Log Service to continuously monitor your NGINX cluster.

Prerequisites

Logtail is installed on your server. For more information, see Install Logtail on a Linux server or Install Logtail on a Windows server.

For a Linux server, install Logtail V0.16.0 or later. For a Windows server, install Logtail V1.0.0.8 or later.
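To check an existing Linux installation against these minimum versions, you can compare version strings in the shell. The following bash function, version_at_least, is a hypothetical helper that is not part of Logtail. It is a minimal sketch that relies on the version ordering of GNU sort -V, so that 0.9.0 sorts before 0.16.0.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit status 0) if version $1 is greater than
# or equal to version $2. Relies on the version ordering of GNU `sort -V`.
version_at_least() {
  local installed=$1 required=$2
  # After a version sort, the smaller of the two strings comes first.
  # If that smallest string is the required version, installed >= required.
  [ "$(printf '%s\n' "$required" "$installed" | sort -V | head -n 1)" = "$required" ]
}
```

For example, version_at_least 1.0.0.8 0.16.0 && echo "supported" prints supported. How you obtain the installed version depends on your setup. On a default Linux installation the version is typically recorded in /usr/local/ilogtail/app_info.json, but treat that path as an assumption and verify it on your server.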

Step 1: Configure the stub_status module

In this topic, Linux is used as an example to describe the configuration procedure.

Run the following commands to install and start NGINX:

```shell
sudo yum install nginx
sudo systemctl start nginx
```

Run the following command to check whether the NGINX stub_status module is supported. nginx -V writes its build options to standard error, so the command redirects them to grep with 2>&1. For more information, see Module ngx_http_stub_status_module.

```shell
nginx -V 2>&1 | grep -o with-http_stub_status_module
```

If the following information is returned, the module is supported:

```
with-http_stub_status_module
```

Configure the stub_status module on your server.

Run the following command to open the /etc/nginx/nginx.conf file:

```shell
sudo vim /etc/nginx/nginx.conf
```

Press the i key to enter edit mode. Add the following code to the server {...} section. For more information about nginx_status, see Enable Nginx Status Page.

```nginx
location /nginx_status {
    stub_status on;              # Enable the stub_status module.
    access_log off;              # Do not write access logs for requests to the status page.
    allow ${Server IP address};  # Allow requests from this IP address, such as the server where Logtail runs.
    deny all;                    # Deny access requests from all other IP addresses to the status page.
}
```

Press the Esc key to exit edit mode. Then, enter :wq to save and close the file.

Run sudo nginx -t to check the syntax of the configuration file, and then run sudo systemctl reload nginx to apply the change.

Run the following command on your server to verify the configuration:

```shell
curl http://${Server IP address}/nginx_status
```

If output similar to the following is returned, the configuration is successful:

```
Active connections: 1
server accepts handled requests
 2507455 2507455 2512972
Reading: 0 Writing: 1 Waiting: 0
```
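The numbers on the status page map to the fields that the processor_regex plug-in extracts in Step 2: connection, accepts, handled, requests, reading, writing, and waiting. As a local sanity check, the following bash sketch uses awk to print the same field names as key=value pairs. parse_stub_status is a hypothetical helper, and it assumes the four-line output format that the stub_status page returns.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read stub_status output on stdin and print each metric
# as key=value, using the same field names as the processor_regex Keys in Step 2.
parse_stub_status() {
  awk '
    # "Active connections: 1" -> the third field is the count.
    /^Active connections:/ { print "connection=" $3 }
    # " 2507455 2507455 2512972" -> accepts, handled, requests.
    /^[ \t]*[0-9]+[ \t]+[0-9]+[ \t]+[0-9]+[ \t]*$/ {
      print "accepts=" $1; print "handled=" $2; print "requests=" $3
    }
    # "Reading: 0 Writing: 1 Waiting: 0" -> values are fields 2, 4, and 6.
    /^Reading:/ { print "reading=" $2; print "writing=" $4; print "waiting=" $6 }
  '
}
```

For example, curl -s http://<server-ip>/nginx_status | parse_stub_status prints one pair per line, starting with connection=1 and accepts=2507455 for the sample output.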

Step 2: Collect NGINX monitoring logs

Log on to the Simple Log Service console.

On the right side of the page that appears, click the Quick Data Import card.

Click Custom Data Plug-in.

Select the project and Logstore. Then, click Next.

Create a machine group.

If a machine group is available, click Use Existing Machine Groups.

If no machine groups are available, perform the following steps to create a machine group. In this example, an Elastic Compute Service (ECS) instance is used.

On the ECS Instances tab, select Manually Select Instances. Then, select the ECS instance that you want to use and click Create.

For more information, see Install Logtail on ECS instances.

Important: If you want to collect logs from an ECS instance that belongs to an Alibaba Cloud account other than the one that owns Simple Log Service, from a server in a data center, or from a server of a third-party cloud service provider, you must manually install Logtail. For more information, see Install Logtail on a Linux server or Install Logtail on a Windows server.

After you manually install Logtail, you must configure a user identifier for the server. For more information, see Configure a user identifier.

After Logtail is installed, click Complete Installation.

In the Create Machine Group step, configure the Name parameter and click Next.

Simple Log Service allows you to create IP address-based machine groups and custom identifier-based machine groups. For more information, see Create an IP address-based machine group and Create a custom identifier-based machine group.

Confirm that the machine group is displayed in the Applied Server Groups section and click Next.

Important: If you apply a machine group immediately after you create it, the heartbeat status of the machine group may be FAIL. This occurs because the machine group is not yet connected to Simple Log Service. To resolve this issue, click Automatic Retry. If the issue persists, see What do I do if no heartbeat connections are detected on Logtail?

In the Configure Data Source step, configure Configuration Name and Plug-in Configuration. Then, click Next.

inputs is required and is used to configure the data source settings for the Logtail configuration.

Important: You can specify only one type of data source in inputs.

processors is optional and is used to configure the data processing settings for the Logtail configuration to parse data. You can specify one or more processing methods.

If your logs cannot be parsed based only on the setting of inputs, you can configure processors in the Plug-in Configuration field to add plug-ins for data processing. For example, you can extract fields, extract log time, mask data, and filter logs. For more information, see Use Logtail plug-ins to process data.

```json
{
  "inputs": [
    {
      "type": "metric_http",
      "detail": {
        "IntervalMs": 60000,
        "Addresses": [
          "http://${Server IP address 1}/nginx_status",
          "http://${Server IP address 2}/nginx_status",
          "http://${Server IP address 3}/nginx_status"
        ],
        "IncludeBody": true
      }
    }
  ],
  "processors": [
    {
      "type": "processor_regex",
      "detail": {
        "SourceKey": "content",
        "Regex": "Active connections: (\\d+)\\s+server accepts handled requests\\s+(\\d+)\\s+(\\d+)\\s+(\\d+)\\s+Reading: (\\d+) Writing: (\\d+) Waiting: (\\d+)[\\s\\S]*",
        "Keys": ["connection", "accepts", "handled", "requests", "reading", "writing", "waiting"],
        "FullMatch": true,
        "NoKeyError": true,
        "NoMatchError": true,
        "KeepSource": false
      }
    }
  ]
}
```

The following table describes the key parameters.

| Parameter | Type | Required | Description |
|---|---|---|---|
| type | string | Yes | The type of the data source. Set the value to metric_http. |
| IntervalMs | int | Yes | The interval between two consecutive requests. Unit: milliseconds. |
| Addresses | array | Yes | The URLs that you want to monitor. |
| IncludeBody | boolean | No | Specifies whether to collect the body of the HTTP response. Default value: false. If you set this parameter to true, the body is collected and stored in the content field. |
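Logtail requests every URL in Addresses from the server where Logtail runs, so the allow directive on each NGINX server must include that host. Before you save the configuration, you can request each URL once from the Logtail host. The following bash sketch defines a hypothetical helper, check_status_urls, that uses curl to print the HTTP status code of each URL. A 403 response usually means that the request was rejected by the deny all directive because the allow directive does not include the Logtail host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: request each status URL once and report its HTTP status.
# Returns a non-zero exit status if any URL does not return HTTP 200.
check_status_urls() {
  local url code failed=0
  for url in "$@"; do
    # -s: silent, -o /dev/null: discard the body, -w: print only the status code.
    # curl prints 000 if no HTTP response is received (for example, a timeout).
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url" || true)
    if [ "$code" = "200" ]; then
      echo "OK   $url"
    else
      echo "FAIL $url (HTTP $code)"
      failed=1
    fi
  done
  return "$failed"
}
```

For example, run check_status_urls http://<server-1-ip>/nginx_status http://<server-2-ip>/nginx_status on the Logtail host and replace the placeholders with your server addresses.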

You can view the collected logs 1 minute after the Logtail configuration is created. The following example shows a collected log. By default, Simple Log Service generates the nginx_status dashboard to display the results of query and analysis on the collected logs.

```
_address_:http://10.10.XX.XX/nginx_status
_http_response_code_:200
_method_:GET
_response_time_ms_:1.83716261897
_result_:success
accepts:33591200
connection:450
handled:33599550
reading:626
requests:39149290
waiting:68
writing:145
```

Step 3: Query and analyze logs

Log on to the Simple Log Service console.

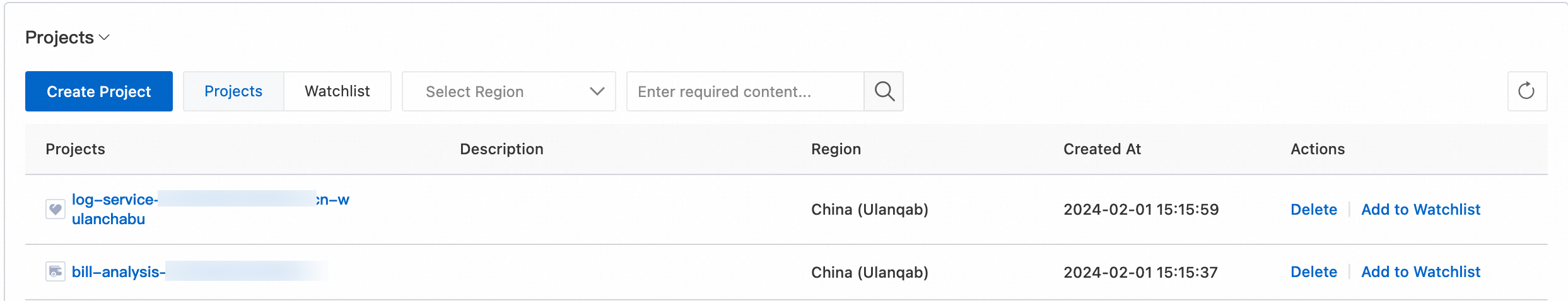

In the Projects section, click the project that you want to manage.

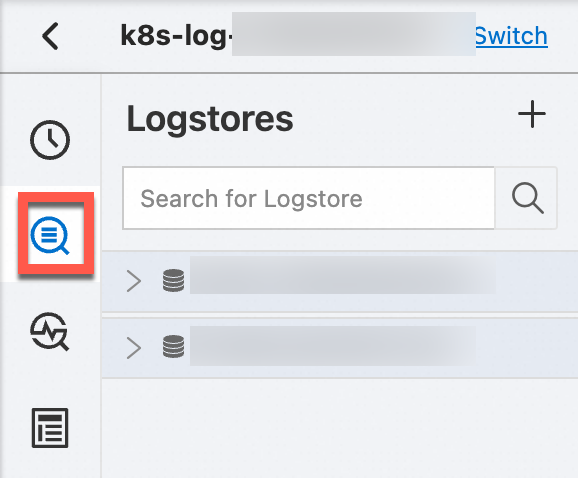

In the left-side navigation pane, click Log Storage. In the Logstores list, click the Logstore that you want to manage.

Enter a query statement in the search box, click Last 15 Minutes, and then specify a query time range.

For more information, see Step 1: Enter a query statement.

Query logs

Query the logs of a specific IP address:

```
_address_ : 10.10.0.0
```

Query the requests whose response time exceeds 100 milliseconds:

```
_response_time_ms_ > 100
```

Query the requests for which HTTP status code 200 is not returned:

```
not _http_response_code_ : 200
```

Analyze logs

Obtain the average numbers of waiting connections, reading connections, writing connections, and active connections at 5-minute intervals.

```
* | select
  avg(waiting) as waiting,
  avg(reading) as reading,
  avg(writing) as writing,
  avg(connection) as connection,
  from_unixtime(__time__ - __time__ % 300) as time
group by __time__ - __time__ % 300
order by time
limit 1440
```

Obtain the top 10 servers that have the largest number of waiting connections.

```
* | select
  max(waiting) as max_waiting,
  _address_,
  from_unixtime(max(__time__)) as time
group by _address_
order by max_waiting desc
limit 10
```

Obtain the number of IP addresses.

```
* | select count(distinct(_address_)) as total
```

Obtain the number of addresses for which status requests failed.

```
not _result_ : success | select count(distinct(_address_))
```

Obtain the addresses of the 10 most recent failed requests.

```
not _result_ : success | select
  _address_ as address,
  from_unixtime(__time__) as time
order by __time__ desc
limit 10
```

Obtain the total number of requests at 5-minute intervals.

```
* | select
  avg(handled) * count(distinct(_address_)) as total_handled,
  avg(requests) * count(distinct(_address_)) as total_requests,
  from_unixtime(__time__ - __time__ % 300) as time
group by __time__ - __time__ % 300
order by time
limit 1440
```

Obtain the average request latency at 5-minute intervals.

```
* | select
  avg(_response_time_ms_) as avg_delay,
  from_unixtime(__time__ - __time__ % 300) as time
group by __time__ - __time__ % 300
order by time
limit 1440
```

Obtain the numbers of successful requests and failed requests.

Successful requests:

```
_http_response_code_ : 200 | select count(1)
```

Failed requests:

```
not _http_response_code_ : 200 | select count(1)
```
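Several of the analysis queries in this section group logs by __time__ - __time__ % 300. That expression rounds a UNIX timestamp in seconds down to the start of its 5-minute (300-second) window, so every log in the same window shares one group key. The following bash sketch reproduces the arithmetic locally. bucket_start is a hypothetical helper name and is not part of Simple Log Service.

```shell
#!/usr/bin/env bash
# Hypothetical helper that mirrors the SQL expression __time__ - __time__ % 300:
# round a UNIX timestamp (seconds) down to the start of its window.
bucket_start() {
  local t=$1 width=${2:-300}   # width defaults to 300 seconds (5 minutes)
  echo $(( t - t % width ))
}
```

For example, bucket_start 1700000123 prints 1700000100, because 1700000123 % 300 = 23. Because each group covers 300 seconds, limit 1440 returns at most 1,440 × 300 s = 432,000 s, or 5 days, of data points.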