In business scenarios such as finance, logistics, and the Internet of Things (IoT), systems generate massive amounts of time series data, such as transaction records, trajectory data, and monitoring logs. Performing real-time analytics on this terabyte-scale data often presents performance challenges. PolarDB for PostgreSQL provides a cost-effective solution for storing massive time series data with features such as partitioned tables and tiered storage of hot and cold data. Building on this foundation, the In-Memory Column Index (IMCI) feature lets you perform real-time, high-performance analytics on massive time series data without complex data pre-processing, helping you effectively unlock the value of your data.

Solution overview

Operational flow

Data writing: Business applications write time series data, such as transaction records, to a PolarDB for PostgreSQL cluster.

Columnstore index: Create a columnstore index on the base table. PolarDB for PostgreSQL automatically maintains the columnstore data in the table. Column store organizes data by column, which provides a higher compression ratio and reduces I/O consumption during aggregate queries because only the relevant columns need to be read.

Query acceleration: Analytical queries, such as candlestick aggregations, are directed by the optimizer or a

Hintto use the columnstore index. The query engine then uses columnar storage and parallel processing to scan and aggregate the data and returns the result.

Solution advantages

Simple to use: No business modifications or complex extract, transform, and load (ETL) processes are required. You can simply create a columnstore index for the base table to transparently accelerate analytical queries.

Rich features: Natively supports partitioned tables and includes a rich set of built-in time series analysis functions, such as

time_bucket,first, andlast, to simplify your SQL development.

Performance results

Data volume: 100 million data entries over a 2-day span, with approximately 50 million entries per day.

Candlestick aggregation query: Includes five metrics within a specified period: highest value, lowest value, opening price, closing price, and total transaction volume.

Columnstore index degree of parallelism: 8.

The time taken is as follows (in seconds):

Scenario

Second-level candlestick aggregation

Minute-level candlestick aggregation

Hourly K-line aggregation

Day-level candlestick aggregation

Full data aggregation (100 million entries)

3.41

0.95

0.93

0.91

1-day data aggregation (approx. 50 million entries)

1.88

0.82

0.81

0.76

12-hour data aggregation (approx. 25 million entries)

0.89

0.55

0.53

N/A

1-hour data aggregation (approx. 6 million entries)

0.41

0.39

0.37

N/A

Implementation steps

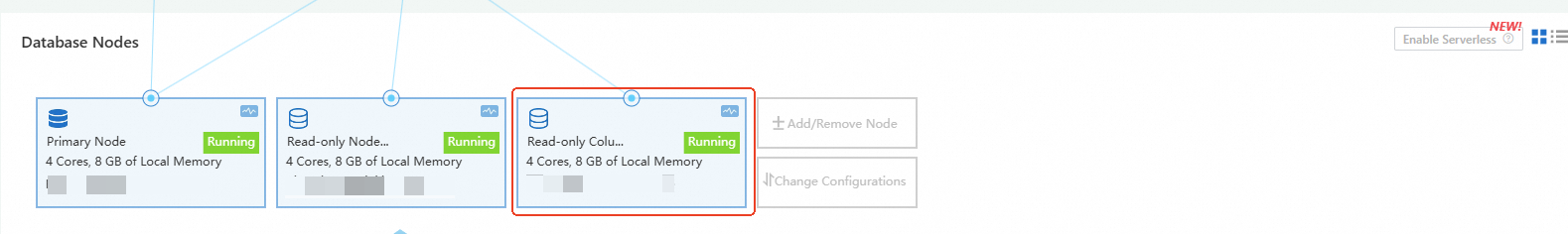

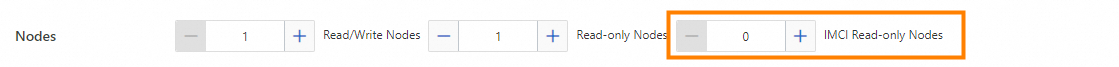

Step 1: Prepare the environment

Confirm that your cluster version and configuration meet the following requirements:

Cluster versions:

PostgreSQL 16 (minor engine version 2.0.16.8.3.0 or later)

PostgreSQL 14 (minor engine version 2.0.14.10.20.0 or later)

NoteYou can view the minor engine version number in the console or by running the

SHOW polardb_version;statement. If the minor engine version does not meet the requirement, you must upgrade the minor engine version.The source table must have a primary key. The primary key column must be included when you create the columnstore index.

The

wal_levelparameter must be set tological. This adds the information required to support logical replication to the write-ahead logging (WAL).NoteYou can set the wal_level parameter in the console. Modifying this parameter restarts the cluster. Plan your business operations accordingly and proceed with caution.

Enable the columnstore index feature.

The method for enabling the columnstore index feature varies depending on the minor engine version of your PolarDB for PostgreSQL cluster:

Step 2: Prepare the data

This solution uses a transaction records table and simulates the generation of 100 million transaction data entries over approximately two days. During this period, transactions occur daily from 8:00 to 16:00, with an estimated daily data volume of about 40 million entries.

-- Transaction records table CREATE TABLE market_trades ( trade_id BIGINT GENERATED ALWAYS AS IDENTITY PRIMARY KEY, -- Auto-increment primary key trade_ts TIMESTAMP, -- Transaction timestamp market_id VARCHAR, -- Market ID price DECIMAL, -- Transaction price amount DECIMAL, -- Transaction amount insert_ts TIMESTAMP -- System write timestamp ); INSERT INTO market_trades(trade_ts, market_id, price, amount, insert_ts) SELECT trade_ts, market_id, price, amount, trade_ts + (random() * 500)::INT * INTERVAL '1 millisecond' AS insert_ts FROM ( -- ======================== -- 1. Day 1 peak: 2025-06-01 8:00 - 16:00, 40 million entries -- ======================== SELECT '2025-06-01 08:00:00'::TIMESTAMP + (random() * 28800)::INT * INTERVAL '1 second' + -- 28800 seconds = 8 hours (random() * 1000)::INT * INTERVAL '1 millisecond' AS trade_ts, CASE WHEN random() < 0.6 THEN 'BTC-USDT' ELSE 'ETH-USDT' END AS market_id, CASE WHEN random() < 0.6 THEN 30000 + (random() * 1000) ELSE 2000 + (random() * 100) END AS price, random() * 10 + 0.1 AS amount FROM generate_series(1, 40000000) UNION ALL -- ======================== -- 2. Day 1 off-peak: 2025-06-01 16:00 - 2025-06-02 08:00, 10 million entries -- ======================== SELECT CASE WHEN random() < 0.5 THEN -- 16:00 - 24:00 '2025-06-01 16:00:00'::TIMESTAMP + (random() * 28800)::INT * INTERVAL '1 second' ELSE -- 00:00 - 08:00 (early morning of day 2) '2025-06-02 00:00:00'::TIMESTAMP + (random() * 28800)::INT * INTERVAL '1 second' END + (random() * 1000)::INT * INTERVAL '1 millisecond' AS trade_ts, CASE WHEN random() < 0.6 THEN 'BTC-USDT' ELSE 'ETH-USDT' END AS market_id, CASE WHEN random() < 0.6 THEN 30000 + (random() * 1000) ELSE 2000 + (random() * 100) END AS price, random() * 10 + 0.1 AS amount FROM generate_series(1, 10000000) UNION ALL -- ======================== -- 3. Day 2 peak: 2025-06-02 8:00 - 16:00, 40 million entries -- ======================== SELECT '2025-06-02 08:00:00'::TIMESTAMP + (random() * 28800)::INT * INTERVAL '1 second' + (random() * 1000)::INT * INTERVAL '1 millisecond' AS trade_ts, CASE WHEN random() < 0.6 THEN 'BTC-USDT' ELSE 'ETH-USDT' END AS market_id, CASE WHEN random() < 0.6 THEN 30000 + (random() * 1000) ELSE 2000 + (random() * 100) END AS price, random() * 10 + 0.1 AS amount FROM generate_series(1, 40000000) UNION ALL -- ======================== -- 4. Day 2 off-peak: 2025-06-02 16:00 - 2025-06-03 08:00, 10 million entries -- ======================== SELECT CASE WHEN random() < 0.5 THEN -- 16:00 - 24:00 '2025-06-02 16:00:00'::TIMESTAMP + (random() * 28800)::INT * INTERVAL '1 second' ELSE -- 00:00 - 08:00 (early morning of day 3) '2025-06-03 00:00:00'::TIMESTAMP + (random() * 28800)::INT * INTERVAL '1 second' END + (random() * 1000)::INT * INTERVAL '1 millisecond' AS trade_ts, CASE WHEN random() < 0.6 THEN 'BTC-USDT' ELSE 'ETH-USDT' END AS market_id, CASE WHEN random() < 0.6 THEN 30000 + (random() * 1000) ELSE 2000 + (random() * 100) END AS price, random() * 10 + 0.1 AS amount FROM generate_series(1, 10000000) ) AS data;Create a columnstore index for the transaction records table.

CREATE INDEX idx_csi_market_trades ON market_trades USING CSI;

Step 3: Run the candlestick aggregation query

Scenario: Calculate the candlestick data for each fixed time window.

Example: Calculate the highest price, lowest price, opening price, closing price, and total transaction volume for each second.

The following examples calculate candlestick data per second, per minute, per hour, and per day.

Second-level candlestick aggregation

-- Second-level candlestick aggregation

/*+ SET (polar_csi.enable_query on) */

SELECT

time_bucket('1 second', trade_ts) AS candle_ts, -- Data within 1 second

market_id,

MIN(price) AS low, -- Lowest price within 1 second

MAX(price) AS high, -- Highest price within 1 second

FIRST(price ORDER BY trade_ts) AS open, -- Opening price within 1 second

LAST(price ORDER BY trade_ts) AS close, -- Closing price within 1 second

SUM(amount) AS vol -- Total transaction volume within 1 second

FROM market_trades

WHERE trade_ts >= '2025-06-01 00:00:00' AND trade_ts <= '2025-06-02 00:00:00'

GROUP BY candle_ts, market_id

ORDER BY candle_ts, market_id;Minute-level candlestick aggregation

-- Minute-level candlestick aggregation

/*+ SET (polar_csi.enable_query on) */

SELECT

time_bucket('1 minute', trade_ts) AS candle_ts, -- Data within 1 minute

market_id,

MIN(price) AS low, -- Lowest price within 1 minute

MAX(price) AS high, -- Highest price within 1 minute

FIRST(price ORDER BY trade_ts) AS open, -- Opening price within 1 minute

LAST(price ORDER BY trade_ts) AS close, -- Closing price within 1 minute

SUM(amount) AS vol -- Total transaction volume within 1 minute

FROM market_trades

WHERE trade_ts >= '2025-06-01 00:00:00' AND trade_ts <= '2025-06-02 00:00:00'

GROUP BY candle_ts, market_id

ORDER BY candle_ts, market_id;Hour-level candlestick aggregation

-- Hour-level candlestick aggregation

/*+ SET (polar_csi.enable_query on) */

SELECT

time_bucket('1 hour', trade_ts) AS candle_ts, -- Data within 1 hour

market_id,

MIN(price) AS low, -- Lowest price within 1 hour

MAX(price) AS high, -- Highest price within 1 hour

FIRST(price ORDER BY trade_ts) AS open, -- Opening price within 1 hour

LAST(price ORDER BY trade_ts) AS close, -- Closing price within 1 hour

SUM(amount) AS vol -- Total transaction volume within 1 hour

FROM market_trades

WHERE trade_ts >= '2025-06-01 00:00:00' AND trade_ts <= '2025-06-02 00:00:00'

GROUP BY candle_ts, market_id

ORDER BY candle_ts, market_id;Day-level candlestick aggregation

-- Day-level candlestick aggregation

/*+ SET (polar_csi.enable_query on) */

SELECT

time_bucket('1 day', trade_ts) AS candle_ts, -- Data within 1 day

market_id,

MIN(price) AS low, -- Lowest price within 1 day

MAX(price) AS high, -- Highest price within 1 day

FIRST(price ORDER BY trade_ts) AS open, -- Opening price within 1 day

LAST(price ORDER BY trade_ts) AS close, -- Closing price within 1 day

SUM(amount) AS vol -- Total transaction volume within 1 day

FROM market_trades

WHERE trade_ts >= '2025-06-01 00:00:00' AND trade_ts <= '2025-06-02 00:00:00'

GROUP BY candle_ts, market_id

ORDER BY candle_ts, market_id;SQL description

/*+ SET (polar_csi.enable_query on) */: This hint forces the query to use the columnstore index execution plan. In some scenarios, the optimizer might incorrectly determine that the row store is more optimal. You can use thisHintto ensure that the query uses the columnstore path.time_bucket(bucket_width, ts): A function provided by the Time Series Database that groups timestamps (ts) by a specified time interval (bucket_width).