You can use the Text Summarization Predict component provided by Platform for AI (PAI) to test a pre-trained Text Summarization model and evaluate the model performance. This topic describes how to configure the Text Summarization Predict component.

Prerequisites

Object Storage Service (OSS) is activated, and Machine Learning Designer is authorized to access OSS. For more information, see Activate OSS and Grant the permissions that are required to use Machine Learning Designer.

Limits

The Text Summarization Predict component can use only Deep Learning Containers (DLC) computing resources.

Configure the component in the PAI console

You can configure the parameters of the Text Summarization Predict component in Machine Learning Designer.

Input ports

| Input port (from left to right) | Type | Recommended upstream component | Required |
| --- | --- | --- | --- |
| Data for prediction | OSS | Read File Data | Yes |
| Prediction model | Component output | Text Summarization Training | No |

Configure the component

| Tab | Parameter | Description |
| --- | --- | --- |
| Fields Setting | Input Schema | The text columns in the input table. Default value: target:str:1,source:str:1. |
| | TextColumn | The name of the column in the input table that stores the source text. Default value: source. |
| | AppendColumn | The names of the text columns in the input table to append to the output table. Separate multiple column names with commas (,). Default value: source. |
| | Output Schema | The names of the columns in the output table that store the text summarization results. Default value: predictions,beams. |
| | Output data file | The path of the output table in an OSS bucket. |
| | Use User-defined Model | Specifies whether to use a custom model instead of the default model of PAI to perform prediction. Valid values: yes and no (default). |
| | Whether the Model is a Megatron One | Specifies whether the model is a Megatron model. Only pre-trained text summarization models whose names are prefixed with mg are supported. Valid values: yes and no (default). |
| | OSS Directory for Alink Model | The path of the custom model in an OSS bucket. This parameter is required only if you set the Use User-defined Model parameter to yes. |
| Parameters Setting | batchSize | The number of samples to process at a time. The value must be of the INT type. Default value: 8. If multiple servers with multiple GPUs are used, this parameter specifies the number of samples that each GPU processes at a time. |
| | sequenceLength | The maximum length of a sequence. The value must be of the INT type. Valid values: 1 to 512. Default value: 512. |
| | The model language | The language of the model. Valid values: zh (Chinese) and en (English). |
| | Whether to copy text from input while decoding | Specifies whether to copy text from the input during decoding. Valid values: false (default) and true. |
| | The Minimal Length of the Predicted Sequence | The minimum length of the output text. The value must be of the INT type. Default value: 12. The text output by the model must be longer than this value. |
| | The Maximal Length of the Predicted Sequence | The maximum length of the output text. The value must be of the INT type. Default value: 32. The text output by the model must be shorter than this value. |
| | The Minimal Non-Repeated N-gram Size | The minimum size of an n-gram phrase that must not be repeated in the output. The value must be of the INT type. Default value: 2. |
| | The Number of Beam Search Scope | The beam search scope, that is, the number of candidate sequences that beam search keeps at each decoding step. The value must be of the INT type. Default value: 5. |
| | The Number of Returned Candidate Sequences | The number of results to return. The value must be of the INT type. Default value: 5. Important: This parameter must be set to the same value as The Number of Beam Search Scope. |
| Execution Tuning | GPU Machine type | The GPU-accelerated instance type of the computing resource. Default value: gn5-c8g1.2xlarge. |
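Several rules in the table can be checked before you submit a run. The following Python sketch is a minimal, hypothetical pre-flight check, not part of PAI: the class, function, and field names (for example, `SummarizationPredictConfig` and `num_beams`) are illustrative assumptions. It encodes the documented defaults, the 1 to 512 range of sequenceLength, the zh/en language values, and the rule that The Number of Returned Candidate Sequences must equal The Number of Beam Search Scope. Three further checks are assumptions inferred from the table rather than documented rules: the minimal length must be smaller than the maximal length, batchSize must be positive, and TextColumn and AppendColumn must name columns in Input Schema, where each schema entry is assumed to start with the column name followed by a colon, as in the default value.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SummarizationPredictConfig:
    """Hypothetical mirror of the component settings; names are illustrative only."""
    language: str                      # "zh" or "en"; no default is documented
    input_schema: str = "target:str:1,source:str:1"
    text_column: str = "source"
    append_column: str = "source"      # comma-separated column names
    batch_size: int = 8
    sequence_length: int = 512
    min_length: int = 12
    max_length: int = 32
    no_repeat_ngram_size: int = 2
    num_beams: int = 5                 # The Number of Beam Search Scope
    num_return_sequences: int = 5      # The Number of Returned Candidate Sequences


def schema_columns(schema: str) -> List[str]:
    """Extract column names, assuming each entry starts with '<name>:'."""
    return [e.split(":", 1)[0].strip() for e in schema.split(",") if e.strip()]


def validate(cfg: SummarizationPredictConfig) -> List[str]:
    """Return the rules that cfg violates; an empty list means none were found."""
    problems: List[str] = []
    columns = schema_columns(cfg.input_schema)
    # Assumption: the configured columns must exist in Input Schema.
    if cfg.text_column not in columns:
        problems.append(f"TextColumn '{cfg.text_column}' is not in Input Schema")
    for name in (c.strip() for c in cfg.append_column.split(",")):
        if name and name not in columns:
            problems.append(f"AppendColumn '{name}' is not in Input Schema")
    if cfg.language not in ("zh", "en"):
        problems.append("The model language must be zh or en")
    # Assumption: a batch must contain at least one sample.
    if cfg.batch_size < 1:
        problems.append("batchSize must be a positive integer")
    if not 1 <= cfg.sequence_length <= 512:
        problems.append("sequenceLength must be between 1 and 512")
    # Inferred: output must be longer than min_length and shorter than max_length.
    if cfg.min_length >= cfg.max_length:
        problems.append("The minimal length must be smaller than the maximal length")
    if cfg.num_return_sequences != cfg.num_beams:
        problems.append("Returned candidate sequences must equal the beam search scope")
    return problems


print(validate(SummarizationPredictConfig(language="en")))
# []
print(validate(SummarizationPredictConfig(language="en", num_return_sequences=3)))
# ['Returned candidate sequences must equal the beam search scope']
```

Returning a list of messages instead of raising on the first error lets you see every misconfigured setting at once.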

Examples

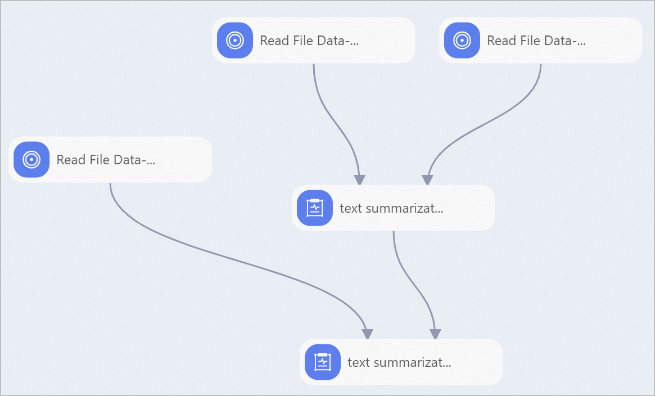

You can use the Text Summarization Predict component to build a pipeline by using one of the following methods:

Method 1: Use a model that is fine-tuned by the Text Summarization Training component.

Method 2: Use a custom model.

In this example, perform the following operations to configure the component and run the pipeline:

Build a Text Summarization pipeline. For more information, see the "Examples" section in the Use the Text Summarization Training component topic.

Prepare the dataset (predict_data.txt) for which you want to generate a summary and upload the dataset to an OSS bucket. The test dataset used in this example is a tab-delimited TXT file.

You can also upload CSV files to MaxCompute by running Tunnel commands on a MaxCompute client. For more information about how to install and configure a MaxCompute client, see MaxCompute client (odpscmd). For more information about Tunnel commands, see Tunnel commands.

Use the Read File Data - 3 component (Method 1) or the Read File Data - 1 component (Method 2) to read the test dataset. Set the OSS Data Path parameter of the Read File Data component to the OSS path where the test dataset is stored.

Connect the model file and test dataset to the Text Summarization Predict component and set the required parameters. For more information, see Configure the component in the PAI console.

If you want to use a model fine-tuned by the Text Summarization Training component, connect the output of the Text Summarization Training component to the Prediction model input port of the Text Summarization Predict component.

If you want to use a custom model, set the Use User-defined Model parameter to yes on the Fields Setting tab and set the ModelSavePath parameter to the OSS path where the model is stored.

Click the Run icon to run the pipeline. After the pipeline finishes, you can view the output in the OSS path that you specified in the Output data file parameter of the Text Summarization Predict component.
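The dataset preparation step above calls for a tab-delimited predict_data.txt. The following Python sketch shows one way to write such a file locally before you upload it to OSS. It is a minimal example under stated assumptions: the file has no header row, and each line holds the target and source columns in the order of the default Input Schema (target:str:1,source:str:1). The function names `clean` and `write_predict_data` are hypothetical. Verify the expected layout against your own Input Schema setting. Uploading the file to OSS is not shown.

```python
from pathlib import Path
from typing import Iterable, Tuple


def clean(text: str) -> str:
    """Collapse tabs, line breaks, and repeated spaces so each record stays on one line."""
    return " ".join(text.split())


def write_predict_data(rows: Iterable[Tuple[str, str]], path: Path) -> int:
    """Write (target, source) pairs as tab-delimited lines with no header row.

    Assumption: the column order follows the default Input Schema
    (target:str:1,source:str:1). Returns the number of lines written.
    """
    count = 0
    with path.open("w", encoding="utf-8", newline="") as f:
        for target, source in rows:
            f.write(f"{clean(target)}\t{clean(source)}\n")
            count += 1
    return count


if __name__ == "__main__":
    # Placeholder records; replace them with your own texts.
    samples = [
        ("A reference summary.", "The first document\tto summarize.\nIt spans two lines."),
        ("Another summary.", "The second document to summarize."),
    ]
    print(write_predict_data(samples, Path("predict_data.txt")))  # 2
```

Collapsing whitespace in `clean` is a deliberate choice: a stray tab or line break inside a text would otherwise shift the columns or split one record across two lines.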

References

For more information about how to configure the Text Summarization Training component, see Use the Text Summarization Training component.