Elastic Remote Direct Memory Access (eRDMA) is a cloud-based, elastic RDMA network from Alibaba Cloud. Specific GPU instance types, available as PAI general computing resources, support eRDMA. To use this feature, submit a DLC job with a specific image for these GPU instance types. The system automatically mounts eRDMA network interface cards in the container, accelerating the distributed training process.

Limits

This topic applies only to training jobs that are submitted using subscription-based general computing resources.

This feature requires NCCL version 2.19 or later.

The following table lists the GPU instance types on the PAI-DLC platform that support eRDMA and the number of eRDMA network interface cards available for each type.

GPU instance type | Number of eRDMA network interface cards |

ecs.ebmgn7v.32xlarge | 2 |

ecs.ebmgn8v.48xlarge | 2 |

ecs.ebmgn8is.32xlarge | 2 |

ecs.ebmgn8i.32xlarge | 4 |

ecs.gn8is.2xlarge | 1 |

ecs.gn8is.4xlarge | 1 |

ecs.gn8is-2x.8xlarge | 1 |

ecs.gn8is-4x.16xlarge | 1 |
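When debugging a job, it can help to compare the number of eRDMA devices visible in the container with the count listed in the table above. The following is a minimal sketch, not a platform-provided tool: the function name `expected_erdma_nics` is hypothetical, and the commented check assumes that eRDMA devices appear in `ibv_devices` output with `erdma` in their names, which this topic does not state explicitly.

```shell
# Hypothetical helper: print the eRDMA NIC count listed in the table above
# for a given instance type; return 1 for types that are not listed.
expected_erdma_nics() {
  case "$1" in
    ecs.ebmgn8i.32xlarge) echo 4 ;;
    ecs.ebmgn7v.32xlarge|ecs.ebmgn8v.48xlarge|ecs.ebmgn8is.32xlarge) echo 2 ;;
    ecs.gn8is.2xlarge|ecs.gn8is.4xlarge|ecs.gn8is-2x.8xlarge|ecs.gn8is-4x.16xlarge) echo 1 ;;
    *) return 1 ;;
  esac
}

# Assumption: ibv_devices (from ibverbs-utils) lists eRDMA devices with
# "erdma" in their names. Run this part only inside a job container.
# visible=$(ibv_devices | grep -c erdma)
# [ "$visible" -eq "$(expected_erdma_nics ecs.ebmgn8v.48xlarge)" ] || echo "NIC count mismatch" >&2
```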

Preset environment variables

PAI automatically enables the eRDMA feature and sets the default NCCL environment variables for instance types that support eRDMA. You can adjust these variables based on the training framework, communication framework, and model characteristics. For optimal performance, use the default variables provided by the platform.

Public environment variables

Environment variable | Value |

PYTHONUNBUFFERED | 1 |

TZ | Set based on the region where the current job runs. The value is usually "Asia/Shanghai". |

eRDMA high-performance network variables

A hyphen (-) indicates that the environment variable is not applicable to the corresponding environment.

Environment variable | Value |

NCCL_DEBUG | INFO |

NCCL_SOCKET_IFNAME | eth0 |

NCCL_IB_TC | - |

NCCL_IB_SL | - |

NCCL_IB_GID_INDEX | 1 |

NCCL_IB_HCA | erdma |

NCCL_IB_TIMEOUT | - |

NCCL_IB_QPS_PER_CONNECTION | 8 |

NCCL_MIN_NCHANNELS | 16 |

NCCL_NET_PLUGIN | none |
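The preset values can be inspected, and individual variables overridden, from the startup command of a job. The following is a minimal sketch that assumes a POSIX shell: `show_nccl_env` is a hypothetical helper name, and lowering `NCCL_DEBUG` to `WARN` is only an example of an adjustment, not a platform recommendation.

```shell
# Hypothetical helper: list the NCCL_* variables currently set, sorted by name.
show_nccl_env() {
  env | grep '^NCCL_' | sort
}

# Example adjustment: reduce NCCL log verbosity for this job only.
# WARN is a standard NCCL_DEBUG level; the other presets are left untouched.
export NCCL_DEBUG=WARN

show_nccl_env
```

Overriding a single variable this way leaves the remaining platform defaults in effect, which keeps the change easy to revert.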

Configure a custom image

When you submit training jobs that use general computing resources that support eRDMA, you can build and use a custom image. The custom image must meet the following requirements:

Environment requirements

CUDA 12.1 or later

NCCL 2.19 or later

Python 3
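The version requirements above can be checked inside a candidate image before you submit a job. The following is a minimal sketch: `version_ge` is a hypothetical helper that relies on `sort -V` from GNU coreutils, and the commented usage assumes that PyTorch is installed in the image, which this topic does not require.

```shell
# Hypothetical helper: succeed when version $1 is greater than or equal to $2.
# Relies on `sort -V` (version sort), available in GNU coreutils.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Assumption: a recent PyTorch release is installed. torch.cuda.nccl.version()
# then returns a tuple such as (major, minor, patch), joined here with dots.
# nccl=$(python3 -c 'import torch; print(".".join(map(str, torch.cuda.nccl.version())))')
# version_ge "$nccl" 2.19 || echo "NCCL $nccl is older than 2.19" >&2
```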

Install the eRDMA library

The installation process for the eRDMA library varies based on the Linux distribution of the image. The following example demonstrates how to install the eRDMA library on Ubuntu 22.04:

# Add the PGP signature.

wget -qO - http://mirrors.cloud.aliyuncs.com/erdma/GPGKEY | sudo gpg --dearmour -o /etc/apt/trusted.gpg.d/erdma.gpg

# Add the apt source.

sudo mkdir -p /etc/apt/sources.list.d

echo "deb [ ] http://mirrors.cloud.aliyuncs.com/erdma/apt/ubuntu jammy/erdma main" | sudo tee /etc/apt/sources.list.d/erdma.list

# Update and install the eRDMA user-mode driver package.

sudo apt update

sudo apt install -y libibverbs1 ibverbs-providers ibverbs-utils librdmacm1

For more information about the installation process on other distributions, see Use eRDMA in Docker containers.
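After installation, a quick check that the user-mode tools are on the PATH can catch a failed install early. The following is a minimal sketch: `missing_tools` is a hypothetical helper name, and the check only confirms that the commands exist, not that an eRDMA device is attached.

```shell
# Hypothetical helper: print each named command that is not found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# ibv_devices and ibv_devinfo are shipped in the ibverbs-utils package.
missing=$(missing_tools ibv_devices ibv_devinfo)
if [ -n "$missing" ]; then
  echo "Not found on PATH: $missing" >&2
fi
```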

Example Dockerfile

# Replace ${user_docker_image_url} with your existing Docker image.

FROM ${user_docker_image_url}

# If the RDMA library is already installed in the image, uninstall it first.

RUN rm -f /etc/apt/sources.list.d/mellanox_mlnx_ofed.list && \
    apt remove -y libibverbs1 ibverbs-providers ibverbs-utils librdmacm1

# Add the eRDMA signing key and apt source, then install the eRDMA user-mode driver packages.

RUN wget -qO - http://mirrors.aliyun.com/erdma/GPGKEY | gpg --dearmour -o /etc/apt/trusted.gpg.d/erdma.gpg && \
    echo "deb [ ] http://mirrors.aliyun.com/erdma/apt/ubuntu jammy/erdma main" | tee /etc/apt/sources.list.d/erdma.list && \
    apt update && apt install -y libibverbs1 ibverbs-providers ibverbs-utils librdmacm1

Use MPIJob to run an NCCL Test

To submit a training job that uses the MPIJob framework, configure the following key parameters. For more information about other parameters, see Submit an MPIJob training job.

Parameter | | Description |

Environment Information | Node Image | On the Image Address tab, enter the prepared custom image. You can use the NCCL test image provided by PAI-DLC. This image has the eRDMA dependencies pre-installed: Replace |

Environment Information | Startup Command | |

Resource Information | Resource Source | Select Resource Quota. |

Resource Information | Resource Quota | Select a created general computing resource quota, such as one with the ecs.ebmgn8v.48xlarge instance type. For more information about how to create a resource quota, see General computing resource quotas. |

Resource Information | Framework | Select MPIJob. |

Resource Information | Job Resource | Configure the following parameters: |

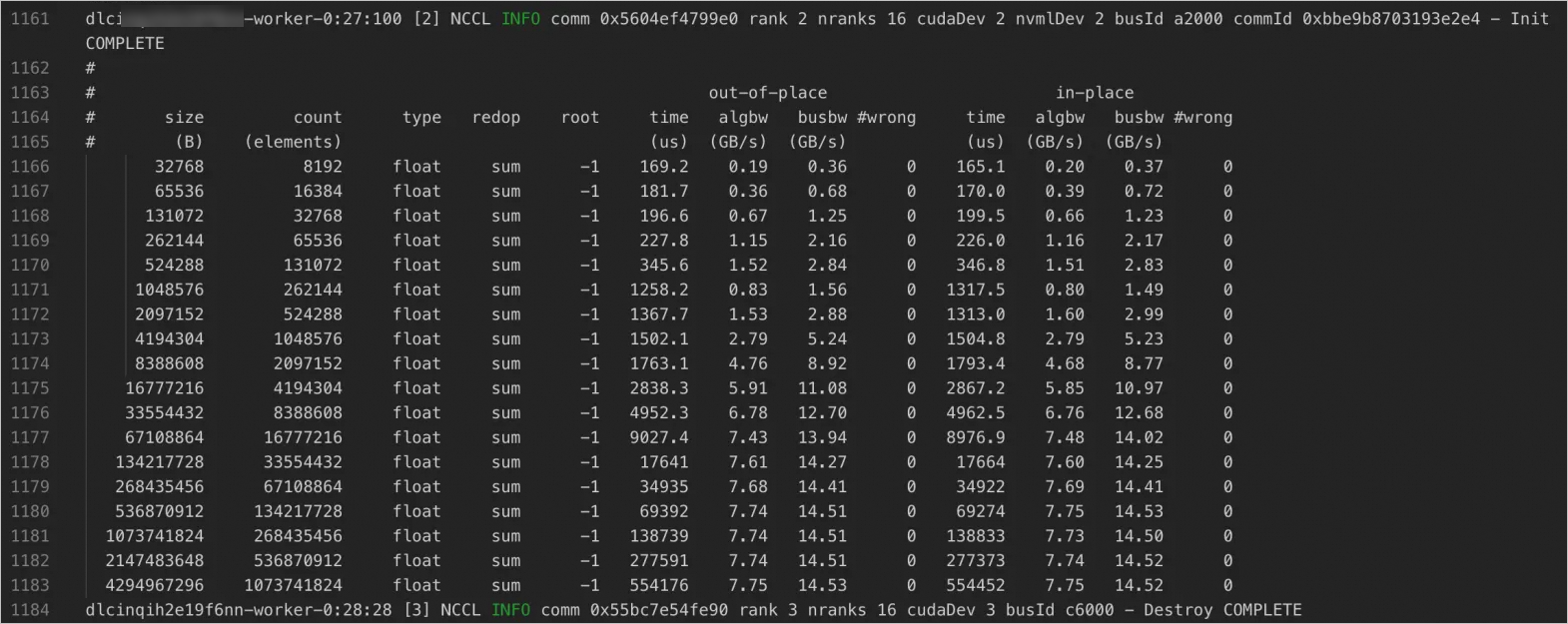

The following figure shows sample results of an NCCL Test for eRDMA network bandwidth.
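NCCL test binaries from the open source nccl-tests project end their output with a summary line that begins with `# Avg bus bandwidth`. The following is a minimal sketch for extracting that value from a saved log, assuming that output format: `avg_busbw` is a hypothetical helper name, and `nccl_test.log` in the commented usage is a hypothetical file name.

```shell
# Hypothetical helper: read NCCL test output on stdin and print the value
# from the "# Avg bus bandwidth" summary line (nccl-tests reports it in GB/s).
avg_busbw() {
  awk -F: '/Avg bus bandwidth/ { split($2, f, " "); print f[1] }'
}

# Usage, assuming the job output was saved to a hypothetical nccl_test.log:
# avg_busbw < nccl_test.log
```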