Manually managing replicas to handle traffic peaks and valleys is inefficient and can lead to slow response times, service overloads, or idle resources. Horizontal auto scaling in Elastic Algorithm Service (EAS) automatically adjusts the number of service replicas based on real-time load. This ensures service stability while maximizing resource utilization, striking an optimal balance between cost and performance.

How it works

Horizontal auto scaling dynamically adjusts the number of replicas based on configured metric thresholds.

Calculate the target number of replicas: The system multiplies the current number of replicas (currentReplicas) by the ratio of the current metric value (currentMetricValue, for example the average QPS per replica) to the desired metric value (desiredMetricValue), and rounds the result up to obtain the target number of replicas (desiredReplicas).

Formula:

$$\mathrm{desiredReplicas} = \left\lceil \mathrm{currentReplicas} \times \frac{\mathrm{currentMetricValue}}{\mathrm{desiredMetricValue}} \right\rceil$$

Example: Assume the service currently has 2 replicas and the QPS Threshold of Individual Instance is set to 10. When the average QPS per replica rises to 23, the target number of replicas becomes $\lceil 2 \times (23/10) \rceil = \lceil 4.6 \rceil = 5$. Later, if the average QPS per replica drops to 2, the target number of replicas becomes $\lceil 5 \times (2/10) \rceil = 1$.

If you configure multiple metrics, the system calculates the target number of replicas for each metric and uses the largest of these values as the final target number of replicas.
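The calculation can be sketched in Python as a minimal model of the formula, not the EAS implementation. The function name desired_replicas and the metric labels "qps" and "cpu" are hypothetical, and the sketch deliberately ignores the tolerance range, the delays, and the Minimum/Maximum Replicas limits.

```python
import math
from fractions import Fraction


def desired_replicas(current_replicas: int, metrics: dict[str, tuple[float, float]]) -> int:
    """Return the target replica count for one or more metrics.

    metrics maps a (hypothetical) metric label to a pair
    (current_metric_value, desired_metric_value), where the current value is
    the per-replica average, for example the average QPS per replica.
    """
    targets = []
    for current_value, desired_value in metrics.values():
        # Exact decimal arithmetic so float rounding cannot push ceil() up by one.
        ratio = Fraction(str(current_value)) / Fraction(str(desired_value))
        targets.append(math.ceil(current_replicas * ratio))
    # With several metrics, the largest per-metric target is used.
    return max(targets)


# The two QPS examples from the text.
print(desired_replicas(2, {"qps": (23, 10)}))  # 5
print(desired_replicas(5, {"qps": (2, 10)}))   # 1
# Two metrics: QPS alone asks for 4 replicas, the second metric asks for 5, so 5 wins.
print(desired_replicas(4, {"qps": (10, 10), "cpu": (80, 70)}))  # 5
```

Using Fraction instead of plain floats matters only at exact boundaries: for example, 10 × (1.1 / 1.0) evaluates to slightly more than 11 in floating point, which would round up to 12.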

Trigger logic: When the calculated target number of replicas is greater than the current number, the system triggers a scale-out. When the target is less than the current number, it triggers a scale-in.

Important: To prevent frequent scale-out and scale-in operations caused by metric fluctuations, the system applies a 10% tolerance range to the threshold. For example, if the queries per second (QPS) threshold is set to 10, a scale-out operation is triggered only when the QPS is consistently above 11 (10 × 1.1). This means:

If the QPS briefly fluctuates between 10 and 11, the system does not scale out.

A scale-out operation is triggered only when the QPS stays above 11.

This mechanism reduces unnecessary resource changes and improves system stability and cost-effectiveness.
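The scale-out side of the tolerance check can be sketched as follows. This is a simplified model that assumes the boundary is exclusive (matching "above 11" for a threshold of 10) and ignores the "consistently" part, which the delay settings govern. The function name triggers_scale_out is hypothetical.

```python
from fractions import Fraction

TOLERANCE = Fraction(1, 10)  # the 10% tolerance range described above


def triggers_scale_out(avg_metric: float, threshold: float) -> bool:
    """Return True if the per-replica average exceeds the threshold by more than 10%."""
    limit = Fraction(str(threshold)) * (1 + TOLERANCE)
    # Strictly above the limit: with a threshold of 10, a value of exactly 11 does not trigger.
    return Fraction(str(avg_metric)) > limit


for qps in (10.5, 11, 11.5, 23):
    print(qps, triggers_scale_out(qps, 10))
```

With a threshold of 10, this prints False for 10.5 and 11, and True for 11.5 and 23.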

Delayed execution: Scaling operations support a configurable delay that prevents frequent adjustments caused by brief traffic fluctuations. The delays are set with the Scale-out Starts in and Scale-in Starts in parameters.

User guide

Configure horizontal auto scaling policies using the PAI console or the eascmd client.

Enable or update horizontal auto scaling

Use the console


Log on to the PAI console. Select a region at the top of the page. Then, select the desired workspace and click Elastic Algorithm Service (EAS).

On the service list, click the name of the target service to go to the service details page.

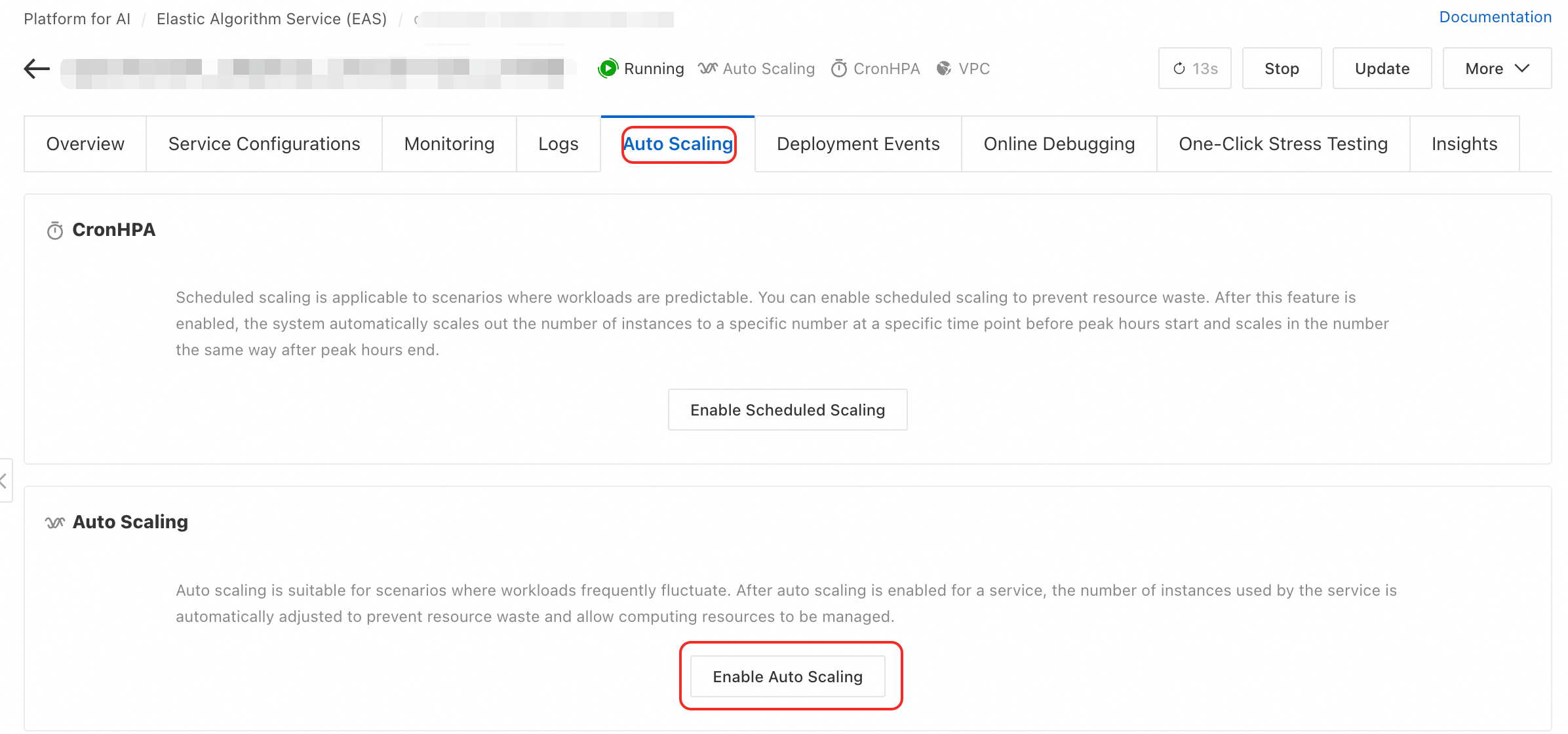

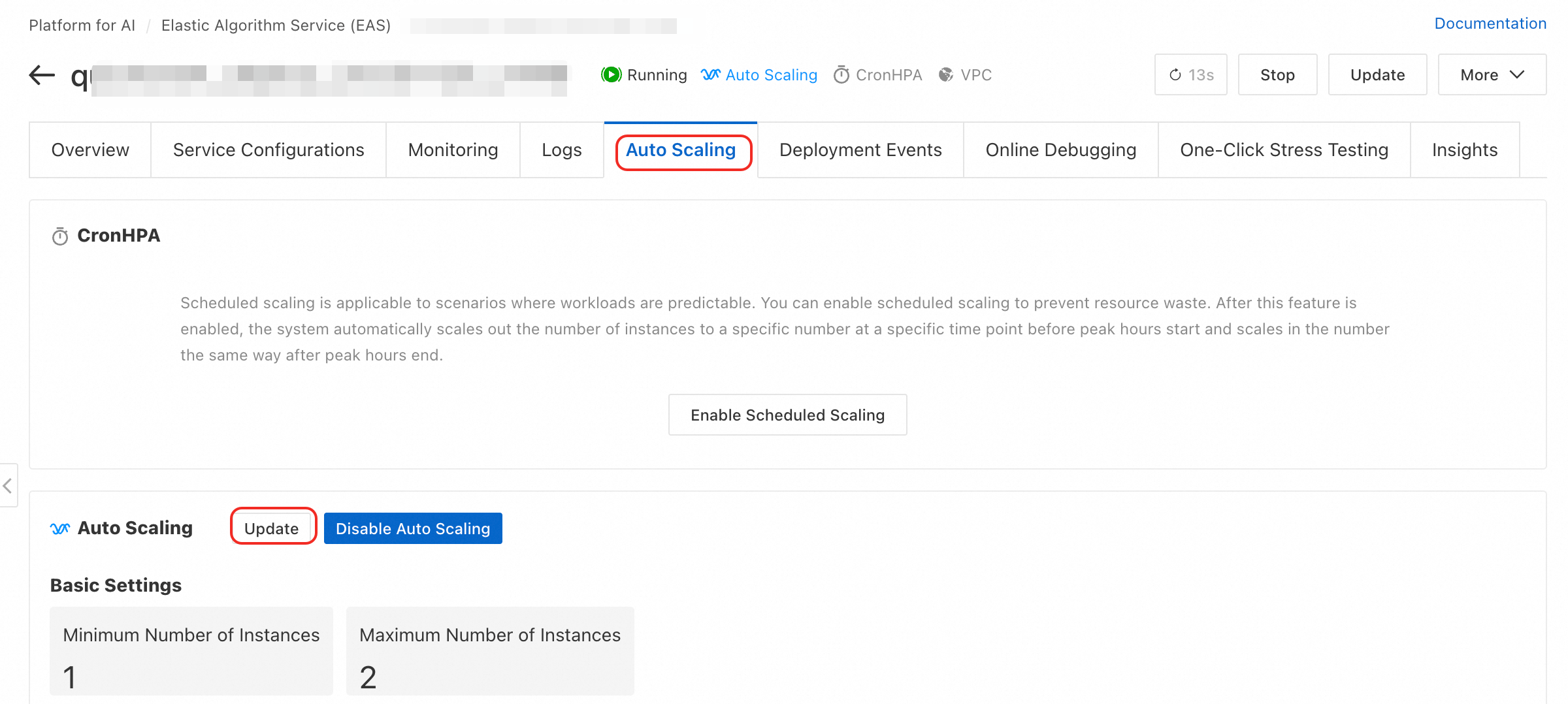

On the Auto Scaling tab, in the Auto Scaling section, click Enable Auto Scaling or Update.

In the Auto Scaling Settings dialog box, configure the following parameters.

Parameter description

Basic configuration

| Parameter | Description | Recommendations and risk warnings |
| --- | --- | --- |
| Minimum Replicas | The minimum number of replicas that the service can scale in to. The minimum value is 0. | Production environment recommendation: For services that require continuous availability, set this value to 1 or higher. Important: Setting this value to 0 removes all service replicas when there is no traffic. New requests then face a full cold start delay (from tens of seconds to several minutes), during which the service is unavailable. In addition, services that use a dedicated gateway do not support a value of 0. |
| Maximum Replicas | The maximum number of replicas that the service can scale out to. The maximum value is 1000. | Set this value based on your estimated peak traffic and your account's resource quota to prevent unexpected traffic spikes from causing cost overruns. |
| General Scaling Metrics | Built-in performance metrics used to trigger scaling. | QPS Threshold of Individual Instance: Set this based on stress test results, typically at 70% to 80% of a single replica's optimal performance. Important: To set the single-replica QPS threshold to a decimal value, use the client (eascmd) and set the qps1k field. CPU Utilization Threshold: Setting this too low can waste resources, and setting it too high can increase request latency. Set this value based on response time (RT) metrics. GPU Utilization Threshold: Set this value based on RT metrics. Asynchronous Queue Length: Applies only to asynchronous services. Set this based on the average task processing time and the acceptable latency. For more information, see Configure horizontal auto scaling for asynchronous inference services. |
| Custom Scaling Metric | You can report custom metrics and use them for auto scaling. For more information, see Custom monitoring and scaling metrics. | Suitable for complex scenarios where built-in metrics do not meet business requirements. |
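The 70% to 80% guideline and the advice to size Maximum Replicas from peak traffic can be turned into quick arithmetic. A minimal Python sketch, assuming a hypothetical stress-test result of 50 QPS per replica and a hypothetical peak of 1000 QPS in total; the helper names are also hypothetical, and the sketch ignores the 10% tolerance and your account quota.

```python
import math


def qps_threshold_range(stress_test_qps: float) -> tuple[float, float]:
    """Return the 70% to 80% band of one replica's optimal QPS from a stress test."""
    return (stress_test_qps * 70 / 100, stress_test_qps * 80 / 100)


def replicas_for_peak(peak_total_qps: float, qps_threshold: float) -> int:
    """Replicas needed so that the per-replica average QPS stays at or below the threshold.

    currentReplicas x (average QPS per replica) equals the total QPS, so the
    scaling formula reduces to ceil(total QPS / threshold).
    """
    return math.ceil(peak_total_qps / qps_threshold)


low, high = qps_threshold_range(50)   # hypothetical: one replica peaks at 50 QPS
print(low, high)                      # 35.0 40.0
print(replicas_for_peak(1000, high))  # 25
print(replicas_for_peak(1000, low))   # 29
```

A lower threshold leaves more headroom per replica but raises the number of replicas, and therefore the Maximum Replicas value, that peak traffic requires.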

Advanced configuration

| Parameter | Description | Recommendations and risk warnings |
| --- | --- | --- |
| Scale-out Starts in | The observation window for scale-out decisions, in seconds. After a scale-out is triggered, the system observes the metric during this period. If the metric value falls back below the threshold, the scale-out is canceled. | The default is 0 seconds, which means scale-out occurs immediately. Increase this value (for example, to 60 seconds) to prevent unnecessary scaling caused by transient traffic spikes. |
| Scale-in Starts in | The observation window for scale-in decisions, in seconds, and a key parameter for preventing service jitter. A scale-in occurs only after the metric remains below the threshold for this entire duration. | The default is 300 seconds. This is the core safeguard against frequent scale-in events caused by traffic fluctuations. Do not set this value too low, because doing so can affect service stability. |
| Scale-in to 0 Instance Starts in | When Minimum Replicas is 0, this parameter defines the wait time before the replica count is reduced to 0. | Delays the complete shutdown of the service, providing a buffer in case traffic recovers. |
| Scale-from-Zero Replica Count | The number of replicas to add when the service scales out from 0 replicas. | Set this to a value that can handle the initial traffic burst, to reduce service unavailability during a cold start. |
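Both observation windows follow the same pattern: the scaling condition must hold for the whole window, and any sample where it no longer holds cancels the pending action. A simplified Python model of that behavior, assuming the metric is sampled at discrete timestamps; the sampling interval and internals of EAS are not described here, and the function name first_action_time is hypothetical.

```python
from typing import Iterable, Optional


def first_action_time(samples: Iterable[tuple[float, bool]], window_seconds: float) -> Optional[float]:
    """Return the timestamp at which a scaling action would fire, or None.

    samples: (timestamp_seconds, condition_met) pairs in time order, where
    condition_met means "above the scale-out threshold" or "below the scale-in
    threshold", depending on which window is being modeled.
    """
    since = None  # when the condition most recently became true
    for t, met in samples:
        if not met:
            since = None  # metric fell back: cancel the pending action
            continue
        if since is None:
            since = t
        # Fire once the condition has held continuously for the whole window.
        if t - since >= window_seconds:
            return t
    return None


# Scale-out Starts in = 0: the action fires at the first sample that meets the condition.
print(first_action_time([(0, False), (10, True)], 0))  # 10
# Scale-in Starts in = 300 with samples every 60 s and one recovery at t=300:
# the window restarts at t=360, so scale-in fires at t=660.
print(first_action_time([(t, t != 300) for t in range(0, 1200, 60)], 300))  # 660
```

The second call shows why a longer scale-in window suppresses jitter: a single sample above the threshold resets the clock.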

Use the client

Before you run the commands, ensure you have downloaded and authenticated the client. Both enabling and updating use the autoscale command. Set the policy by using the -D parameter or a JSON configuration file.

Parameter format:

```shell
# Format: eascmd autoscale [region]/[service_name] -D[attr_name]=[attr_value]

# Example: Set the minimum number of replicas to 2, the maximum to 5, and the QPS threshold to 10.
eascmd autoscale cn-shanghai/test_autoscaler -Dmin=2 -Dmax=5 -Dstrategies.qps=10

# Example: Set the scale-in delay to 100 seconds.
eascmd autoscale cn-shanghai/test_autoscaler -Dbehavior.scaleDown.stabilizationWindowSeconds=100
```

Configuration file format:

```shell
# Step 1: Create a configuration file (for example, scaler.json).
# Step 2: Run the command:
#   eascmd autoscale [region]/[service_name] -s [desc_json]

# Example
eascmd autoscale cn-shanghai/test_autoscaler -s scaler.json
```

Configuration example

The following scaler.json example includes common configuration options:

Parameter description

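| Parameter | Description |
| --- | --- |
| min | Minimum number of replicas. |
| max | Maximum number of replicas. |
| strategies | Scaling metrics and thresholds. |
|  | Corresponds to the Scale-out Starts in parameter in the console. |
| behavior.scaleDown.stabilizationWindowSeconds | Corresponds to the Scale-in Starts in parameter in the console. |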
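If you prefer a configuration file, the -D examples can be converted into a JSON body. A minimal Python sketch, assuming that each dotted attribute name accepted by -D maps one-to-one onto nested keys in scaler.json; this mapping is an assumption, so confirm it against the eascmd reference before relying on it. The helper name dotted_to_nested is hypothetical.

```python
import json


def dotted_to_nested(attrs: dict[str, object]) -> dict:
    """Expand dotted keys such as "strategies.qps" into nested dictionaries (assumed mapping)."""
    config: dict = {}
    for dotted_key, value in attrs.items():
        node = config
        *parents, leaf = dotted_key.split(".")
        for part in parents:
            node = node.setdefault(part, {})  # create intermediate objects as needed
        node[leaf] = value
    return config


# The attributes from the -D examples.
attrs = {
    "min": 2,
    "max": 5,
    "strategies.qps": 10,
    "behavior.scaleDown.stabilizationWindowSeconds": 100,
}
print(json.dumps(dotted_to_nested(attrs), indent=2))
```

Redirect the output to scaler.json and pass the file with -s, as in the configuration file example.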

Disable horizontal auto scaling

Use the client

Command format:

```shell
eascmd autoscale rm [region]/[service_name]
```

Example:

```shell
eascmd autoscale rm cn-shanghai/test_autoscaler
```

Production best practices

Scenario-specific configuration guide

CPU-intensive online inference services: Configure both the CPU Utilization Threshold and the QPS Threshold of Individual Instance. CPU utilization reflects resource consumption and QPS reflects business load, so combining the two metrics enables more precise scaling.

GPU-intensive online inference services: Focus primarily on the GPU Utilization Threshold. When GPU computing units are saturated, scale out promptly to allow the service to handle more concurrent tasks.

Asynchronous task processing services: Use the Asynchronous Queue Length as the core metric. When the number of backlogged tasks in the queue exceeds the threshold, scaling out increases processing capacity and reduces task waiting times.

Stability best practices

Avoid scaling in to zero: For synchronous services in a production environment, always set Minimum Replicas to 1 or higher to ensure continuous availability and low latency.

Set a reasonable delay: Use Scale-in Starts in (the scale-in delay) to prevent service jitter caused by normal traffic fluctuations. The default value of 300 seconds is suitable for most scenarios.

FAQ

Why does my service not scale out even when the threshold is met?

Possible reasons include:

Insufficient resource quota: The available vCPU or GPU quota in your account for the current region is exhausted.

Scale-out delay is active: If you configured Scale-out Starts in, the system waits until this period ends to confirm that the traffic increase is sustained.

Replica health check failed: The newly scaled-out replicas failed their health checks, causing the operation to fail.

Maximum number of replicas reached: The current number of replicas has reached the configured Maximum Replicas limit.
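The last cause can be checked with simple arithmetic. A minimal Python sketch, assuming the target from the scaling formula is bounded by Minimum Replicas and Maximum Replicas before it is applied; the helper names are hypothetical.

```python
def effective_target(desired: int, min_replicas: int, max_replicas: int) -> int:
    """Clamp the formula's target to the configured Minimum/Maximum Replicas."""
    return max(min_replicas, min(desired, max_replicas))


def scale_out_blocked_by_max(current: int, desired: int, min_replicas: int, max_replicas: int) -> bool:
    """True when the formula asks for more replicas but the clamped target adds none."""
    return desired > current and effective_target(desired, min_replicas, max_replicas) <= current


# The formula asks for 12 replicas, but Maximum Replicas is 10.
print(effective_target(12, 1, 10))              # 10
print(scale_out_blocked_by_max(10, 12, 1, 10))  # True: already at the maximum
print(scale_out_blocked_by_max(6, 12, 1, 10))   # False: can still grow to 10
```

If this reports True for your service, raise Maximum Replicas (within your resource quota) rather than lowering the threshold.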

Why does my service scale in and out frequently (jitter)?

This is usually caused by an improperly configured scaling policy:

Threshold is too sensitive: The threshold is set too close to the normal load level, causing minor fluctuations to trigger scaling events.

Scale-in delay is too short: A short delay makes the system overreact to brief drops in traffic, leading to unnecessary scale-ins. When traffic recovers, another scale-out is immediately triggered. To fix this, increase the value of Scale-in Starts in.

References

For more information about automatically scaling the number of replicas at scheduled times, see Scheduled auto scaling.

For more information about flexibly allocating resources to meet changing demands, see Elastic resource pools.

For more information about monitoring the effects of auto scaling using custom monitoring metrics, see Custom monitoring and scaling metrics.