This topic describes the vector models that are supported by OpenSearch Vector Search Edition.

Vector-based retrieval

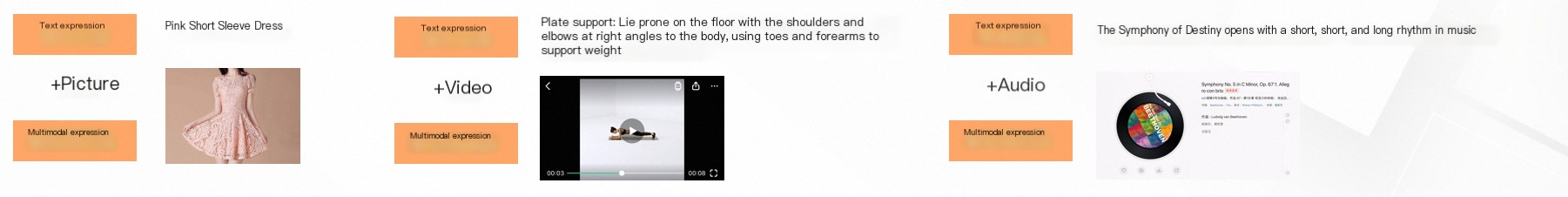

In the information age, information may consist of multiple modalities, such as text, image, video, and audio. Multimodal information can present more information than text, such as color, shape, motion state, sound, and spatial relationship.

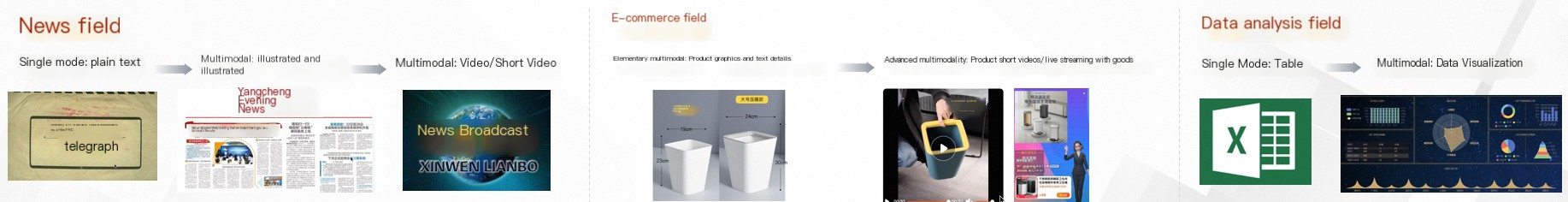

The modalities of information in various fields have greatly changed.

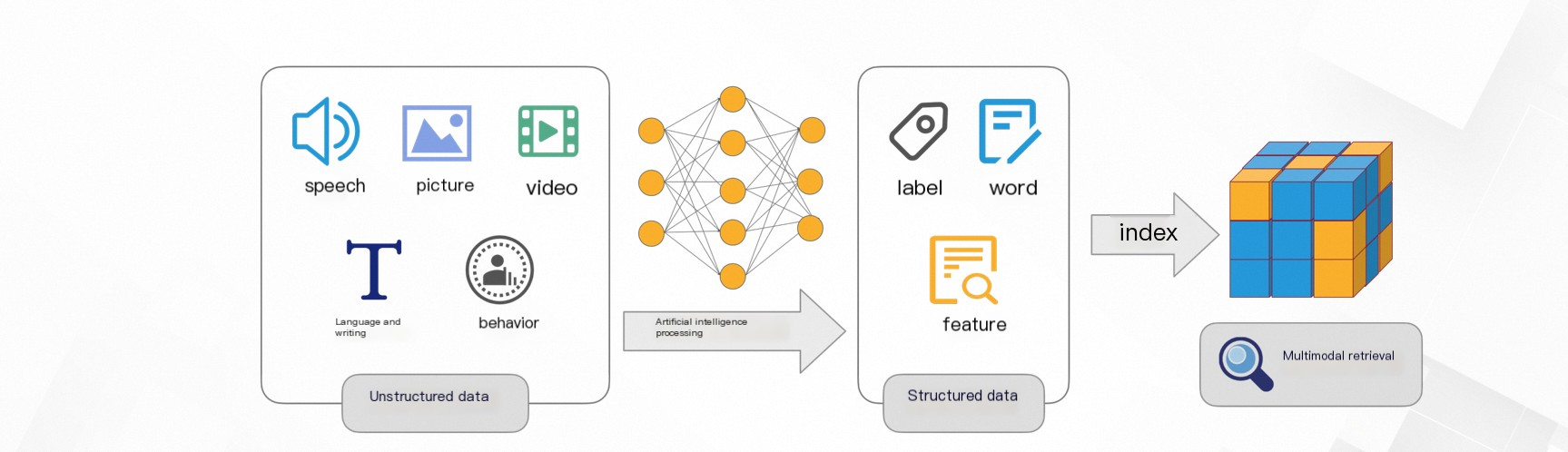

Multimodal information is classified into two categories: structured data and unstructured data.

Unstructured data is difficult for computers to understand. The traditional query method by analyzing text terms cannot meet the search requirements of various fields. To resolve the issue, OpenSearch Vector Search Edition provides vector-based retrieval.

This topic describes vectors and how to perform vector-based retrieval.

Unstructured data generated in the physical world can be transformed into structured multidimensional vectors that identify relationships between entities.

Then, the distance between the vectors is calculated. In most cases, the closer the distance, the higher the vector similarity. The top N results with the highest vector similarity are retrieved. This implements vector-based retrieval.

Vector retrieval algorithms

Linear algorithm

The linear algorithm linearly processes all vector data.

Scenario: You can use this algorithm if you require a recall rate of 100%.

Disadvantage: This algorithm delivers low efficiency and requires high CPU utilization and memory usage when it processes large volumes of data.

Clustering algorithms

Quantized clustering

Overview

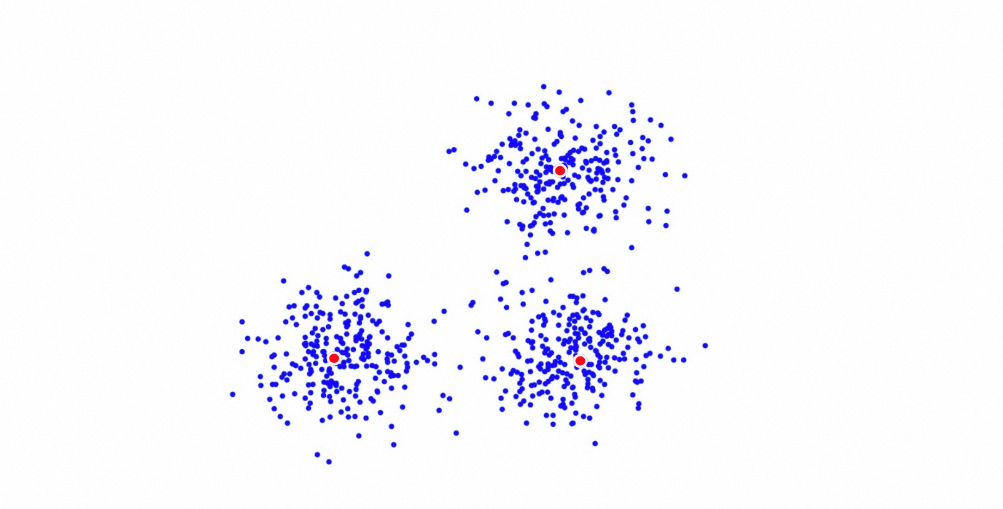

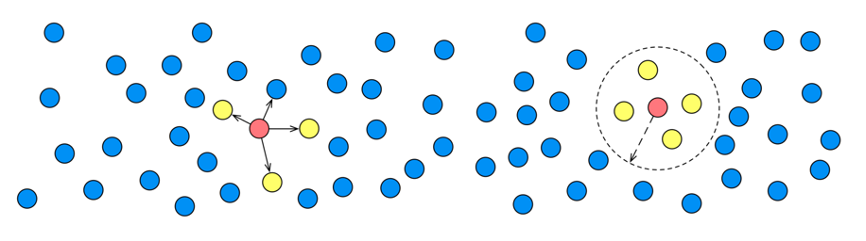

Quantized clustering is a vector retrieval algorithm developed by Alibaba Cloud based on K-means clustering. This algorithm seeks n centroids of the vector documents, and clusters each document to the centroid closest to the document. This way, n posting lists are built. The n centroids and the corresponding posting lists are the main content of quantized clustering indexing. During the retrieval, OpenSearch calculates the distance between several centroids and the request vector, and selects multiple centroids closest to the request vector. Then, OpenSearch calculates the vector distance for the documents within the posting lists that correspond to the selected centroids and returns the top K documents closest to the request vector.

Quantized clustering supports the FP16 and INT8 quantization feature, which can reduce the index size and improve the retrieval performance. However, this feature may compromise the retrieved results. You can enable or disable the FP16 and INT8 quantization feature based on your business requirements.

The quantized clustering algorithm is suitable for real-time data scenarios or scenarios in which you use GPU resources and require low latency.

Parameter tuning:

For more information, see Quantized clustering configurations.

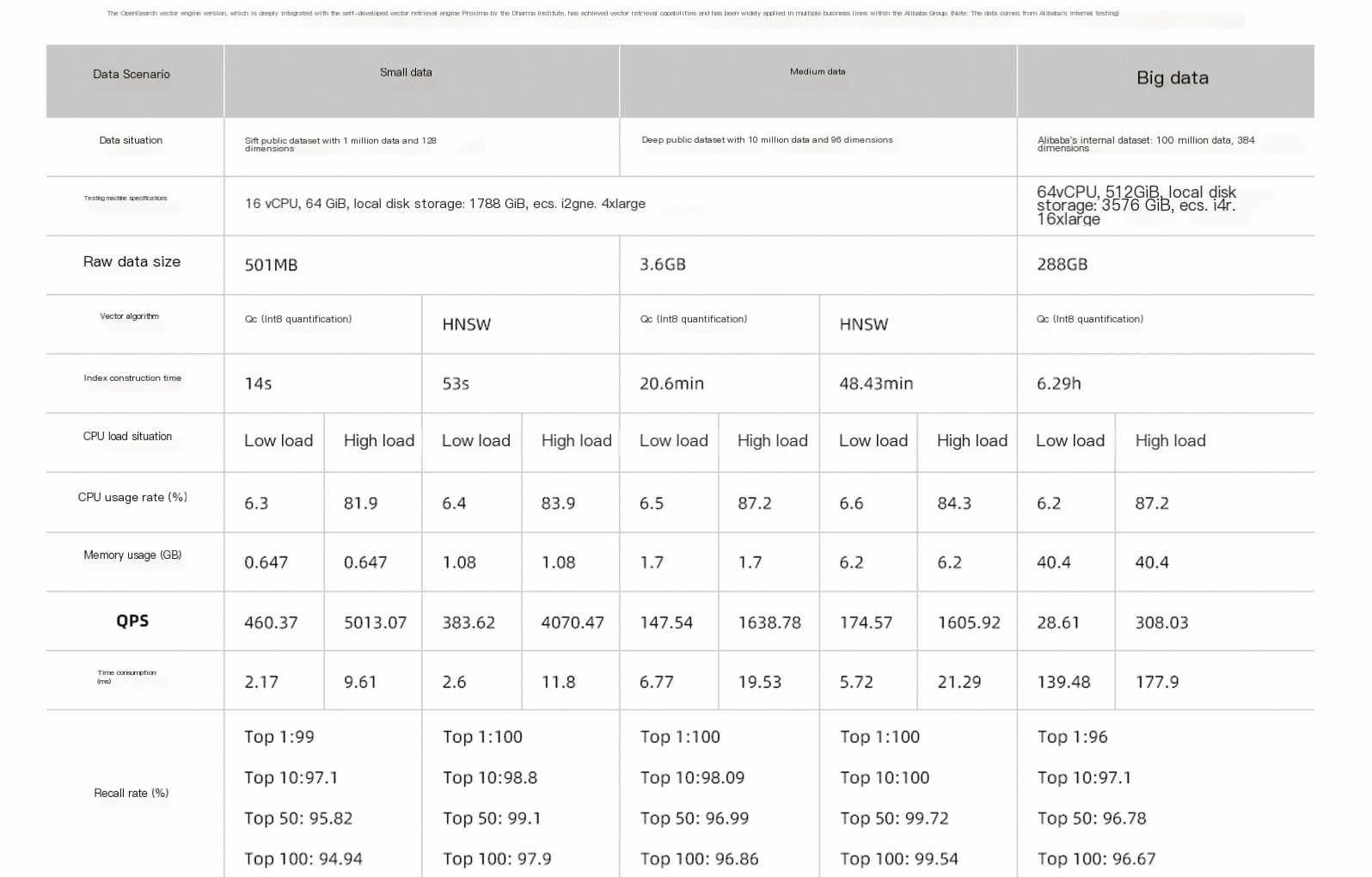

The following figure shows the performance of different vector algorithms to process different volumes of data.

HNSW

Overview

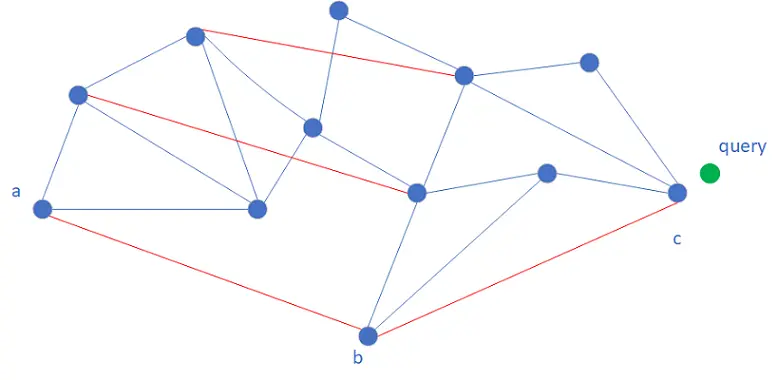

Hierarchical navigable small world (HNSW) is a vector retrieval algorithm based on proximity graphs. This algorithm requires you to create a proximity graph and build edges between vectors that are close to each other. The vectors and the edges are the main content of HNSW indexing. During the retrieval, OpenSearch starts the traversal from the entry vector, calculates the distance between the request vector and each adjacent vector of the entry vector, and selects the nearest adjacent vector as the next vector for traversal. OpenSearch traverses the vectors based on the preceding rule until the convergence appears. Convergence refers to that OpenSearch finds an adjacent vector of the current vector closer to the request vector than all other retrieved adjacent vectors.

To accelerate convergence, HNSW builds a multi-layer proximity graph structure based on the logic of skip list query. The retrieval proceeds from top to bottom. The retrieval logic of each layer is similar except for Layer 0. If OpenSearch traverses to a vector in Layer K when the convergence appears, the vector is considered the entry vector of Layer K-1, and OpenSearch continues to traverse Layer K-1. Compared to Layer K-1, Layer K contains sparser nodes between which the distance is longer. In this case, OpenSearch traverses vectors in Layer K with a larger step size and a higher iteration speed than in Layer K-1.

Parameter tuning:

For more information, see HNSW configuration.

QGraph

Overview

Quantized Graph (QGraph) is an improved HNSW-based algorithm developed by OpenSearch. QGraph automatically quantifies the raw data of users during index construction and then builds a graph index. Compared with the HNSW algorithm, the QGraph algorithm can effectively reduce the index size and save memory overhead, and can reduce the index to up to 1/8 of the original one. At the same time, with the optimization of CPU instructions for integer computing, the performance of QGraph is improved by several times compared with HNSW. After quantization, the discrimination of vectors is reduced, and the recall rate of QGraph is lower than that of HNSW. In real scenarios, more recalls can be used to reduce this impact.

QGraph supports quantization of data at int4, int8, or int16 level. Different quantization levels have different effects on memory consumption of vector indexes, query performance, and recall rate. Generally, as the integer bit-width decreases, the memory occupied by the vector index becomes smaller, performance improves, and recall rate decreases. int16 quantization, due to issues related to the underlying CPU instruction set, has performance and recall rates nearly identical to those of unquantized data.

Parameter tuning:

QGraph (Quantized Graph) configuration

CAGRA

Overview

CAGRA (CUDA ANN GRAph-based) is a vector retrieval algorithm based on proximity graphs. CAGRA is used to optimize NVIDIA GPUs and is suitable for scenarios with high concurrency and batch requests.

Unlike HNSW, CAGRA builds a single-layer proximity graph for parallel computing. During the retrieval, the algorithm maintains an ordered set of candidate vectors along with iterations. In each iteration, the algorithm selects the most appropriate top K vectors from the candidate set as the starting points for retrieval and attempts to update the candidate set with the adjacent vectors. This process iterates until all top K vectors in the candidate set have been used as retrieval starting points. In this case, the algorithm is considered to be converged.

The CAGRA algorithm is implemented based on two policies: single-CTA and multi-CTA. Single-CTA uses a single thread block to implement the retrieval of a single vector, which is more efficient when the batch size is large. Multi-CTA uses multiple thread blocks to implement the retrieval of a single vector, which can utilize the parallel computing capability of GPUs and achieve a higher recall rate when the batch size is small. However, the throughput of multi-CTA is lower than the throughput of single-CTA when the batch size is large. The optimal policy is automatically used based on the workload during actual calls.

Parameter tuning:

Vector distance types

The process of vector-based retrieval is to calculate the similarity between vectors and return the top K vectors based on the similarity. The similarity between vectors is obtained by calculating the distance between vectors. In most cases, the smaller the score, the closer the vector distance. The larger the score, the further the vector distance.

In different vector spaces, different distance metrics are defined to calculate the distance between vectors. OpenSearch supports the following distance metrics: squared Euclidean distance and inner product.

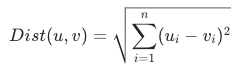

Squared Euclidean distance

Squared Euclidean distance refers to the distance between two vectors on the plane. As one of the most common distance metrics, the squared Euclidean distance can be obtained by calculating the square root of the sum of the squares of the coordinate differences between two vectors. The smaller the squared Euclidean distance, the higher the vector similarity. The squared Euclidean distance is calculated based on the following formula:

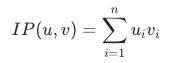

Inner product

The inner product is the dot product or scalar product between two vectors. The larger the inner product, the higher the vector similarity. The inner product can be calculated by multiplying the corresponding elements of the two vectors and summing the products. This distance metric is commonly used in search recommendation scenarios. Generally, whether to calculate the inner product depends on whether the algorithm has an inner product model. The inner product is calculated based on the following formula:

Comparison among vector retrieval algorithms

Vector retrieval algorithm | Advantage | Disadvantage | Scenario |

Quantized clustering | Low CPU utilization and memory usage. |

| The quantized clustering algorithm is suitable for scenarios in which hundreds of millions of data records need to be processed and high data accuracy and low query latency are not required. |

HNSW | High recall rate and high query speed. | High CPU utilization and memory usage. | The HNSW algorithm is suitable for scenarios in which tens of millions of data records need to be processed and high data accuracy and low query latency are required. |

linear | Recall rate of 100%. |

| The linear algorithm is suitable for scenarios in which tens of thousands of data records need to be processed. |

QGraph | Low CPU utilization and memory usage. High speed and performance. | Lower recall rate than HNSW. | The QGraph algorithm is suitable for scenarios in which billions of data records need to be processed. High query speed and performance are required and high accuracy is not required. |

CAGRA | GPU-based algorithm. Several times or even dozens of times higher performance than CPU-based algorithms. | High GPU costs and low cost-effectiveness in scenarios with low queries per second (QPS). | The CAGRA algorithm is suitable for scenarios in which high QPS and low time consumption are required. |