This topic describes how to use LTS to import full data and incremental data from an ApsaraDB RDS instance to Lindorm.

Precautions

Starting March 10, 2023, full data synchronization from ApsaraDB RDS is no longer available for new LTS instances. An LTS instance purchased after March 10, 2023 cannot be used to synchronize full data from ApsaraDB RDS. An LTS instance purchased before March 10, 2023 can still be used to synchronize full data from ApsaraDB RDS.

Scenarios

You want to import historical data from an ApsaraDB RDS instance to Lindorm to reduce storage costs.

You want to import full data from an ApsaraDB RDS instance to Lindorm.

Prerequisites

The LTS instance was purchased before March 10, 2023.

You are logged on to the web UI of the LTS instance. For more information, see Create a synchronization task.

LTS, the destination ApsaraDB for HBase cluster, and the source ApsaraDB RDS instance are connected over the network or are deployed in the same virtual private cloud (VPC).

Features

The full data and incremental data migration feature is supported. Full data and incremental data in an ApsaraDB RDS instance can be imported to a LindormTable instance that is compatible with HBase.

The data change feature is supported. Data can be modified while it is migrated from the source table to the destination table, for example by using Jtwig expressions. For more information, see the Sample configurations section in this topic.

Data in multiple tables can be imported at a time.

Limits

The data source for full data import must be a MySQL database.

The data source for incremental data import must be a Data Transmission Service (DTS) task.

The destination data source can be a LindormTable node that is accessed by using the SQL endpoint or the HBase-compatible endpoint.

Procedure

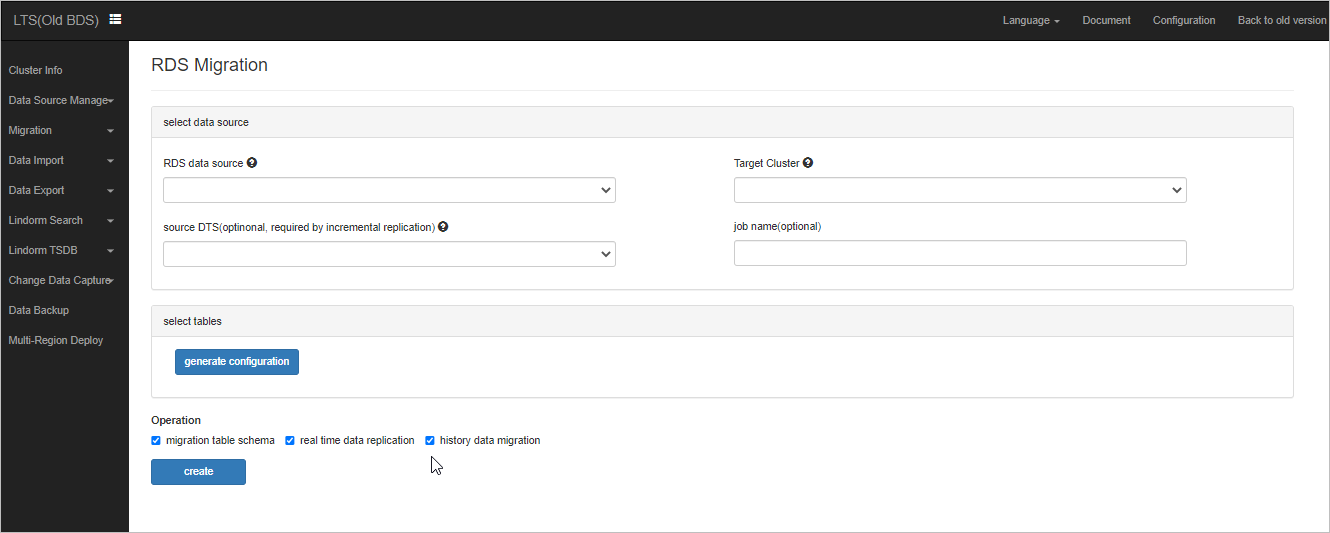

In the web UI of the LTS instance, choose Data Import > RDS Migration.

Click Create.

Select the ApsaraDB RDS data source, the DTS data source, and the destination data source.

Note: For more information about how to add a LindormTable destination data source, see Add a LindormTable data source.

For more information about how to add an ApsaraDB RDS data source, see ApsaraDB RDS data source.

For more information about how to add a DTS data source, see Add a DTS data source.

Click Edit to view the default configuration and modify it if needed. For more information, see the Sample configurations section in this topic.

Select the source tables whose data you want to import. Then, click Generate Configuration.

Note: When LTS imports full data and incremental data from the specified ApsaraDB RDS tables to LindormTable, it imports the historical data first and then the incremental data.

When LTS migrates data to a LindormTable node by using the SQL endpoint, the system by default generates destination columns whose names and data types are the same as those of the columns in the source ApsaraDB RDS tables. You can specify custom names for the destination columns and custom mappings between source columns and destination columns based on your business requirements. For more information, see the Sample configurations section in this topic.

When data is migrated to the destination instance, the system automatically creates a column family named f. Each column of the selected ApsaraDB RDS tables is mapped to one column in the f column family. The row key is the concatenation of the values of the primary key columns of the source ApsaraDB RDS table.

If the default configuration is used, data that is deleted from the source ApsaraDB RDS tables after the import job is completed is not deleted from the destination table. If you want LTS to also delete this data from the destination table, change the skipDelete parameter in the configuration. For more information, see the Sample configurations section in this topic.
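To make the default behaviors described in this note more concrete, the following Python sketch models them in memory. It is a hypothetical illustration, not LTS code: the function names, the `Change` event shape, and the row key encoding (primary key values joined in declared order with no separator) are all assumptions. The dictionary keys `name`, `value`, `isPk`, and `type` mirror the fields in the sample configurations, and `skip_delete` mirrors the `skipDelete` parameter.

```python
"""Hypothetical model of the default column mapping, row key, and skipDelete
behavior. Not LTS code; names, key encoding, and event format are assumptions."""
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


def default_sql_columns(schema: List[Tuple[str, str]], pks: List[str],
                        renames: Optional[Dict[str, str]] = None) -> List[dict]:
    # Each destination column mirrors a source column (same name and type)
    # unless a custom destination name is supplied in `renames`.
    renames = renames or {}
    return [{"name": renames.get(col, col), "value": col,
             "isPk": col in pks, "type": typ} for col, typ in schema]


def to_hbase_cells(row: Dict[str, object],
                   pks: List[str]) -> Tuple[str, Dict[str, str]]:
    # Row key: primary key values concatenated in declared order, no separator
    # (assumed). Every source column becomes one qualifier in family "f".
    row_key = "".join(str(row[pk]) for pk in pks)
    return row_key, {f"f:{col}": str(val) for col, val in row.items()}


@dataclass
class Change:
    op: str                                  # "UPSERT" or "DELETE" (hypothetical)
    key: str                                 # destination row key
    values: Optional[Dict[str, str]] = None


def apply_changes(changes: List[Change], table: Dict[str, Dict[str, str]],
                  skip_delete: bool = True) -> None:
    # Deletes from the source are ignored unless skip_delete is False.
    for ch in changes:
        if ch.op == "DELETE":
            if not skip_delete:
                table.pop(ch.key, None)
        else:
            table.setdefault(ch.key, {}).update(ch.values or {})


if __name__ == "__main__":
    print(to_hbase_cells({"id": 12, "cluster_id": "c1"}, ["id", "cluster_id"]))
    # ('12c1', {'f:id': '12', 'f:cluster_id': 'c1'})
```

One consequence of plain concatenation is that different rows can produce the same row key: `id=1, cluster_id="2c"` and `id=12, cluster_id="c"` both yield `12c`. This is one reason to define the row key explicitly (for example with a Jtwig expression) when the primary key has several columns.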

Click Create.

Sample configurations

The following code provides a sample configuration for importing data to a table by using Lindorm SQL. For more information about the Jtwig syntax that is used in the configuration, see Jtwig Reference Manual.

```json
{
  "reader": {
    "querySql": [
      "select * from dts.cluster where id < 1000", // Queries that synchronize full data. Each statement is executed by one read thread.
      "select * from dts.cluster where id >= 1000" // We recommend that you split queries to increase the read speed and reduce the cost of retries.
    ]
  },
  "writer": {
    "columns": [
      {
        "name": "f:id", // The name of the column in the destination table.
        "value": "id", // The name of the column in the source table.
        "isPk": true, // Specifies whether the column is a primary key column.
        "type": "BIGINT" // Optional. The data type of the column in the destination table. If this parameter is not specified, the data type of the source column is used.
      },
      {
        "name": "cluster_id",
        "value": "cluster_id",
        "isPk": false
      },
      {
        "name": "id_and_cluster",
        "value": "{{concat(id, cluster_id)}}", // Jtwig expressions can be used to change data.
        "isPk": true
      }
    ],
    "config": {
      "skipDelete": true // Delete operations are skipped.
    },
    "table": {
      "name": "dts:cluster", // The name of the destination table in the Lindorm cluster, in the namespace:table format used in this example.
      "parameter": {
        "compression": "ZSTD"
      }
    },
    "sourceTable": "dts.cluster"
  }
}
```

The following code provides a sample configuration for importing data to a table by using the ApsaraDB for HBase API.

```json
{
  "reader": {
    "querySql": [
      "select * from dts.cluster where id < 1000", // Queries that synchronize full data. Each statement is executed by one read thread.
      "select * from dts.cluster where id >= 1000" // We recommend that you split queries to increase the read speed and reduce the cost of retries.
    ]
  },
  "writer": {
    "columns": [
      {
        "name": "f:id", // The name of the column in the destination table, in the family:qualifier format.
        "value": "id", // The name of the column in the source table.
        "isPk": false // Optional. This parameter does not affect the import process.
      },
      {
        "name": "f:cluster_id",
        "value": "cluster_id",
        "isPk": false
      },
      {
        "name": "f:id_and_cluster",
        "value": "{{concat(id, cluster_id)}}" // Jtwig expressions can be used to change data.
      }
    ],
    "rowkey": {
      "value": "id" // The row key of the destination table, built from the primary key columns of the source ApsaraDB RDS table. This value can be specified by using Jtwig syntax.
    },
    "config": {
      "skipDelete": true // Delete operations are skipped.
    },
    "table": {
      "name": "dts:cluster", // The name of the table in the destination cluster.
      "parameter": {
        "compression": "ZSTD", // The compression algorithm of the destination table. We recommend that you use Zstandard (ZSTD).
        "split": ["1", "5", "9", "b"] // The split keys that are used to pre-partition the destination table.
      }
    },
    "sourceTable": "dts.cluster"
  }
}
```
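Both samples rely on splitting: the reader splits the full-data query into `id` ranges, one per `querySql` entry and read thread, and the destination table is pre-partitioned by the keys in `split`. The following Python sketch illustrates both ideas under stated assumptions. `split_queries` and `region_for` are hypothetical helpers, not part of LTS. `region_for` assumes HBase-style regions where each split key is the inclusive start key of a region, and UTF-8 row keys, whose byte order matches Python's code-point string order.

```python
"""Hypothetical helpers that illustrate range-split reader queries and
pre-split destination regions. Not part of LTS."""
import bisect
from typing import List, Optional


def split_queries(table: str, key: str, bounds: List[int]) -> List[str]:
    # Build one range query per interval; each could be a querySql entry
    # that is executed by its own read thread.
    queries: List[str] = []
    lower: Optional[int] = None
    for upper in [*bounds, None]:
        conds = []
        if lower is not None:
            conds.append(f"{key} >= {lower}")
        if upper is not None:
            conds.append(f"{key} < {upper}")
        where = f" where {' and '.join(conds)}" if conds else ""
        queries.append(f"select * from {table}{where}")
        lower = upper
    return queries


def region_for(row_key: str, split_keys: List[str]) -> int:
    # Region 0 holds keys below split_keys[0]; region i holds
    # [split_keys[i-1], split_keys[i]); the last region holds the rest.
    return bisect.bisect_right(split_keys, row_key)


if __name__ == "__main__":
    for q in split_queries("dts.cluster", "id", [1000]):
        print(q)
    # select * from dts.cluster where id < 1000
    # select * from dts.cluster where id >= 1000
    print(region_for("7", ["1", "5", "9", "b"]))  # 2
```

Choosing boundaries so that each range holds a similar number of rows keeps the read threads evenly loaded. Likewise, split keys work best when they match the actual distribution of row keys, so that writes are spread across the pre-split regions.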