This topic describes basic principles and configuration optimization methods for batch processing of Realtime Compute for Apache Flink.

Background information

As a computing framework with unified stream processing and batch processing, Flink can process batch data and streaming data at the same time. The stream processing and batch processing modes of Flink share multiple core execution mechanisms. However, the two modes have key differences in terms of job execution mechanisms, configuration parameters, and performance optimization. This topic describes the execution mechanisms and configuration parameters of batch jobs of Realtime Compute for Apache Flink. You can optimize jobs more efficiently after you understand the key differences between the stream and batch processing modes. This helps troubleshoot issues that you may encounter when you run batch jobs of Realtime Compute for Apache Flink.

Realtime Compute for Apache Flink supports batch processing in features such as draft development, job O&M, workflows, queue management, and data profiling. For more information about how to perform batch processing, see Get started with batch processing of Realtime Compute for Apache Flink.

Differences between batch and streaming jobs

You must understand the differences between the execution mechanisms of batch and streaming jobs before you learn about the configuration parameters and optimization methods of batch jobs.

Execution mode

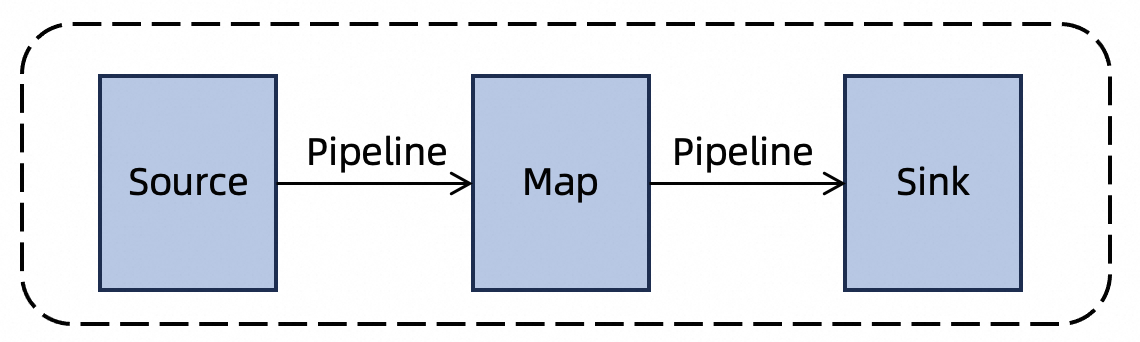

Streaming job: The stream processing mode focuses on processing continuous and unbounded data streams. This mode is used to achieve low-latency data processing. In this mode, data is instantly passed between operators and processed in pipeline mode. Therefore, the subtasks of all streaming job operators are deployed and run at the same time.

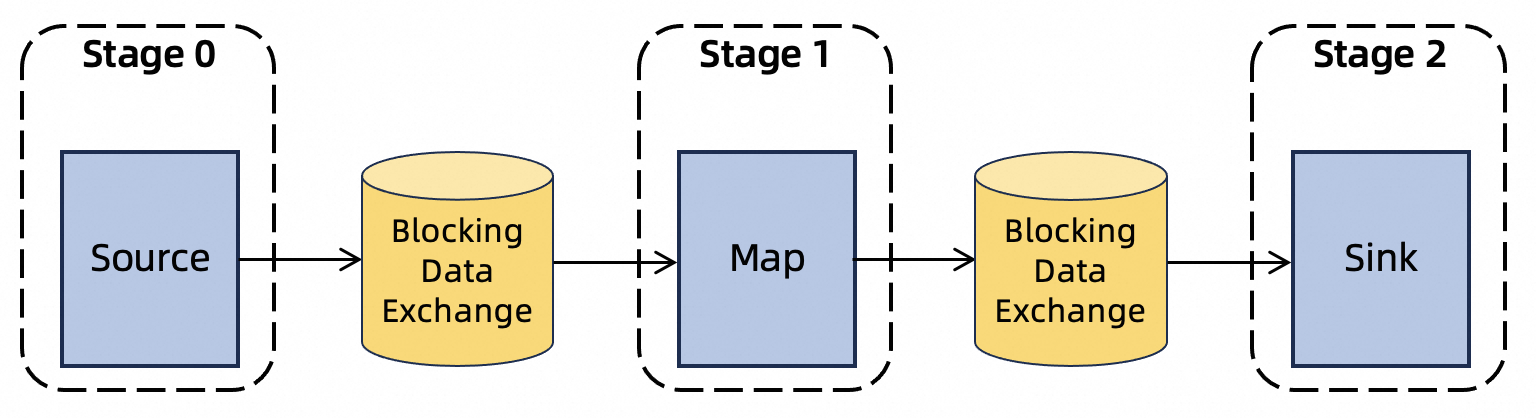

Batch job: The batch processing mode focuses on processing bounded datasets. This mode is used to achieve a high throughput when processing data. In this mode, a job is usually run in multiple phases. For phases that are independent of each other, subtasks can run in parallel to improve resource utilization. For phases that have data dependencies, downstream subtasks can be started only after the upstream subtasks are complete.

Data transmission

Streaming job: To process data with low latency, the intermediate data of a streaming job is held in memory and transmitted directly between operators. The intermediate data is not persisted. If the downstream operators cannot keep up with the incoming data, the upstream operators may experience backpressure.

Batch job: The intermediate result data of a batch job is written to storage for downstream operators to use. By default, the result files are stored on the local disks of the TaskManagers. If the remote shuffle service is used, the result files are stored in the remote shuffle service.

Resource requirements

Streaming job: All resources must be allocated before a streaming job starts. This ensures that all subtasks of the job can be deployed and run at the same time.

Batch job: Not all resources need to be allocated before a batch job starts. Realtime Compute for Apache Flink can schedule subtasks in batches as soon as their input data is ready. This makes efficient use of existing resources and allows batch jobs to run smoothly when resources are limited, even if only one slot is available.

Restart of a failed subtask

Streaming job: A streaming job can be resumed from the last checkpoint or savepoint if a failure occurs. This way, the job can be resumed at the lowest cost. When a job resumes, all subtasks of the job are restarted because the intermediate result data of streaming jobs is not persisted.

Batch job: The intermediate result data of a batch job is stored on disk. Therefore, the intermediate result data can be reused when a subtask restarts due to a failure. Only the failed subtask and its downstream subtasks need to be restarted, and the job does not need to reprocess all data from the sources. This reduces the number of subtasks that need to be restarted after a failure and makes job recovery more efficient. The restarted subtasks run from the beginning because batch jobs do not have a checkpoint mechanism.
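The restart scope of a batch job described above can be sketched as a graph traversal. The following Python snippet is a simplified model and not the scheduler's actual implementation. The function name `subtasks_to_restart` and the four-node job graph are hypothetical and exist only for illustration.

```python
from collections import deque


def subtasks_to_restart(downstream, failed):
    """Return the failed subtask plus every subtask reachable downstream of it.

    `downstream` maps each subtask to the subtasks that consume its output.
    Upstream subtasks are not included because their persisted intermediate
    results can be reused.
    """
    to_restart = {failed}
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for consumer in downstream.get(current, []):
            if consumer not in to_restart:
                to_restart.add(consumer)
                queue.append(consumer)
    return to_restart


# Hypothetical job graph: two sources feed a join, which feeds a sink.
graph = {
    "source_a": ["join"],
    "source_b": ["join"],
    "join": ["sink"],
    "sink": [],
}

# If the join fails, only the join and the sink are restarted.
print(sorted(subtasks_to_restart(graph, "join")))  # ['join', 'sink']
```

In a streaming job, the equivalent set would be every subtask in the graph, because no intermediate results are persisted.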

Key configuration parameters and optimization methods

This section describes the key configurations of batch jobs.

Resource configurations

CPU and memory resources

In the Resources section of the Configuration tab of a job, you can configure CPU and memory resources for a single JobManager and a single TaskManager. Take note of the following configuration suggestions:

JobManager: We recommend that you configure one CPU core and at least 4 GiB of memory for the JobManager of a job. This ensures smooth scheduling and management of the job.

TaskManager: We recommend that you configure resources for a TaskManager based on the number of slots. Specifically, we recommend that you configure 1 CPU core and 4 GiB of memory for each slot. For a TaskManager with n slots, n CPU cores and 4n GiB of memory are recommended.

By default, one slot is allocated to each TaskManager in a batch job of Realtime Compute for Apache Flink. To reduce the overhead from TaskManager scheduling and management, you can increase the number of slots for each TaskManager to 2 or 4.

The disk space of each TaskManager is limited and proportional to the number of CPU cores that you configure. Specifically, each CPU core is allocated 20 GiB of disk space. A minimum of 20 GiB and a maximum of 200 GiB of disk space can be allocated to a TaskManager.

If you increase the number of slots on each TaskManager, more subtasks are run on the same TaskManager node. This occupies more local disk space and may lead to insufficient disk space. If the disk space is insufficient, the job fails and restarts.

The JobManager and TaskManagers of a large-scale job or a job with a complex network topology may require higher resource configurations. In this case, you must configure more resources for the job based on your business requirements to ensure the efficient and stable running of the job.

If you encounter a resource-related issue when you run a job, you can troubleshoot the issue by following the instructions provided in the following document:

To ensure the stable running of jobs, you must configure at least 0.5 CPU core and 2 GiB of memory for each JobManager and TaskManager.
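The sizing guidance in this section can be turned into a small calculation. The following Python sketch assumes the recommended ratio of 1 CPU core and 4 GiB of memory per slot and the disk rule of 20 GiB per CPU core, bounded between 20 GiB and 200 GiB. The function name and the returned keys are hypothetical, and the per-slot disk figure is only an average used to illustrate the trade-off.

```python
def recommended_taskmanager_resources(slots):
    """Estimate the resources of one TaskManager from its slot count."""
    if slots < 1:
        raise ValueError("a TaskManager needs at least one slot")
    cpu_cores = slots          # 1 CPU core per slot
    memory_gib = 4 * slots     # 4 GiB of memory per slot
    # 20 GiB of disk per CPU core, at least 20 GiB and at most 200 GiB.
    disk_gib = min(max(20 * cpu_cores, 20), 200)
    return {
        "cpu_cores": cpu_cores,
        "memory_gib": memory_gib,
        "disk_gib": disk_gib,
        "disk_gib_per_slot": disk_gib / slots,
    }


for slots in (1, 4, 16):
    print(slots, recommended_taskmanager_resources(slots))
```

With 16 slots, the disk space reaches the 200 GiB cap, so the average disk space per slot drops to 12.5 GiB. This is one reason why a large number of slots per TaskManager can lead to insufficient disk space.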

Maximum number of slots

You must configure the maximum number of slots that can be allocated to a Realtime Compute for Apache Flink job. Batch jobs of Realtime Compute for Apache Flink can run in scenarios where resources are limited. You can configure the maximum number of slots in a batch job to cap the amount of resources that the job can use. This helps prevent batch jobs from occupying excessive resources and affecting other jobs. For details, see "How do I limit the number of TaskManagers?" in the FAQ section.

Parallelism

You can configure the global parallelism or the automatic parallelism inference feature in the Resources section of the Configuration tab of a job.

Global parallelism: The global parallelism determines the maximum number of subtasks that can be run in parallel in a job. You can enter a value in the Parallelism field in the Resources section of the Configuration tab of a job. This value is used as the default global parallelism.

Automatic parallelism inference: After you configure the automatic parallelism inference feature for a batch job of Realtime Compute for Apache Flink, the job automatically infers the parallelism by analyzing the total amount of data consumed by each operator and the average amount of data expected to be processed by each subtask. This helps you optimize the parallelism configuration.

In Realtime Compute for Apache Flink that uses Ververica Runtime (VVR) 8.0 or later, you can configure the parameters that are described in the following table to further optimize the automatic parallelism inference feature. You can configure the parameters in the Parameters section of the Configuration tab of a job.

In Realtime Compute for Apache Flink that uses VVR 8.0 or later, the automatic parallelism inference feature is enabled by default for batch jobs. The global parallelism that you configure is used as the upper limit of the automatically inferred parallelism. We recommend that you use Realtime Compute for Apache Flink that uses VVR 8.0 or later to improve the performance of batch jobs.

| Parameter | Description | Default value |
| --- | --- | --- |
| execution.batch.adaptive.auto-parallelism.enabled | Specifies whether to enable the automatic parallelism inference feature. | true |
| execution.batch.adaptive.auto-parallelism.min-parallelism | The minimum value of the automatically inferred parallelism. | 1 |
| execution.batch.adaptive.auto-parallelism.max-parallelism | The maximum value of the automatically inferred parallelism. If this parameter is not configured, the global parallelism is used as the default value. | 128 |
| execution.batch.adaptive.auto-parallelism.avg-data-volume-per-task | The average amount of data expected to be processed by each subtask. Realtime Compute for Apache Flink determines the parallelism of an operator based on the configuration of this parameter and the amount of data to be processed by the operator. | 16 MiB |
| execution.batch.adaptive.auto-parallelism.default-source-parallelism | The default parallelism of the source operator. Realtime Compute for Apache Flink cannot accurately perceive the amount of data to be read by the source operator. Therefore, you need to configure the parallelism of the source operator. If this parameter is not configured, the global parallelism is used. | 1 |
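How these parameters interact can be approximated with a short calculation. The following Python sketch is a simplified model that assumes the inferred parallelism is the input volume divided by the average data volume per subtask, rounded up and then bounded by the minimum and maximum parallelism. The function name `infer_parallelism` is hypothetical, and the runtime may apply additional rules that are not modeled here.

```python
import math

MIB = 1024 * 1024


def infer_parallelism(input_bytes,
                      avg_bytes_per_task=16 * MIB,
                      min_parallelism=1,
                      max_parallelism=128):
    """Approximate the parallelism inferred for a non-source operator."""
    wanted = math.ceil(input_bytes / avg_bytes_per_task)
    # Keep the result within [min_parallelism, max_parallelism].
    return max(min_parallelism, min(wanted, max_parallelism))


print(infer_parallelism(1024 * MIB))       # 64
print(infer_parallelism(10 * 1024 * MIB))  # 128, capped by max-parallelism
print(infer_parallelism(0))                # 1, raised to min-parallelism
```

Under this model, increasing the average data volume per subtask lowers the inferred parallelism, and decreasing it raises the inferred parallelism up to the configured maximum.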

FAQ

How do I limit the number of TaskManagers?

TaskManagers (TMs) are dynamically created and released on demand in batch jobs to optimize resource utilization. Consider the following example:

A job has a parallelism of 16 and each TM has 1 slot.

16 TMs are initially launched.

When some subtasks finish early, their corresponding TMs are automatically released after a period of inactivity.

As subsequent operators begin, Flink requests new TMs, potentially causing the total number to temporarily exceed the job parallelism (e.g., reaching 17, 18, or 19 TMs).

This dynamic behavior is normal for elastic batch job scheduling and not an exception.

To enforce a strict limit, configure the maximum number of slots for the job.

What is the difference between parallelism and slots?

Parallelism: Defines the maximum number of parallel subtasks an operator can execute. It dictates how many instances of a specific task can potentially run concurrently.

Slot: A unit of resource allocation within a Flink job. The total number of available slots determines how many subtask instances can run simultaneously across the entire cluster.

Streaming jobs: Typically enable slot sharing by default. At startup, they request a number of slots equal to the global parallelism to ensure all subtasks begin executing immediately.

Batch jobs: Do not pre-allocate all resources. Their actual parallelism is limited by the number of currently available slots, even if the global parallelism is set higher.

Example: A streaming job with a parallelism of 4 requires 4 slots. A batch job can run at most 4 subtasks at the same time if the cluster has only 4 available slots. The remaining subtasks wait for slots to be released before they can run.
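The relationship between parallelism and slots in a batch job can be illustrated with simple arithmetic. The following Python sketch assumes that each slot runs one subtask at a time and that all subtasks of an operator take about the same time, which is a simplification. The function name `scheduling_rounds` is hypothetical.

```python
import math


def scheduling_rounds(subtasks, available_slots):
    """Estimate how many rounds a batch operator needs to run all subtasks."""
    if available_slots < 1:
        raise ValueError("at least one slot is required")
    return math.ceil(subtasks / available_slots)


# An operator with a parallelism of 16 on a cluster with 4 free slots
# runs 4 subtasks at a time and needs 4 rounds.
print(scheduling_rounds(16, 4))  # 4

# With a single slot, the same operator still completes, one subtask at a time.
print(scheduling_rounds(16, 1))  # 16
```

A streaming job has no equivalent of multiple rounds, because all of its subtasks must be running at the same time.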

What do I do if a batch job becomes stuck when it is running?

You can monitor the memory usage, CPU utilization, and thread usage of the TaskManagers of a job. For more information, see Monitor job performance.

Troubleshoot memory issues: Check the memory usage to determine whether garbage collection (GC) frequently occurs due to insufficient memory. If the memory of the TaskManagers is insufficient, increase memory resources for the TaskManagers to reduce performance issues caused by frequent GC.

Analyze CPU usage: Check whether specific threads consume a large number of CPU resources.

Trace thread stacks: Analyze execution bottlenecks of the current operator based on thread stack information.

How do I fix the "No space left on device" error?

If the error message "No space left on device" appears when you run a batch job of Realtime Compute for Apache Flink, the local disk space that is used by the TaskManagers of the job to store intermediate result files is used up. The available disk space of each TaskManager is limited and proportional to the number of CPU cores that you configure.

Solutions:

Reduce the number of slots on each TaskManager. This reduces the number of subtasks that run in parallel on a single TaskManager and thereby reduces the demand for local disk space.

Increase the number of CPU cores of a TaskManager. This expands the disk space of the TaskManager.

References

For more information about how to use key features of Realtime Compute for Apache Flink to perform batch processing, see Get started with batch processing of Realtime Compute for Apache Flink.

For more information about how to configure parameters for job running, see How do I configure custom parameters for job running?