This topic describes the content of the health report of an E-MapReduce (EMR) Serverless StarRocks instance. This topic also explains the fields in the health report. Health reports are generated with a delay of one day. A health report consists of the following parts: SQL queries, table analysis, import tasks, and cache analysis.

View the health report of a StarRocks instance

-

Navigate to the EMR Serverless StarRocks instance list page.

-

Log in to the E-MapReduce console.

-

In the left-side navigation pane, select .

-

In the top menu bar, select a region that suits your business needs.

-

On the Instances tab, find the desired StarRocks instance and click the name of the instance.

Click the Health Report tab.

On the page that appears, you can view information on the SQL Query, Table Analysis, Import Tasks, and Cache Analysis tabs.

SQL Query

On this tab, you can view information in the following sections: Query Latency, DB Lock, SQL Analysis, and Parameterized SQL Analysis. You can configure the Date, SqlCategory, and Catalog parameters to view specific top SQL metrics.

Valid values of the SqlCategory parameter:

DML: DML statements that are used to query and modify data. Example: SELECT, UPDATE, and DELETE statements.

DDL: DDL statements that are used to define and modify the data structure. Example: CREATE and ALTER statements.

Other: SQL statements other than DML and DDL statements, such as auxiliary statements. Example: SHOW statements.

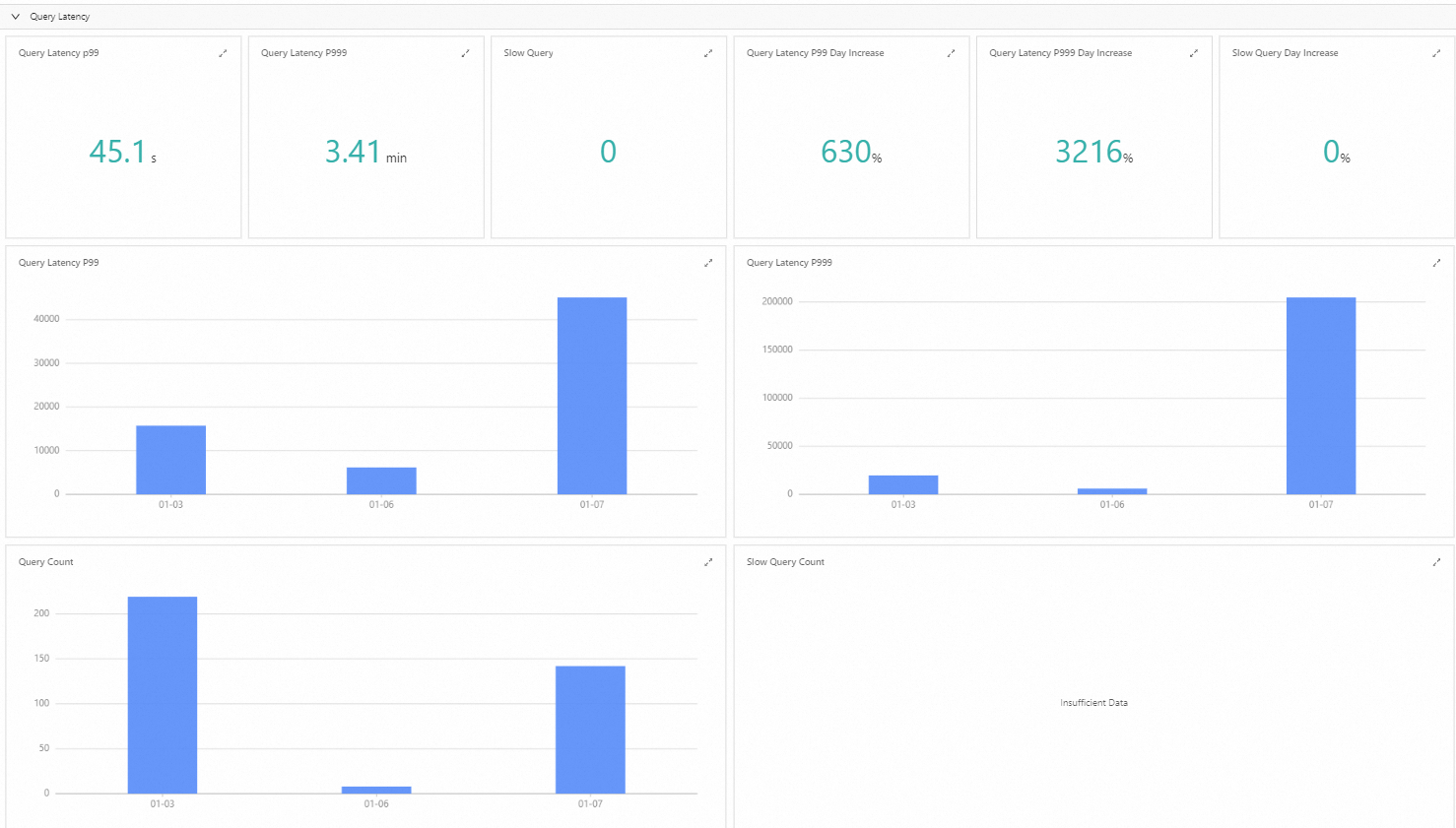

Query Latency

This section displays the metrics related to query latency. The values of the metrics are obtained based on the audit data of a specific day.

For example, the Query Latency P99 metric specifies the time within which 99% of requests are responded. This metric provides insight into the query performance by analyzing the distribution of the system response time. This metric is essential for assessing service quality and user experience.

The Query Latency P99 metric reflects the service response speed that most users actually experience. When the value of the Query Latency P99 metric is large, it may indicate the need to increase computing resources or optimize the query logic to enhance processing efficiency.

You can view the query latency data of a specific day to identify potential performance risks on an instance in advance and prevent your business from being affected.

DB Lock

Database locks are used to monitor and analyze lock races that occur during access to a database. Database locks prevent multiple transactions from accessing or modifying the same data resource at the same time. To ensure data consistency and integrity, database locks are used to control concurrent access to specific data rows or tables. If a transaction needs to wait for a long time to acquire a lock, the response speed and service quality of the overall system decrease. Data in this section can help you identify and troubleshoot performance issues.

SQL Analysis

This section displays the top SQL statements and related fields based on specific dimensions, such as query time, CPU consumption, and memory consumption. You can troubleshoot potential performance issues based on the fields. For example, you can view the top 10 SQL statements with the longest execution time on a specific day in the Slow Query Top 10 section. You can optimize the slow SQL statements based on the detailed information about query profiles. For more information about profile analysis, see Query profile.

The following table describes the fields.

Field | Description |

QueryId | The unique ID that is generated when an SQL statement is executed in StarRocks. An ID is generated each time an SQL statement is executed. |

User | The user who executes SQL statements in StarRocks. |

Query Time (ms) | The time consumed to execute SQL statements. Unit: millisecond. |

CPU Cost (ns) | The CPU time consumed to execute SQL statements. Unit: nanosecond. |

Memory Bytes | The memory consumed to execute SQL statements. Unit: bytes. |

Scan Bytes | The amount of data that is accessed during the execution of SQL statements. Unit: bytes. |

Scan Rows | The number of data rows that are accessed during the execution of SQL statements. |

Return Rows | The number of rows that are returned after SQL statements are executed. |

SQL Text | The texts of the executed SQL statement. |

Parameterized SQL Analysis

SQL statement parameterization is the process of replacing constants in an SQL statement with the ? parameter, deleting comments, and adjusting spaces in the SQL statement to generate a new SQL statement that has the same syntax as the original SQL statement. You can parameterize an SQL statement to generate a new SQL statement that has the same syntax as the original SQL statement. This helps you analyze SQL statements of the same type in a comprehensive manner.

In the following example, after parameterization of two SQL statements, one SQL statement is obtained. The result shows that the SQL statements are of the same type.

Original SQL statements

SELECT * FROM orders WHERE customer_id=10 AND quantity>20 SELECT * FROM orders WHERE customer_id=20 AND quantity>100SQL statement after parameterization

SELECT * FROM orders WHERE customer_id=? AND quantity>?

The Parameterized SQL Analysis section displays top SQL statements and related fields based on the execution count, execution time, variance and covariance (VC) of execution time, total CPU consumption, total memory consumption, and execution error count.

Parameterized SQL statements can help you achieve the following purposes:

Obtain the overall execution status of SQL statements in StarRocks.

Optimize SQL statements that are frequently executed, take a long time to execute, and consume a large amount of CPU and memory resources to achieve greater optimization benefits.

Use the CV Of Query Time field to measure the stability of SQL execution time and identify potential performance issues. For example, if the values of the CV Of Query Time field for two SQL statements of the same type vary significantly, the larger value indicates that the corresponding SQL statement may be pended due to reasons, such as data skew or insufficient resources.

The following table describes the key fields.

Field

Description

Parameterized SQL ID

The hash value of the parameterized SQL statement.

CV Of Query Time

The ratio of the standard deviation of the execution time of SQL statements to the average value of the execution time of SQL statements. In most cases, a larger value indicates a greater variance in the execution time of SQL statements of the same type.

Execution Count

The total number of times that the parameterized SQL statement is executed.

Parameterized SQL Text

The texts of the parameterized SQL statement.

You can use the Execution Error Count field to identify the causes of SQL statement execution failures.

The following table describes the key fields.

Field

Description

Parameterized SQL ID

The hash value of the parameterized SQL statement.

Execution Error Count

The number of times that the parameterized SQL statement failed to be executed.

Execution Count

The total number of times that the parameterized SQL statement is executed.

Parameterized SQL Text

The texts of the parameterized SQL statement.

Table Analysis

This tab displays top tables and related fields based on the query frequency, types of SQL statements, and data distribution. You can optimize specific tables based on the fields. The following table describes the fields.

Field | Description |

SQL Exec Count | The total number of executions of SQL statements on the table. In most cases, a large value indicates that the table requires fine-grained optimization to improve the performance of the StarRocks instance. |

Associated Parameterized SQL Count | The number of parameterized SQL statements that are associated with the table. You can analyze the types of SQL statements that are executed on the table to optimize the table design. Furthermore, you can identify commonalities among different types of SQL statements and check whether materialized views are required to accelerate the query of data in the table. |

CV Data Size Of Tablet | The CV of tablet data size in a partition. This field reflects the distribution of tablet data in the table. The value of this field is calculated by using the following formula: Standard deviation of tablet data size in a partition/Average tablet data size in the partition. A large value indicates that a partition may have data skew issues. |

Import Tasks

This tab displays statistical data and analysis results of import tasks from multiple dimensions in the Too many versions and Top Analysis sections.

Only statistical data and analysis results of import tasks of StarRocks shared-nothing instances are provided.

Too many versions

This section provides information about tables to which data is frequently imported based on the analysis of logs that contain the error message "too many versions". When the compaction score exceeds 1,000, the "Too many versions" error is reported. To resolve the issue, you can reduce the number of concurrent import tasks and decrease the import frequency.

Top Analysis

Top Analysis of Potential Small Files When Data Load Table

The system analyzes the data files generated by all import tasks of each table to evaluate the severity of potential small file issues and obtain an impact score. This section displays the top 20 tables based on the impact score in descending order. Small files may decrease query performance and reduce compaction efficiency. To reduce the number of small files, we recommend that you perform the following operations:

Specify the number of partitions and buckets based on the actual data size of a table.

Increase the data scale for batch processing. This helps increase the data processing capability and reduces the number of small files in Object Storage Service (OSS).

Adjust the compaction frequency if resources are insufficient. Compaction can integrate data files and improve system performance. However, compaction consumes system resources. Therefore, we recommend that you adjust the compaction frequency to balance resource usage.

The formula used to calculate the impact score of small files varies based on the table type.

Primary key tables: Impact score of small files =

Total number of files/Average file size. If the average file size is small and the number of files is large, small files have a greater impact on a table.Non-primary key tables: Impact score of small files =

Total number of files/Average time required to write data to a single file. If the average time required to write data to a file is short and the number of files is large, small files have a greater impact on a table.

You can use the preceding formulas to quantify the impact exerted by small files and optimize the top 20 tables obtained based on the impact score in descending order to improve the overall performance of the StarRocks instance.

The following table describes the key fields.

Field | Description |

Tables | The collection that contains information about multiple tables that are involved in an import task. |

Table Type | The table type. Primary key tables and non-primary key tables are included. Non-primary key tables include fact tables, aggregate tables, and update tables. |

Small File Score | The score used to evaluate the potential impact of small files on a table. A higher score indicates that small files have a greater impact on the table. |

Update Bucket Count | The total number of tablets that need to be updated for an import task. |

Write File Count | The total number of segment files to which data is written. |

AVG of Write File (KB) | The average data size that is written to a file. This value is calculated by using the following formula: Total data size/Total number of files. |

AVG of Write Time (ms) | The average time required to write data to a file. The value is calculated by using the following formula: Total time required to write data to all files/Total number of files. |

Top Hot Analysis Of Data Load Table

This section displays the top 20 tables based on the number of import tasks in descending order. The import tasks of the top 20 tables are most frequently run and generate the maximum number of data import transactions.

Hot Load Node Analysis

This section displays the statistical data of nodes to help you check whether data is evenly distributed. For example, you can compare the values of the Total Write Size (MB) field for brokers to check whether data is evenly written to the brokers.

Cache Analysis

This tab displays cache-related information about StarRocks shared-data instances in the Table Dimension, Parameterized SQL Dimension, and SQL Dimension sections. You can configure the Date parameter to view cache-related information of a specific day in different sections.

The cache analysis feature is not applicable to StarRocks shared-nothing instances.

Usage notes

Increasing the cache hit ratio of StarRocks instances helps improve query performance. This tab provides a cache analysis of StarRocks instances based on the query profiles of the instances. You can query and obtain cache-related metrics in a query profile. This helps you implement an in-depth analysis of cache usage.

In a query profile, a CONNECTOR_SCAN operator is generated each time a table, view, or materialized view is scanned. The query profile provides various metrics for the CONNECTOR_SCAN operator. The following table describes specific cache-related metrics.

Metric name | Description | Internal table | External table |

Cache hit volume | The number of bytes read from the cache. | CompressedBytesReadLocalDisk | dataCacheReadBytes |

Cache miss volume | The number of bytes read from the remote storage. | CompressedBytesReadRemote | FSIOBytesRead |

Local I/O count | The number of I/O operations that are triggered when data is read from the local cache. | IOCountLocalDisk | dataCacheReadCounter |

Remote I/O count | The number of I/O operations that are triggered when data is read from the remote storage. | IOCountRemote | FSIOCounter |

Local I/O time | The I/O time consumed to read data from the local cache. | IOTimeLocalDisk | dataCacheReadTimer |

Remote I/O time | The I/O time consumed to read data from the remote storage. | IOTimeRemote | FSIOTime |

You can calculate the values of the following cache-related metrics based on the preceding metrics:

Cache hit ratio = Cache hit volume/(Cache hit volume + Cache miss volume)

Local I/O time percentage = Total local I/O time/(Total local I/O time + Total remote I/O time)

Local I/O count percentage = Total local I/O count/(Total local I/O count + Total remote I/O count)

The system extracts the preceding metrics of the CONNECTOR_SCAN operator of all SQL statements from the query profiles of a specific day, obtains other cache-related metrics, such as average cache hit volume and cache hit ratio, and then displays cache-related information in the Table Dimension, Parameterized SQL Dimension, and SQL Dimension sections of the Cache Analysis tab. You can view the information in the sections and analyze the cache usage based on your business requirements. The following table describes the sections.

Section | Scenario |

Table Dimension | This section provides cache-related information about tables with high access frequencies, low cache hit ratios, and high cache miss volumes. In this case, you can optimize cache performance and improve query performance by using methods such as cache prefetch. |

Parameterized SQL Dimension | This section provides cache-related information about the parameterized SQL statements that have the largest number of CONNECTOR_SCAN operators or have the longest average query time. You can optimize the parameterized SQL statements or prefetch cached data of specific tables that are queried by the parameterized SQL statements to improve query performance. |

SQL statement | This section provides cache-related information about SQL statements with long query time, SQL statements with low cache hit ratios, and SQL statements with high cache miss volumes. You can optimize the SQL statements or prefetch cached data of specific tables that are queried by the SQL statements to improve query performance. |

Table Dimension

This section displays top tables and related fields based on the access frequency, cache hit rate, cache hit volume, and cache miss volume. In this section, you can perform the following operations:

View the cache hit statistics of tables that are most frequently accessed. For example, the number of times that a table is accessed is 6411, and the cache hit ratio is 24.2%. In this case, you can increase the cache hit ratio of this table to improve query performance. To optimize cache performance for tables with high access frequencies and low cache hit ratios, you can prefetch cached data for the tables.

View tables with low cache hit ratios and tables with large cache miss volumes. You can prefetch cached data for these tables to optimize cache performance and improve query performance.

During actual analysis, you can determine whether to prefetch the cached data of a table based on multiple fields. For example, a table appears in the Hot Table Top 20, Low Cache Hit Rate Top 20, and Average Cache Miss Volume Top 20 sections under Table Dimension at the same time. This indicates that you need to prefetch cached data for the table.

The following table describes the key fields.

Field | Description |

Scan Count | The number of CONNECTOR_SCAN operators generated by a table. |

Cache Hit Ratio | The ratio of data that is successfully read from the local cache to total data. The value of this field is calculated by using the following formula: |

Local IO Time Percentage | The ratio of I/O time that is consumed to read data from the local cache to the total I/O time. The value of this field is calculated by using the following formula: |

Local IO Count Percentage | The ratio of I/O operations that are triggered when data is read from the local cache to total I/O operations. The value of this field is calculated by using the following formula: |

Average Cache Hit Volume | The average amount of data that is read from the cache. The value of this field is calculated by using the following formula: |

Average Cache Miss Volume | The average amount of data that is read from the remote storage. The value of this field is calculated by using the following formula: |

Parameterized SQL Dimension

SQL statement parameterization is the process of replacing constants in an SQL statement with the ? parameter, deleting comments, and adjusting spaces in the SQL statement to generate a new SQL statement that has the same syntax as the original SQL statement. You can parameterize an SQL statement to generate a new SQL statement that has the same syntax as the original SQL statement. This helps you analyze SQL statements of the same type in a comprehensive manner.

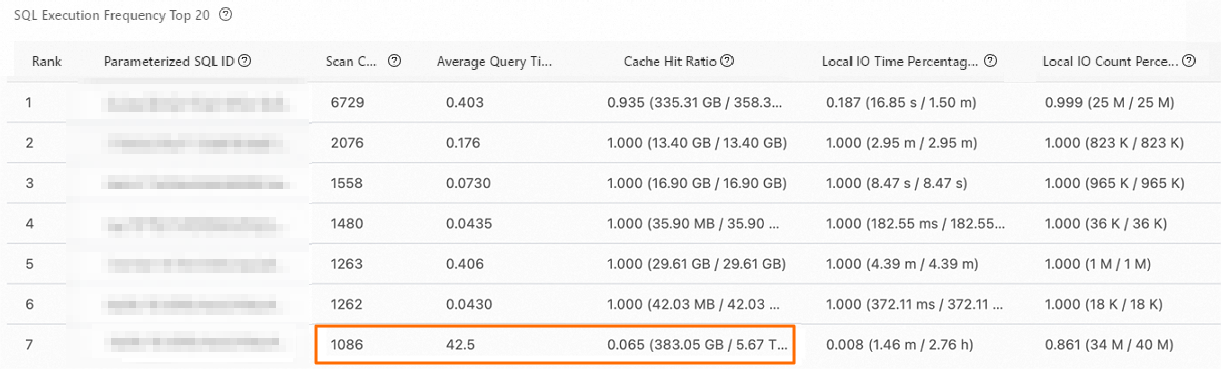

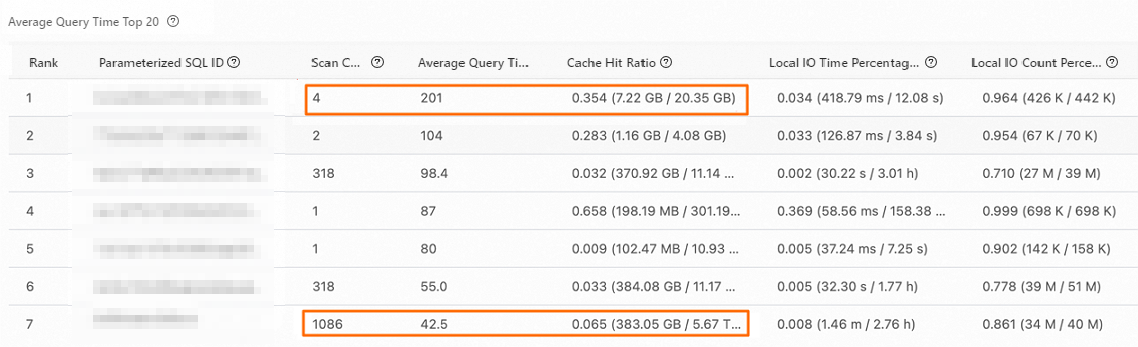

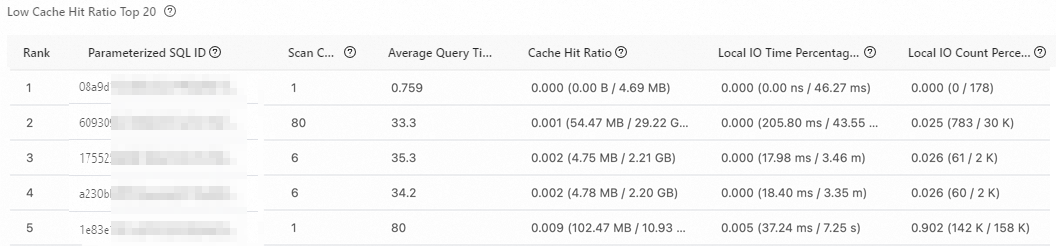

This section displays parameterized SQL statements and specific fields based on the scan count, cache hit ratio, cache hit volume, and cache miss volume.

Parameterized SQL statements can help you achieve the following purposes:

View the cache hit statistics of top parameterized SQL statements based on the number of scans. The following figure shows the top parameterized SQL statements based on the number of scans. In the figure, the number of scans of the seventh parameterized SQL statement is 1,086, and the average query time is 42.5 seconds. However, the cache hit ratio is only 6.5%. In this case, you can optimize the parameterized SQL statement or prefetch cached data of specific tables to optimize cache performance and improve query performance.

View the cache hit statistics of top parameterized SQL statements by average query time. The following figure shows top parameterized SQL statements by average query time. In the figure, the average query time of the first parameterized SQL statement is 201 seconds, and the scan count is 4. This indicates that the parameterized SQL statement corresponds to a cold query. You can determine whether to optimize the SQL statement based on your business requirements. The average query time of the seventh parameterized SQL statement is 42.5 seconds, and the scan count is 1,086. You must pay attention to such parameterized SQL statements.

View parameterized SQL statements with low cache hit ratios and parameterized SQL statements with high cache miss volumes, and determine whether to optimize such parameterized SQL statements based on specific fields, such as Scan Count and Average Query Time(s).

The following table describes the key fields.

Field | Description |

Parameterized SQL ID | The hash value of the parameterized SQL statement. |

Scan Count | The total number of CONNECTOR_SCAN operators generated by all SQL statements that correspond to the parameterized SQL statement. |

Cache Hit Ratio | The ratio of data that is successfully read from the local cache to total data. The value of this field is calculated by using the following formula: |

Local IO Time Percentage(%) | The ratio of I/O time that is consumed to read data from the local cache to the total I/O time. The value of this field is calculated by using the following formula: |

Local IO Count Percentage | The ratio of I/O operations that are triggered when data is read from the local cache to total I/O operations. The value of this field is calculated by using the following formula: |

Average Cache Hit Volume | The average amount of data that is read from the cache. The value of this field is calculated by using the following formula: |

Average Cache Miss Volume | The average amount of data that is read from the remote storage. The value of this field is calculated by using the following formula: |

Parameterized SQL | The texts of the parameterized SQL statement. |

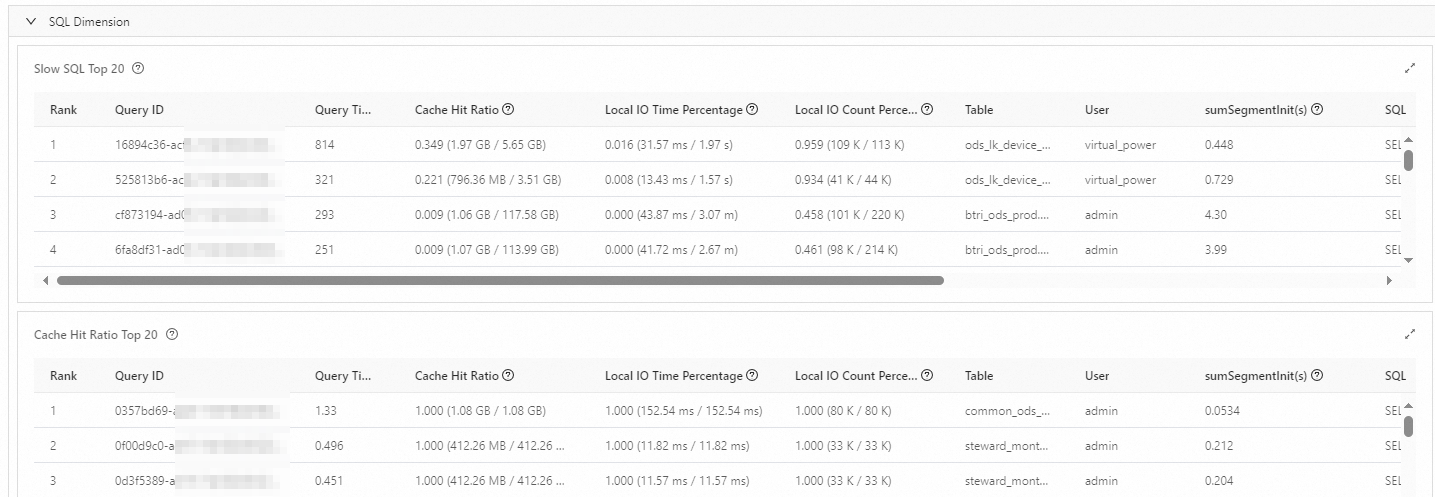

SQL Dimension

This section displays top SQL statements and specific fields based on the query time, cache hit ratio, cache hit volume, and cache miss volume.

You can perform an in-depth analysis of cache performance based on these fields. For example, you can view SQL statements with long query time, SQL statements with low cache hit ratios, and SQL statements with high cache miss volumes. You can optimize the preceding SQL statements or prefetch cached data of specific tables that are queried by the SQL statements to improve the cache hit ratio.

The following table describes the key fields.

Field | Description |

Query ID | The unique ID that is generated when an SQL statement is executed in StarRocks. A new ID is generated each time an SQL statement is executed. |

Query Time (s) | The time consumed to execute an SQL statement. Unit: seconds. |

Cache Hit Ratio | The ratio of data that is successfully read from the local cache to total data. The value of this field is calculated by using the following formula: |

Local IO Time Percentage | The ratio of I/O time that is consumed to read data from the local cache to the total I/O time. The value of this field is calculated by using the following formula: |

Local IO Count Percentage | The ratio of I/O operations that are triggered when data is read from the local cache to total I/O operations. The value of this field is calculated by using the following formula: |

Table | The tables, views, or materialized views that are queried by using an SQL statement. |

Username | The user who executes SQL statements in StarRocks. |

sumSegmentInit | The sum of the initialization time for the segments of all CONNECTOR_SCAN operators in an SQL query. |

SQL | The texts of the executed SQL statement. |