Apache Hudi enables record-level inserts, updates, and deletes on data lake storage, along with change data capture for incremental processing. This topic describes how to create a Hudi-enabled Spark SQL session in EMR Serverless Spark and perform read and write operations on a Hudi table. For more information, see Apache Hudi.

Prerequisites

Before you begin, ensure that you have:

An EMR Serverless Spark workspace. For more information, see Create a workspace.

Step 1: Create an SQL session

Log on to the EMR console.

In the left-side navigation pane, choose EMR Serverless > Spark.

On the Spark page, click the name of the workspace that you want to manage.

In the left-side navigation pane of the EMR Serverless Spark page, choose O&M Center > Sessions.

On the SQL Session tab, click Create SQL Session.

On the Create SQL Session page, expand the Custom Configuration section. In the Spark Configuration editor, add the following configuration, and then click Create. For more information, see Manage SQL sessions.

Note: This configuration uses the default catalog of the workspace. To use an external Hive Metastore as a catalog instead, see Use EMR Serverless Spark to connect to an external Hive Metastore.

| Parameter | Value | Description |
|---|---|---|
| spark.sql.extensions | org.apache.spark.sql.hudi.HoodieSparkSessionExtension | Registers the Hudi SQL extensions with Spark. This enables Hudi-specific SQL support, such as creating tables with USING hudi and setting Hudi table properties in TBLPROPERTIES. |
| spark.sql.catalog.spark_catalog | org.apache.spark.sql.hudi.catalog.HoodieCatalog | Replaces the default Spark catalog with the Hudi catalog so that Spark can manage Hudi tables through standard SQL. |
| spark.serializer | org.apache.spark.serializer.KryoSerializer | Uses Kryo serialization instead of the default Java serializer. Kryo serialization is recommended for Hudi to achieve optimal performance. |

```
spark.sql.extensions             org.apache.spark.sql.hudi.HoodieSparkSessionExtension
spark.sql.catalog.spark_catalog  org.apache.spark.sql.hudi.catalog.HoodieCatalog
spark.serializer                 org.apache.spark.serializer.KryoSerializer
```
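If you maintain this configuration in a template or script, a quick check can catch a missing or mistyped property before you paste it into the Spark Configuration editor. The following is a minimal sketch. The helpers parse_spark_conf and check_hudi_conf are hypothetical and are not part of EMR Serverless Spark. The sketch assumes one key-value pair per line, separated by whitespace, as in the configuration listed above.

```python
# Hypothetical pre-flight check for the Hudi session configuration.
# Not part of EMR Serverless Spark: it only inspects a text snippet and
# assumes one whitespace-separated "key value" pair per line.

REQUIRED_HUDI_CONF = {
    "spark.sql.extensions": "org.apache.spark.sql.hudi.HoodieSparkSessionExtension",
    "spark.sql.catalog.spark_catalog": "org.apache.spark.sql.hudi.catalog.HoodieCatalog",
    "spark.serializer": "org.apache.spark.serializer.KryoSerializer",
}


def parse_spark_conf(text: str) -> dict[str, str]:
    """Parse whitespace-separated 'key value' lines, skipping blanks and # comments."""
    conf: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)  # split on the first run of spaces or tabs
        conf[parts[0]] = parts[1].strip() if len(parts) == 2 else ""
    return conf


def check_hudi_conf(text: str) -> list[str]:
    """Return a list of problems; an empty list means all three properties match."""
    conf = parse_spark_conf(text)
    problems: list[str] = []
    for key, expected in REQUIRED_HUDI_CONF.items():
        actual = conf.get(key)
        if actual is None:
            problems.append(f"missing: {key}")
            continue
        if key == "spark.sql.extensions":
            # spark.sql.extensions accepts a comma-separated list of extension classes.
            ok = expected in [cls.strip() for cls in actual.split(",")]
        else:
            ok = actual == expected
        if not ok:
            problems.append(f"unexpected value for {key}: {actual}")
    return problems


if __name__ == "__main__":
    snippet = """
spark.sql.extensions org.apache.spark.sql.hudi.HoodieSparkSessionExtension
spark.sql.catalog.spark_catalog org.apache.spark.sql.hudi.catalog.HoodieCatalog
"""
    print(check_hudi_conf(snippet))  # ['missing: spark.serializer']
```

The sketch checks only the three properties from the table and ignores any other Spark settings in the snippet.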

Step 2: Write data to and read data from a Hudi table

In the left-side navigation pane of the EMR Serverless Spark page, click Development.

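On the Development tab, click the icon that opens the New dialog box.

In the New dialog box, set Name to users_task, keep the default Type value SparkSQL, and then click OK.

On the users_task tab, paste the following SQL statements into the code editor:

```sql
CREATE DATABASE IF NOT EXISTS ss_hudi_db;

CREATE TABLE ss_hudi_db.hudi_tbl (id INT, name STRING)
USING hudi
TBLPROPERTIES (
  type = 'cow',
  primaryKey = 'id'
);

INSERT INTO ss_hudi_db.hudi_tbl VALUES (1, "a"), (2, "b");

SELECT id, name FROM ss_hudi_db.hudi_tbl ORDER BY id;

DROP TABLE ss_hudi_db.hudi_tbl;

DROP DATABASE ss_hudi_db;
```

The following table describes what each statement does.

| Statement | Description |
|---|---|
| CREATE DATABASE IF NOT EXISTS ss_hudi_db | Creates the ss_hudi_db database if it does not already exist. |
| CREATE TABLE ss_hudi_db.hudi_tbl ... USING hudi TBLPROPERTIES (...) | Creates a Copy-on-Write Hudi table named hudi_tbl with id as the primary key. |
| INSERT INTO ss_hudi_db.hudi_tbl VALUES ... | Writes two records to the table. |
| SELECT id, name FROM ss_hudi_db.hudi_tbl ORDER BY id | Queries the table and returns the records sorted by id. |
| DROP TABLE ss_hudi_db.hudi_tbl | Drops the hudi_tbl table. |
| DROP DATABASE ss_hudi_db | Drops the ss_hudi_db database. |

Hudi table types

The type property in TBLPROPERTIES specifies the storage type. Hudi supports two table types:

| Type | Behavior | Best for |
|---|---|---|
| cow (Copy-on-Write) | Each write creates a new version of the affected data files. Reads are fast because the data is always in columnar format. | Read-heavy workloads |
| mor (Merge-on-Read) | Writes go to a delta log and are merged at read time. Writes are faster, but reads incur merge overhead. | Write-heavy or streaming workloads |

The example above uses cow. Choose the type that matches your read and write pattern.

Primary key

The primaryKey property specifies the column that uniquely identifies each record. Hudi uses the primary key to handle upserts and deduplication. In this example, id serves as the primary key.

Select a database from the Default Database drop-down list and the SQL session that you created from the SQL Session drop-down list.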

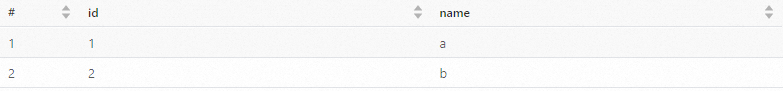

Click Run. The SELECT statement returns the two inserted records, sorted by id:

| id | name |
|---|---|
| 1 | a |
| 2 | b |
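The cow and mor table types described in Step 2 differ mainly in when records that share a primary key are merged. The following toy, in-memory Python model illustrates that difference. The classes CowTable and MorTable are hypothetical. They do not reflect Hudi's file layout, indexing, compaction, or the exact semantics of Hudi SQL statements. They only show where the merge work happens when upserts are keyed on a primary key.

```python
# Toy, in-memory model of the two Hudi table types. It illustrates only *when*
# records are merged by primary key; there are no real files, base/log split,
# indexing, or compaction here.
from dataclasses import dataclass, field


@dataclass
class CowTable:
    """Copy-on-Write (toy): each write produces a new, fully merged version."""
    primary_key: str = "id"
    versions: list[dict] = field(default_factory=list)

    def upsert(self, rows: list[dict]) -> None:
        # Copy the latest version and merge the new rows into the copy.
        current = dict(self.versions[-1]) if self.versions else {}
        for row in rows:
            current[row[self.primary_key]] = row  # same key replaces the record
        self.versions.append(current)  # merge cost is paid here, at write time

    def read(self) -> list[dict]:
        latest = self.versions[-1] if self.versions else {}
        return sorted(latest.values(), key=lambda r: r[self.primary_key])


@dataclass
class MorTable:
    """Merge-on-Read (toy): writes are appended to a log and merged when read."""
    primary_key: str = "id"
    log: list[dict] = field(default_factory=list)

    def upsert(self, rows: list[dict]) -> None:
        self.log.extend(rows)  # cheap write: existing data is not rewritten

    def read(self) -> list[dict]:
        merged: dict = {}
        for row in self.log:  # merge cost is paid here, at read time
            merged[row[self.primary_key]] = row  # later writes win
        return sorted(merged.values(), key=lambda r: r[self.primary_key])


if __name__ == "__main__":
    cow, mor = CowTable(), MorTable()
    for table in (cow, mor):
        table.upsert([{"id": 1, "name": "a"}, {"id": 2, "name": "b"}])
        table.upsert([{"id": 1, "name": "a2"}])  # update of the record with id = 1

    print(cow.read())  # [{'id': 1, 'name': 'a2'}, {'id': 2, 'name': 'b'}]
    print(mor.read())  # [{'id': 1, 'name': 'a2'}, {'id': 2, 'name': 'b'}]
    print(len(cow.versions), len(mor.log))  # 2 3
```

Both toy tables return the same rows, and the primary key prevents a duplicate record for id = 1. The difference is where the merge work happens. The cow model merges on every write and keeps two fully merged versions. The mor model keeps three unmerged log entries and merges them on every read.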

References

Get started with the development of Spark SQL jobs: Learn how to develop and orchestrate SQL jobs.

Apache Hudi: Official Hudi documentation covering table types, indexing, incremental queries, and more.