After you create a Trino cluster in the E-MapReduce (EMR) console, you must configure the cluster before you can use it. This topic describes how to configure connectors and a metadata storage center for data in data lakes so that you can use the Trino cluster that you created.

Background information

To use the Trino service, you can create a DataLake cluster, custom cluster, or Hadoop cluster with the Trino service deployed or create a Trino cluster in the EMR console. A Trino cluster has the following features:

Allocates exclusive resources to Trino, so other services have little impact on the Trino service.

Supports auto scaling.

Supports the analysis of data in data lakes and real-time data warehousing.

Stores no data.

Hudi and Iceberg are not processes and do not occupy cluster resources.

If Hue and JindoData or SmartData are no longer used, you can stop the services.

To use a Trino cluster, you must create a DataLake cluster, custom cluster, or Hadoop cluster first or use an existing DataLake cluster, custom cluster, or Hadoop cluster as a data cluster.

After you create a Trino cluster, perform the following operations:

Configure connectors.

Optional. Configure a metadata storage center for data in data lakes.

If the Metadata parameter of your data cluster is not set to DLF Unified Metadata, you can skip this operation.

Configure connectors

This section describes how to configure query objects in the connectors that you want to use. In this example, a Hive connector is used.

Go to the Services tab.

Log on to the EMR console. In the left-side navigation pane, click EMR on ECS.

In the top navigation bar, select the region where your cluster resides and select a resource group based on your business requirements.

On the EMR on ECS page, find the desired cluster and click Services in the Actions column.

On the Services tab, find the Trino service and click Configure.

Modify parameters.

On the Configure tab, click hive.properties.

Change the value of the hive.metastore.uri parameter to the value of the hive.metastore.uri parameter that is configured for the Trino service deployed in your data cluster.

Save the configuration.

Click Save.

In the dialog box that appears, configure the parameters and click Save.

Make the configuration take effect.

Click Deploy Client Configuration.

In the dialog box that appears, configure the parameters and click OK.

In the Confirm message, click OK.

Restart the Trino service. For more information, see Restart a service.

Configure host information.

Important: If all the data that you want to query is stored in Object Storage Service (OSS) or the Location parameter is configured when you execute the CREATE TABLE statement, you do not need to configure host information.

Some Hive tables may be stored in the specified default directory. When you query data that is stored in your data cluster, you must configure the host information of the master node of your data cluster for each node in the Trino cluster to make sure that you can read data from the tables in the query process.

Method 1: Log on to the EMR console and add a cluster script or a bootstrap action to configure the host information. For more information, see Manually run scripts or Manage bootstrap actions. We recommend that you use this method.

Method 2: Directly modify the hosts file. Perform the following steps:

Obtain the internal IP address of the master node of your data cluster. To view the internal IP address, go to the Nodes tab of your data cluster in the EMR console, find the master node group, and click the icon.

Log on to your data cluster. For more information, see Log on to a cluster.

Run the hostname command to obtain the hostname of the master node. For example, the hostname is in one of the following formats:

Hadoop cluster: emr-header-1.cluster-26****

Other types of clusters: master-1-1.c-f613970e8c****

Log on to the Trino cluster. For more information, see Log on to a cluster.

Run the following command to edit the hosts file:

vim /etc/hosts

Add the internal IP address and hostname of the master node of your data cluster to the end of the /etc/hosts file on each node of the Trino cluster:

Hadoop cluster

192.168.**.** emr-header-1.cluster-26****

Other types of clusters

192.168.**.** master-1-1.c-f613970e8c****
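Method 1 runs a script on every node, so the script should be safe to run more than once. The following is a minimal sketch of such a script, not an official EMR script. The function name add_host_entry is hypothetical, and the IP address and hostname in the usage comment are placeholders that you replace with the values obtained in the previous steps.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add_host_entry FILE IP HOSTNAME
# Appends "IP HOSTNAME" to FILE unless a non-comment line already lists HOSTNAME.
add_host_entry() {
  local file="$1" ip="$2" host="$3"

  # Look for HOSTNAME as an exact field after the address column.
  if awk -v h="$host" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
    return 0  # entry already present, nothing to do
  fi

  printf '%s %s\n' "$ip" "$host" >> "$file"
}

# Example call (run as root; replace both placeholders with real values):
# add_host_entry /etc/hosts "<internal-ip-of-master>" "<hostname-of-master>"
```

Because the function skips hostnames that are already listed, running it again from a bootstrap action or cluster script does not create duplicate lines.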

Configure a metadata storage center for data in data lakes

A metadata storage center for data in data lakes can be automatically configured when you create an EMR cluster of V3.45.0 or a later minor version, or V5.11.0 or a later minor version.

If the Metadata parameter is set to DLF Unified Metadata for your data cluster, you must configure connectors such as Hive, Iceberg, and Hudi. In this case, data queries no longer depend on your data cluster. You can configure the hive.metastore.uri parameter based on your business requirements. Trino can directly access Data Lake Formation (DLF) metadata within the same account.

The following table describes the parameters that are used to configure a metadata storage center for data in data lakes.

Parameter | Description | Remarks |

hive.metastore | The type of the Hive metastore. | Set the value to DLF. |

dlf.catalog.id | The ID of the DLF catalog. | By default, this parameter is set to the ID of your Alibaba Cloud account. |

dlf.catalog.region | The ID of the region in which DLF is activated. | For more information, see Supported regions and endpoints. Note Make sure that the value of this parameter matches the endpoint specified by the dlf.catalog.endpoint parameter. |

dlf.catalog.endpoint | The endpoint of DLF. | For more information, see Supported regions and endpoints. We recommend that you set the dlf.catalog.endpoint parameter to a VPC endpoint of DLF. For example, if you select the China (Hangzhou) region, set the dlf.catalog.endpoint parameter to dlf-vpc.cn-hangzhou.aliyuncs.com. Note You can also use a public endpoint of DLF. For example, if you select the China (Hangzhou) region, set the dlf.catalog.endpoint parameter to dlf.cn-hangzhou.aliyuncs.com. |

dlf.catalog.akMode | The AccessKey mode of the DLF service. | We recommend that you set this parameter to EMR_AUTO. |

dlf.catalog.proxyMode | The proxy mode of the DLF service. | We recommend that you set this parameter to DLF_ONLY. |

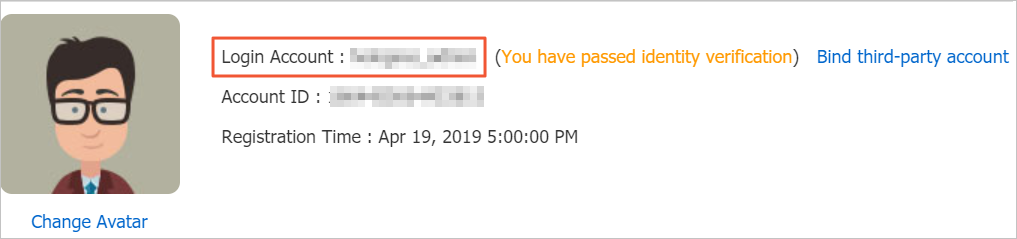

dlf.catalog.uid | The ID of your Alibaba Cloud account. | To obtain the ID of your Alibaba Cloud account, go to the Security Settings page. |
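The requirement that dlf.catalog.region match dlf.catalog.endpoint can be checked before you save the configuration. The following sketch assumes that DLF endpoints follow the pattern of the China (Hangzhou) examples in the table, that is, dlf-vpc.<region>.aliyuncs.com for VPC endpoints and dlf.<region>.aliyuncs.com for public endpoints. Confirm the actual endpoint in Supported regions and endpoints. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: dlf_endpoint_matches_region REGION ENDPOINT
# Returns 0 if ENDPOINT is the VPC or public DLF endpoint for REGION,
# assuming the naming pattern of the China (Hangzhou) examples.
dlf_endpoint_matches_region() {
  local region="$1" endpoint="$2"
  case "$endpoint" in
    dlf-vpc."$region".aliyuncs.com | dlf."$region".aliyuncs.com) return 0 ;;
    *) return 1 ;;
  esac
}

# Example values taken from the table.
region="cn-hangzhou"
endpoint="dlf-vpc.cn-hangzhou.aliyuncs.com"

if dlf_endpoint_matches_region "$region" "$endpoint"; then
  echo "dlf.catalog.region and dlf.catalog.endpoint are consistent"
else
  echo "dlf.catalog.endpoint does not match dlf.catalog.region" >&2
fi
```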

Example: Query data in a table

Run commands to access Trino. For more information, see Log on to the Trino console by running commands.

Run the following command to query data in the test_hive table:

select * from hive.default.test_hive;
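To run the same check without an interactive session, a sketch along the following lines may help. It assumes that the Trino CLI is available as trino on the node and that TRINO_SERVER, a hypothetical environment variable, holds the coordinator address in host:port form. The LIMIT clause is added here only to keep the output short.

```shell
#!/usr/bin/env bash
# TRINO_SERVER is a hypothetical variable, for example "<coordinator-host>:<port>".
query='SELECT * FROM hive.default.test_hive LIMIT 10'

if [ -n "${TRINO_SERVER:-}" ] && command -v trino >/dev/null 2>&1; then
  # --server and --execute are standard Trino CLI options.
  trino --server "$TRINO_SERVER" --execute "$query"
else
  echo "Set TRINO_SERVER and make sure that the trino CLI is installed." >&2
fi
```

If the query returns rows, the Hive connector and the host configuration are working for tables stored in the data cluster.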