Non-Volatile Memory Express (NVMe) helps you improve the storage performance of your Elastic Compute Service (ECS) instances. This topic describes the limits of NVMe in terms of ECS instance families, images, and cloud disks and the operations that you can perform on NVMe disks.

NVMe is a high-speed interface protocol for solid-state storage such as flash-based SSDs. NVMe allows storage devices to communicate directly with CPUs, which reduces data transfer latency. Attaching a cloud disk to an ECS instance over NVMe significantly reduces I/O access latency.

Limits

To use NVMe on an ECS instance, the instance family, image, and cloud disks of the ECS instance must meet the requirements in the following table.

Resource | Limit |

Instance family | The instance family must support NVMe. Note: To check whether an instance family supports NVMe, call the DescribeInstanceTypes operation and view the value of the NvmeSupport parameter in the response. |

Image | The image must contain the NVMe driver. |

Cloud disk | Only Enterprise SSDs (ESSDs) and ESSD AutoPL disks support NVMe. |
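The instance family check described in the table can be sketched in Python. This is a minimal sketch, not an official client: it does not call the API, and only filters a response that has already been parsed into a dictionary. The response layout (InstanceTypes, InstanceType, InstanceTypeId), the NvmeSupport values "required" and "supported", and the sample instance type names are assumptions made for illustration; confirm them against the DescribeInstanceTypes API reference.

```python
from typing import Any, Dict, List

# Assumed NvmeSupport values that indicate an instance type can use NVMe.
NVME_CAPABLE = {"required", "supported"}


def nvme_capable_types(response: Dict[str, Any]) -> List[str]:
    """Return the IDs of instance types whose NvmeSupport value indicates NVMe support."""
    items = response.get("InstanceTypes", {}).get("InstanceType", [])
    return [
        item["InstanceTypeId"]
        for item in items
        if str(item.get("NvmeSupport", "")).lower() in NVME_CAPABLE
    ]


# Hypothetical, trimmed response used only for illustration.
sample_response = {
    "InstanceTypes": {
        "InstanceType": [
            {"InstanceTypeId": "example.type-a", "NvmeSupport": "required"},
            {"InstanceTypeId": "example.type-b", "NvmeSupport": "unsupported"},
            {"InstanceTypeId": "example.type-c", "NvmeSupport": "supported"},
        ]
    }
}

print(nvme_capable_types(sample_response))
```

Entries without an NvmeSupport field are treated as not supporting NVMe, which errs on the side of excluding an instance type rather than selecting one that cannot use NVMe disks.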

Billing

NVMe is provided free of charge. You are charged for resources that support NVMe based on their corresponding billing methods. For information about the billing of ECS resources, see Billing overview.

Related operations

The following table describes the operations that you can perform to use NVMe and improve the storage performance of ECS instances.

Operation | Description |

Create an ECS instance that supports NVMe | When you purchase a custom instance, you can create ESSDs or ESSD AutoPL disks together with the instance. For more information, see Create an instance on the Custom Launch tab. |

Separately create an ESSD, an ESSD AutoPL disk, or a Regional ESSD and attach the cloud disk to an ECS instance | |

Initialize an NVMe disk | Before you can use an NVMe disk, you must initialize it, regardless of whether the disk was created together with an instance or separately. For more information, see Initialize a data disk. Note: The device names and partition names of NVMe disks are different from those of other cloud disks. When you initialize an NVMe disk, make sure that you use the NVMe device name and partition name. For more information, see the Device names of NVMe disks section of this topic. |

Other operations on NVMe disks are similar to the operations performed on cloud disks. For more information, see Disks.

Device names of NVMe disks

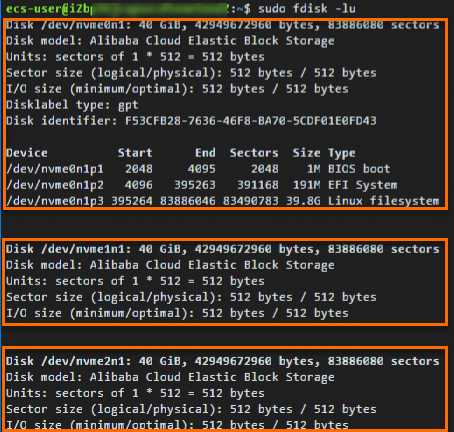

If an ESSD or ESSD AutoPL disk is attached to a Linux instance over NVMe, the device name and partition name of the cloud disk follow a different format from the device names and partition names of other cloud disks. To view the device names and partition names of NVMe disks, run the sudo fdisk -lu command.
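As an alternative to reading the fdisk output by eye, NVMe disks can also be listed from a script. The following minimal sketch assumes a Linux instance on which block devices appear as entries under /sys/block; the function name list_nvme_disks is hypothetical, and the sketch is not a substitute for the sudo fdisk -lu command, which also reports sizes and partitions.

```python
import os
import re
from typing import List

# Matches NVMe disk entries such as nvme0n1 (controller 0, namespace 1).
_NVME_DISK = re.compile(r"^nvme(\d+)n(\d+)$")


def list_nvme_disks(sys_block: str = "/sys/block") -> List[str]:
    """Return /dev paths of NVMe disks found under sys_block, in numeric order."""
    if not os.path.isdir(sys_block):
        return []
    matches = [m for m in map(_NVME_DISK.match, os.listdir(sys_block)) if m]
    # Sort numerically so that nvme10n1 comes after nvme2n1.
    matches.sort(key=lambda m: (int(m.group(1)), int(m.group(2))))
    return ["/dev/" + m.group(0) for m in matches]


if __name__ == "__main__":
    for path in list_nvme_disks():
        print(path)
```

The sys_block parameter exists only so that the function can be pointed at a different directory, for example in a test; on an instance without NVMe disks the function returns an empty list.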

For example, the figure in this section shows three cloud disks attached to an ECS instance. All three cloud disks are NVMe disks.

The device names of the cloud disks are displayed in the /dev/nvmeXn1 format.

System disk: /dev/nvme0n1.

Data disks: /dev/nvme1n1, /dev/nvme2n1, and so on.

The partition names of the cloud disks are displayed in the <Device name>p<Partition number> format. For example, the system disk named /dev/nvme0n1 has the following partitions: /dev/nvme0n1p1, /dev/nvme0n1p2, and /dev/nvme0n1p3.
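The naming formats above can be expressed as a small parser. This is a minimal sketch: the names NvmeName, parse_nvme_name, and partition_name are hypothetical helpers introduced for illustration, and the pattern covers only the /dev/nvmeXn1 and <Device name>p<Partition number> formats described in this section.

```python
import re
from typing import NamedTuple, Optional


class NvmeName(NamedTuple):
    device: str               # whole-disk name, for example /dev/nvme0n1
    controller: int           # the X in /dev/nvmeXn1
    partition: Optional[int]  # partition number, or None for a whole disk


_NAME = re.compile(r"^(/dev/nvme(\d+)n\d+)(?:p(\d+))?$")


def parse_nvme_name(name: str) -> NvmeName:
    """Split an NVMe device or partition name into its components."""
    m = _NAME.match(name)
    if m is None:
        raise ValueError(f"not an NVMe device or partition name: {name!r}")
    partition = int(m.group(3)) if m.group(3) is not None else None
    return NvmeName(m.group(1), int(m.group(2)), partition)


def partition_name(device: str, number: int) -> str:
    """Build a partition name in the <Device name>p<Partition number> format."""
    return f"{device}p{number}"


print(parse_nvme_name("/dev/nvme0n1p2"))
print(partition_name("/dev/nvme1n1", 1))
```

A name that does not match, such as /dev/vdb1, raises ValueError, which makes it harder for a script to initialize or format the wrong disk by accident.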

Reference

To query the serial number of an NVMe disk, refer to Query the serial numbers of block storage devices.