This guide describes how to build a heterogeneous confidential computing environment on an Alibaba Cloud heterogeneous confidential computing instance (gn8v-tee). It also shows how to run sample code to verify the GPU confidential computing feature.

Background

Alibaba Cloud heterogeneous confidential computing instances (gn8v-tee) extend CPU TDX confidential computing instances by incorporating a GPU into the Trusted Execution Environment (TEE). This protects data transfers between the CPU and GPU, and data computation within the GPU. For information about building a CPU TDX confidential computing environment and verifying its remote attestation capabilities, see Build a TDX confidential computing environment. To deploy a large language model (LLM) inference environment on a heterogeneous confidential computing instance, see Build a measurement-enabled LLM inference environment on a heterogeneous confidential computing instance.

The figure above shows that the GPU on a heterogeneous confidential computing instance starts in confidential computing mode. The following mechanisms ensure the instance's confidentiality:

The TDX feature ensures that the Hypervisor/Host OS cannot access the instance's sensitive registers or memory data.

A PCIe firewall prevents the CPU from accessing the GPU's critical registers and protected video memory. The Hypervisor/Host OS can only perform limited operations on the GPU, such as resetting it, but cannot access sensitive data, ensuring data confidentiality on the GPU.

The GPU's NVLink Firewall blocks other GPUs from directly accessing its video memory.

During initialization, the GPU driver and library functions within the CPU TEE establish an encrypted channel with the GPU using the Security Protocol and Data Model (SPDM) protocol. After key negotiation is complete, only ciphertext is transmitted over PCIe between the CPU and GPU, ensuring the confidentiality of the data transfer link.

The GPU's remote attestation capability confirms that the GPU is in a secure state.

Specifically, applications in a confidential computing instance can use the Attestation software development kit (SDK) to call the GPU driver and obtain a cryptographic report of the GPU's security status from the hardware. This report contains cryptographically signed information about the GPU hardware, VBIOS, and hardware status measurements. A relying party can compare these measurements with the reference measurements provided by the GPU vendor to verify that the GPU is in a secure confidential computing state.
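The comparison step that a relying party performs can be illustrated with a short shell sketch. This is not the NVIDIA Attestation SDK's logic. It assumes, hypothetically, that the measurements from a report and the vendor's reference measurements have already been extracted into plain `name=value` text files. It also skips the signature verification that a real verifier must perform first. The function name `compare_measurements` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: compare measured values against reference values.
# Both inputs are assumed to be "name=value" text files, one entry per line.
# This is NOT the real report format, and it skips signature verification.

# compare_measurements REFERENCE_FILE MEASURED_FILE
# Prints each mismatch. Returns 0 only if every reference entry matches.
compare_measurements() {
  local ref_file=$1 meas_file=$2
  local -A measured=()
  local name value status=0

  # Load the measured values into an associative array.
  # The "|| [[ -n $name ]]" clause also keeps a last line that has no newline.
  while IFS='=' read -r name value || [[ -n $name ]]; do
    [[ -z $name ]] && continue
    measured[$name]=$value
  done < "$meas_file"

  # Every reference entry must be present in the measured set and equal to it.
  while IFS='=' read -r name value || [[ -n $name ]]; do
    [[ -z $name ]] && continue
    if [[ ${measured[$name]-} != "$value" ]]; then
      echo "MISMATCH: $name (expected '$value', got '${measured[$name]-<missing>}')"
      status=1
    fi
  done < "$ref_file"

  return "$status"
}
```

For example, `compare_measurements reference.txt measured.txt` returns a nonzero status and prints one `MISMATCH` line for each entry that differs or is missing.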

Usage note

Heterogeneous confidential computing requires Alibaba Cloud Linux 3 images. If you create an instance using a custom image based on Alibaba Cloud Linux 3, ensure that its kernel version is 5.10.134-18 or later.
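You can check the kernel requirement before you install anything. The following sketch parses the `major.minor.patch-build` prefix of a release string such as `5.10.134-18.al8.x86_64` and compares it component by component with 5.10.134-18. The function name `kernel_meets_minimum` is hypothetical. The comparison must be numeric because a plain string comparison would rank 5.10.60 above 5.10.134.

```bash
#!/usr/bin/env bash
# Check that a kernel release string (as printed by `uname -r`) is at least
# 5.10.134-18, the minimum stated for heterogeneous confidential computing.

# kernel_meets_minimum RELEASE
# Returns 0 if RELEASE >= 5.10.134-18, 1 if older, 2 if unparsable.
kernel_meets_minimum() {
  local release=$1
  local -a min=(5 10 134 18)
  # Capture major.minor.patch-build, e.g. 5.10.134-18 from 5.10.134-18.al8.x86_64.
  if [[ ! $release =~ ^([0-9]+)\.([0-9]+)\.([0-9]+)-([0-9]+) ]]; then
    return 2
  fi
  local -a cur=("${BASH_REMATCH[@]:1:4}")
  local i
  for i in 0 1 2 3; do
    # Force base 10 so that a component such as "08" is not read as octal.
    if (( 10#${cur[i]} > min[i] )); then return 0; fi
    if (( 10#${cur[i]} < min[i] )); then return 1; fi
  done
  return 0  # equal to the minimum
}
```

On the instance, run `kernel_meets_minimum "$(uname -r)" && echo "kernel OK"`.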

Create a heterogeneous confidential computing instance (gn8v-tee)

Console

The process of creating a heterogeneous confidential computing instance in the console is similar to creating a standard instance but requires specific configuration options. This section highlights the key configurations for heterogeneous confidential computing instances. For details on other general configurations, see Create an instance using the wizard.

- Go to ECS console - Instances.

- In the top navigation bar, select the region and resource group of the resource that you want to manage.

- Click Create Instance and configure the instance with the following settings.

| Configuration item | Description |
| --- | --- |
| Region and Zone | China (Beijing) Zone L |
| Instance Type | Only ecs.gn8v-tee.4xlarge and larger instance types are supported. |
| Image | Select the Alibaba Cloud Linux 3.2104 LTS 64-bit image. |
| Public IP Address | Select Assign Public IPv4 Address so that the instance can download the driver from the official NVIDIA website later. |

Important: When you create or restart a confidential instance with 8 GPUs, do not attach secondary Elastic Network Interfaces (ENIs) or additional data disks. Doing so can cause the instance to fail to start.

Follow the on-screen instructions to complete the instance creation.

API/CLI

You can call the RunInstances operation or use the Alibaba Cloud CLI to create a TDX-enabled ECS instance. The key parameters are described in the table below.

| Parameter | Description | Example |
| --- | --- | --- |
| RegionId | China (Beijing) | cn-beijing |
| ZoneId | Zone L | cn-beijing-l |
| InstanceType | Select ecs.gn8v-tee.4xlarge or a larger instance type. | ecs.gn8v-tee.4xlarge |
| ImageId | Specify the ID of an image that supports confidential computing. Only 64-bit Alibaba Cloud Linux 3.2104 LTS images with kernel version 5.10.134-18.al8.x86_64 or later are supported. | aliyun_3_x64_20G_alibase_20250117.vhd |

CLI example:

In the command, `<SECURITY_GROUP_ID>` is the security group ID, `<VSWITCH_ID>` is the vSwitch ID, and `<KEY_PAIR_NAME>` is the name of the SSH key pair.

```bash
aliyun ecs RunInstances \
  --RegionId cn-beijing \
  --ZoneId cn-beijing-l \
  --SystemDisk.Category cloud_essd \
  --ImageId 'aliyun_3_x64_20G_alibase_20250117.vhd' \
  --InstanceType 'ecs.gn8v-tee.4xlarge' \
  --SecurityGroupId '<SECURITY_GROUP_ID>' \
  --VSwitchId '<VSWITCH_ID>' \
  --KeyPairName '<KEY_PAIR_NAME>'
```

Build the heterogeneous confidential computing environment

Step 1: Install the NVIDIA driver and CUDA Toolkit

Heterogeneous confidential computing instances take a long time to initialize. Wait until the instance status is Running and the instance's operating system has fully started.

The installation steps vary based on the instance type:

Single-GPU confidential instances: ecs.gn8v-tee.4xlarge and ecs.gn8v-tee.6xlarge

8-GPU confidential instances: ecs.gn8v-tee-8x.16xlarge and ecs.gn8v-tee-8x.48xlarge
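Because the procedure and the minimum driver version depend on the instance type, it can be convenient to look them up from the instance type name in a script. The following sketch recognizes only the four instance types listed above and the two minimum driver versions stated later in this topic. It reports any other type as unknown. The function name `gpu_profile` is hypothetical.

```bash
#!/usr/bin/env bash
# Map a gn8v-tee instance type to its GPU count and the minimum NVIDIA
# driver version stated in this topic. Only the four listed types are known.

# gpu_profile INSTANCE_TYPE
# Prints "<gpu-count> <min-driver-version>". Returns 1 for unknown types.
gpu_profile() {
  case $1 in
    ecs.gn8v-tee.4xlarge|ecs.gn8v-tee.6xlarge)
      echo "1 550.144.03" ;;
    ecs.gn8v-tee-8x.16xlarge|ecs.gn8v-tee-8x.48xlarge)
      echo "8 570.148.08" ;;
    *)
      echo "unknown instance type: $1" >&2
      return 1 ;;
  esac
}
```

For example, `read -r gpus min_driver <<< "$(gpu_profile ecs.gn8v-tee-8x.16xlarge)"` sets `gpus` to 8 and `min_driver` to 570.148.08.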

Single-GPU confidential instances

Connect to the confidential computing instance.

For more information, see Log on to a Linux instance using Workbench.

Adjust the kernel parameters to set the SWIOTLB buffer to 8 GB.

```bash
sudo grubby --update-kernel=ALL --args="swiotlb=4194304,any"
```

Restart the instance to apply the changes.

For more information, see Restart an instance.
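The swiotlb value is a number of slabs, not bytes. The Linux kernel uses 2 KiB SWIOTLB slabs (IO_TLB_SHIFT is 11), so 4,194,304 slabs × 2,048 bytes = 8,589,934,592 bytes, or 8 GiB. The sketch below reproduces that arithmetic. After the restart, it can also confirm that the running kernel's command line contains the argument. The function names `swiotlb_slabs` and `cmdline_has_swiotlb` are hypothetical.

```bash
#!/usr/bin/env bash
# SWIOTLB sizing helpers. Assumes the kernel's 2 KiB slab size
# (IO_TLB_SHIFT = 11), so 1 GiB corresponds to 524288 slabs.

readonly SWIOTLB_SLAB_BYTES=2048

# swiotlb_slabs GIB: print the slab count for a buffer of GIB gibibytes.
swiotlb_slabs() {
  echo $(( $1 * 1024 * 1024 * 1024 / SWIOTLB_SLAB_BYTES ))
}

# cmdline_has_swiotlb CMDLINE SLABS
# Returns 0 if CMDLINE contains swiotlb=SLABS, optionally followed by
# ",<option>" such as ",any".
cmdline_has_swiotlb() {
  local -a args
  local arg
  read -r -a args <<< "$1"   # split on whitespace without globbing
  for arg in "${args[@]}"; do
    case $arg in
      "swiotlb=$2"|"swiotlb=$2,"*) return 0 ;;
    esac
  done
  return 1
}
```

After the restart, run `cmdline_has_swiotlb "$(cat /proc/cmdline)" "$(swiotlb_slabs 8)" && echo "SWIOTLB is set to 8 GiB"`.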

Download the NVIDIA driver and CUDA Toolkit.

Single-GPU confidential instances require driver version 550.144.03 or later. This topic uses version 550.144.03 as an example.

```bash
wget --referer=https://www.nvidia.cn/ https://cn.download.nvidia.cn/tesla/550.144.03/NVIDIA-Linux-x86_64-550.144.03.run
wget https://developer.download.nvidia.com/compute/cuda/12.4.1/local_installers/cuda_12.4.1_550.54.15_linux.run
```

Install dependencies and disable the CloudMonitor service.

```bash
sudo yum install -y openssl3
sudo systemctl disable cloudmonitor
sudo systemctl stop cloudmonitor
```

Create and configure nvidia-persistenced.service.

```bash
cat > nvidia-persistenced.service << EOF
[Unit]
Description=NVIDIA Persistence Daemon
Wants=syslog.target
Before=cloudmonitor.service

[Service]
Type=forking
ExecStart=/usr/bin/nvidia-persistenced --user root
ExecStartPost=/usr/bin/nvidia-smi conf-compute -srs 1
ExecStopPost=/bin/rm -rf /var/run/nvidia-persistenced

[Install]
WantedBy=multi-user.target
EOF
sudo cp nvidia-persistenced.service /usr/lib/systemd/system/nvidia-persistenced.service
```

Install the NVIDIA driver and CUDA Toolkit.

```bash
sudo bash NVIDIA-Linux-x86_64-550.144.03.run --ui=none --no-questions --accept-license \
  --disable-nouveau --no-cc-version-check --install-libglvnd \
  --kernel-module-build-directory=kernel-open --rebuild-initramfs
sudo bash cuda_12.4.1_550.54.15_linux.run --silent --toolkit
```

Start and enable the nvidia-persistenced and CloudMonitor services.

```bash
sudo systemctl start nvidia-persistenced.service
sudo systemctl enable nvidia-persistenced.service
sudo systemctl start cloudmonitor
sudo systemctl enable cloudmonitor
```
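After the services are running, you can confirm that the installed driver meets the minimum version. The command `nvidia-smi --query-gpu=driver_version --format=csv,noheader` prints one driver version per GPU. The sketch below compares dotted versions numerically, so 550.54.15 correctly ranks below 550.144.03. The helper names `version_ge` and `driver_meets_minimum` are hypothetical.

```bash
#!/usr/bin/env bash
# version_ge A B: succeed if dotted numeric version A >= B (e.g. 550.144.03).
version_ge() {
  local -a a b
  IFS=. read -r -a a <<< "$1"
  IFS=. read -r -a b <<< "$2"
  local i n=$(( ${#a[@]} > ${#b[@]} ? ${#a[@]} : ${#b[@]} ))
  for (( i = 0; i < n; i++ )); do
    # Missing components count as 0; 10# avoids octal parsing of "03".
    local x=$(( 10#${a[i]:-0} )) y=$(( 10#${b[i]:-0} ))
    (( x > y )) && return 0
    (( x < y )) && return 1
  done
  return 0
}

# driver_meets_minimum MIN_VERSION
# Checks the driver version that nvidia-smi reports for every GPU.
driver_meets_minimum() {
  local min=$1 versions v
  versions=$(nvidia-smi --query-gpu=driver_version --format=csv,noheader) || return 1
  [[ -n $versions ]] || return 1   # no GPUs reported: treat as failure
  while read -r v; do
    version_ge "$v" "$min" || { echo "driver $v is older than $min" >&2; return 1; }
  done <<< "$versions"
  return 0
}
```

Run `driver_meets_minimum 550.144.03` on single-GPU instances, or `driver_meets_minimum 570.148.08` on 8-GPU instances.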

8-GPU confidential instances

Connect to the confidential computing instance.

For more information, see Log on to a Linux instance using Workbench.

Important: Confidential computing instances initialize slowly. Wait for initialization to complete before you proceed.

Adjust the kernel parameters to set the SWIOTLB buffer to 8 GB.

```bash
sudo grubby --update-kernel=ALL --args="swiotlb=4194304,any"
```

Configure the loading behavior of the NVIDIA driver and regenerate the initramfs.

```bash
sudo bash -c 'cat > /etc/modprobe.d/nvidia-lkca.conf << EOF
install nvidia /sbin/modprobe ecdsa_generic; /sbin/modprobe ecdh; /sbin/modprobe --ignore-install nvidia
options nvidia NVreg_RegistryDwords="RmEnableProtectedPcie=0x1"
EOF'
sudo dracut --regenerate-all -f
```

Restart the instance to apply the changes.

For more information, see Restart an instance.
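In the modprobe configuration written above, the `install` directive tells modprobe to run a custom command whenever the nvidia module is requested. That command first loads the ecdsa_generic and ecdh kernel crypto modules, then loads nvidia itself with `--ignore-install` so that the directive is not applied again. To double-check the file, you can use the following sketch, which verifies that both lines are present. The function name `check_lkca_conf` is hypothetical. It checks the file's text only and does not show that the driver accepted the option.

```bash
#!/usr/bin/env bash
# check_lkca_conf [FILE]
# Succeeds if FILE (default /etc/modprobe.d/nvidia-lkca.conf) contains both
# directives from this step. This is a text check only.
check_lkca_conf() {
  local conf=${1:-/etc/modprobe.d/nvidia-lkca.conf}
  [[ -r $conf ]] || { echo "missing: $conf" >&2; return 1; }
  # The install hook must load both crypto modules before nvidia itself.
  grep -Eq '^install nvidia .*modprobe ecdsa_generic.*modprobe ecdh.*modprobe --ignore-install nvidia' "$conf" \
    || { echo "install directive missing or incomplete" >&2; return 1; }
  # Registry option from this step, matched literally.
  grep -Fq 'options nvidia NVreg_RegistryDwords="RmEnableProtectedPcie=0x1"' "$conf" \
    || { echo "RmEnableProtectedPcie option missing" >&2; return 1; }
}
```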

Download the NVIDIA driver and CUDA Toolkit.

8-GPU confidential computing instances require driver version 570.148.08 or later and the matching version of Fabric Manager. This topic uses version 570.148.08 as an example.

```bash
wget --referer=https://www.nvidia.cn/ https://cn.download.nvidia.cn/tesla/570.148.08/NVIDIA-Linux-x86_64-570.148.08.run
wget https://developer.download.nvidia.com/compute/cuda/12.8.1/local_installers/cuda_12.8.1_570.124.06_linux.run
wget https://developer.download.nvidia.cn/compute/cuda/repos/rhel8/x86_64/nvidia-fabric-manager-570.148.08-1.x86_64.rpm
```

Install dependencies and disable the CloudMonitor service.

```bash
sudo yum install -y openssl3
sudo systemctl disable cloudmonitor
sudo systemctl stop cloudmonitor
```

Create and configure nvidia-persistenced.service.

```bash
cat > nvidia-persistenced.service << EOF
[Unit]
Description=NVIDIA Persistence Daemon
Wants=syslog.target
Before=cloudmonitor.service
After=nvidia-fabricmanager.service

[Service]
Type=forking
ExecStart=/usr/bin/nvidia-persistenced --user root --uvm-persistence-mode --verbose
ExecStartPost=/usr/bin/nvidia-smi conf-compute -srs 1
ExecStopPost=/bin/rm -rf /var/run/nvidia-persistenced
TimeoutStartSec=900
TimeoutStopSec=60

[Install]
WantedBy=multi-user.target
EOF
sudo cp nvidia-persistenced.service /usr/lib/systemd/system/nvidia-persistenced.service
```

Install Fabric Manager, the NVIDIA driver, and the CUDA Toolkit.

```bash
sudo rpm -ivh nvidia-fabric-manager-570.148.08-1.x86_64.rpm
sudo bash NVIDIA-Linux-x86_64-570.148.08.run --ui=none --no-questions --accept-license \
  --disable-nouveau --no-cc-version-check --install-libglvnd \
  --kernel-module-build-directory=kernel-open --rebuild-initramfs
sudo bash cuda_12.8.1_570.124.06_linux.run --silent --toolkit
```

Start and enable the nvidia-fabricmanager, nvidia-persistenced, and cloudmonitor services.

```bash
sudo systemctl start nvidia-fabricmanager.service
sudo systemctl enable nvidia-fabricmanager.service
sudo systemctl start nvidia-persistenced.service
sudo systemctl enable nvidia-persistenced.service
sudo systemctl start cloudmonitor
sudo systemctl enable cloudmonitor
```
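The 8-GPU unit file orders nvidia-persistenced after nvidia-fabricmanager. To confirm that systemd loaded that ordering, you can read the unit's `After=` property with `systemctl show -p After --value` (the `--value` option requires systemd 230 or later). The helper names below are hypothetical. The list parser takes the property text as an argument, so you can test it without a GPU.

```bash
#!/usr/bin/env bash
# unit_list_contains LIST UNIT
# LIST is a space-separated unit list, such as the value printed by
# `systemctl show -p After --value <unit>`.
unit_list_contains() {
  local -a units
  local u
  read -r -a units <<< "$1"
  for u in "${units[@]}"; do
    [[ $u == "$2" ]] && return 0
  done
  return 1
}

# check_persistenced_order: succeed if systemd orders nvidia-persistenced
# after nvidia-fabricmanager.
check_persistenced_order() {
  local after
  after=$(systemctl show -p After --value nvidia-persistenced.service) || return 1
  unit_list_contains "$after" nvidia-fabricmanager.service
}
```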

Step 2: Check the TDX status

This feature is built on TDX. First, check the instance's TDX status to verify that it is protected.

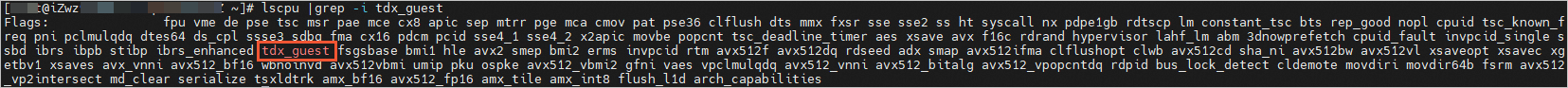

Check whether TDX is enabled.

```bash
lscpu | grep -i tdx_guest
```

The following command output indicates that TDX is enabled.

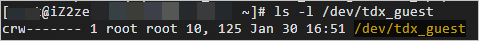

Check whether the TDX-related drivers are installed.

```bash
ls -l /dev/tdx_guest
```

The following figure shows that the TDX-related drivers are installed.
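You can combine both checks into one helper. lscpu reads its flag list from /proc/cpuinfo, so the sketch below searches that file for the tdx_guest flag and then checks that /dev/tdx_guest exists. The function name `tdx_guest_ready` is hypothetical. The optional path arguments exist only so that you can test the function against sample files.

```bash
#!/usr/bin/env bash
# tdx_guest_ready [CPUINFO] [DEVICE]
# Succeeds if the CPU flags include tdx_guest and the TDX guest device node
# exists. The defaults are the real paths; the arguments allow testing.
tdx_guest_ready() {
  local cpuinfo=${1:-/proc/cpuinfo} dev=${2:-/dev/tdx_guest}
  # -w matches tdx_guest as a whole word, not as part of a longer flag.
  if ! grep -qw tdx_guest "$cpuinfo"; then
    echo "tdx_guest CPU flag not found" >&2
    return 1
  fi
  if [[ ! -e $dev ]]; then
    echo "$dev does not exist; the TDX guest driver may not be loaded" >&2
    return 1
  fi
}
```

On the instance, run `tdx_guest_ready && echo "TDX guest OK"`.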

Step 3: Check the GPU confidential computing feature status

Single-GPU confidential instances

View the confidential computing feature status.

```bash
nvidia-smi conf-compute -f
```

CC status: ON indicates that the confidential computing feature is enabled. CC status: OFF indicates that the feature is disabled because of an instance error. If this occurs, submit a ticket.

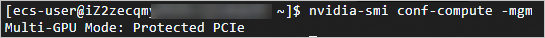

8-GPU confidential instances

View the multi-GPU confidential computing mode.

```bash
nvidia-smi conf-compute -mgm
```

Multi-GPU Mode: Protected PCIe indicates that the multi-GPU confidential computing feature is enabled. Multi-GPU Mode: None indicates that the feature is disabled because of an instance error. If this occurs, submit a ticket.

For 8-GPU confidential instances, the nvidia-smi conf-compute -f command normally returns CC status: OFF.
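Because the expected status differs by instance type, a script that checks it must know the GPU count. The sketch below matches the two strings described above against command output that you pass in. The function name `cc_mode_ok` is hypothetical.

```bash
#!/usr/bin/env bash
# cc_mode_ok GPU_COUNT OUTPUT
# GPU_COUNT 1: OUTPUT comes from `nvidia-smi conf-compute -f`;
#              "CC status: ON" is expected.
# GPU_COUNT 8: OUTPUT comes from `nvidia-smi conf-compute -mgm`;
#              "Multi-GPU Mode: Protected PCIe" is expected.
cc_mode_ok() {
  case $1 in
    1) grep -q 'CC status: ON' <<< "$2" ;;
    8) grep -q 'Multi-GPU Mode: Protected PCIe' <<< "$2" ;;
    *) echo "unsupported GPU count: $1" >&2; return 1 ;;
  esac
}
```

For example, run `cc_mode_ok 1 "$(nvidia-smi conf-compute -f)"` on a single-GPU instance or `cc_mode_ok 8 "$(nvidia-smi conf-compute -mgm)"` on an 8-GPU instance. If the check fails, submit a ticket as described above.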

Step 4: Verify GPU and NVSwitch trust by using local attestation

Single-GPU confidential instances

Install the dependencies required for GPU attestation.

```bash
sudo yum install -y python3.11 python3.11-devel python3.11-pip
sudo alternatives --install /usr/bin/python3 python3 /usr/bin/python3.11 60
sudo alternatives --set python3 /usr/bin/python3.11
sudo python3 -m ensurepip --upgrade
sudo python3 -m pip install --upgrade pip
sudo python3 -m pip install nv_attestation_sdk==2.5.0.post6914366 \
  nv_local_gpu_verifier==2.5.0.post6914366 nv_ppcie_verifier==1.5.0.post6914366 \
  -f https://attest-public-cn-beijing.oss-cn-beijing.aliyuncs.com/repo/pip/attest.html
```

Verify the GPU's trust status.

```bash
python3 -m verifier.cc_admin --user_mode
```

The output indicates that the GPU is in confidential computing mode and that measurements, such as those of the driver and VBIOS, match their expected values.

8-GPU confidential instances

Install the dependencies required for GPU attestation.

```bash
sudo yum install -y python3.11 python3.11-devel python3.11-pip
sudo alternatives --install /usr/bin/python3 python3 /usr/bin/python3.11 60
sudo alternatives --set python3 /usr/bin/python3.11
sudo python3 -m ensurepip --upgrade
sudo python3 -m pip install --upgrade pip
sudo python3 -m pip install nv_attestation_sdk==2.5.0.post6914366 \
  nv_local_gpu_verifier==2.5.0.post6914366 nv_ppcie_verifier==1.5.0.post6914366 \
  -f https://attest-public-cn-beijing.oss-cn-beijing.aliyuncs.com/repo/pip/attest.html
```

Install NVSwitch-related dependencies.

```bash
wget https://developer.download.nvidia.cn/compute/cuda/repos/rhel8/x86_64/libnvidia-nscq-570-570.148.08-1.x86_64.rpm
sudo rpm -ivh libnvidia-nscq-570-570.148.08-1.x86_64.rpm
```

Run the following command to verify the trust status of the GPUs and NVSwitches.

```bash
python3 -m ppcie.verifier.verification --gpu-attestation-mode=LOCAL --switch-attestation-mode=LOCAL
```

The sample code verifies eight GPUs and four NVSwitches. An output of SUCCESS indicates that the verification succeeded.
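If you run the 8-GPU verification from a script, you may want to keep its output and act on the result. The sketch below saves the output to a log file. It treats the run as passed only if the verifier exits with status 0 and its output contains SUCCESS, the success indicator described above. The function names are hypothetical. Whether the verifier reports failures through its exit status is an assumption; the SUCCESS check is the criterion that this topic states.

```bash
#!/usr/bin/env bash
# output_reports_success: read verifier output on stdin and look for SUCCESS.
output_reports_success() {
  grep -q 'SUCCESS'
}

# run_ppcie_check [LOGFILE]
# Runs the local GPU/NVSwitch verification, keeps a copy of its output,
# and succeeds only if the verifier exits 0 and reports SUCCESS.
run_ppcie_check() {
  local log=${1:-ppcie-$(date +%Y%m%d-%H%M%S).log}
  python3 -m ppcie.verifier.verification \
    --gpu-attestation-mode=LOCAL --switch-attestation-mode=LOCAL 2>&1 | tee "$log"
  local rc=${PIPESTATUS[0]}   # exit status of python3, not of tee
  (( rc == 0 )) && output_reports_success < "$log"
}
```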

Limitations

Because this feature is built on TDX, it inherits the limitations of TDX confidential computing instances. For more information, see Known limitations of TDX instances.

After the GPU confidential computing feature is enabled, data transfer between the CPU and GPU requires encryption and decryption. This encryption and decryption process results in lower performance for GPU-related tasks than on non-confidential heterogeneous instances.

Usage notes

Single-GPU instances use CUDA 12.4. The NVIDIA cuBLAS library has a known issue that may cause errors when you run CUDA or LLM tasks. To resolve this, install a specific version of cuBLAS.

```bash
pip3 install nvidia-cublas-cu12==12.4.5.8
```

After the GPU confidential computing feature is enabled, initialization is slow, especially on 8-GPU instances. After the guest OS starts, make sure that the nvidia-persistenced service has finished starting before you use the GPU, including running nvidia-smi or other GPU commands. To check the status of the nvidia-persistenced service, run the following command:

```bash
systemctl status nvidia-persistenced | grep "Active: "
```

activating (start) indicates that the service is still starting:

```text
Active: activating (start) since Wed 2025-02-19 10:07:54 CST; 2min 20s ago
```

active (running) indicates that the service is running:

```text
Active: active (running) since Wed 2025-02-19 10:10:28 CST; 22s ago
```
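If a boot script must wait for the service instead of checking it once, it can poll the same Active: line. The sketch below classifies the line and polls every 10 seconds. By default, it gives up after 900 seconds, which matches TimeoutStartSec in the 8-GPU unit file. It treats any state other than activating (start) or active (running) as an error. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# persistenced_state LINE
# Classifies the "Active:" line printed by
#   systemctl status nvidia-persistenced | grep "Active: "
# Prints "starting", "running", or "other".
persistenced_state() {
  case $1 in
    *"Active: activating (start)"*) echo starting ;;
    *"Active: active (running)"*)   echo running ;;
    *)                              echo other ;;
  esac
}

# wait_for_persistenced [TIMEOUT_SECONDS]
# Polls every 10 s until nvidia-persistenced is active (running), giving up
# after TIMEOUT_SECONDS (default 900, matching TimeoutStartSec).
wait_for_persistenced() {
  local timeout=${1:-900} waited=0 state
  while (( waited < timeout )); do
    state=$(persistenced_state "$(systemctl status nvidia-persistenced | grep 'Active: ')")
    case $state in
      running) return 0 ;;
      starting) ;;  # still initializing; keep waiting
      *) echo "nvidia-persistenced is neither starting nor running" >&2; return 1 ;;
    esac
    sleep 10
    (( waited += 10 ))
  done
  echo "timed out after ${timeout}s" >&2
  return 1
}
```

A script that uses the GPU can run `wait_for_persistenced && nvidia-smi`.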

Any auto-start service that uses the GPU, such as cloudmonitor.service, nvidia-cdi-refresh.service (from the nvidia-container-toolkit-base package), or ollama.service, must be configured to start after nvidia-persistenced.service. The following is an example configuration of /usr/lib/systemd/system/nvidia-persistenced.service:

```ini
[Unit]
Description=NVIDIA Persistence Daemon
Wants=syslog.target
Before=cloudmonitor.service nvidia-cdi-refresh.service ollama.service
After=nvidia-fabricmanager.service

[Service]
Type=forking
ExecStart=/usr/bin/nvidia-persistenced --user root --uvm-persistence-mode --verbose
ExecStartPost=/usr/bin/nvidia-smi conf-compute -srs 1
ExecStopPost=/bin/rm -rf /var/run/nvidia-persistenced
TimeoutStartSec=900
TimeoutStopSec=60

[Install]
WantedBy=multi-user.target
```
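Instead of listing each dependent service in the Before= line of nvidia-persistenced.service, you can declare the ordering on each GPU-consuming service with a systemd drop-in file. This is an alternative technique, not the configuration that this topic prescribes. The drop-in file name 50-after-nvidia-persistenced.conf and the function `add_persistenced_ordering` are hypothetical. After= only orders the two units. Wants= additionally starts nvidia-persistenced when the dependent service starts.

```bash
#!/usr/bin/env bash
# add_persistenced_ordering UNIT [SYSTEMD_DIR]
# Writes a drop-in so that UNIT starts only after nvidia-persistenced.service.
# SYSTEMD_DIR defaults to /etc/systemd/system (root is required for that path).
add_persistenced_ordering() {
  local unit=$1 dir=${2:-/etc/systemd/system}
  local dropin_dir="$dir/$unit.d"
  mkdir -p "$dropin_dir" || return 1
  cat > "$dropin_dir/50-after-nvidia-persistenced.conf" << 'EOF'
[Unit]
Wants=nvidia-persistenced.service
After=nvidia-persistenced.service
EOF
}
```

In a root shell, run `add_persistenced_ordering ollama.service`, and then run `systemctl daemon-reload` so that systemd rereads its unit files.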