DataWorks provides the data push feature that allows you to query business data from a database and push the data to specific destinations by using various push channels. You can also push the monitoring data of a database. This topic describes how to push the monitoring data of Hologres at 9:00 every day. This helps learn about the status of Hologres.

Preparations

Create a DataWorks workspace. In this topic, a DataWorks workspace in standard mode is created. For more information, see Create a workspace.

Add a Hologres data source. For more information, see Add a Hologres data source.

Create a DataWorks resource group and associate the resource group with the created workspace. For more information, see Use serverless resource groups.

Limits

Limits on the data size:

If you want to push data to DingTalk, the data size cannot exceed 20 KB.

If you want to push data to Lark, the data size cannot exceed 20 KB, and the size of an image must be less than 10 MB.

If you want to push data to WeCom, each chatbot can send a maximum of 20 messages every minute.

If you want to push data to Microsoft Teams, the data size cannot exceed 28 KB.

If you want to push data to Email, only one email body can be added to each data push task. If the email body is added, the email body cannot be added again. For more information about restrictions, see the Simple Mail Transfer Protocol (SMTP) limits of the used email service.

The data push feature is available only in DataWorks workspaces in the following regions: China (Hangzhou), China (Shanghai), China (Beijing), China (Shenzhen), China (Chengdu), Singapore, US (Silicon Valley), and US (Virginia).

Step 1: Create a destination

A data push task can push queried data to specific destinations by using various push channels. Before you create a data push task, you must first create a destination.

Go to the DataService Studio page.

Log on to the DataWorks console. In the top navigation bar, select the desired region. In the left-side navigation pane, choose . On the page that appears, select the desired workspace from the drop-down list and click Go to DataService Studio.

In the lower-left corner of the DataService Studio page, click the  icon. On the page that appears, click the Destination Management tab. Then, click Create Destination.

icon. On the page that appears, click the Destination Management tab. Then, click Create Destination.

In the Crete Destination dialog box, configure the following parameters:

Type: Select a push channel. Valid values: DingTalk, Lark, WeCom, and Teams.

Destination Name: Enter a name for the destination.

Webhook: Enter the webhook URL of the push channel that you selected.

Step 2: Create a data push task

Go to the DataService Studio page.

Log on to the DataWorks console. In the top navigation bar, select the desired region. In the left-side navigation pane, choose . On the page that appears, select the desired workspace from the drop-down list and click Go to DataService Studio.

Create a data push task.

In the Service Development pane of the DataService Studio page, click Data Push. Move the pointer over the  icon and select Create Data Push Task. In the Data Push dialog box, configure the Name parameter and click OK.

icon and select Create Data Push Task. In the Data Push dialog box, configure the Name parameter and click OK.

Step 3: Configure the data push task

The following table provides best practices in different scenarios. You can select a best practice based on your business requirements.

Practice 1: Query the user who initiates the highest number of slow queries and the top 50 slow queries

Double-click the created data push task to go to the configuration tab of the task.

Configure the parameters in the Select Table section.

Datasource Type: Select Hologres.

Datasource Name: Select the Hologres data source that you added in the Preparations section.

Datasource Env.: Select Development.

Write SQL statements in the Edit Query SQL section.

Sample SQL statements:

-- Query the user who initiates the highest number of slow queries.

SELECT usename AS topuser, count(1) AS topuser_counts

FROM hologres.hg_query_log

WHERE query_start >= '${date_start}'

AND query_start < '${date_end}'

GROUP BY usename

ORDER BY topuser_counts DESC

LIMIT 1;

-- Query the top 50 slow queries.

SELECT usename, datname, duration, application_name

FROM hologres.hg_query_log

WHERE query_start >= '${date_start}'

AND query_start < '${date_end}'

ORDER BY duration DESC

LIMIT 50;

Configure assignment parameters.

After you write the SQL statements, the response parameters are automatically displayed on the Output Parameters tab of the Parameters tab. If the parameters fail to be displayed or are invalid, you can turn off Automatically Parse Parameters and manually add parameters.

You can configure variables in the ${Variable name} format in the SQL statements and use assignment parameters to assign values to the variables. The assignment parameters can be date and time expressions or constants. This way, the values of variables are dynamically replaced in the code of the data push task based on the configurations of the assignment parameters. For more information, see the Configure the content to push section of the Data push topic.

In this example, you must configure the ${date_start} and ${date_end} assignment parameters.

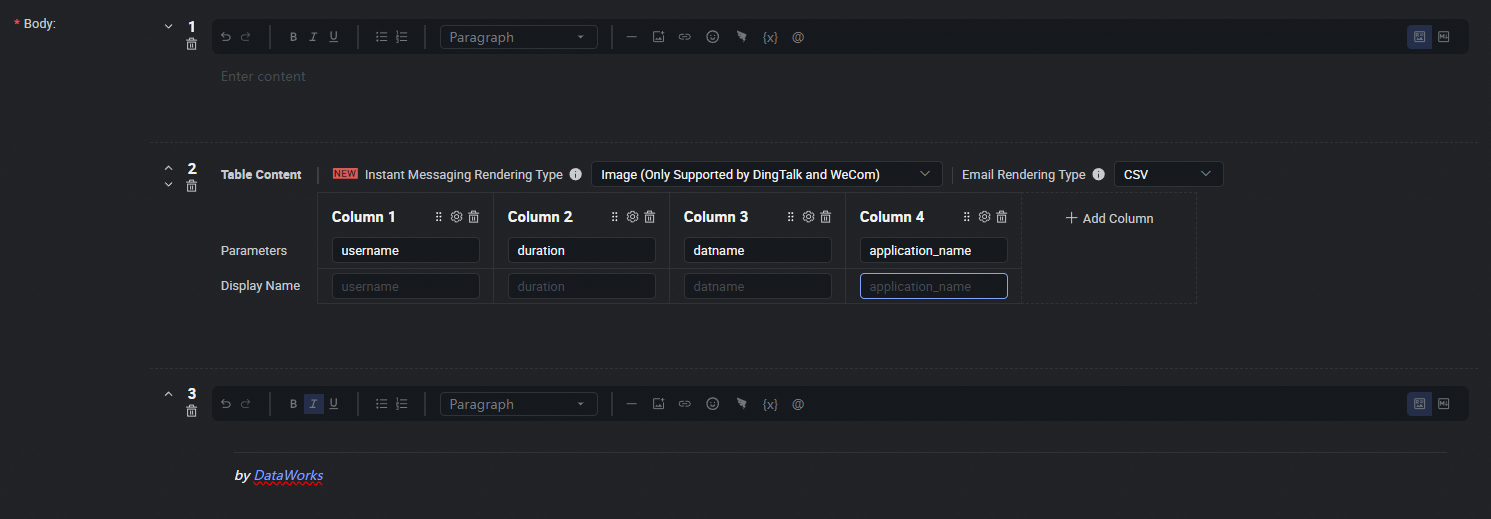

Configure the content to push.

In the Content to Push section, configure the content that you want to push by using tables or Markdown. If you configure the content by using Markdown, you can add assignment parameters and output parameters as variables in the ${Parameter name} format to the rich text. If you configure the content by using tables, you can add output parameters to a table. For more information, see the Configure the content to push section of the Data push topic.

Configure data push settings.

Scheduling period: Select Day.

Scheduling time: Select 09:00.

Timeout Definition: Select System Default.

Effective Date: Select Permanent.

Resource Group for Scheduling: Select the general-purpose resource group that you prepared in the Preparations section.

Destination: Select the destination that you created in Step 1: Create a destination.

Note You can configure the data push settings based on your business requirements. For more information, see the Configure data push settings section of the Data push topic.

Test the data push task.

After you create the data push task, click Save in the toolbar to save the configurations. Then, click Test in the toolbar. In the Test Data Push Task dialog box, assign constants to the variables in the Request Parameters section and click Test.

Publish the data push task.

Practice 2: Query the queries that are most resource-consuming in the previous 12 hours

Double-click the created data push task to go to the configuration tab of the task.

Configure the parameters in the Select Table section.

Datasource Type: Select Hologres.

Datasource Name: Select the Hologres data source that you added in the Preparations section.

Datasource Env.: Select Development.

Write SQL statements in the Edit Query SQL section.

Sample SQL statements:

SELECT status AS "Status",

duration AS "Time consumed (ms)",

query_start AS "Start time",

(read_bytes/1048576)::text || ' MB' AS "Megabytes read",

(memory_bytes/1048576)::text || ' MB' AS "Memory",

(shuffle_bytes/1048576)::text || ' MB' AS "Shuffle",

(cpu_time_ms/1000)::text || ' s' AS "CPU time",

physical_reads AS "Physical reads",

query_id AS "QueryID",

query::char(30)

FROM hologres.hg_query_log

WHERE query_start >= now() - interval '12 hour'

AND duration > 1000

ORDER BY duration DESC,

read_bytes DESC,

shuffle_bytes DESC,

memory_bytes DESC,

cpu_time_ms DESC,

physical_reads DESC

LIMIT 100;

Configure the parameters.

After you write the SQL statements, the response parameters are automatically displayed on the Output Parameters tab of the Parameters tab. If the parameters fail to be displayed or are invalid, you can turn off Automatically Parse Parameters and manually add parameters.

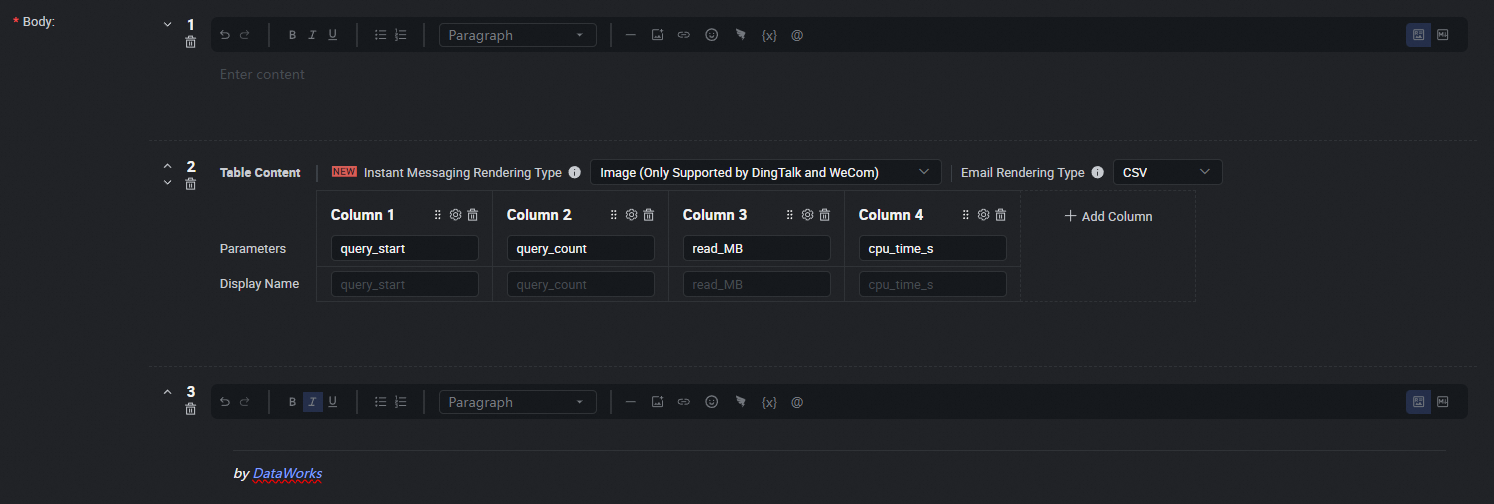

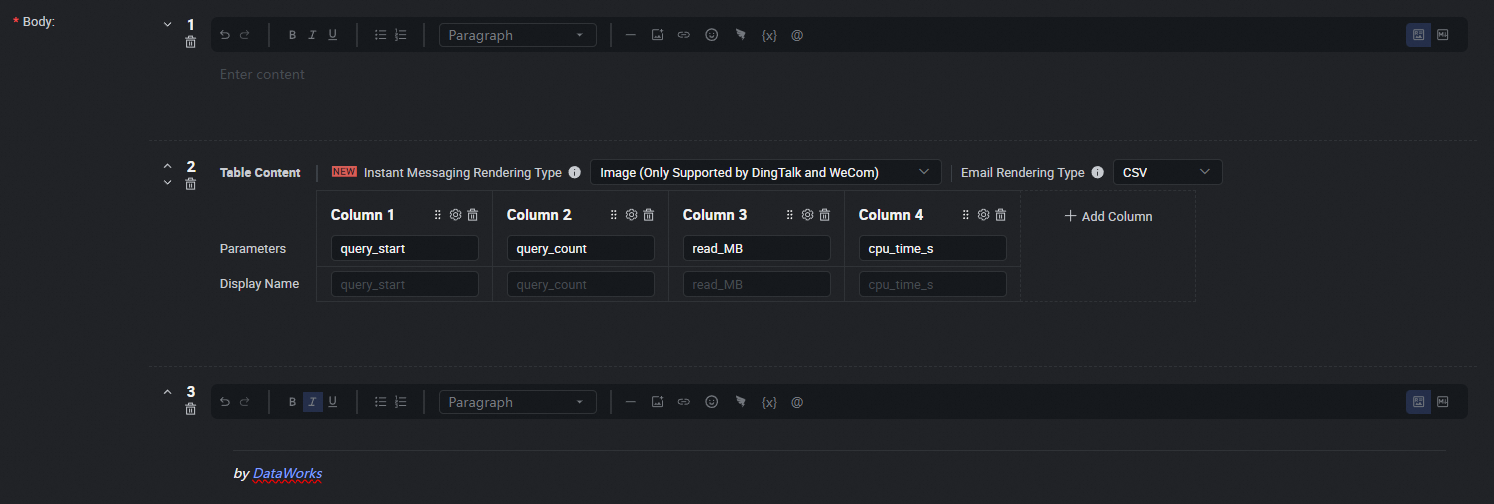

Configure the content to push.

In the Content to Push section, configure the content that you want to push by using tables or Markdown. If you configure the content by using Markdown, you can add assignment parameters and output parameters as variables in the ${Parameter name} format to the rich text. If you configure the content by using tables, you can add output parameters to a table. For more information, see the Configure the content to push section of the Data push topic.

Configure data push settings.

Scheduling period: Select Day.

Scheduling time: Select 09:00.

Timeout Definition: Select System Default.

Effective Date: Select Permanent.

Resource Group for Scheduling: Select the general-purpose resource group that you prepared in the Preparations section.

Destination: Select the destination that you created in Step 1: Create a destination.

Note You can configure the data push settings based on your business requirements. For more information, see the Configure data push settings section of the Data push topic.

Test the data push task.

After you create the data push task, click Save in the toolbar to save the configurations. Then, click Test in the toolbar. In the Test Data Push Task dialog box, assign constants to the variables in the Request Parameters section and click Test.

Publish the data push task.

Practice 3: Query the number of queries and data read amount in each hour of the previous 3 hours

Double-click the created data push task to go to the configuration tab of the task.

Configure the parameters in the Select Table section.

Datasource Type: Select Hologres.

Datasource Name: Select the Hologres data source that you added in the Preparations section.

Datasource Env.: Select Development.

Write SQL statements in the Edit Query SQL section.

Sample SQL statements:

SELECT

date_trunc('hour', query_start) AS query_start,

count(1) AS query_count,

sum(read_bytes/1048576)::text || ' MB' AS read_MB,

sum(cpu_time_ms/1000)::text || ' s' AS cpu_time_s

FROM

hologres.hg_query_log

WHERE

query_start >= now() - interval '3 h'

GROUP BY 1;

Configure the parameters.

After you write the SQL statements, the response parameters are automatically displayed on the Output Parameters tab of the Parameters tab. If the parameters fail to be displayed or are invalid, you can turn off Automatically Parse Parameters and manually add parameters.

Configure the content to push.

In the Content to Push section, configure the content that you want to push by using tables or Markdown. If you configure the content by using Markdown, you can add assignment parameters and output parameters as variables in the ${Parameter name} format to the rich text. If you configure the content by using tables, you can add output parameters to a table. For more information, see the Configure the content to push section of the Data push topic.

Configure data push settings.

Scheduling period: Select Day.

Scheduling time: Select 09:00.

Timeout Definition: Select System Default.

Effective Date: Select Permanent.

Resource Group for Scheduling: Select the general-purpose resource group that you prepared in the Preparations section.

Destination: Select the destination that you created in Step 1: Create a destination.

Note You can configure the data push settings based on your business requirements. For more information, see the Configure data push settings section of the Data push topic.

Test the data push task.

After you create the data push task, click Save in the toolbar to save the configurations. Then, click Test in the toolbar. In the Test Data Push Task dialog box, assign constants to the variables in the Request Parameters section and click Test.

Publish the data push task.

Practice 4: Query the number of queries that were initiated on the previous day

Double-click the created data push task to go to the configuration tab of the task.

Configure the parameters in the Select Table section.

Datasource Type: Select Hologres.

Datasource Name: Select the Hologres data source that you added in the Preparations section.

Datasource Env.: Select Development.

Write SQL statements in the Edit Query SQL section.

Sample SQL statements:

SELECT

COUNT(1) AS "increse_queries"

FROM ( SELECT DISTINCT

t1.digest

FROM

hologres.hg_query_log t1

WHERE

t1.query_start >= CURRENT_DATE - INTERVAL '1 day'

AND t1.query_start < CURRENT_DATE

AND NOT EXISTS (

SELECT

1

FROM

hologres.hg_query_log t2

WHERE

t2.digest = t1.digest

AND t2.query_start < CURRENT_DATE - INTERVAL '1 day')

AND digest IS NOT NULL

) AS a;

Configure the parameters.

After you write the SQL statements, the response parameters are automatically displayed on the Output Parameters tab of the Parameters tab. If the parameters fail to be displayed or are invalid, you can turn off Automatically Parse Parameters and manually add parameters.

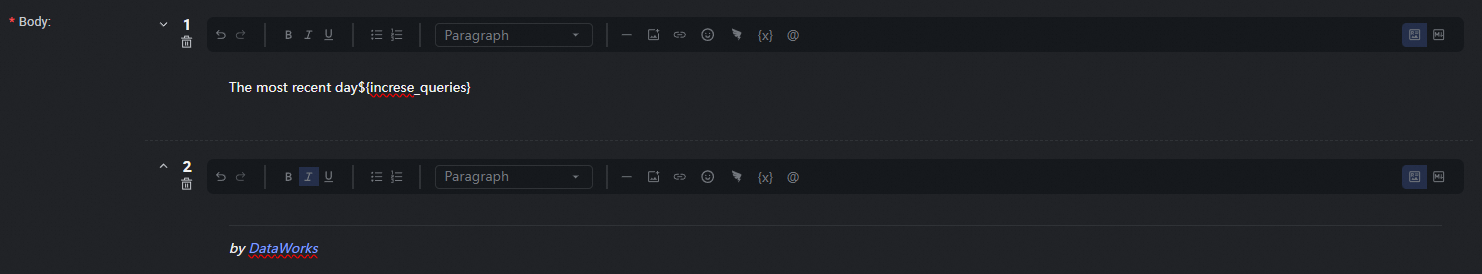

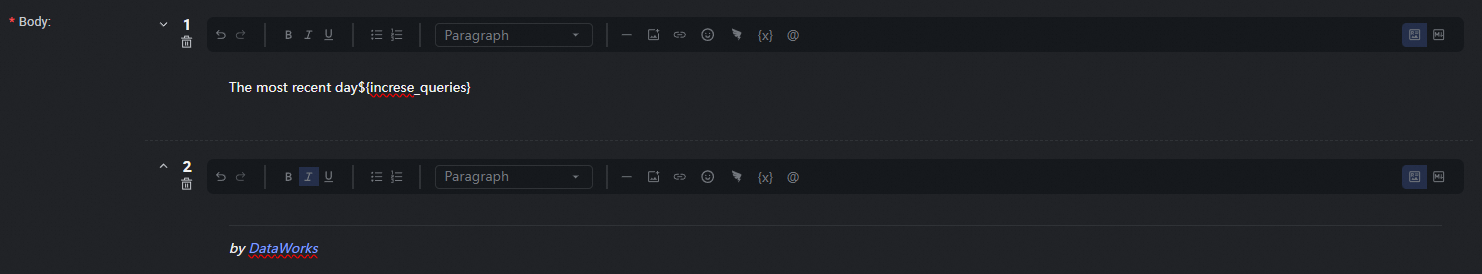

Configure the content to push.

In the Content to Push section, configure the content that you want to push by using tables or Markdown. If you configure the content by using Markdown, you can add assignment parameters and output parameters as variables in the ${Parameter name} format to the rich text. If you configure the content by using tables, you can add output parameters to a table. For more information, see the Configure the content to push section of the Data push topic.

Configure data push settings.

Scheduling period: Select Day.

Scheduling time: Select 09:00.

Timeout Definition: Select System Default.

Effective Date: Select Permanent.

Resource Group for Scheduling: Select the general-purpose resource group that you prepared in the Preparations section.

Destination: Select the destination that you created in Step 1: Create a destination.

Note You can configure the data push settings based on your business requirements. For more information, see the Configure data push settings section of the Data push topic.

Test the data push task.

After you create the data push task, click Save in the toolbar to save the configurations. Then, click Test in the toolbar. In the Test Data Push Task dialog box, assign constants to the variables in the Request Parameters section and click Test.

Publish the data push task.

Practice 5: Query the data access amount of queries in the previous 3 hours and that in the same period on the previous day for comparison

Double-click the created data push task to go to the configuration tab of the task.

Configure the parameters in the Select Table section.

Datasource Type: Select Hologres.

Datasource Name: Select the Hologres data source that you added in the Preparations section.

Datasource Env.: Select Development.

Write SQL statements in the Edit Query SQL section.

Sample SQL statements:

SELECT

query_date,

count(1) AS query_count,

sum(read_bytes) AS read_bytes,

sum(cpu_time_ms) AS cpu_time_ms

FROM

hologres.hg_query_log

WHERE

query_start >= now() - interval '180min'

GROUP BY

query_date

UNION ALL

SELECT

query_date,

count(1) AS query_count,

sum(read_bytes) AS read_bytes,

sum(cpu_time_ms) AS cpu_time_ms

FROM

hologres.hg_query_log

WHERE

query_start >= now() - interval '1d 180min'

AND query_start <= now() - interval '1d'

GROUP BY

query_date;

Configure the parameters.

After you write the SQL statements, the response parameters are automatically displayed on the Output Parameters tab of the Parameters tab. If the parameters fail to be displayed or are invalid, you can turn off Automatically Parse Parameters and manually add parameters.

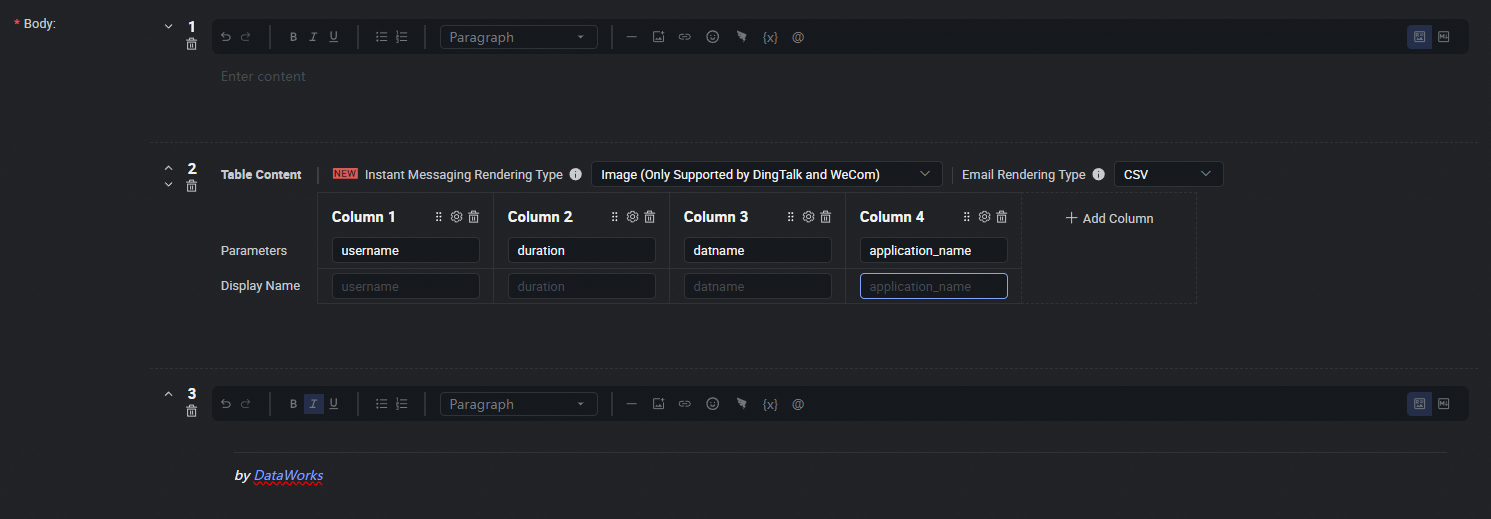

Configure the content to push.

In the Content to Push section, configure the content that you want to push by using tables or Markdown. If you configure the content by using Markdown, you can add assignment parameters and output parameters as variables in the ${Parameter name} format to the rich text. If you configure the content by using tables, you can add output parameters to a table. For more information, see the Configure the content to push section of the Data push topic.

Configure data push settings.

Scheduling period: Select Day.

Scheduling time: Select 09:00.

Timeout Definition: Select System Default.

Effective Date: Select Permanent.

Resource Group for Scheduling: Select the general-purpose resource group that you prepared in the Preparations section.

Destination: Select the destination that you created in Step 1: Create a destination.

Note You can configure the data push settings based on your business requirements. For more information, see the Configure data push settings section of the Data push topic.

Test the data push task.

After you create the data push task, click Save in the toolbar to save the configurations. Then, click Test in the toolbar. In the Test Data Push Task dialog box, assign constants to the variables in the Request Parameters section and click Test.

Publish the data push task.

Practice 6: Query the queries that are most time-consuming at different query stages in the previous 30 minutes

Double-click the created data push task to go to the configuration tab of the task.

Configure the parameters in the Select Table section.

Datasource Type: Select Hologres.

Datasource Name: Select the Hologres data source that you added in the Preparations section.

Datasource Env.: Select Development.

Write SQL statements in the Edit Query SQL section.

Sample SQL statements:

SELECT

status AS "Status",

duration AS "Time consumed (ms)",

optimization_cost AS "Optimization duration (ms)",

start_query_cost AS "Startup duration (ms)",

get_next_cost AS "Execution duration (ms)",

duration - optimization_cost - start_query_cost - get_next_cost AS "Others (ms)",

query_id AS "QueryID"

FROM

hologres.hg_query_log

WHERE

query_start >= now() - interval '30 min'

ORDER BY

duration DESC,

start_query_cost DESC,

optimization_cost,

get_next_cost DESC,

duration - optimization_cost - start_query_cost - get_next_cost DESC

LIMIT 100;

Configure the parameters.

After you write the SQL statements, the response parameters are automatically displayed on the Output Parameters tab of the Parameters tab. If the parameters fail to be displayed or are invalid, you can turn off Automatically Parse Parameters and manually add parameters.

Configure the content to push.

In the Content to Push section, configure the content that you want to push by using tables or Markdown. If you configure the content by using Markdown, you can add assignment parameters and output parameters as variables in the ${Parameter name} format to the rich text. If you configure the content by using tables, you can add output parameters to a table. For more information, see the Configure the content to push section of the Data push topic.

Configure data push settings.

Scheduling period: Select Day.

Scheduling time: Select 09:00.

Timeout Definition: Select System Default.

Effective Date: Select Permanent.

Resource Group for Scheduling: Select the general-purpose resource group that you prepared in the Preparations section.

Destination: Select the destination that you created in Step 1: Create a destination.

Note You can configure the data push settings based on your business requirements. For more information, see the Configure data push settings section of the Data push topic.

Test the data push task.

After you create the data push task, click Save in the toolbar to save the configurations. Then, click Test in the toolbar. In the Test Data Push Task dialog box, assign constants to the variables in the Request Parameters section and click Test.

Publish the data push task.

Practice 7: Query the first failed queries

Double-click the created data push task to go to the configuration tab of the task.

Configure the parameters in the Select Table section.

Datasource Type: Select Hologres.

Datasource Name: Select the Hologres data source that you added in the Preparations section.

Datasource Env.: Select Development.

Write SQL statements in the Edit Query SQL section.

Sample SQL statements:

SELECT

status AS "Status",

regexp_replace(message, '\n', ' ')::char(150) AS "Error message",

duration AS "Time consumed (ms)",

query_start AS "Start time",

query_id AS "QueryID",

query::char(100) AS "Query"

FROM

hologres.hg_query_log

WHERE

query_start BETWEEN '2024-07-10 17:00:00'::timestamptz AND '2024-07-10 17:42:00'::timestamptz + interval '2 min'

AND status = 'FAILED'

ORDER BY

query_start ASC

LIMIT 100;

Configure the parameters.

After you write the SQL statements, the response parameters are automatically displayed on the Output Parameters tab of the Parameters tab. If the parameters fail to be displayed or are invalid, you can turn off Automatically Parse Parameters and manually add parameters.

Configure the content to push.

In the Content to Push section, configure the content that you want to push by using tables or Markdown. If you configure the content by using Markdown, you can add assignment parameters and output parameters as variables in the ${Parameter name} format to the rich text. If you configure the content by using tables, you can add output parameters to a table. For more information, see the Configure the content to push section of the Data push topic.

Configure data push settings.

Scheduling period: Select Day.

Scheduling time: Select 09:00.

Timeout Definition: Select System Default.

Effective Date: Select Permanent.

Resource Group for Scheduling: Select the general-purpose resource group that you prepared in the Preparations section.

Destination: Select the destination that you created in Step 1: Create a destination.

Note You can configure the data push settings based on your business requirements. For more information, see the Configure data push settings section of the Data push topic.

Test the data push task.

After you create the data push task, click Save in the toolbar to save the configurations. Then, click Test in the toolbar. In the Test Data Push Task dialog box, assign constants to the variables in the Request Parameters section and click Test.

Publish the data push task.

What to do next

After you create a data push task, you can manage the data push task. For more information, see the Publish a push task section of the Data push topic.

icon. On the page that appears, click the Destination Management tab. Then, click Create Destination.

icon. On the page that appears, click the Destination Management tab. Then, click Create Destination.  icon and select Create Data Push Task. In the Data Push dialog box, configure the Name parameter and click OK.

icon and select Create Data Push Task. In the Data Push dialog box, configure the Name parameter and click OK.