This topic describes how to process the ods_user_info_d_starrocks table that stores basic user information and the ods_raw_log_d_starrocks table that stores website access logs of users by using StarRocks nodes in DataWorks to obtain user profile data. This topic helps you understand how to compute and analyze the synchronized data by using DataWorks and StarRocks to complete simple data processing in data warehouses.

Prerequisites

The required data is synchronized. For more information, see Synchronize data.

The basic user information that is stored in the

ods_user_info_dtable in MySQL is synchronized to theods_user_info_d_starrockstable in StarRocks by using a data synchronization task in Data Integration.The website access logs of users that are stored in the

user_log.txtobject in Object Storage Service (OSS) are synchronized to theods_raw_log_d_starrockstable in StarRocks by using a synchronization task in Data Integration.

Objective

The objective is to process the ods_user_info_d_starrocks and ods_raw_log_d_starrocks tables to obtain a basic user profile table.

Split the log information field in the

ods_raw_log_d_starrockstable into multiple fields and generate thedwd_log_info_di_starrocksfact table.Join the

dwd_log_info_di_starrocksfact table with theods_user_info_d_starrockstable based on the uid field to generate thedws_user_info_all_di_starrocksaggregate table.Process the

dws_user_info_all_di_starrockstable to generate a table namedads_user_info_1d_starrocks. The dws_user_info_all_di_starrocks table contains a large number of fields and a large amount of data. In this case, data consumption may require a long period of time to complete. Therefore, further data processing is required.

Go to the DataStudio page

Log on to the DataWorks console. In the top navigation bar, select the desired region. In the left-side navigation pane, choose . On the page that appears, select the desired workspace from the drop-down list and click Go to Data Development.

Step 1: Design a workflow

In the data synchronization phase, the required data is synchronized to StarRocks tables. The next objective is to further process the data to generate the basic user profile data.

Nodes at different levels and the work logic of the nodes

In the upper part of the workflow canvas, click Create Node to create the nodes described in the following table for data processing.

Node category

Node type

Node name

(Named after the output table)

Code logic

Database

StarRocks

StarRocksdwd_log_info_di_starrocksUse built-in functions and user-defined functions (UDFs) to split data in the

ods_raw_log_d_starrockstable and write data to multiple fields in thedwd_log_info_di_starrockstable.Database

StarRocks

StarRocksdws_user_info_all_di_starrocksJoin the table that stores the basic user information and the dwd_log_info_di_starrocks table that stores the preliminarily processed log data to generate an aggregate table.

Database

StarRocks

StarRocksads_user_info_1d_starrocksFurther process the data to generate a basic user profile.

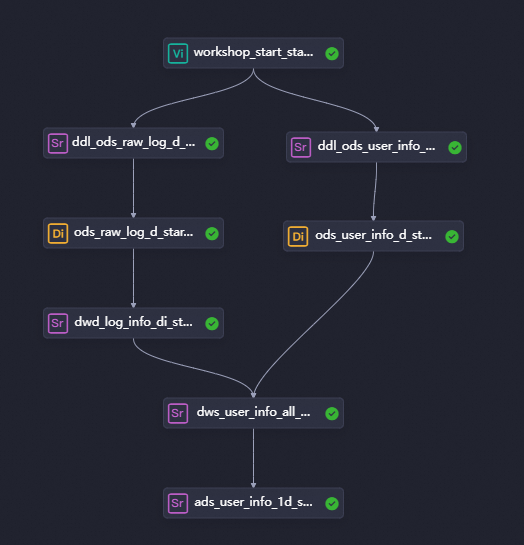

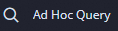

Directed acyclic graph (DAG) in the workflow

Drag the nodes to the workflow canvas, and configure dependencies between the nodes by drawing lines to design the workflow for data processing.

Step 2: Create a function

In this example, a function resource is uploaded and registered as a function for a data source. Then, the function is used to process data in the workflow. For more information, see Create resources and functions.

Before you register a Java UDF in StarRocks, you must set the enable_udf parameter to TRUE in the FE section on the Instance Configuration tab of the details page of your EMR Serverless StarRocks instance, and then restart the instance for the configuration to take effect.

If the enable_udf parameter is not set to TRUE, the following error is reported:

FAILED: Getting analyzing error. Detail message: UDF is not enabled in FE, please configure enable_udf=true in fe/conf/fe.conf or .

Upload a function resource

In this example, the ip2region-starrocks.jar resource that is required for a UDF is provided. You must download the resource to your on-premises machine, log on to the OSS console, and then upload the resource to your OSS bucket.

For information about how to create an OSS bucket, see Create a bucket. You can upload the JAR package to the created OSS bucket. You are charged for using the OSS bucket.

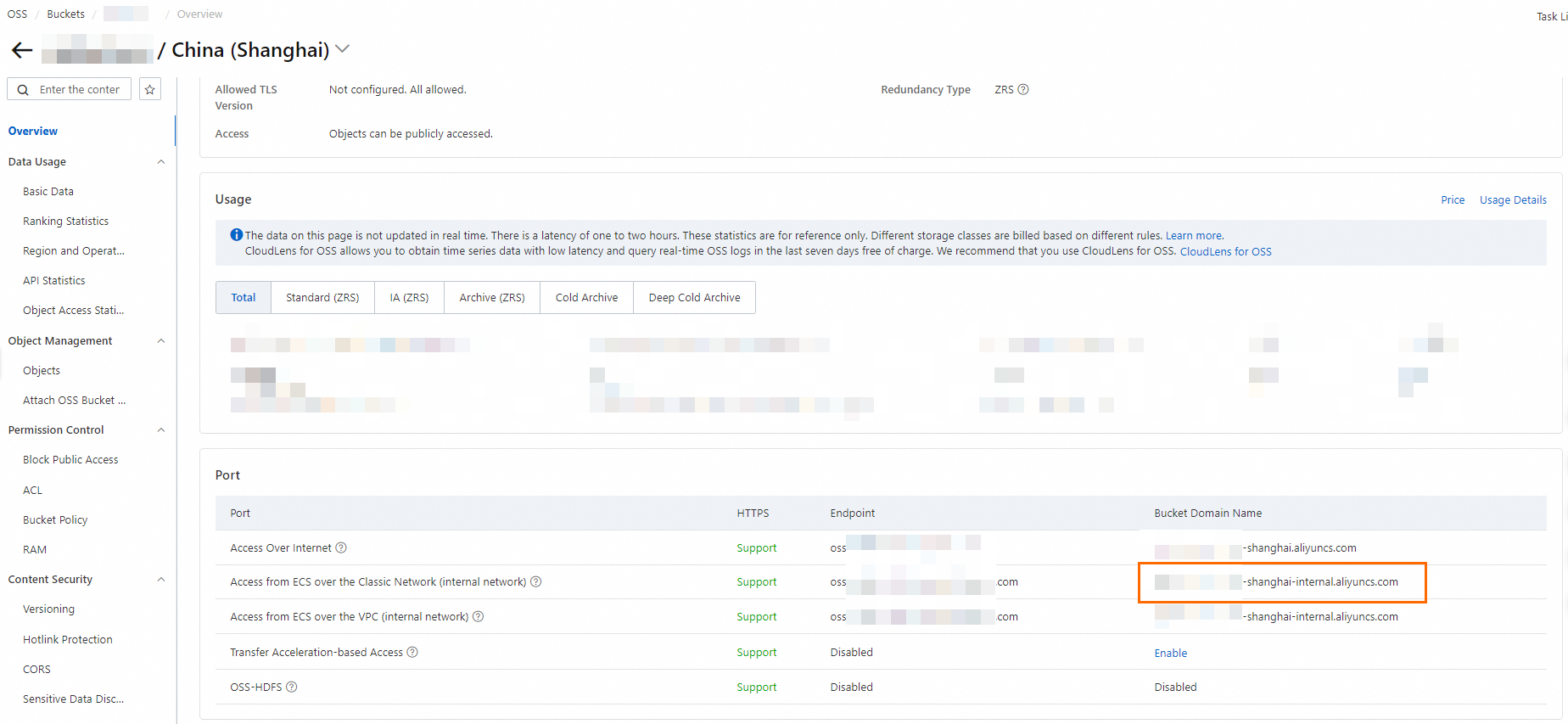

In this example, the following OSS bucket and storage paths are used:

Bucket name:

test. The name of an OSS bucket must be unique. You can specify the name of an OSS bucket based on your business requirements.Resource storage path:

dataworks_starrocks/ip2region-starrocks.jar.Full path:

https://test.oss-cn-shanghai-internal.aliyuncs.com/dataworks_starrocks/ip2region-starrocks.jar. The full storage path of a resource must be in the following format:https://${Bucket name}.oss-cn-shanghai-internal.aliyuncs.com/${Resource storage path}.NoteThe network address of the bucket to which the UDF belongs is the address that is used for access from Elastic Compute Service (ECS) over the classic network (internal network).

If you use an internal endpoint, the OSS bucket must reside in the same region as the DataWorks workspace. In this example, the China (Shanghai) region is used.

Register a function

Create an ad hoc query.

Log on to the DataWorks console. In the left-side navigation pane, choose Data Development and Governance > Data Development. On the Data Development page, select the desired workspace and click go to Data Development. In the left-side navigation pane of the DataStudio page, click

. The Ad Hoc Query pane appears. Right-click Ad Hoc Query and choose .

. The Ad Hoc Query pane appears. Right-click Ad Hoc Query and choose . Modify and run the code of the node.

CREATE FUNCTION getregion(string) RETURNS string PROPERTIES ( "symbol" = "com.starrocks.udf.sample.Ip2Region", "type" = "StarrocksJar", "file" = "Enter the full storage path of the uploaded function resource. You can obtain the path in the step of uploading a resource." );Check whether the function is registered.

select getregion('The IP address of your on-premises machine');

Step 3: Configure StarRocks nodes

Configure the dwd_log_info_di_starrocks node

On the configuration tab of the workflow, double-click the dwd_log_info_di_starrocks node. On the configuration tab of the node, enter the SQL code that processes fields in the ods_raw_log_d_starrocks ancestor table and writes the processed data to the dwd_log_info_di_starrocks table.

1. Configure the node code

Double-click the dwd_log_info_di_starrocks node to go to the configuration tab of the node. Write the following statements:

CREATE TABLE IF NOT EXISTS dwd_log_info_di_starrocks (

uid STRING COMMENT 'The user ID',

ip STRING COMMENT 'The IP address',

TIME STRING COMMENT 'The time in the yyyymmddhh:mi:ss format',

status STRING COMMENT 'The status code that is returned by the server',

bytes STRING COMMENT 'The number of bytes that are returned to the client',

region STRING COMMENT 'The region, which is obtained based on the IP address',

method STRING COMMENT 'The HTTP request type',

url STRING COMMENT 'url',

protocol STRING COMMENT 'The version number of HTTP',

referer STRING COMMENT 'The source URL',

device STRING COMMENT 'The terminal type',

identity STRING COMMENT 'The access type, which can be crawler, feed, user, or unknown',

dt DATE NOT NULL COMMENT 'The time'

) DUPLICATE KEY(uid)

COMMENT 'User behavior analysis case - The fact table for the website access logs of users'

PARTITION BY(dt)

PROPERTIES ("replication_num" = "1");

-- In this example, field data is dynamically partitioned based on the dt field. To prevent the node from rerunning and repeatedly writing data, the following SQL statement is used to delete the existing destination partitions before each processing operation.

ALTER TABLE dwd_log_info_di_starrocks DROP PARTITION IF EXISTS p${var} FORCE;

-- Scenario: The following SQL statements use the getregion function to parse the IP address in the raw log data, and use regular expressions to split the raw data to analyzable fields and write the fields to the dwd_log_info_di_starrocks table.

-- Note:

-- 1. Before you can use a UDF on a DataWorks node, you must register a function.

-- 2. You can configure scheduling parameters for nodes in DataWorks to write incremental data to the related partition in the desired table every day in the scheduling scenario.

-- In actual development scenarios, you can define variables in the code of a node in the ${Variable name} format and assign scheduling parameters to the variables on the Properties tab of the configuration tab of the node. This way, the values of scheduling parameters can be dynamically replaced in the node code based on the configurations of the scheduling parameters.

INSERT INTO dwd_log_info_di_starrocks

SELECT

uid

, ip

, time

, status

, bytes

, getregion(ip) as region-- Obtain the region by using the UDF based on the IP address.

,REGEXP_EXTRACT(request, '([^ ]+)', 1) AS method

,REGEXP_EXTRACT(request, '^[^ ]+ (.*) [^ ]+$', 1) AS url

,REGEXP_EXTRACT(request, '([^ ]+)$', 1) AS protocol

,REGEXP_EXTRACT(referer, '^[^/]+://([^/]+)', 1) AS referer

, CASE

WHEN LOWER(agent) REGEXP 'android' THEN 'android'

WHEN LOWER(agent) REGEXP 'iphone' THEN 'iphone'

WHEN LOWER(agent) REGEXP 'ipad' THEN 'ipad'

WHEN LOWER(agent) REGEXP 'macintosh' THEN 'macintosh'

WHEN LOWER(agent) REGEXP 'windows phone' THEN 'windows_phone'

WHEN LOWER(agent) REGEXP 'windows' THEN 'windows_pc'

ELSE 'unknown'

END AS device

, CASE

WHEN LOWER(agent) REGEXP '(bot|spider|crawler|slurp)' THEN 'crawler'

WHEN LOWER(agent) REGEXP 'feed' OR REGEXP_EXTRACT(request, '^[^ ]+ (.*) [^ ]+$', 0) REGEXP 'feed' THEN 'feed'

WHEN NOT (LOWER(agent) REGEXP '(bot|spider|crawler|feed|slurp)')

AND agent REGEXP '^(Mozilla|Opera)'

AND NOT (REGEXP_EXTRACT(request, '^[^ ]+ (.*) [^ ]+$', 0) REGEXP 'feed') THEN 'user'

ELSE 'unknown'

END AS identity,

cast('${var}' as DATE )as dt

FROM (

SELECT

SPLIT_PART(CAST(col AS VARCHAR(65533)), '##@@', 1) AS ip

, SPLIT_PART(CAST(col AS VARCHAR(65533)), '##@@', 2) AS uid

, SPLIT_PART(CAST(col AS VARCHAR(65533)), '##@@', 3) AS time

, SPLIT_PART(CAST(col AS VARCHAR(65533)), '##@@', 4) AS request

, SPLIT_PART(CAST(col AS VARCHAR(65533)), '##@@', 5) AS status

, SPLIT_PART(CAST(col AS VARCHAR(65533)), '##@@', 6) AS bytes

, SPLIT_PART(CAST(col AS VARCHAR(65533)), '##@@', 7) AS referer

, SPLIT_PART(CAST(col AS VARCHAR(65533)), '##@@', 8) AS agent

FROM

ods_raw_log_d_starrocks

WHERE

dt = '${var}'

) a;2. Configure scheduling properties for the node

In the right-side navigation pane of the configuration tab of the dwd_log_info_di_starrocks node, click General. The following table describes the parameters.

Section | Description | Screenshot |

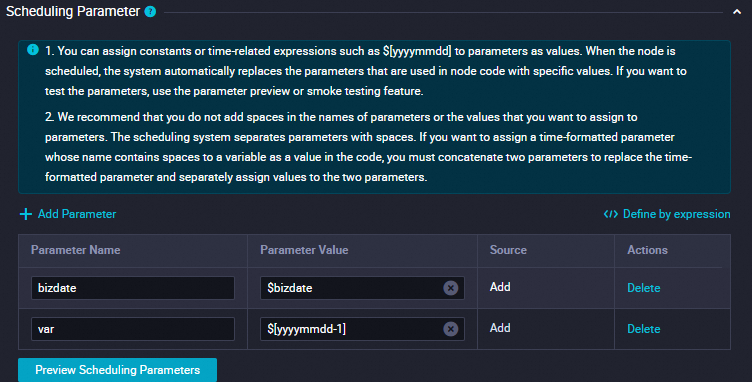

Scheduling Parameter | Click Add Parameter in the Scheduling Parameter section. In the row that appears in the table, you can specify a scheduling parameter and the value of the scheduling parameter.

|

|

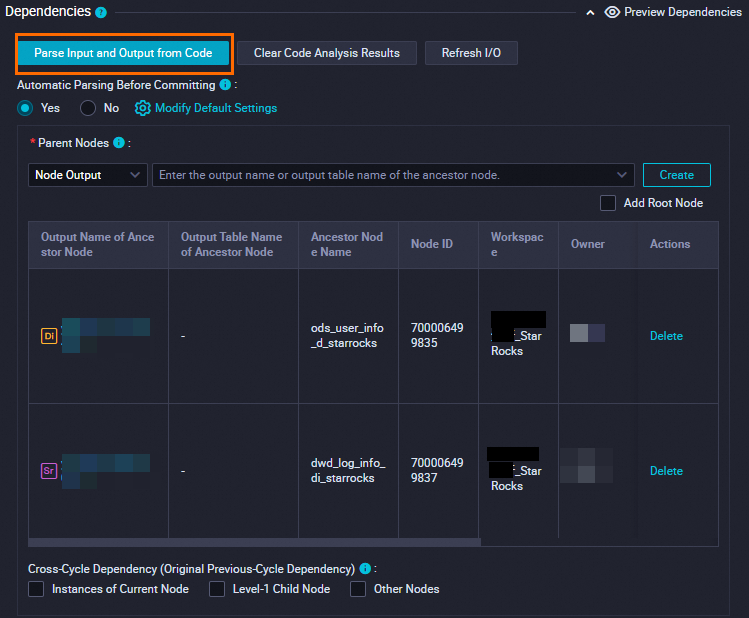

Dependencies | In the Dependencies section, click Parse Input and Output from Code to quickly configure the scheduling dependencies for the node by using the table lineage in the node code. For more information, see Scheduling dependency configuration guide. |

|

In the Schedule section, set the Scheduling Cycle parameter to Day. You do not need to separately configure the Scheduled time parameter for the current node. The time when the current node is scheduled to run every day is determined by the scheduling time of the workshop_start_starrocks zero load node of the workflow. The current node is scheduled to run after 00:30 every day.

3. Save the configurations

In this example, other required configuration items can be configured based on your business requirements. After the configuration is complete, click the ![]() icon in the top toolbar on the configuration tab of the node to save the node configurations.

icon in the top toolbar on the configuration tab of the node to save the node configurations.

Configure the dws_user_info_all_di_starrocks node

On the configuration tab of the workflow, double-click the dws_user_info_all_di_starrocks node. On the configuration tab of the node, enter the SQL code that merges the dwd_log_info_di_starrocks and ods_user_info_d_starrocks ancestor tables and writes the merged data to the dws_user_info_all_di_starrocks table.

Configure the node code

Double-click the dws_user_info_all_di_starrocks node to go to the configuration tab of the node. Write the following statements:

CREATE TABLE IF NOT EXISTS dws_user_info_all_di_starrocks (

uid STRING COMMENT 'The user ID',

gender STRING COMMENT 'The gender',

age_range STRING COMMENT 'The age range',

zodiac STRING COMMENT 'The zodiac sign',

region STRING COMMENT 'The region, which is obtained based on the IP address',

device STRING COMMENT 'The terminal type',

identity STRING COMMENT 'The access type, which can be crawler, feed, user, or unknown',

method STRING COMMENT 'The HTTP request type',

url STRING COMMENT 'url',

referer STRING COMMENT 'The source URL',

TIME STRING COMMENT 'The time in the yyyymmddhh:mi:ss format',

dt DATE NOT NULL COMMENT 'The time'

) DUPLICATE KEY(uid)

COMMENT 'User behavior analysis case - Wide table for the website access information about users'

PARTITION BY(dt)

PROPERTIES ("replication_num" = "1");

-- In this example, field data is dynamically partitioned based on the dt field. To prevent the node from rerunning and repeatedly writing data, the following SQL statement is used to delete the existing destination partitions before each processing operation.

ALTER TABLE dws_user_info_all_di_starrocks DROP PARTITION IF EXISTS p${var} FORCE;

-- Scenario: Merge the processed log data stored in the dwd_log_info_di_starrocks table and the basic user information stored in the ods_user_info_d_starrocks table and write the merged data to the dws_user_info_all_di_starrocks table.

-- Note: You can configure scheduling parameters for nodes in DataWorks to write incremental data to the related partition in the desired table every day in the scheduling scenario.

-- In actual development scenarios, you can define variables in the code of a node in the ${Variable name} format and assign scheduling parameters to the variables on the Properties tab of the configuration tab of the node. This way, the values of scheduling parameters can be dynamically replaced in the node code based on the configurations of the scheduling parameters.

INSERT INTO dws_user_info_all_di_starrocks

SELECT

IFNULL(a.uid, b.uid) AS uid,

b.gender,

b.age_range,

b.zodiac,

a.region,

a.device,

a.identity,

a.method,

a.url,

a.referer,

a.time,

a.dt

FROM dwd_log_info_di_starrocks a

LEFT JOIN ods_user_info_d_starrocks b

ON a.uid = b.uid

WHERE a.dt = '${var}';

Configure scheduling properties for the node

In the right-side navigation pane of the configuration tab of the dws_user_info_all_di_starrocks node, click General. The following table describes the parameters.

Section | Description | Screenshot |

Scheduling Parameter | Click Add Parameter in the Scheduling Parameter section. In the row that appears in the table, you can specify a scheduling parameter and the value of the scheduling parameter.

|

|

Dependencies | In the Dependencies section, click Parse Input and Output from Code to quickly configure the scheduling dependencies for the node by using the table lineage in the node code. For more information, see Scheduling dependency configuration guide. |

|

In the Schedule section, set the Scheduling Cycle parameter to Day. You do not need to separately configure the Scheduled time parameter for the current node. The time when the current node is scheduled to run every day is determined by the scheduling time of the workshop_start_starrocks zero load node of the workflow. The current node is scheduled to run after 00:30 every day.

3. Save the configurations

In this example, other required configuration items can be configured based on your business requirements. After the configuration is complete, click the ![]() icon in the top toolbar on the configuration tab of the node to save the node configurations.

icon in the top toolbar on the configuration tab of the node to save the node configurations.

Configure the ads_user_info_1d_starrocks node

On the configuration tab of the workflow, double-click the ads_user_info_1d_starrocks StarRocks node. The configuration tab of the node appears. On the configuration tab of the node, enter the SQL code that processes the dws_user_info_all_di_starrocks ancestor table and writes the processed data to the ads_user_info_1d_starrocks table.

1. Configure the node code

CREATE TABLE IF NOT EXISTS ads_user_info_1d_starrocks (

uid STRING COMMENT 'The user ID',

region STRING COMMENT 'The region, which is obtained based on the IP address',

device STRING COMMENT 'The terminal type',

pv BIGINT COMMENT 'pv',

gender STRING COMMENT 'The gender',

age_range STRING COMMENT 'The age range',

zodiac STRING COMMENT 'The zodiac sign',

dt DATE NOT NULL COMMENT 'The time'

) DUPLICATE KEY(uid)

COMMENT 'User behavior analysis case - User profile data'

PARTITION BY(dt)

PROPERTIES ("replication_num" = "1");

-- In this example, field data is dynamically partitioned based on the dt field. To prevent the node from rerunning and repeatedly writing data, the following SQL statement is used to delete the existing destination partitions before each processing operation.

ALTER TABLE ads_user_info_1d_starrocks DROP PARTITION IF EXISTS p${var} FORCE;

-- Scenario: The following SQL statements are used to further process data in the dws_user_info_d_all_di_starrocks wide table that stores the website access information about users to generate basic user profile data, and write the data to the ads_user_info_1d_starrocks table.

-- Note: You can configure scheduling parameters for nodes in DataWorks to write incremental data to the related partition in the desired table every day in the scheduling scenario.

-- -- In actual development scenarios, you can define variables in the code of a node in the ${Variable name} format and assign scheduling parameters to the variables on the Properties tab of the configuration tab of the node. This way, the values of scheduling parameters can be dynamically replaced in the node code based on the configurations of the scheduling parameters.

INSERT INTO ads_user_info_1d_starrocks

SELECT

uid,

MAX(region) AS region,

MAX(device) AS device,

COUNT(*) AS pv,

MAX(gender) AS gender,

MAX(age_range) AS age_range,

MAX(zodiac) AS zodiac,

dt

FROM dws_user_info_all_di_starrocks

WHERE dt = '${var}'

GROUP BY uid, dt;

select * FROM dws_user_info_all_di_starrocks

WHERE dt = '${var}';2. Configure scheduling properties for the node

In the right-side navigation pane of the configuration tab of the ads_user_info_1d_starrocks node, click General. The following table describes the parameters.

Section | Description | Screenshot |

Scheduling Parameter | Click Add Parameter in the Scheduling Parameter section. In the row that appears in the table, you can specify a scheduling parameter and the value of the scheduling parameter.

|

|

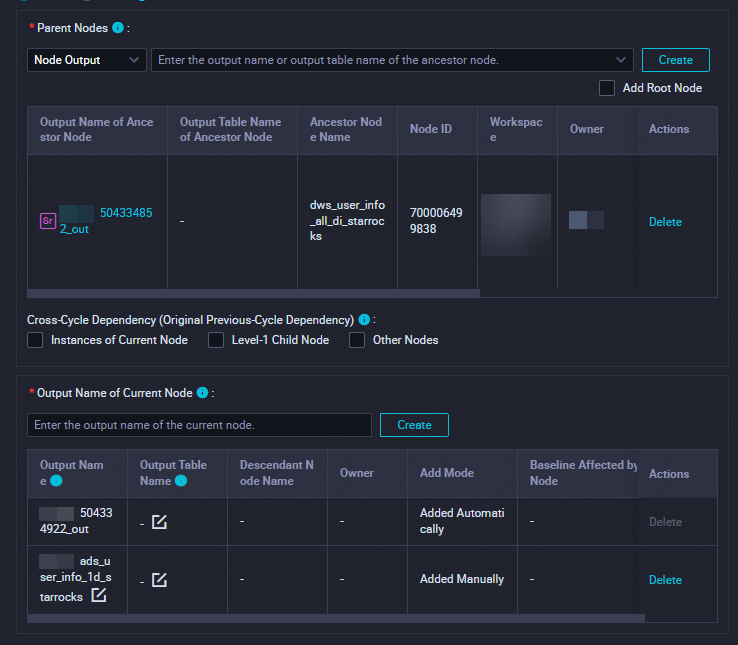

Dependencies | In the Dependencies section, click Parse Input and Output from Code to quickly configure the scheduling dependencies for the node by using the table lineage in the node code. For more information, see Scheduling dependency configuration guide. |

|

In the Schedule section, set the Scheduling Cycle parameter to Day. You do not need to separately configure the Scheduled time parameter for the current node. The time when the current node is scheduled to run every day is determined by the scheduling time of the workshop_start_starrocks zero load node of the workflow. The current node is scheduled to run after 00:30 every day.

3. Save the configurations

In this example, other required configuration items can be configured based on your business requirements. After the configuration is complete, click the ![]() icon in the top toolbar on the configuration tab of the node to save the node configurations.

icon in the top toolbar on the configuration tab of the node to save the node configurations.

Step 4: Run the nodes

Run the workflow

Go to the configuration tab of the workflow.

Double-click the User profile analysis_StarRocks workflow below Business Flow. The workflow canvas appears.

Run the workflow.

In the top toolbar of the workflow canvas, click the

icon to run the workflow in the data integration phase based on the dependencies.

icon to run the workflow in the data integration phase based on the dependencies. View the status of the nodes.

If nodes are in the

state, the synchronization process is normal.

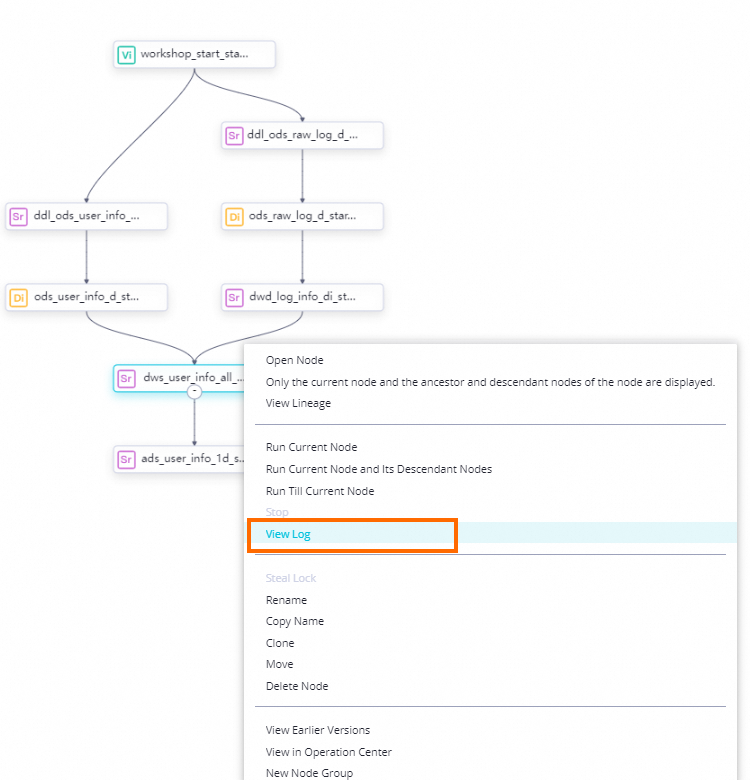

state, the synchronization process is normal. View node execution logs.

Right-click the

dwd_log_info_di_starrocks,dws_user_info_all_di_starrocks, orads_user_info_1d_starrocksnode on the canvas and select View Log to view the synchronization details.

View results

Create an ad hoc query. For more information, see Create an ad hoc query.

In the left-side navigation pane of the DataStudio page, click

. The Ad Hoc Query pane appears. Right-click Ad Hoc Query and choose .

. The Ad Hoc Query pane appears. Right-click Ad Hoc Query and choose . Query the data processing result table.

Execute the following SQL statement to check whether the basic user information and website access logs of users have been synchronized from the test databases to your StarRocks database:

-- In the query statement, change the partition key value to the data timestamp of the node. For example, if the node is run on January 2, 2024, the data timestamp is 20240101, which is one day earlier than the running time of the node. SELECT * from ads_user_info_1d_starrocks where dt=The data timestamp;

Step 5: Schedule the nodes

After you complete the preceding steps and confirm that the nodes are run as expected, commit the nodes and deploy the nodes to the production environment. For more information, see the topics in the Deploy Center topic.

Commit the nodes to the development environment

In the top toolbar of the workflow canvas, click the ![]() icon to commit all nodes in the workflow, and then click Confirm.

icon to commit all nodes in the workflow, and then click Confirm.

Commit the nodes to the production environment

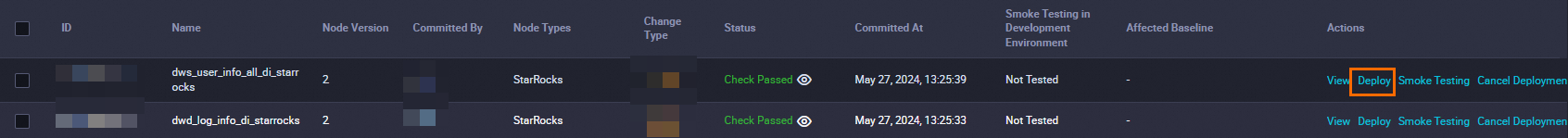

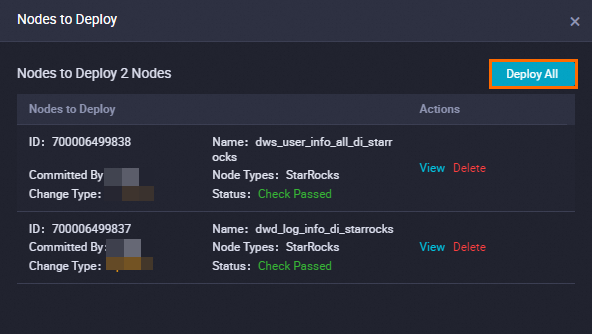

After you commit the nodes, the nodes enter the development environment. You must deploy the nodes to the production environment because nodes in the development environment cannot be automatically scheduled.

You can go to the Create Deploy Task page by using one of the following methods: In the top toolbar of the configuration tab of the workflow, click the ![]() icon. Alternatively, in the upper-left corner of the DataStudio page, you can click the more icon and choose All Products > . On the Create Deploy Task page, you can deploy a single item or multiple items at a time.

icon. Alternatively, in the upper-left corner of the DataStudio page, you can click the more icon and choose All Products > . On the Create Deploy Task page, you can deploy a single item or multiple items at a time.

Deploy a single item: Find the item that you want to deploy and click Deploy in the Actions column.

Deploy multiple items at a time:

Select the items that you want to deploy and click Deploy in the lower part of the page to deploy the selected items at a time.

Select the items that you want to deploy and click Add to List in the lower part of the page. In the upper-right corner of the page, click Nodes to Deploy. Items in the Nodes to Deploy panel will be deployed to the production environment in batches.

To deploy multiple nodes at a time, you must deploy the related resources and functions in the workflow to which the nodes belong.

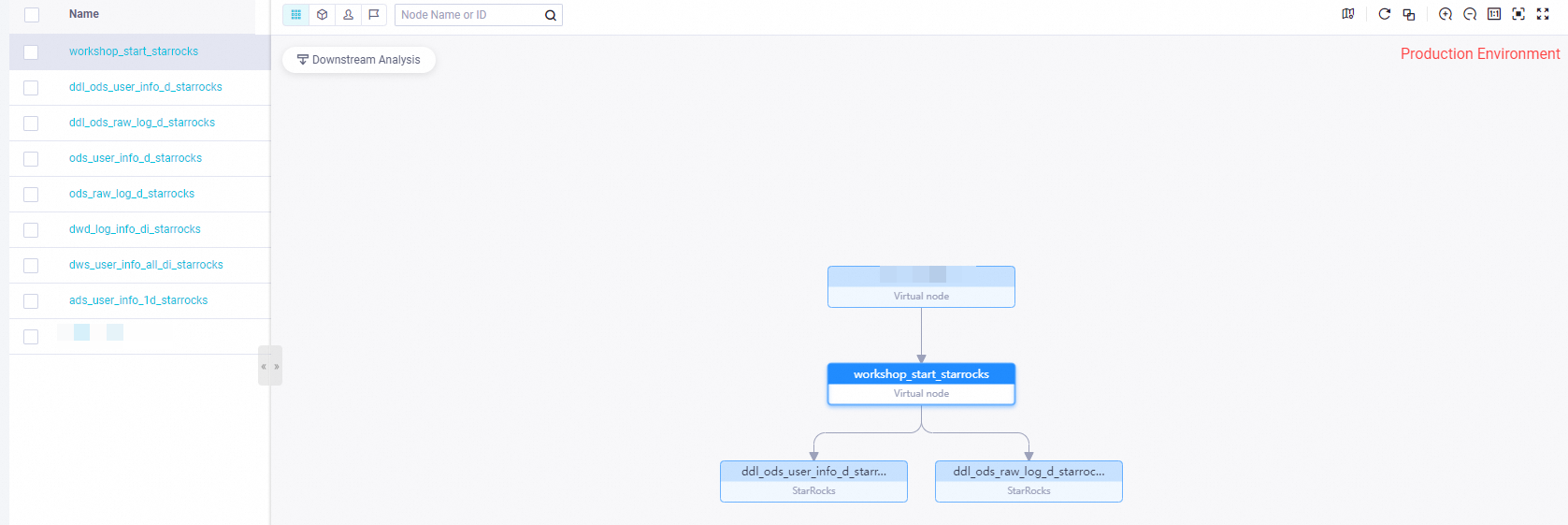

Step 6: Trace back data

In actual development scenarios, you can backfill historical data for an auto triggered node in the production environment. For more information, see Backfill data and view data backfill instances (new version). To backfill historical data for an auto triggered node, perform the following steps:

Go to the Operation Center page.

After an auto triggered node is deployed, go to the Operation Center page to backfill data for the node. You can go to this page by using one of the following methods: In the upper-right corner of the DataStudio page, click Operation Center. Alternatively, in the upper-left corner of the DataStudio page, click the more icon and choose .

Backfill data for the node.

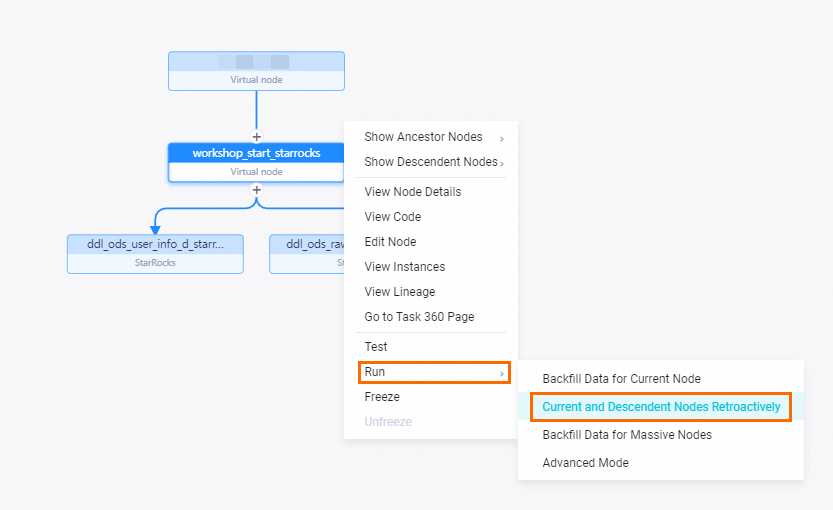

In the left-side navigation pane, choose . On the Auto Triggered Nodes page, find and click the

workshop_start_starrocksnode. The DAG of the node appears.

In the DAG of the node, right-click the

workshop_start_starrocksnode and choose .

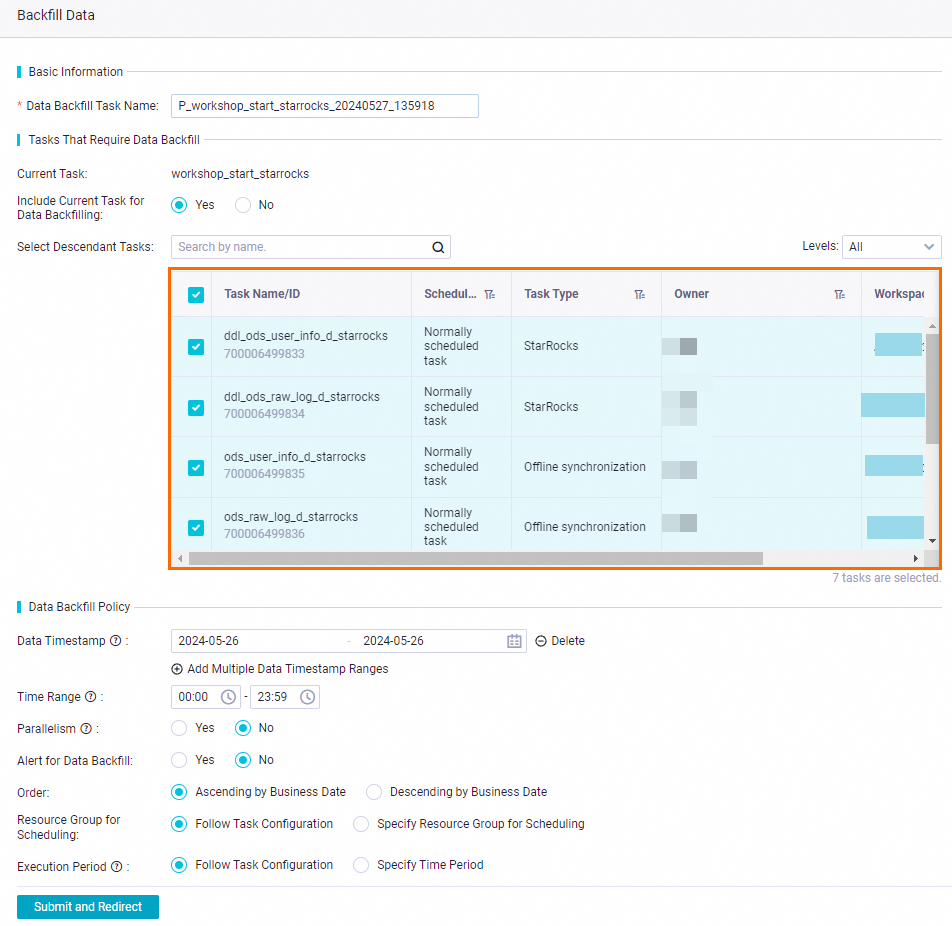

In the Backfill Data panel, select all descendant nodes of the

workshop_start_starrocksnode, specify data timestamps, and then click Submit and Redirect. The Data Backfill page appears.

What to do next

After you deploy the nodes, the entire workflow is complete. You can view the details of the created tables or consume data in the related table. For more information, see Manage data and APIs.