Quality rule templates are essential for abstracting logical consistency and similarity across quality rule configurations, enhancing the efficiency of setting up subsequent quality monitoring rules. Dataphin offers more than 20 built-in quality rule templates and supports custom SQL for tailored expansion to meet enterprise quality monitoring requirements. This topic describes the process for creating quality rule templates.

Permission description

Super administrators and quality administrators have the ability to create, clone, edit, and delete rule templates.

All users can access and use rule templates.

Operation permissions vary for different objects. For more information, see Rule template operation permissions.

Create a rule template

Navigate to the Dataphin home page, select Administration > Data Quality from the top menu bar.

In the navigation pane on the left, click Quality Monitoring > Rule Templates. On the Rule Templates page, select the Data Table tab and then click the Create Rule Template button.

In the Create Rule Template dialog box, enter the required parameters.

Parameter

Description

Basic Information

Template Name

Custom rule template name, no more than 64 characters.

Template Type

Configure the type to which the template belongs for quick search during subsequent references. Supported custom rule template types include the following: Validity, Consistency, Timeliness, and Sql.

Validity includes Standard Reference Table Validation (requires Data Standard module activation), Reference Table Validation, Column Format Validation, Column Value Domain Validation, Column Length Validation.

Consistency includes Single Field Business Logic Consistency Comparison, Columns In Two Tables Statistical Consistency Validation, Columns In Two Tables Processing Logic Consistency Validation, Columns Statistical Consistency Validation, Cross-Source Columns Statistical Consistency Validation.

Timeliness includes Time Interval Comparison, Time Interval Comparison In Two Tables, Time Comparison With Expression.

Sql includes Custom Statistic Validation and Custom Detail Value Validation.

For scenarios of different template types, see template type description.

Data Source Type

The template needs to specify the supported data source type to avoid rule definition failure or abnormal validation results due to inconsistent syntax structures of different data sources. Two types are supported:

General: Validation conditions are independent of specific data sources, and all types can use the same validation statement, such as the integrity template.

Specific Type: Rule definitions are only effective for specific data source types, and you need to select a specific data source type. After configuration, it only supports referencing the currently defined template when creating quality rules under the specified data source type's monitored object.

Description

Custom rule template description. No more than 128 characters.

Template Configuration

Consistency

When Template Type is selected as Consistency, different templates require different template configuration parameters, as follows.

Single Table Field Business Logic Consistency Comparison:

Detection Expression: Enter an SQL expression function. Example:

${total_sales}=${unit_price}*${sales_volume}.NoteDetection expressions support using functions, but you need to ensure that the database supports the function during execution.

You can validate the business logic between multiple fields. For example, total sales = unit price × sales volume. To validate whether the total sales value is correct, you can use the expression

${total_sales}=${unit_price}*${sales_volume}.Variables such as

${total_sales}in the expression must specify actual table fields. They are replaced with the actual table fields during execution.

Columns In Two Tables Statistical Consistency Validation/Columns Statistical Consistency Validation:

Statistical Method: You can count the number of field groups, field maximum values, field repetition rates, etc. For more information, see data table parameter configuration.

Two Table Field Business Logic Consistency Comparison:

Business Logic: Enter an SQL expression function. Example:

${T1.total_sales}=${T2.unit_price}*${T2.sales_volume}.NoteYou can validate the business logic between multiple fields. For example, total sales = unit price × sales volume. To validate whether the total sales value is correct, you can use the expression

${T1.total_sales}=${T2.unit_price}*${T2.sales_volume}.Variables such as

${T1.total_sales}in the expression must specify actual table fields. They are replaced with the actual table fields during execution. A maximum of two tables are supported.

Cross-Source Columns Statistical Consistency Validation:

Statistical Method: You can count the number of field groups, field maximum values, field repetition rates, etc. For more information, see data table parameter configuration.

Data Source Type: Select the data source type of the physical table, including MaxCompute, MySQL, Oracle, Microsoft SQL Server, PostgreSQL, SAP HANA, AnalyticDB for PostgreSQL, ClickHouse, IBM DB2, Hologres, DM (Dameng), StarRocks, Hive, ArgoDB, Lindorm.

Datasource: Select the data source to which the physical table belongs.

Select Comparison Table: Select the table to be compared in the physical table and the fields to be compared in the physical table.

Validity

When Template Type is selected as Validity, different templates require different template configuration parameters, as follows.

Standard Reference Table Validation (requires Data Standard module activation):

Reference Tables: Select the published status code table under the data standard. To create a code table, see create and manage standard codes (code tables).

Code Table Reference: Compare based on the reference value and field value selected here. Options include Code Value, Code Name, Code English Name.

Code Table Reference Comparison:

Data Source Type: Select the data source type.

Datasource: Select the data source corresponding to the data source type.

NoteFor performance considerations, cross-data source will read up to 1000 code table data for comparison. It is recommended that the code table and the inspected table be in the same data source.

Cross-data source comparison fields will be automatically converted to string type. Some special field types may have abnormal situations. It is recommended that the field types in the code table and the validation field types are both string or other same types.

Reference Tables: Select the target code table.

Code Table Name: Enter the code table name as a prompt when configuring quality rules.

Code Table Reference Field: Select the corresponding reference field in the code table.

NoteCode Table Reference Table is used to determine whether a field is in the code table. You need to select a comparison field. For example, to determine whether the user IDs in the user details table are all registered users.

Code Table Filter Condition: Set the filter condition for the partitioned table to filter data.

Field Format Validation:

Content Recognition Form: Options include Detection Expression, Regular Expression, String Matching (%), Starts With, Contains, Ends With. Expressions are used to match data that meets the rules.

Content Detection Pattern - Detection Expression: Enter an SQL expression function. Example:

${column}>0, where${column}is a built-in parameter.Content Detection Pattern - Regular Expression: Enter a regular expression in the input box. For example, to match all names that contain "test", define the regular expression as

.*test.*.Content Recognition Form-String Matching (%): Standard like expression, using % to represent a wildcard character. For example, to match data starting with "a", enter "a%".

Content Recognition Form-Starts With: Enter the string to be matched, and it will automatically append % at the end according to the pattern. For example: "a%".

Content Recognition Form-Contains: Enter the string to be matched, and it will automatically append % at the beginning and end according to the pattern. For example: "%a%".

Content Recognition Form-Ends With: Enter the string to be matched, and it will automatically append % at the beginning according to the pattern. For example: "%a".

NoteDetection expressions support using functions, but you need to ensure that the database supports the function during execution.

Field Value Domain Validation:

Value Domain Type: Choose based on actual business needs.

Text: Validates the validity of text. Make sure that the field to be validated is of the text type. You must set the Range. You can select Enumeration or Interval. Example of validation using an enumeration: To compare "Zhang San" and "Li Si", the generated validation SQL statement is

x in ("Zhang San", "Li Si").Numeric: Validates the validity of numbers. Make sure that the field to be validated is of a numeric type. You must set the Range. You can select Enumeration or Interval. Example of validation using an enumeration: To compare 3, 6, and 9, the generated validation SQL statement is

x in (3, 6, 9).Date: Used to validate the validity of dates. Ensure that the validation field is of date type (date).

Timestamp: Used to validate the validity of timestamps. Ensure that the validation field is of timestamp type (timestamp).

Custom: Used to validate multiple formats and supports using functions. The content entered will be directly executed on the data source.

You need to set the Value Domain Range, supporting Enumeration or Interval Setting.

Example of validation using an enumeration: To compare 3, 6, and 9, the generated validation SQL statement is

x in (3, 6, 9).Example of validation using an interval: The range is from the minimum value y1 to the maximum value y2. If the field to be compared is x, the final validation SQL statement is

x >= y1 and x <= y2.NoteCustom field formats, such as 10 and "10", may have different execution results in different data sources.

Ensure that the functions used are valid in the data source for quality validation.

Column Length Validation:

Field Length: Set the length range. Supports Enumeration or Interval Setting.

Example of validation using an enumeration: To compare 3, 6, and 9, the generated validation SQL statement is

x in (3, 6, 9).Example of validation using an interval: The range is from the minimum value y1 to the maximum value y2. If the field to be compared is x, the final validation SQL statement is

x >= y1 and x <= y2.

Timeliness

When Template Type is selected as Timeliness-Time Interval Comparison, Timeliness-two Table Time Field Comparison, Timeliness-time Function Comparison, the corresponding parameters are as follows.

Validation Item: Define an expression based on the validation field. The built-in parameter for the validation item is

${column}.Comparison Item: The expression for time comparison. The built-in parameters are

${column}and${bizdate}.Define an expression based on the comparison field.

${column}is the built-in parameter for the comparison item. Examples:${column} and substr(${column}).If you select

${bizdate}for the date function comparison, it is recognized as the data timestamp.

Time Tolerance: Set the value of the validation item minus the comparison item. Take the value before the decimal point of the unit item without rounding. For example, if the delivery date and order date need to be on the same day, Validation Item - Comparison Item can be set to less than 1 day.

Custom SQL

When Template Type is selected as Sql, different templates require different template configuration parameters, as follows.

Custom Statistic Validation: Customize the metric processing caliber to be validated. The result must be a number, such as validating the sales amount of the day.

Sql: Custom SQL metric statistical logic. Code examples are as follows:

Single Table Query

select sum(${t1.c1}) from ${t1} where ds=${bizdate};Subquery

select sum(${t1.c1}) from (select ${t1.c1} from ${t1} where ds=${bizdate}) a;Multi-Table Query

select sum(${t1.c1}) from ${t1} join ${t2} on $[t1.id]=$[t2.id] where $[t1.ds]=${bizdate};

Custom Detail Value Validation: Specify the definition caliber of normal and abnormal data content through custom SQL. You can configure total row count SQL, abnormal row count SQL, and abnormal data SQL.

Total Row Count SQL: Required. Define the SQL statistical logic for the total row count metric. The result must be a number. After filling in, you can configure statistical metrics such as normal rate and abnormal rate. The total row count code example is as follows:

select count(*) from ${t1} where ds=${bizdate};Abnormal Row Count SQL: Required. Define the SQL statistical logic for the abnormal row count metric. The result must be a number and must have a condition for abnormal data. After filling in, you can count the abnormal row count. After filling in the total count, you can also count the abnormal rate. The abnormal row count code example is as follows:

select count(*) from ${t1} where ds=${bizdate} and ${t1.c1}<0;Abnormal Data SQL: Optional. Define the SQL statistical logic for the abnormal data metric (single field). After filling in, you can use the abnormal archiving feature. The abnormal data code example is as follows:

select ${t1.c1} as dataphin_quality_error_data from ${t1} where ds=${bizdate} and ${t1.c1}<0;

Parse Variables: Click the Parse Variables button. The system parses the variable fields in the custom SQL. You can add a display name and a description for each variable field. The description can be up to 128 characters long.

The variable description appears as a tip next to the variable name during quality rule configuration to help you enter the correct value. The variable `t1` defaults to the current table being validated and is not parsed.

NoteSQL supports multi-table queries and subqueries.

Supports fixed table names and field names. Dataphin tables support referencing physical tables using project names or referencing logical tables using section names.

Supports using variables to replace table names or field names.

Specify a table: Click Insert Table Name 1 in the editor to quickly enter a table name variable from

${t1} to ${t5}. You can specify up to five data tables. The variables are not case-sensitive.Specify a field of a table: Click Insert Field Name 1 Of Table 1 Only in the editor to quickly enter the field name variable of the table, such as

${t1.c1}.Specify a table and a field: Click Insert Table Name 1 + Field Name 1 in the editor to quickly enter the table name and field name variable, such as

$[t1.c1].

Only the above variable writing methods are supported. Other variable forms will be uniformly parsed as field variables of the validation table, such as ${t6}, ${table1}, ${a.b}, etc. Please avoid using other variable expressions.

Parameter Checking

Enter the SET parameters for the quality rule. The parameters cannot exceed 1,024 characters. Example:

set hive.execution.engine=mr.NoteParameter settings are only effective for quality rules of MaxCompute and Hive type data tables. Other types of data tables will ignore this parameter configuration when referencing this template to configure quality rules.

Effective for all quality rules created based on this template. You can view the final executed SQL statement in Preview SQL.

Click Preview SQL to check the SQL preview of the rule template.

NoteUse variable placeholders for any unfilled configuration fields in the template, such as ${table1}, ${column1}.

To finalize the creation, click OK.

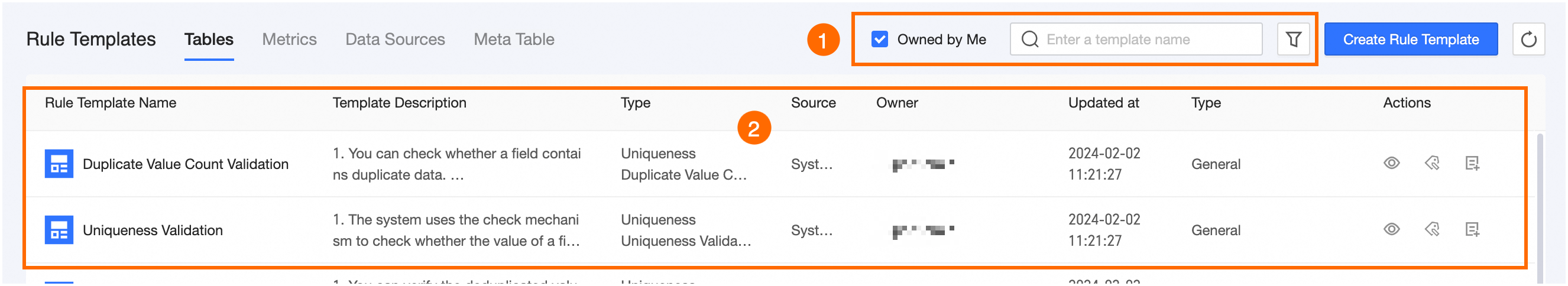

View rule template list

Area | Description |

①Filter and Search Area | You can perform a fuzzy search based on the template name. You can quickly filter My Responsible templates, or perform precise filtering based on template type, template source, data source type, or template owner. |

②List Area | Displays the name, template description, template type, template source, template owner, update time, and data source type information of the rule template. Different types of templates support different operations. Custom Templates (Dataphin Data Table, Global Data Table): Support viewing references, editing, adding quality rules, viewing template details, cloning, changing owner, and deleting operations. System Templates (Dataphin Data Table, Global Data Table, Metric): View references, edit template parameters, and add quality rules operations. System Templates (Data Source, Real-Time Meta Table): Support viewing references and adding quality rules operations.

|

What to do next

Once you have created the rule template, you can set up quality rules using this template. For more information, see create quality rules.