You can configure the SelectDB output component to write data read from external databases to SelectDB, or copy and push data from storage systems connected to the big data platform to SelectDB for data integration and reprocessing. This topic describes how to configure the SelectDB output component.

Prerequisites

A SelectDB data source is created. For more information, see Create a SelectDB data source.

The account used to configure the SelectDB output component properties must have the write-through permission on the data source. If you do not have the permission, you need to request the data source permission. For more information, see Request data source permissions.

Procedure

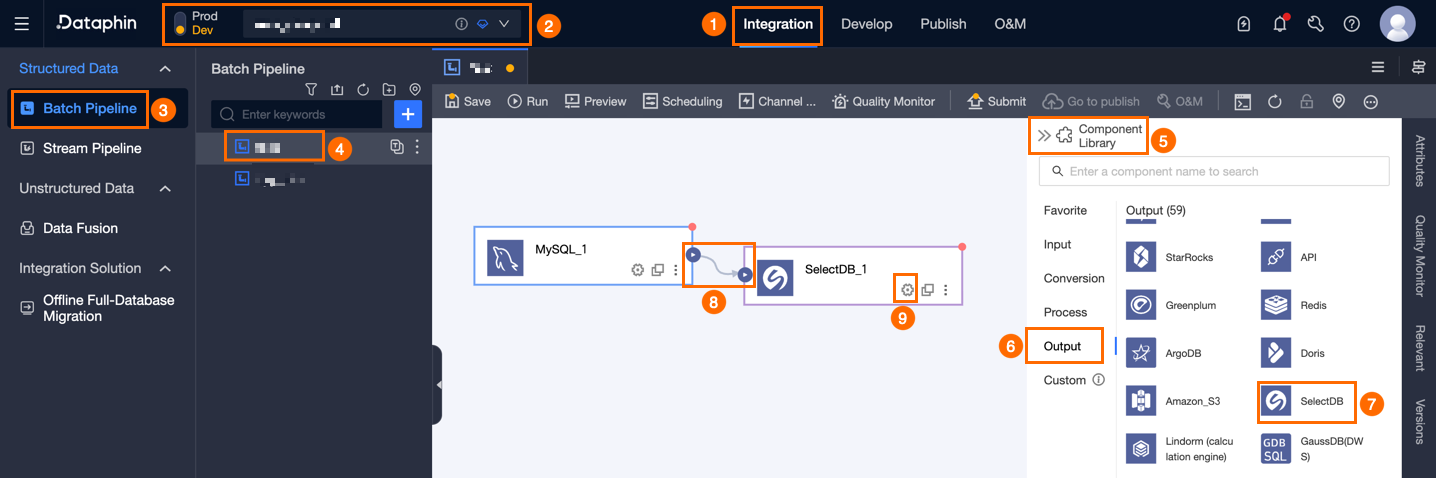

In the top navigation bar of the Dataphin homepage, choose Develop > Data Integration.

In the top navigation bar of the Integration page, select a project (In Dev-Prod mode, you need to select an environment).

In the navigation pane on the left, click Batch Pipeline. In the Batch Pipeline list, click the offline pipeline that you want to develop to open its configuration page.

Click Component Library in the upper-right corner of the page to open the Component Library panel.

In the navigation pane on the left of the Component Library panel, select Outputs. Find the SelectDB component in the output component list on the right and drag it to the canvas.

Click and drag the

icon of the target input, transform, or flow component to connect it to the current SelectDB output component.

icon of the target input, transform, or flow component to connect it to the current SelectDB output component.Click the

icon on the SelectDB output component to open the SelectDB Output Configuration dialog box.

icon on the SelectDB output component to open the SelectDB Output Configuration dialog box.

In the SelectDB Output Configuration dialog box, configure the parameters.

Parameter

Description

Basic Settings

Step Name

The name of the SelectDB output component. Dataphin automatically generates a step name. You can also modify it based on your business scenario. The name must meet the following requirements:

It can contain only Chinese characters, letters, underscores (_), and digits.

It can be up to 64 characters in length.

Datasource

The data source dropdown list displays all SelectDB data sources, including those for which you have the write-through permission and those for which you do not. Click the

icon to copy the current data source name.

icon to copy the current data source name.For data sources for which you do not have the write-through permission, you can click Request next to the data source to request the write-through permission. For more information, see Request data source permissions.

If you do not have a SelectDB data source, click Create Data Source to create one. For more information, see Create a SelectDB data source.

Table

Select the destination table for output data. You can enter a keyword to search for tables or enter the exact table name and click Exact Match. After you select a table, the system automatically checks the table status. Click the

icon to copy the name of the currently selected table.

icon to copy the name of the currently selected table.If the SelectDB data source does not have a destination table for data synchronization, you can use the one-click table creation feature to quickly generate a destination table. To create a table with one click, perform the following steps:

Click Create Table With One Click. Dataphin automatically matches the code for creating the destination table, including the destination table name (default is the source table name), field types (initially converted based on Dataphin fields), and other information.

You can modify the SQL script for creating the destination table as needed, and then click Create.

After the destination table is created, Dataphin automatically sets it as the destination table for output data. The one-click table creation feature is used to create destination tables for data synchronization in development and production environments. Dataphin selects the production environment for table creation by default. If a table with the same name and structure already exists in the production environment, you do not need to select table creation for the production environment.

NoteIf a table with the same name exists in the development or production environment, Dataphin will report an error when you click Create.

When there are no matches, you can still perform integration based on a manually entered table name.

Data Format

You can select CSV or JSON.

If you select CSV, you also need to configure CSV import column delimiter and CSV import row delimiter.

CSV Import Column Delimiter (optional)

For StreamLoad CSV imports, you can configure the column delimiter. The default is

_@dp@_. Do not specify the delimiter if you use the default value. If your data contains_@dp@_, you must use a different character as a custom delimiter.CSV Import Row Delimiter (optional)

For StreamLoad CSV imports, you can configure the row delimiter. The default is

_#dp#_. Do not specify the delimiter if you use the default value. If your data contains_#dp#_, you must use a different character as a custom delimiter.Batch Write Data Volume (optional)

The size of data to be written at once. You can also set Batch Write Count. The system will write data when either limit is reached. The default is 32M.

Batch Write Count (optional)

The default is 2048 records. When synchronizing data, the system uses a batch write strategy with parameters including Batch Write Count and Batch Write Data Volume.

When the accumulated data reaches either limit (batch write data volume or batch write count), the system considers a batch of data to be full and immediately writes this batch of data to the destination at once.

It is recommended to set the batch write data volume to 32MB. For the batch write count limit, you can adjust it flexibly based on the actual size of a single record to fully utilize the advantages of batch writing. For example, if a single record is about 1KB in size, you can set the batch write data volume to 16MB and set the batch write count to a value greater than the result of 16MB divided by 1KB per record (greater than 16384 records), such as 20000 records. With this configuration, the system will trigger batch writes based on the batch write data volume, writing data whenever the accumulated data reaches 16MB.

Preparation Statement (optional)

The SQL script to be executed on the database before data import.

For example, to ensure continuous service availability, before the current step writes data, it first creates a destination table Target_A, then executes writing to Target_A. After the current step completes writing data, it renames the continuously serving table Service_B to Temp_C, then renames Target_A to Service_B, and finally deletes Temp_C.

Completion Statement (optional)

The SQL script to be executed on the database after data import.

Field Mapping

Input Fields

Displays the input fields based on the output of the upstream component.

Output Fields

Displays the output fields. You can perform the following operations:

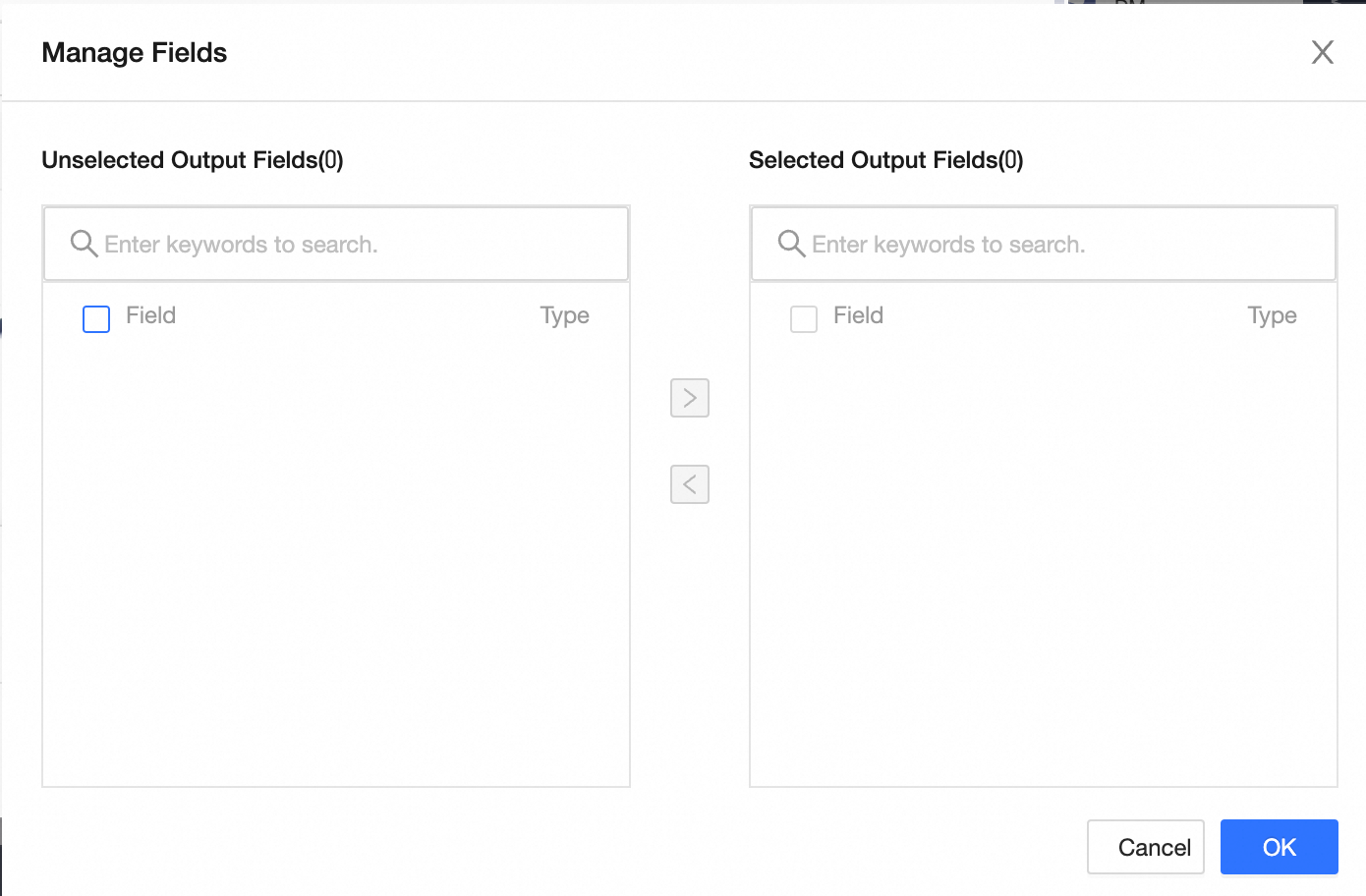

Field management: Click Field Management to select output fields.

Click the

icon to move Selected Input Fields to Unselected Input Fields.

icon to move Selected Input Fields to Unselected Input Fields.Click the

icon to move Unselected Input Fields to Selected Input Fields.

icon to move Unselected Input Fields to Selected Input Fields.

Batch add: Click Batch Add to configure in JSON, TEXT, or DDL format.

Batch configuration in JSON format, for example:

// Example: [{ "name": "user_id", "type": "String" }, { "name": "user_name", "type": "String" }]Note`name` specifies the name of the imported field. `type` specifies the field type after import. For example,

"name":"user_id","type":"String"imports the field named `user_id` and sets its type to `String`.Batch configuration in TEXT format, for example:

// Example: user_id,String user_name,StringThe row delimiter is used to separate information for each field. The default is a line feed (\n), and it supports line feed (\n), semicolon (;), and period (.).

The column delimiter is used to separate field names from field types. The default is a comma (,).

Batch configuration in DDL format, for example:

CREATE TABLE tablename ( id INT PRIMARY KEY, name VARCHAR(50), age INT );

Create a new output field: Click +Create Output Field, fill in the Column and select the Type as prompted. After completing the configuration for the current row, click the

icon to save.

icon to save.

Mapping

Mapping relationships are used to map input fields from the source table to output fields in the destination table. Mapping relationships include Same-name Mapping and Same-row Mapping. The applicable scenarios are as follows:

Same-name mapping: Maps fields with the same name.

Same-row mapping: Maps fields in the same row when the field names in the source and destination tables are different but the data in corresponding rows needs to be mapped.

Click OK to complete the property configuration of the SelectDB Output Component.