The ack-koordinator component allows you to dynamically modify the resource parameters of a running pod, including the CPU parameters, memory parameters, and disk IOPS limit. This topic describes how to use ack-koordinator to dynamically modify the resource parameters of a pod.

Prerequisites

A kubectl client is connected to the cluster. For more information, see Obtain the kubeconfig file of a cluster and use kubectl to connect to the cluster.

ack-koordinator (formerly known as ack-slo-manager) 0.5.0 or later is installed. For more information about how to install ack-koordinator, see ack-koordinator (ack-slo-manager).

Note: ack-koordinator is upgraded and optimized based on resource-controller. If you use resource-controller, you must uninstall it before you install ack-koordinator. For more information, see Uninstall resource-controller.

Background information

If you want to modify container parameters for a pod that is running in a Kubernetes cluster, you must modify the PodSpec parameter and submit the change. However, the pod is deleted and recreated after you submit the change. ack-koordinator allows you to dynamically modify the resource parameters of a pod without the need to restart the pod.

Scenarios

This feature is suitable for scenarios where temporary adjustment is required. For example, if the memory usage of a pod increases, you can increase the memory limit of the pod to avoid triggering the out of memory (OOM) killer. For routine O&M operations, we recommend that you use the relevant features that are provided by Container Service for Kubernetes (ACK). For more information, see CPU Burst, Topology-aware CPU scheduling, and Resource profiling.

Modify the memory limit

To dynamically modify the memory limit of a pod by using cgroups, perform the following steps:

You can modify the memory limit only in Kubernetes 1.22 and earlier. Other resource parameters can be modified in all Kubernetes versions.

If you need to adjust the CPU limit for common requirements, we recommend that you use the CPU Burst feature, which automatically adjusts the CPU limit of the pod. For more information, see CPU Burst. If you want to dynamically modify the CPU limit of the pod, see Upgrade from resource-controller to ack-koordinator.

1. Create a pod-demo.yaml file with the following YAML template:

       apiVersion: v1
       kind: Pod
       metadata:
         name: pod-demo
       spec:
         containers:
         - name: pod-demo
           image: polinux/stress
           resources:
             requests:
               cpu: 1
               memory: "50Mi"
             limits:
               cpu: 1
               memory: "1Gi" # Set the memory limit to 1 GB.
           command: ["stress"]
           args: ["--vm", "1", "--vm-bytes", "256M", "-c", "2", "--vm-hang", "1"]

2. Run the following command to deploy the pod-demo pod in the cluster:

       kubectl apply -f pod-demo.yaml

3. Run the following command to query the original memory limit of the container:

       # The actual path consists of the UID of the pod and the ID of the container.
       cat /sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-podaf44b779_41d8_43d5_a0d8_8a7a0b17****.slice/memory.limit_in_bytes

   Expected output:

       # In this example, 1073741824 is returned, which is the result of 1 × 1024 × 1024 × 1024. This indicates that the original memory limit of the container is 1 GB.
       1073741824

   The output shows that the original memory limit of the container is 1 GB, which is the same as the value of the spec.containers.resources.limits.memory parameter in the YAML file that you created in Step 1.

4. Create a cgroups-sample.yaml file with the following YAML template:

   The template is used to modify the memory limit of the container.

       apiVersion: resources.alibabacloud.com/v1alpha1
       kind: Cgroups
       metadata:
         name: cgroups-sample
       spec:
         pod:
           name: pod-demo
           namespace: default
           containers:
           - name: pod-demo
             memory: 5Gi # Change the memory limit of the pod to 5 GB.

5. Run the following command to deploy the cgroups-sample object in the cluster:

       kubectl apply -f cgroups-sample.yaml

6. Run the following command to query the new memory limit of the container after you submit the change:

       # The actual path consists of the UID of the pod and the ID of the container.
       cat /sys/fs/cgroup/memory/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-podaf44b779_41d8_43d5_a0d8_8a7a0b17****.slice/memory.limit_in_bytes

   Expected output:

       # In this example, 5368709120 is returned, which is the result of 5 × 1024 × 1024 × 1024. This indicates that the new memory limit of the container is 5 GB.
       5368709120

   The output shows that the memory limit of the container is 5 GB, which is the same as the value of the spec.pod.containers.memory parameter in the YAML file that you created in Step 4.

7. Run the following command to query the status of the pod:

       kubectl describe pod pod-demo

   Expected output:

       Events:
         Type    Reason     Age   From                                   Message
         ----    ------     ----  ----                                   -------
         Normal  Scheduled  13m   default-scheduler                      Successfully assigned default/pod-demo to cn-zhangjiakou.192.168.3.238
         Normal  Pulling    13m   kubelet, cn-zhangjiakou.192.168.3.238  Pulling image "polinux/stress"
         Normal  Pulled     13m   kubelet, cn-zhangjiakou.192.168.3.238  Successfully pulled image "polinux/stress"

   The output shows that the pod runs as normal and no restart events are generated.
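The byte counts in the expected outputs above follow from the binary suffixes of the memory quantities (1Gi = 1024 × 1024 × 1024 bytes). The following sketch shows one way to compute the value that you should expect in memory.limit_in_bytes before you compare it on the node. The helper name quantity_to_bytes is hypothetical and is not part of ack-koordinator or kubectl. It handles only whole numbers with a Ki, Mi, or Gi suffix.

```shell
# Convert a memory quantity with a binary suffix (Ki, Mi, Gi) to bytes.
# Hypothetical helper for illustration only: it accepts whole numbers with
# a Ki, Mi, or Gi suffix, or a plain byte count, and rejects anything else.
quantity_to_bytes() {
  case "$1" in
    *Ki) echo $(( ${1%Ki} * 1024 )) ;;
    *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
    *[!0-9]*|'') echo "unsupported quantity: $1" >&2; return 1 ;;
    *)   echo "$1" ;;
  esac
}

quantity_to_bytes 1Gi   # original limit in pod-demo.yaml
quantity_to_bytes 5Gi   # new limit in cgroups-sample.yaml
```

The two calls print 1073741824 and 5368709120, which match the values that the cat commands return before and after the Cgroups object is applied.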

Change the vCores that are bound to a pod

You can use cgroups to bind vCores to a pod by specifying the serial numbers of vCores. To change the vCores that are bound to a pod, perform the following steps:

In common cases, we recommend that you use topology-aware CPU scheduling to manage CPU resources for CPU-sensitive workloads. For more information, see Topology-aware CPU scheduling.

1. Create a pod-cpuset-demo.yaml file with the following YAML template:

       apiVersion: v1
       kind: Pod
       metadata:
         name: pod-cpuset-demo
       spec:
         containers:
         - name: pod-cpuset-demo
           image: polinux/stress
           resources:
             requests:
               memory: "50Mi"
             limits:
               memory: "1000Mi"
               cpu: 0.5
           command: ["stress"]
           args: ["--vm", "1", "--vm-bytes", "556M", "-c", "2", "--vm-hang", "1"]

2. Run the following command to deploy the pod-cpuset-demo pod in the cluster:

       kubectl apply -f pod-cpuset-demo.yaml

3. Run the following command to query the vCores that are bound to the container:

       # The actual path consists of the UID of the pod and the ID of the container.
       cat /sys/fs/cgroup/cpuset/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-podf9b79bee_eb2a_4b67_befe_51c270f8****.slice/cri-containerd-aba883f8b3ae696e99c3a920a578e3649fa957c51522f3fb00ca943dc2c7****.scope/cpuset.cpus

   Expected output:

       0-31

   The output shows that the serial numbers of the vCores that can be used by the container range from 0 to 31 before you bind vCores to the container.

4. Create a cgroups-sample-cpusetpod.yaml file with the following YAML template.

   The YAML file specifies the vCores that are bound to the pod.

       apiVersion: resources.alibabacloud.com/v1alpha1
       kind: Cgroups
       metadata:
         name: cgroups-sample-cpusetpod
       spec:
         pod:
           name: pod-cpuset-demo
           namespace: default
           containers:
           - name: pod-cpuset-demo
             cpuset-cpus: 2-3 # Bind vCore 2 and vCore 3 to the pod.

5. Run the following command to deploy the cgroups-sample-cpusetpod object in the cluster:

       kubectl apply -f cgroups-sample-cpusetpod.yaml

6. Run the following command to query the vCores that are bound to the container after you submit the change:

       # The actual path consists of the UID of the pod and the ID of the container.
       cat /sys/fs/cgroup/cpuset/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-podf9b79bee_eb2a_4b67_befe_51c270f8****.slice/cri-containerd-aba883f8b3ae696e99c3a920a578e3649fa957c51522f3fb00ca943dc2c7****.scope/cpuset.cpus

   Expected output:

       2-3

   The output shows that vCore 2 and vCore 3 are bound to the container. The vCores that are bound to the container are the same as the vCores that are specified in the spec.pod.containers.cpuset-cpus parameter in the YAML file that you created in Step 4.
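The cgroup paths in the commands above differ for every pod and container. The following sketch shows how such a path can be assembled. It assumes that the node uses cgroup v1, the systemd cgroup driver, and containerd, which is the layout that the example paths suggest. Other configurations use different layouts. The function name container_cgroup_dir, the pod UID, and the container ID in the example are hypothetical.

```shell
# Build the cgroup v1 directory of a container.
# Assumptions (not confirmed by the ack-koordinator documentation): systemd
# cgroup driver and containerd runtime, as in the example paths of this topic.
container_cgroup_dir() {
  subsystem=$1                               # memory, cpuset, or blkio
  qos=$2                                     # guaranteed, burstable, or besteffort
  pod_uid=$(printf '%s' "$3" | tr '-' '_')   # slice names use "_" instead of "-"
  container_id=${4#containerd://}            # drop the runtime prefix if present
  case "$qos" in
    guaranteed) pod_slice="kubepods.slice/kubepods-pod${pod_uid}.slice" ;;
    *) pod_slice="kubepods.slice/kubepods-${qos}.slice/kubepods-${qos}-pod${pod_uid}.slice" ;;
  esac
  echo "/sys/fs/cgroup/${subsystem}/${pod_slice}/cri-containerd-${container_id}.scope"
}

# Hypothetical pod UID and container ID, for illustration only.
container_cgroup_dir cpuset burstable 11111111-2222-3333-4444-555555555555 containerd://abc123
```

On a real cluster, the inputs can be read from the pod object with kubectl get pod pod-cpuset-demo -o jsonpath and the fields .metadata.uid, .status.qosClass (convert the value to lowercase), and .status.containerStatuses[0].containerID. Append the file name, such as cpuset.cpus, to the directory that the function prints.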

Modify Deployment-level parameters

To modify Deployment-level parameters by using cgroups, perform the following steps:

1. Create a go-demo.yaml file with the following YAML template.

   The Deployment creates two pods that run a stress testing program. Each pod requests 0.5 vCores.

       apiVersion: apps/v1
       kind: Deployment
       metadata:
         name: go-demo
         labels:
           app: go-demo
       spec:
         replicas: 2
         selector:
           matchLabels:
             app: go-demo
         template:
           metadata:
             labels:
               app: go-demo
           spec:
             containers:
             - name: go-demo
               image: polinux/stress
               command: ["stress"]
               args: ["--vm", "1", "--vm-bytes", "556M", "-c", "1", "--vm-hang", "1"]
               imagePullPolicy: Always
               resources:
                 requests:
                   cpu: 0.5
                 limits:
                   cpu: 0.5

2. Run the following command to deploy the go-demo Deployment in the cluster:

       kubectl apply -f go-demo.yaml

3. Create a cgroups-cpuset-sample.yaml file with the following YAML template.

   The YAML file specifies the vCores that are bound to the pods.

       apiVersion: resources.alibabacloud.com/v1alpha1
       kind: Cgroups
       metadata:
         name: cgroups-cpuset-sample
       spec:
         deployment:
           name: go-demo
           namespace: default
           containers:
           - name: go-demo
             cpuset-cpus: 2,3 # Bind vCore 2 and vCore 3 to the pods.

4. Run the following command to deploy the cgroups-cpuset-sample object in the cluster:

       kubectl apply -f cgroups-cpuset-sample.yaml

5. Run the following command to query the vCores that are bound to the container after you submit the change:

       # The actual path consists of the UID of the pod and the ID of the container.
       cat /sys/fs/cgroup/cpuset/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-pod06de7408_346a_4d00_ba25_02833b6c****.slice/cri-containerd-733a0dc93480eb47ac6c5abfade5c22ed41639958e3d304ca1f85959edc3****.scope/cpuset.cpus

   Expected output:

       2-3

   The output shows that vCore 2 and vCore 3 are bound to the containers. The vCores that are bound to the containers are the same as the vCores that are specified in the spec.deployment.containers.cpuset-cpus parameter in the YAML file that you created in Step 3.
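The Cgroups object above specifies the vCores as 2,3, but the cpuset.cpus file reports 2-3. Both notations describe the same set because the kernel prints consecutive vCores as a range. The following sketch expands either notation into individual vCore numbers so that the two can be compared. The helper name expand_cpuset is hypothetical and assumes well-formed input.

```shell
# Expand a cpuset list such as "2-3" or "0,2-3" into individual vCore numbers.
# Hypothetical helper for illustration only. The body runs in a subshell so
# that the IFS change does not leak into the calling shell.
expand_cpuset() (
  IFS=,
  out=""
  for part in $1; do
    case "$part" in
      *-*)
        i=${part%-*}      # first vCore of the range
        end=${part#*-}    # last vCore of the range
        while [ "$i" -le "$end" ]; do
          out="$out $i"
          i=$((i + 1))
        done
        ;;
      *) out="$out $part" ;;
    esac
  done
  echo "${out# }"
)

expand_cpuset 2,3   # value in cgroups-cpuset-sample.yaml
expand_cpuset 2-3   # value reported by cpuset.cpus
```

Both calls print 2 3, which confirms that the value in the Cgroups object and the value on the node refer to the same vCores.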

Modify the disk IOPS

If you want to modify the disk IOPS for a pod, the worker node that hosts the pod must use Alibaba Cloud Linux as its operating system. To modify the disk IOPS for a pod, perform the following steps:

If you specify a blkio limit in cgroup v1, the OS kernel limits only the direct I/O of a pod. The OS kernel does not limit the buffered I/O of a pod. To limit the buffered I/O of a pod, use cgroup v2 or enable the cgroup writeback feature for Alibaba Cloud Linux. For more information, see Enable the cgroup writeback feature.

Create an I/O-intensive application with the following YAML template.

Mount the host directory /mnt to the pod. The device name of the corresponding disk is /dev/vda1.

apiVersion: apps/v1 kind: Deployment metadata: name: fio-demo labels: app: fio-demo spec: selector: matchLabels: app: fio-demo template: metadata: labels: app: fio-demo spec: containers: - name: fio-demo image: registry.cn-zhangjiakou.aliyuncs.com/acs/fio-for-slo-test:v0.1 command: ["sh", "-c"] # Use Fio to perform write stress tests on the disk. args: ["fio -filename=/data/test -direct=1 -iodepth 1 -thread -rw=write -ioengine=psync -bs=16k -size=2G -numjobs=10 -runtime=12000 -group_reporting -name=mytest"] volumeMounts: - name: pvc mountPath: /data # The disk volume is mounted to the /data path. volumes: - name: pvc hostPath: path: /mntRun the following command to deploy the fio-demo Deployment in the cluster:

kubectl apply -f fio-demo.yamlDeploy a Cgroups object that is used to control the disk IOPS to limit the throughput of the pod.

Create a cgroups-sample-fio.yaml file with the following YAML template.

The YAML file specifies the IOPS limit in bit/s of the /dev/vda1 device.

apiVersion: resources.alibabacloud.com/v1alpha1 kind: Cgroups metadata: name: cgroups-sample-fio spec: deployment: name: fio-demo namespace: default containers: - name: fio-demo blkio: # The IOPS limit in bit/s. Example: 1048576, 2097152, or 3145728. device_write_bps: [{device: "/dev/vda1", value: "1048576"}]Run the following command to query the disk IOPS limit after you submit the change:

# The actual path consists of the UID of the pod and the ID of the container. cat /sys/fs/cgroup/blkio/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-pod0840adda_bc26_4870_adba_f193cd00****.slice/cri-containerd-9ea6cc97a6de902d941199db2fcda872ddd543485f5f987498e40cd706dc****.scope/blkio.throttle.write_bps_deviceExpected output:

253:0 1048576The output shows that the IOPS limit of the disk is

1048576bit/s.

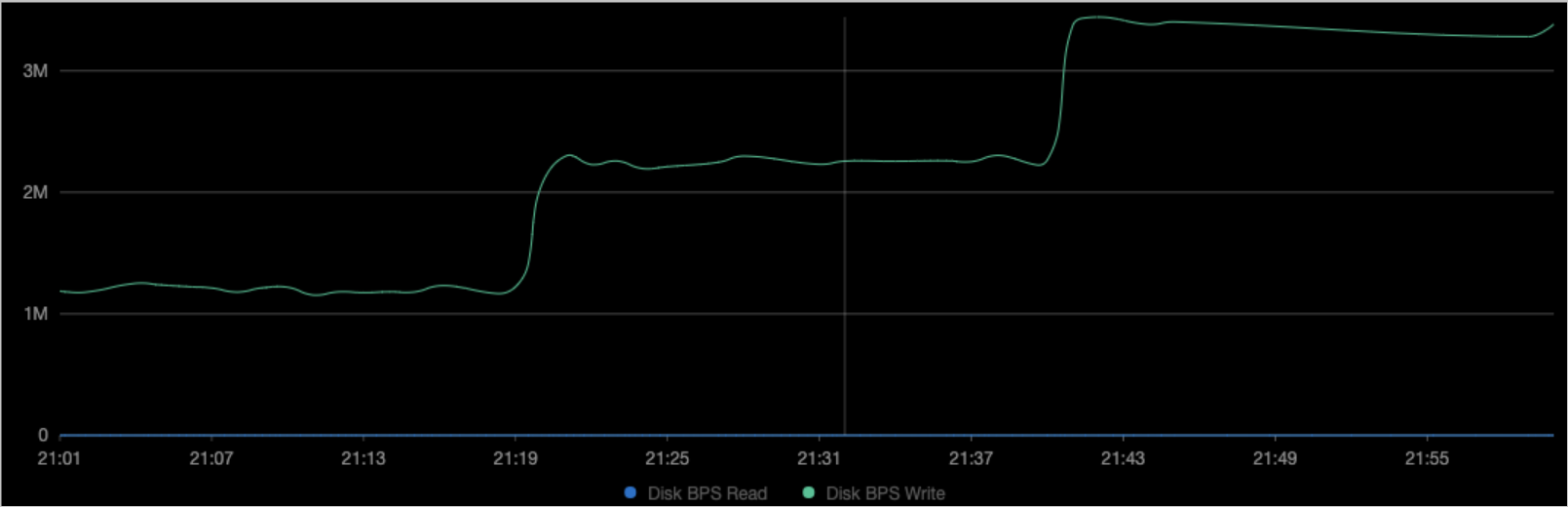

View the monitoring data of the node.

The figures show that the disk IOPS limit is changed to the value that is specified in the

The figures show that the disk IOPS limit is changed to the value that is specified in the device_write_bpsparameter of the YAML file that you created in Step 3. The pod is not restarted after you submit the change.
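Each line of the blkio.throttle.write_bps_device file in cgroup v1 consists of a device number in major:minor form followed by a rate in bytes per second. The following sketch turns such a line into a more readable form. The helper name describe_bps_limit is hypothetical, and the MiB/s value is rounded down to a whole number.

```shell
# Describe one entry of a blkio.throttle.*_bps_device file (cgroup v1).
# Hypothetical helper for illustration only. It expects a single line in the
# form "<major>:<minor> <bytes per second>".
describe_bps_limit() {
  device=${1%% *}   # text before the first space: device number
  bytes=${1##* }    # text after the last space: limit in bytes per second
  echo "device ${device}: $((bytes / 1024 / 1024)) MiB/s (${bytes} B/s)"
}

describe_bps_limit "253:0 1048576"
```

For the expected output in this example, the function prints device 253:0: 1 MiB/s (1048576 B/s), which corresponds to the value 1048576 that is set in the device_write_bps parameter.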