This topic describes how to use Arena to deploy a TensorFlow model as an inference service.

Prerequisites

Procedure

In this topic, a BERT model that is trained with TensorFlow 1.15 and exported in the SavedModel format is deployed as an inference service.

Run the following command to query the GPU resources available in the cluster:

    arena top node

Expected output:

    NAME                      IPADDRESS      ROLE    STATUS  GPU(Total)  GPU(Allocated)
    cn-beijing.192.168.0.100  192.168.0.100  <none>  Ready   1           0
    cn-beijing.192.168.0.101  192.168.0.101  <none>  Ready   1           0
    cn-beijing.192.168.0.99   192.168.0.99   <none>  Ready   1           0
    ---------------------------------------------------------------------------------------------------
    Allocated/Total GPUs of nodes which own resource nvidia.com/gpu In Cluster: 0/3 (0.0%)

The preceding output shows that the cluster has three GPU-accelerated nodes on which you can deploy the model.
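If you want a script to check free GPU capacity instead of reading the table by eye, you can parse the table. The following Python sketch assumes the column layout shown in the preceding sample: NAME, IPADDRESS, ROLE, STATUS, GPU(Total), and GPU(Allocated), followed by a dashed separator. Other Arena versions may print different columns. The function name idle_gpus_per_node is hypothetical.

```python
"""Parse the table printed by `arena top node` and report idle GPUs per node.

Assumption: the columns match the sample output in this topic; one node per row,
followed by a dashed separator and a cluster summary line.
"""
from typing import Dict

SAMPLE = """\
NAME                      IPADDRESS      ROLE    STATUS  GPU(Total)  GPU(Allocated)
cn-beijing.192.168.0.100  192.168.0.100  <none>  Ready   1           0
cn-beijing.192.168.0.101  192.168.0.101  <none>  Ready   1           0
cn-beijing.192.168.0.99   192.168.0.99   <none>  Ready   1           0
---------------------------------------------------------------------------------------------------
Allocated/Total GPUs of nodes which own resource nvidia.com/gpu In Cluster: 0/3 (0.0%)
"""


def idle_gpus_per_node(top_node_output: str) -> Dict[str, int]:
    """Return {node name: unallocated GPUs} for nodes whose STATUS is Ready."""
    lines = top_node_output.strip().splitlines()
    header = lines[0].split()
    status_col = header.index("STATUS")
    total_col = header.index("GPU(Total)")
    alloc_col = header.index("GPU(Allocated)")
    idle: Dict[str, int] = {}
    for line in lines[1:]:
        if line.startswith("---"):
            break  # The dashed separator precedes the cluster summary.
        fields = line.split()
        if len(fields) != len(header) or fields[status_col] != "Ready":
            continue  # Skip malformed rows and nodes that are not Ready.
        idle[fields[0]] = int(fields[total_col]) - int(fields[alloc_col])
    return idle


if __name__ == "__main__":
    free = idle_gpus_per_node(SAMPLE)
    for node, count in free.items():
        print(f"{node}: {count} idle GPU(s)")
    print("Idle GPUs in cluster:", sum(free.values()))
```

The parser looks up columns by header name rather than position, so it keeps working if the column widths change.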

Upload the model to a bucket in Object Storage Service (OSS).

Important: This example shows how to upload the model to OSS from a Linux system. If you use another operating system, see ossutil.

Create a bucket named examplebucket.

Run the following command to create the bucket:

    ossutil64 mb oss://examplebucket

If the following output is displayed, the examplebucket bucket is created:

    0.668238(s) elapsed
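Bucket names are globally unique and restricted in form, so it can help to check a name before you run ossutil64 mb. The following Python sketch assumes the rules as commonly summarized:

- 3 to 63 characters.
- Only lowercase letters, digits, and hyphens.
- Must start and end with a lowercase letter or a digit.

"Bucket naming conventions" remains the authoritative source. The helper name is_valid_bucket_name is hypothetical.

```python
"""Check a bucket name against the OSS bucket naming rules before `ossutil64 mb`.

Assumption: the rules are summarized as 3 to 63 characters; only lowercase
letters, digits, and hyphens; must start and end with a lowercase letter or digit.
See "Bucket naming conventions" for the authoritative list.
"""
import re

# First and last characters: letter or digit; 1 to 61 characters in between.
_BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9-]{1,61}[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if name satisfies the naming rules summarized above."""
    return _BUCKET_NAME.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["examplebucket", "Example-Bucket", "ab", "-examplebucket", "example-bucket-01"]:
        print(f"{candidate!r}: {is_valid_bucket_name(candidate)}")
```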

Upload the model to the examplebucket bucket:

    ossutil64 cp model.savedmodel oss://examplebucket
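TensorFlow Serving loads a model from integer-named version subdirectories of its model base path. Each version directory holds a SavedModel: saved_model.pb or saved_model.pbtxt, typically alongside a variables/ directory.

The arena serve tensorflow command later in this topic mounts the storage at /models and uses --model-path=/models/tensorflow with --version-policy=specific:1623831335. This sketch therefore assumes that the bucket ends up with a layout such as tensorflow/1623831335/saved_model.pb. You can check a local copy of the model before uploading it with the following Python sketch. The function name servable_versions is hypothetical.

```python
"""List the model versions that TensorFlow Serving could load from a base path.

TensorFlow Serving treats each integer-named subdirectory of the model base path
as a version; this sketch also requires saved_model.pb or saved_model.pbtxt in it.
"""
import re
import tempfile
from pathlib import Path
from typing import List


def servable_versions(model_base_path: str) -> List[int]:
    """Return sorted version numbers whose directory contains a SavedModel file."""
    versions = []
    for child in Path(model_base_path).iterdir():
        if not child.is_dir() or re.fullmatch(r"[0-9]+", child.name) is None:
            continue  # Only integer-named directories are treated as versions.
        if (child / "saved_model.pb").is_file() or (child / "saved_model.pbtxt").is_file():
            versions.append(int(child.name))
    return sorted(versions)


if __name__ == "__main__":
    # Hypothetical local layout mirroring --model-path=/models/tensorflow and
    # --version-policy=specific:1623831335 from the arena serve command.
    with tempfile.TemporaryDirectory() as root:
        version_dir = Path(root, "tensorflow", "1623831335")
        (version_dir / "variables").mkdir(parents=True)
        (version_dir / "saved_model.pb").touch()
        print(servable_versions(str(Path(root, "tensorflow"))))
```

If the list does not contain the version passed to --version-policy=specific, TensorFlow Serving has no matching version to load.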

Create a persistent volume (PV) and a persistent volume claim (PVC).

Create a file named Tensorflow.yaml based on the following template:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: model-csi-pv
    spec:
      capacity:
        storage: 5Gi
      accessModes:
        - ReadWriteMany
      persistentVolumeReclaimPolicy: Retain
      csi:
        driver: ossplugin.csi.alibabacloud.com
        volumeHandle: model-csi-pv   # The value must be the same as the name of the PV.
        volumeAttributes:
          bucket: "Your Bucket"
          url: "Your oss url"
          akId: "Your Access Key Id"
          akSecret: "Your Access Key Secret"
          otherOpts: "-o max_stat_cache_size=0 -o allow_other"
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: model-pvc
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 5Gi

The following table describes the parameters in the template.

| Parameter | Description |
| --- | --- |
| bucket | The name of the OSS bucket, which is globally unique in OSS. For more information, see Bucket naming conventions. |
| url | The URL that is used to access an object in the bucket. For more information, see Obtain the URL of a single object or the URLs of multiple objects. |
| akId, akSecret | The AccessKey ID and AccessKey secret that are used to access the OSS bucket. We recommend that you access the OSS bucket as a Resource Access Management (RAM) user. For more information, see Create an AccessKey pair. |
| otherOpts | Custom parameters for mounting the OSS bucket. Set -o max_stat_cache_size=0 to disable metadata caching. When metadata caching is disabled, the system retrieves the latest metadata from OSS each time it accesses objects in OSS. Set -o allow_other to allow other users to access the mounted OSS bucket. For more information about other parameters, see Custom parameters supported by ossfs. |

Run the following command to create a PV and a PVC:

    kubectl apply -f Tensorflow.yaml
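If you prefer to fill in the template from a script rather than edit the placeholders by hand, you can generate the same PV and PVC as a JSON document of kind List, which kubectl apply -f also accepts. The following Python sketch makes two assumptions:

- It reads the values from environment variables with hypothetical names: OSS_BUCKET, OSS_URL, OSS_AK_ID, and OSS_AK_SECRET.
- It falls back to the template placeholders when a variable is not set.

The function name oss_pv_and_pvc is also hypothetical.

```python
"""Build the PV and PVC from Tensorflow.yaml as a JSON List for kubectl.

Assumption: OSS_BUCKET, OSS_URL, OSS_AK_ID, and OSS_AK_SECRET are hypothetical
environment variable names; the template placeholders are used as defaults.
"""
import json
import os
from typing import Any, Dict


def oss_pv_and_pvc(bucket: str, url: str, ak_id: str, ak_secret: str,
                   pv_name: str = "model-csi-pv", pvc_name: str = "model-pvc",
                   size: str = "5Gi") -> Dict[str, Any]:
    """Return a v1 List that contains the OSS-backed PV and the PVC from the template."""
    pv = {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": pv_name},
        "spec": {
            "capacity": {"storage": size},
            "accessModes": ["ReadWriteMany"],
            "persistentVolumeReclaimPolicy": "Retain",
            "csi": {
                "driver": "ossplugin.csi.alibabacloud.com",
                "volumeHandle": pv_name,  # Must be the same as the PV name.
                "volumeAttributes": {
                    "bucket": bucket,
                    "url": url,
                    "akId": ak_id,
                    "akSecret": ak_secret,
                    "otherOpts": "-o max_stat_cache_size=0 -o allow_other",
                },
            },
        },
    }
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": pvc_name},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "resources": {"requests": {"storage": size}},
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [pv, pvc]}


if __name__ == "__main__":
    manifest = oss_pv_and_pvc(
        bucket=os.environ.get("OSS_BUCKET", "Your Bucket"),
        url=os.environ.get("OSS_URL", "Your oss url"),
        ak_id=os.environ.get("OSS_AK_ID", "Your Access Key Id"),
        ak_secret=os.environ.get("OSS_AK_SECRET", "Your Access Key Secret"),
    )
    print(json.dumps(manifest, indent=2))
```

For example, redirect the output to Tensorflow.json and run kubectl apply -f Tensorflow.json. As with the YAML template, the generated file contains the AccessKey secret in plain text.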

Run the following command to launch a TensorFlow Serving instance named bert-tfserving:

    arena serve tensorflow \
      --name=bert-tfserving \
      --model-name=chnsenticorp \
      --gpus=1 \
      --image=tensorflow/serving:1.15.0-gpu \
      --data=model-pvc:/models \
      --model-path=/models/tensorflow \
      --version-policy=specific:1623831335

The command uses the following parameters:

- --data mounts the model-pvc PVC to the /models directory of the container.
- --model-path specifies the model directory in the container.
- --version-policy=specific:1623831335 instructs TensorFlow Serving to load only version 1623831335 of the model.

Expected output:

    configmap/bert-tfserving-202106251556-tf-serving created
    configmap/bert-tfserving-202106251556-tf-serving labeled
    configmap/bert-tfserving-202106251556-tensorflow-serving-cm created
    service/bert-tfserving-202106251556-tensorflow-serving created
    deployment.apps/bert-tfserving-202106251556-tensorflow-serving created
    INFO[0003] The Job bert-tfserving has been submitted successfully
    INFO[0003] You can run `arena get bert-tfserving --type tf-serving` to check the job status

Run the following command to query all running services:

    arena serve list

The output indicates that only the bert-tfserving service is running:

    NAME            TYPE        VERSION       DESIRED  AVAILABLE  ADDRESS        PORTS
    bert-tfserving  Tensorflow  202106251556  1        1          172.16.95.171  GRPC:8500,RESTFUL:8501

Run the following command to query the details about the bert-tfserving service:

    arena serve get bert-tfserving

Expected output:

    Name:       bert-tfserving
    Namespace:  inference
    Type:       Tensorflow
    Version:    202106251556
    Desired:    1
    Available:  1
    Age:        4m
    Address:    172.16.95.171
    Port:       GRPC:8500,RESTFUL:8501

    Instances:
      NAME                                                             STATUS   AGE  READY  RESTARTS  NODE
      ----                                                             ------   ---  -----  --------  ----
      bert-tfserving-202106251556-tensorflow-serving-8554d58d67-jd2z9  Running  4m   1/1    0         cn-beijing.192.168.0.88

The preceding output shows that the model is successfully deployed by using TensorFlow Serving. Port 8500 is exposed for gRPC and port 8501 is exposed for HTTP.

By default, an inference service deployed by using arena serve tensorflow is exposed only through a cluster IP, which is reachable only from within the cluster. To access the inference service from outside the cluster, create an Internet-facing Ingress for it.

On the Clusters page, click the name of the cluster that you want to manage. In the left-side navigation pane, go to the Ingresses page.

At the top of the page, select the inference namespace from the Namespace drop-down list. This namespace is shown in the output of the arena serve get command.

Click Create Ingress in the upper-right corner of the page and configure the following parameters. For more information about Ingress parameters, see Create an NGINX Ingress.

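- Name: tensorflow is entered in this example. Kubernetes resource names must be lowercase.
- Rules:
  - Domain Name: Enter a custom domain name, such as test.example.com.
  - Mappings:
    - Path: The root path / is used in this example.
    - Rule: The default rule (ImplementationSpecific) is used in this example.
    - Service Name: Enter the name of the service that is returned by the kubectl get service -n inference command. In this example, the service is bert-tfserving-202106251556-tensorflow-serving.
    - Port: Port 8501, the HTTP port of TensorFlow Serving, is used in this example.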

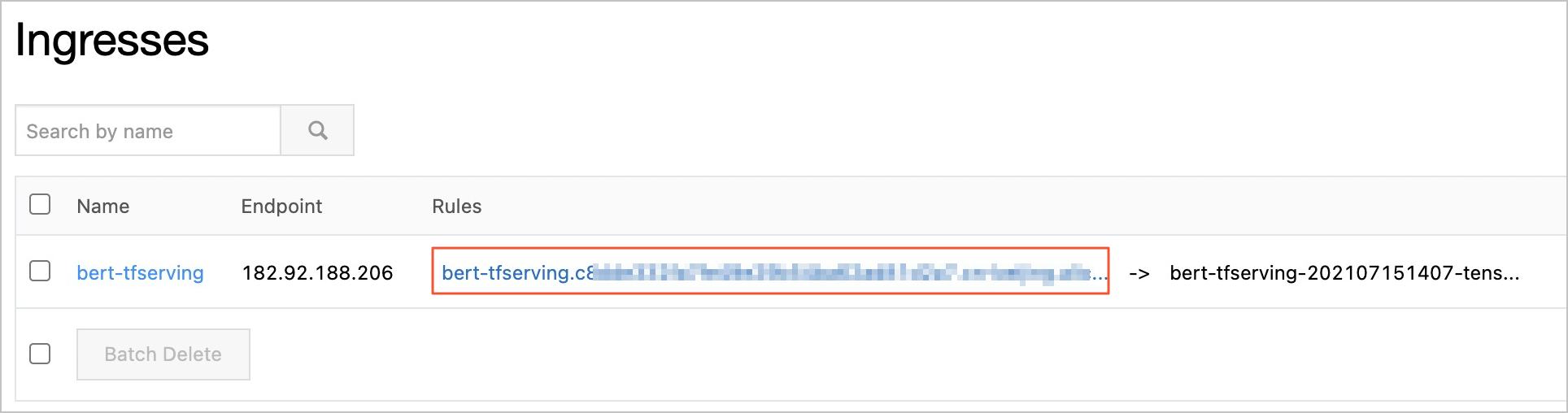

After you create the Ingress, go to the Ingresses page and find the Ingress. The value in the Rules column displays the address of the Ingress.
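If you prefer kubectl to the console, you can describe the same routing as a networking.k8s.io/v1 Ingress manifest. The following Python sketch builds that manifest as JSON, which kubectl apply -f accepts. It makes two assumptions:

- The NGINX Ingress controller is registered under the IngressClass name nginx. Check this with kubectl get ingressclass.
- The Service name is the one returned by kubectl get service -n inference.

The Ingress name tensorflow is lowercase because Kubernetes object names must be lowercase DNS subdomains. The function name tfserving_ingress is hypothetical.

```python
"""Build an Ingress equivalent to the console settings in this topic.

Assumptions: the NGINX Ingress controller uses the IngressClass name "nginx",
and the Service name comes from `kubectl get service -n inference`.
"""
import json
import re
from typing import Any, Dict

# Kubernetes DNS subdomain rule for object names such as an Ingress name.
_DNS_SUBDOMAIN = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*")


def tfserving_ingress(host: str, service: str, name: str = "tensorflow",
                      namespace: str = "inference", port: int = 8501,
                      ingress_class: str = "nginx") -> Dict[str, Any]:
    """Route requests for host/ to the given port of the service."""
    if len(name) > 253 or _DNS_SUBDOMAIN.fullmatch(name) is None:
        raise ValueError(f"invalid Ingress name: {name!r}")
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "ingressClassName": ingress_class,
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "ImplementationSpecific",
                    "backend": {"service": {"name": service, "port": {"number": port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    ingress = tfserving_ingress(
        host="test.example.com",
        service="bert-tfserving-202106251556-tensorflow-serving",
    )
    print(json.dumps(ingress, indent=2))
```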

Run the following command to query the status of the model through the REST API of the inference service. For more information, see TensorFlow Serving API.

    curl "http://<Ingress address>/v1/models/chnsenticorp"

Expected output:

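    {
      "model_version_status": [
        {
          "version": "1623831335",
          "state": "AVAILABLE",
          "status": {
            "error_code": "OK",
            "error_message": ""
          }
        }
      ]
    }

The AVAILABLE state indicates that version 1623831335 of the model is loaded and can serve requests.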
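To run the same check from a script, you can call the TensorFlow Serving REST model status endpoint (GET /v1/models/<model name>) and inspect the response. The following Python sketch uses only the standard library. It takes the model name chnsenticorp from the --model-name parameter of the arena serve tensorflow command. <Ingress address> stays a placeholder, and the helper names are hypothetical.

```python
"""Query and interpret the TensorFlow Serving model status endpoint.

GET /v1/models/<model name> is the TensorFlow Serving REST model status API;
the model name chnsenticorp comes from --model-name in this topic.
"""
import json
import urllib.request
from typing import Any, Dict, List

# Expected output from this topic, used for an offline demonstration.
SAMPLE_RESPONSE = ('{"model_version_status": [{"version": "1623831335", "state": "AVAILABLE", '
                   '"status": {"error_code": "OK", "error_message": ""}}]}')


def model_status_url(address: str, model_name: str = "chnsenticorp") -> str:
    """Build the status URL for a model served behind the given address."""
    return f"http://{address}/v1/models/{model_name}"


def fetch_model_status(address: str, model_name: str = "chnsenticorp",
                       timeout: float = 10.0) -> Dict[str, Any]:
    """Send the status request; requires network access to the Ingress."""
    with urllib.request.urlopen(model_status_url(address, model_name), timeout=timeout) as resp:
        return json.load(resp)


def available_versions(status: Dict[str, Any]) -> List[str]:
    """Return the versions whose state is AVAILABLE, that is, ready to serve."""
    return [entry["version"]
            for entry in status.get("model_version_status", [])
            if entry.get("state") == "AVAILABLE"]


if __name__ == "__main__":
    # Offline demo; against a live service, pass fetch_model_status(...) instead.
    print(available_versions(json.loads(SAMPLE_RESPONSE)))
```

fetch_model_status raises urllib.error.HTTPError for non-2xx responses, so a missing model or a wrong path surfaces as an exception rather than as an empty list.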