The Application Monitoring sub-service of Application Real-Time Monitoring Service (ARMS) allows you to configure alerting for the preset metrics. You can also use Prometheus Query Language (PromQL) statements to configure advanced alerting because Application Monitoring data is integrated into Managed Service for Prometheus by default. This topic provides a set of alert configurations and sample PromQL statements to meet the requirements for O&M and emergency response.

Prerequisites

Your application is monitored by Application Monitoring. For more information, see Application Monitoring overview.

Basic alerting

Strategy

Alerting is essential for timely emergency response, which helps ensure service stability and meet Service-Level Agreement (SLA) requirements. This topic organizes alerting into three layers (business, application, and infrastructure) so that you can respond to emergencies and troubleshoot problems quickly.

In this example, the following metrics are used. For information about all preset metrics provided by Application Monitoring, see Alert rule metrics.

Metric | Description |
--- | --- |
Number of Calls | The number of entry calls, including HTTP and Dubbo calls. You can use this metric to analyze the number of calls to the application, estimate the business volume, and check whether exceptions occur in the application. |
Call Error Rate (%) | The error rate of entry calls, calculated by using the following formula: Error rate = Number of failed entry calls/Total number of entry calls × 100%. |
Call Response Time | The response time of an entry call, such as an HTTP call or a Dubbo call. You can use this metric to check for slow requests and exceptions. |
Number of Exceptions | The number of exceptions that occur at runtime, such as null pointer exceptions, array out-of-bounds exceptions, and I/O exceptions. You can use this metric to check whether a call stack throws errors and whether application call errors occur. |
Number of HTTP Requests Returning 5XX Status Codes | The number of HTTP requests for which 5XX status codes are returned. 5XX status codes indicate that an internal server error occurred or that the system is busy. Common 5XX status codes include 500 and 503. |
Database Request Response Time | The time interval between the time that the application sends a request to a database and the time that the database responds. The response time of database requests affects application performance and user experience. If the response time is excessively long, the application may stutter or slow down. |
Downstream Service Call Error Rate (%) | The value of this metric is calculated by using the following formula: Error rate of downstream service calls = Number of failed downstream service requests/Total number of downstream service requests × 100%. You can use this metric to check whether errors in downstream services increase and affect the application. |
Average Response Time of Downstream Service Calls (ms) | The average response time of downstream service calls. You can use this metric to check whether the time consumed by downstream services increases and affects the application. |
Number of JVM Full GCs (Instantaneous Value) | The number of full garbage collections (GCs) performed by the JVM in the last N minutes. Frequent full GCs indicate that the application may be abnormal. |
Number of Runnable JVM Threads | The number of JVM threads in the runnable state at runtime. If excessive threads are created, a large amount of memory is consumed, and the system may slow down or crash. |
Thread Pool Usage | The ratio of the number of threads in use in the thread pool to the total number of threads in the thread pool. |
Node CPU Utilization (%) | The CPU utilization of the node. Each node is a server. Excessive CPU utilization may cause problems such as slow system response and service unavailability. |
Node Disk Utilization (%) | The ratio of used disk space to total disk space. The higher the disk utilization, the less storage capacity remains on the node. |
Node Memory Usage (%) | The percentage of memory in use. If the memory usage of the node exceeds 80%, reduce memory pressure by adjusting the node configurations or optimizing the memory usage of tasks. |
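The error-rate metrics in the preceding table share one formula: failed calls divided by total calls of the same kind, multiplied by 100. The following Python sketch shows that arithmetic. The function name and the sample numbers are hypothetical, and treating a period with no calls as a 0% error rate is an assumption, not documented ARMS behavior.

```python
def error_rate_pct(failed_calls: int, total_calls: int) -> float:
    """Error rate (%) = failed calls / total calls x 100.

    The same arithmetic applies to entry calls (Call Error Rate) and to
    downstream calls (Downstream Service Call Error Rate).
    """
    if total_calls == 0:
        # Assumption: a period with no calls is reported as a 0% error rate.
        return 0.0
    return failed_calls * 100 / total_calls


# Hypothetical per-minute numbers for one application.
entry_rate = error_rate_pct(failed_calls=5, total_calls=200)
downstream_rate = error_rate_pct(failed_calls=3, total_calls=1000)
print(f"entry: {entry_rate}%, downstream: {downstream_rate}%")
```

Multiplying before dividing keeps results such as 2.5% exact for round inputs.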

Business

You can configure alerting for interfaces related to the key business. In the e-commerce industry, alerting can be configured for interfaces related to the business volume. In the game industry, alerting can be configured for the interfaces related to sign-in.

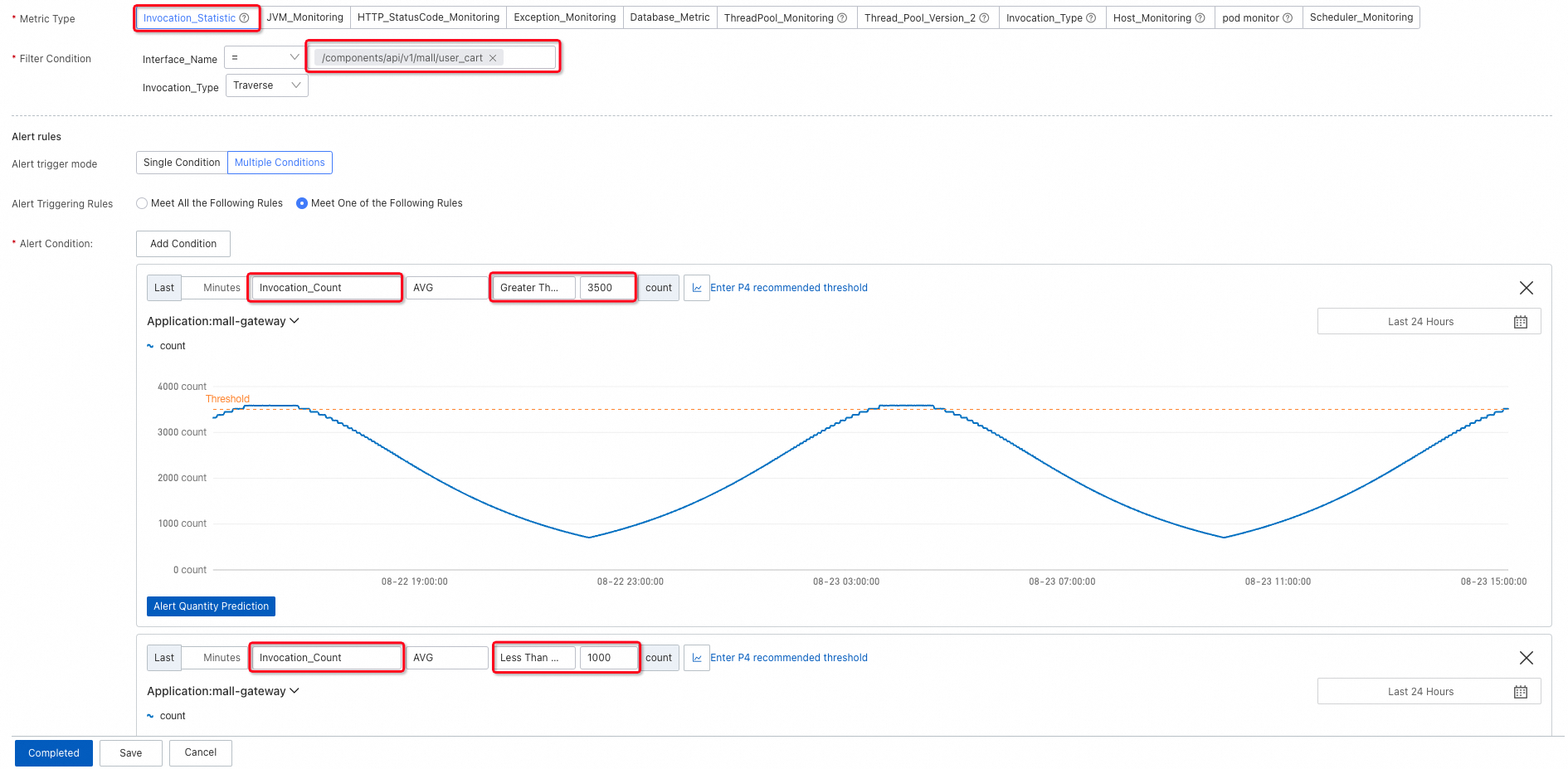

The following example shows an interface related to adding a product to the cart in an e-commerce scenario.

The number of interface calls is a commonly used metric. Interface calls usually drop when the business is affected, and if interface calls surge beyond the capacity, the business is overloaded. Therefore, you can configure a value range and trigger alerts when the number of interface calls falls outside the range.

Because a fixed lower limit can easily trigger false alerts during off-peak hours, such as midnight, we recommend that you configure a lower limit together with a link relative (period-over-period) condition to detect rapid decreases in interface calls caused by exceptions.
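The following Python sketch illustrates how a lower limit and a link relative condition can be combined. It assumes that both conditions must hold (AND) for an alert to fire, so that low but stable traffic at night stays quiet while a sudden drop is reported. The function names and thresholds are hypothetical and do not reflect how ARMS evaluates rules internally.

```python
def link_relative_change_pct(current: float, previous: float) -> float:
    """Period-over-period (link relative) change in percent; negative means a drop."""
    if previous == 0:
        # Assumption: without a baseline, no change is reported.
        return 0.0
    return (current - previous) * 100 / previous


def call_drop_alert(current: float, previous: float,
                    lower_limit: float, max_drop_pct: float) -> bool:
    """Alert only when calls are below the floor AND fell sharply versus the previous period."""
    below_floor = current < lower_limit
    sharp_drop = link_relative_change_pct(current, previous) <= -max_drop_pct
    return below_floor and sharp_drop


# Hypothetical thresholds: floor of 100 calls/min, alert on a drop of 50% or more.
print(call_drop_alert(current=40, previous=50, lower_limit=100, max_drop_pct=50))   # → False (quiet night)
print(call_drop_alert(current=20, previous=100, lower_limit=100, max_drop_pct=50))  # → True (sudden drop)
```

Reversing the comparisons (an upper limit plus a minimum increase) gives the same kind of check for exception spikes or 5xx status codes.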

An upper limit is configured for the error rate of interface calls, as shown in the following figure.

You can also configure alerting for other metrics based on your business requirements. For example, if your business is latency-sensitive, you can configure alerting for the response time or the number of slow calls.

Application

When the business is affected, you can troubleshoot problems based on the application metrics.

A surge in exceptions usually accompanies bugs introduced by releases or updates, or abnormal downstream services, so exceptions are crucial to troubleshooting. To monitor exception spikes, we recommend that you configure an upper limit together with a link relative (period-over-period) condition to detect rapid increases in exceptions.

An increase in the number of exceptions does not necessarily mean that the application has problems, because graceful degradation can handle exceptions without disrupting the application. However, some exceptions may go unhandled and affect the result returned by an interface call, which counts as an error. Therefore, you can also configure an upper limit for the call error rate.

For HTTP services, focus on HTTP status codes. Generally, 4xx status codes indicate client errors, whereas 5xx status codes indicate server errors. Therefore, we recommend that you configure an upper limit together with a link relative condition to monitor increases in 5xx status codes.

When the application encounters problems or the number of interface calls increases, the overall response time often increases significantly. Therefore, you can configure an upper limit for the response time within a specified time period. As shown in the following figure, alerting is configured for the average response time within the last minute. If your business fluctuates frequently, you can specify a longer period, such as 5 or 10 minutes.
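The following Python sketch shows why a longer period smooths out fluctuations. It computes the average response time over the last N minutes as total time divided by total calls, which mirrors the sum-of-seconds over sum-of-counts form of the Response Time of HTTP Interface Calls PromQL statement later in this topic. The per-minute data is hypothetical.

```python
from typing import Sequence


def avg_response_time_ms(seconds_per_min: Sequence[float],
                         calls_per_min: Sequence[int], window: int) -> float:
    """Average response time (ms) over the last `window` minutes, weighted by call count."""
    total_seconds = sum(seconds_per_min[-window:])
    total_calls = sum(calls_per_min[-window:])
    # Assumption: no calls in the window yields 0 instead of a division error.
    return total_seconds * 1000 / total_calls if total_calls else 0.0


# Hypothetical data: steady 100 ms per call, then one slow minute.
seconds = [10.0, 10.0, 10.0, 10.0, 60.0]
calls = [100, 100, 100, 100, 100]
print(avg_response_time_ms(seconds, calls, window=1))  # last minute only
print(avg_response_time_ms(seconds, calls, window=5))  # last 5 minutes
```

With a 1-minute window, the single slow minute yields 600 ms; over 5 minutes, the average is 200 ms, so a threshold of, for example, 500 ms fires only on the short window.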

An increase in the response time of an application is generally caused by external or internal reasons. External reasons usually involve the databases or downstream services on which the application depends.

In most cases, database issues increase the response time by tens or even hundreds of times, which easily triggers alerts. Therefore, you can configure a relatively high upper limit for the response time of database calls.

Then, you can configure upper limits for the response time and error rate of downstream service calls.

Configure an upper limit for the response time of downstream service calls

Configure an upper limit for the error rate of downstream service calls

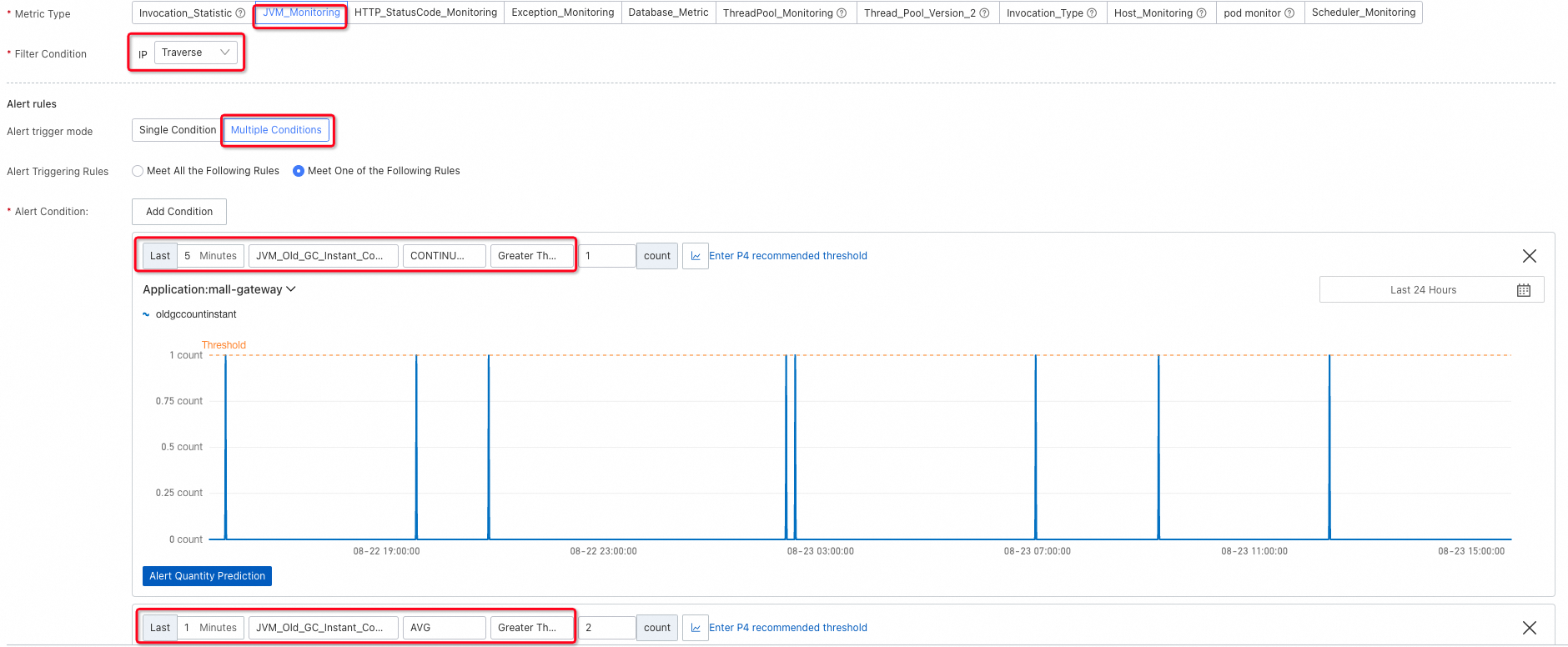

To troubleshoot internal application issues, you can enable Java virtual machine (JVM) and thread pool monitoring.

Although the JVM exposes many metrics, we recommend that you configure alerting for the number of full GCs and traverse all nodes. Because both sustained full GCs and frequent full GCs within a short period are abnormal, you can configure two alerting conditions to monitor them, as shown in the following figure.

Excessive runnable JVM threads consume a large amount of memory, whereas zero runnable JVM threads indicates that the service is abnormal. Therefore, in addition to an upper limit, you can add an alerting condition that triggers when no JVM thread is runnable.
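The following Python sketch expresses the two full GC conditions and the runnable-thread conditions described above. It assumes per-minute full GC counts for one node; the window length, burst limit, and thread limit are hypothetical values, not ARMS recommendations.

```python
from typing import Sequence, Tuple


def full_gc_alerts(full_gcs_per_min: Sequence[int], window: int,
                   burst_limit: int) -> Tuple[bool, bool]:
    """Return (continuous, burst) for the last `window` minutes.

    continuous: at least one full GC in every minute of the window.
    burst: the total number of full GCs in the window reaches burst_limit.
    """
    recent = list(full_gcs_per_min[-window:])
    continuous = len(recent) == window and all(n >= 1 for n in recent)
    burst = sum(recent) >= burst_limit
    return continuous, burst


def runnable_threads_alert(runnable: int, upper_limit: int) -> bool:
    """Alert when no JVM thread is runnable or when runnable threads exceed the limit."""
    return runnable == 0 or runnable > upper_limit


# Hypothetical samples for one node.
print(full_gc_alerts([0, 1, 1, 1], window=3, burst_limit=5))  # sustained full GCs
print(full_gc_alerts([0, 0, 6], window=3, burst_limit=5))     # burst of full GCs
print(runnable_threads_alert(0, upper_limit=500))             # no runnable thread
```

Keeping the two full GC checks separate mirrors configuring two alerting conditions, so each can carry its own threshold.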

To detect thread pools that stay full, you can configure alerting for the thread pool usage, the number of active threads, or the maximum number of threads within a period of time, as shown in the following figure. If the thread pool size is not specified, the size is reported as 2147483647, the maximum value of a 32-bit signed integer. In this case, we recommend that you do not use the thread pool size (maximum number of threads) as an alerting condition.
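The following Python sketch computes thread pool usage as active threads divided by the maximum pool size and skips pools whose size is reported as 2147483647, because a ratio against that sentinel is always close to zero. The 0.9 limit and the sample values are hypothetical.

```python
from typing import Optional, Sequence, Tuple

# Pool size reported when no thread pool size is configured (2^31 - 1).
UNBOUNDED_POOL_SIZE = 2_147_483_647


def thread_pool_usage(active_threads: int, max_pool_size: int) -> Optional[float]:
    """Active threads / maximum pool size, or None when the size is unbounded or unknown."""
    if max_pool_size <= 0 or max_pool_size == UNBOUNDED_POOL_SIZE:
        return None
    return active_threads / max_pool_size


def pool_continuously_full(samples: Sequence[Tuple[int, int]], limit: float = 0.9) -> bool:
    """True if usage stays at or above `limit` in every sampled minute.

    Each sample is a hypothetical (active_threads, max_pool_size) pair.
    """
    usages = [thread_pool_usage(active, size) for active, size in samples]
    return bool(usages) and all(u is not None and u >= limit for u in usages)


print(thread_pool_usage(180, 200))
print(thread_pool_usage(5, UNBOUNDED_POOL_SIZE))  # unbounded pool: no usage ratio
print(pool_continuously_full([(190, 200), (200, 200), (195, 200)]))
```

Returning None rather than 0.0 for an unbounded pool makes the "do not alert on pool size" case explicit instead of silently passing the check.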

Note: In the Metric Type section, ThreadPool_Monitoring is available for ARMS agent V3.x and Thread_Pool_Version_2 is available for ARMS agent V4.x.

Infrastructure

For applications deployed on Elastic Compute Service (ECS) instances, ARMS collects node information that can be used to trigger alerts. We recommend that you configure upper limits for the three key node metrics: CPU utilization, memory usage, and disk utilization.

Because node CPU utilization fluctuates greatly, we recommend that you check whether CPU utilization continuously reaches the upper limit over a period of time.

Node memory usage

Node disk utilization

For applications deployed in Container Service for Kubernetes (ACK) clusters and monitored by Managed Service for Prometheus, we recommend that you configure Prometheus alerts. For more information, see Monitor an ACK cluster.

For applications deployed in ACK clusters that are not monitored by Managed Service for Prometheus, ARMS agent V4.1.0 and later collect the CPU and memory information of the clusters for monitoring and alerting. Upper limits for the CPU utilization and memory usage of ACK clusters are configured as needed, so no reference values are provided. Configure the upper limits based on the request volume and resource size of your clusters.

CPU utilization of ACK clusters

Memory usage of ACK clusters

Additional information

Filter conditions

Traversal: traverses all nodes or interfaces, similar to the GROUP BY clause in SQL. Note that traversal is not suitable for all interfaces.

=: specifies the key nodes or interfaces to monitor, similar to the WHERE clause in SQL.

No dimension: monitors the metric data as a whole. For host metrics, such as CPU utilization, the host with the highest value is monitored. For downstream service metrics, overall service calls are monitored. For response time, the average response time is monitored.
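The following Python sketch illustrates the three filter conditions on a hypothetical list of labeled samples. Traversal produces one value per node or interface, as GROUP BY does; = keeps only the specified node or interface, as WHERE does; and no dimension collapses the data into a single value, shown here with the host-metric rule (highest value wins). Summing values within a traversal group is an assumption that fits call counts but not every metric.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical sample: (labels, value) for one metric in one minute.
Sample = Tuple[Dict[str, str], float]


def traversal(samples: List[Sample], key: str) -> Dict[str, float]:
    """Like SQL GROUP BY: one aggregated value per node or interface."""
    groups: Dict[str, float] = defaultdict(float)
    for labels, value in samples:
        groups[labels[key]] += value
    return dict(groups)


def equals(samples: List[Sample], key: str, wanted: str) -> List[Sample]:
    """Like SQL WHERE: keep only the specified node or interface."""
    return [(labels, value) for labels, value in samples if labels.get(key) == wanted]


def no_dimension_host_metric(samples: List[Sample]) -> float:
    """No dimension on a host metric: the host with the highest value is evaluated."""
    return max(value for _, value in samples)


samples: List[Sample] = [
    ({"serverIp": "10.0.0.1", "rpc": "/cart/add"}, 30.0),
    ({"serverIp": "10.0.0.2", "rpc": "/cart/add"}, 20.0),
    ({"serverIp": "10.0.0.1", "rpc": "/pay"}, 5.0),
]
print(traversal(samples, "rpc"))
print(equals(samples, "serverIp", "10.0.0.2"))
print(no_dimension_host_metric(samples))
```

With traversal, each interface is compared against the threshold separately, so one quiet interface cannot mask a busy one.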

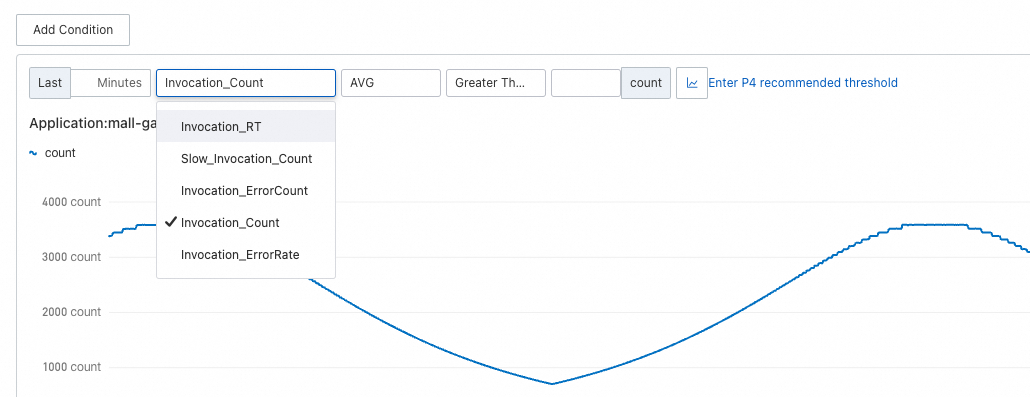

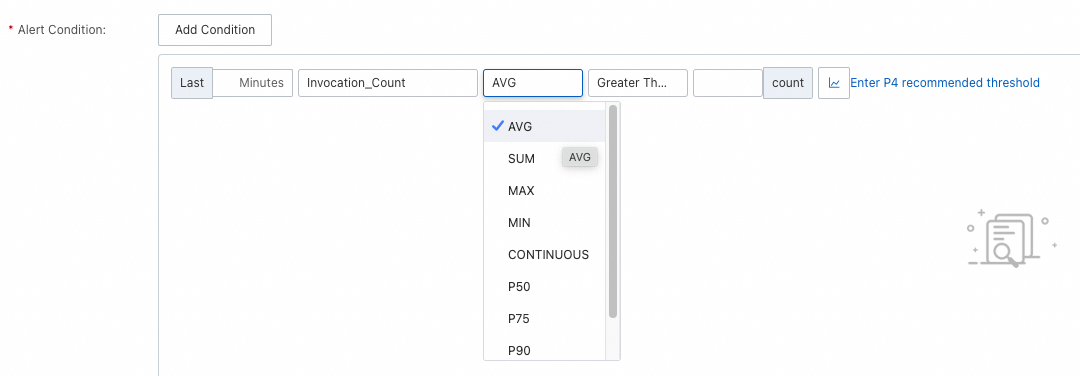

Alerting conditions

AVG/SUM/MAX/MIN: triggers alerts if the average value, sum of all values, maximum value, or minimum value of a metric in the last X minutes reaches the limit.

CONTINUOUS: triggers alerts if the value of a metric reaches the limit in every minute of the last X minutes. This condition suits metrics that fluctuate greatly, whose instantaneous values can change sharply.

Pxx: triggers alerts based on a quantile, such as P99. This condition is often used for time-consuming operations, such as response time.

The minimum period that can be specified for a metric is 1 minute. When the period is 1 minute, the AVG, SUM, MAX, MIN, and CONTINUOUS alerting conditions are equivalent.
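The following Python sketch evaluates the alerting conditions against per-minute values for an upper limit. It assumes that "reaches the limit" means greater than or equal to the threshold, and it uses the nearest-rank method for Pxx; ARMS may compute quantiles differently (for example, from histograms), so treat the function names and both assumptions as illustrative only.

```python
import math
from statistics import mean
from typing import Sequence


def condition_met(condition: str, per_minute: Sequence[float], threshold: float) -> bool:
    """Evaluate an upper-limit condition over the per-minute values of the last X minutes."""
    if condition == "AVG":
        value = mean(per_minute)
    elif condition == "SUM":
        value = sum(per_minute)
    elif condition == "MAX":
        value = max(per_minute)
    elif condition == "MIN":
        value = min(per_minute)
    elif condition == "CONTINUOUS":
        # Every minute in the window must reach the threshold.
        return all(v >= threshold for v in per_minute)
    else:
        raise ValueError(f"unsupported condition: {condition}")
    return value >= threshold


def nearest_rank_percentile(durations_ms: Sequence[float], pct: float) -> float:
    """Pxx by the nearest-rank method, for example pct=99 for P99."""
    ordered = sorted(durations_ms)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


cpu = [50.0, 95.0, 60.0]  # hypothetical CPU utilization (%) for the last 3 minutes
for name in ("AVG", "SUM", "MAX", "MIN", "CONTINUOUS"):
    print(name, condition_met(name, cpu, threshold=90.0))
print(nearest_rank_percentile(list(range(1, 101)), 99))
```

Passing a single value, such as [95.0], makes all five conditions return the same result, which matches the note that they are equivalent for a 1-minute period.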

Threshold

Application Monitoring provides recommended thresholds to meet the monitoring requirements for a variety of services or scenarios.

Advanced alerting

You can use Managed Service for Prometheus to configure alerting for metrics through PromQL.

After an application is connected to Application Monitoring, Managed Service for Prometheus automatically creates a Prometheus instance in the region to store the metric data.

In addition to the preset alert rules of Application Monitoring, you can configure advanced Prometheus alerting.

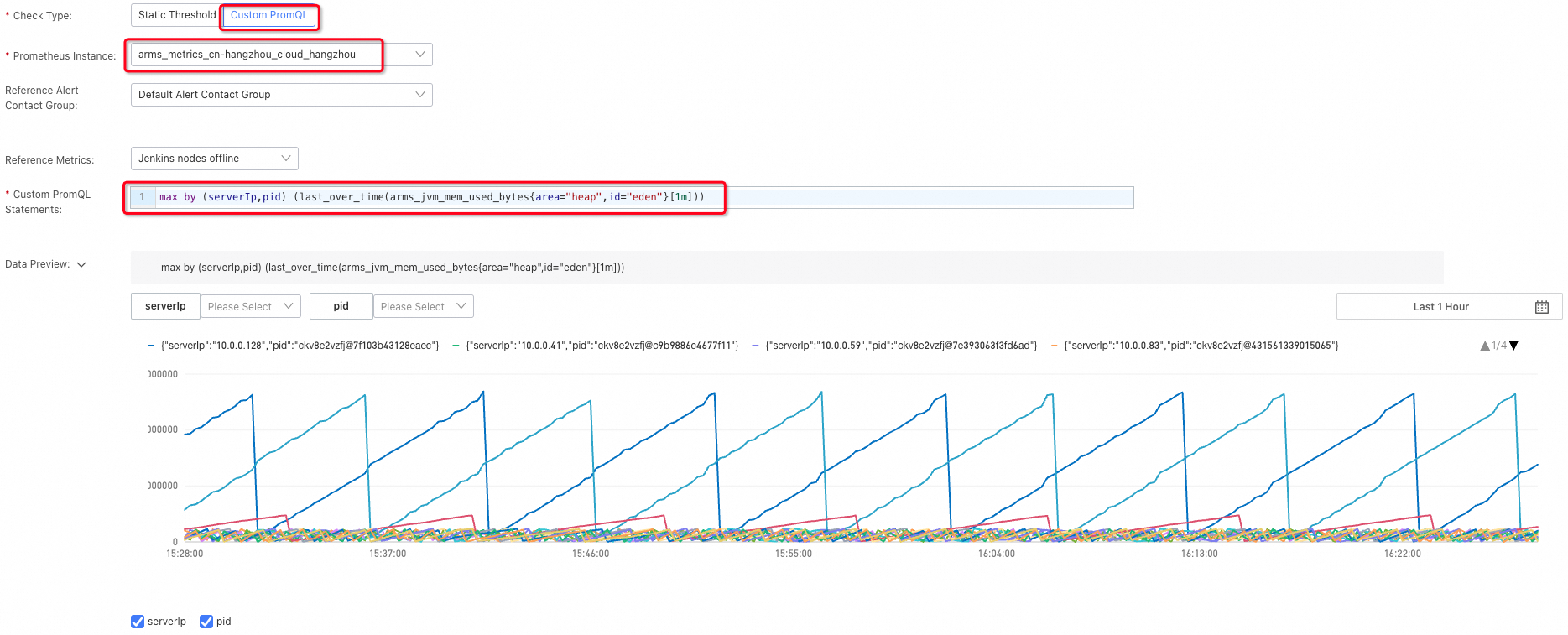

Take the JVM Heap Memory Usage metric as an example. In Application Monitoring, an alert rule for this metric can monitor only one application in one region. Prometheus alerting, however, allows you to monitor the metric for all applications in the region by using a PromQL statement such as max by (serverIp,pid) (last_over_time(arms_jvm_mem_used_bytes{area="heap",id="eden"}[1m])).

For information about the metrics that Application Monitoring supports, see Application Monitoring metrics. We recommend that you use only the metrics listed in that topic, because metrics that are not listed may become incompatible with Application Monitoring in the future.

Sample PromQL statements

Business metrics

Metrics

Metric | PromQL |
--- | --- |
Number of HTTP Interface Calls | sum by ($dims) (sum_over_time_lorc(arms_http_requests_count{$labelFilters}[1m])) |
Response Time of HTTP Interface Calls | sum by ($dims) (sum_over_time_lorc(arms_http_requests_seconds{$labelFilters}[1m])) / sum by ($dims) (sum_over_time_lorc(arms_http_requests_count{$labelFilters}[1m])) |
Number of HTTP Interface Call Errors | sum by ($dims) (sum_over_time_lorc(arms_http_requests_error_count{$labelFilters}[1m])) |
Number of Slow HTTP Interface Calls | sum by ($dims) (sum_over_time_lorc(arms_http_requests_slow_count{$labelFilters}[1m])) |

Dimensions

Similar to the GROUP BY clause in SQL, $dims is used for grouping.

Similar to the WHERE clause in SQL, $labelFilters is used for filtering.

Dimension name | Dimension key |
--- | --- |
Service name | service |
Service PID | pid |
Server IP address | serverIp |
Interface | rpc |

Examples:

Calculate the number of HTTP interface calls on the host whose IP address is 127.0.0.1 and group the results by interface.

sum by (rpc) (sum_over_time_lorc(arms_http_requests_count{serverIp="127.0.0.1"}[1m]))

Calculate the number of HTTP interface calls to the mall/pay interface and group the results by host.

sum by (serverIp) (sum_over_time_lorc(arms_http_requests_count{rpc="mall/pay"}[1m]))
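If you generate alert rules from scripts, the $dims and $labelFilters placeholders in the templates of this topic can be filled in programmatically. The following Python sketch is a hypothetical helper (render_promql is not part of any ARMS SDK). It assumes simple label values that contain no double quotes and does not escape them.

```python
from typing import Dict, List


def render_promql(template: str, dims: List[str], label_filters: Dict[str, str]) -> str:
    """Fill the $dims and $labelFilters placeholders of a PromQL template."""
    rendered = template.replace("$dims", ",".join(dims))
    filters = ",".join(f'{key}="{value}"' for key, value in label_filters.items())
    rendered = rendered.replace("$labelFilters", filters)
    # Drop the dangling comma left when a template has fixed matchers but no filters,
    # for example {state="live",} becomes {state="live"}.
    return rendered.replace(",}", "}").replace("{,", "{")


calls_template = "sum by ($dims) (sum_over_time_lorc(arms_http_requests_count{$labelFilters}[1m]))"
print(render_promql(calls_template, ["rpc"], {"serverIp": "127.0.0.1"}))
print(render_promql(calls_template, ["serverIp"], {"rpc": "mall/pay"}))
```

The helper only performs text substitution; it does not validate metric names or label keys against the dimension tables.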

JVM metrics

Metrics

Metric | PromQL |
--- | --- |
Total JVM Heap Memory | max by ($dims) (last_over_time_lorc(arms_jvm_mem_used_bytes{area="heap",id="old",$labelFilters}[1m])) + max by ($dims) (last_over_time_lorc(arms_jvm_mem_used_bytes{area="heap",id="eden",$labelFilters}[1m])) + max by ($dims) (last_over_time_lorc(arms_jvm_mem_used_bytes{area="heap",id="survivor",$labelFilters}[1m])) |
Number of JVM Young GCs | sum by ($dims) (sum_over_time_lorc(arms_jvm_gc_delta{gen="young",$labelFilters}[1m])) |
Number of JVM Full GCs | sum by ($dims) (sum_over_time_lorc(arms_jvm_gc_delta{gen="old",$labelFilters}[1m])) |
Young GC Duration | sum by ($dims) (sum_over_time_lorc(arms_jvm_gc_seconds_delta{gen="young",$labelFilters}[1m])) |
Full GC Duration | sum by ($dims) (sum_over_time_lorc(arms_jvm_gc_seconds_delta{gen="old",$labelFilters}[1m])) |
Number of Active Threads | max by ($dims) (last_over_time_lorc(arms_jvm_threads_count{state="live",$labelFilters}[1m])) |
Heap Memory Usage | (max by ($dims) (last_over_time_lorc(arms_jvm_mem_used_bytes{area="heap",id="old",$labelFilters}[1m])) + max by ($dims) (last_over_time_lorc(arms_jvm_mem_used_bytes{area="heap",id="eden",$labelFilters}[1m])) + max by ($dims) (last_over_time_lorc(arms_jvm_mem_used_bytes{area="heap",id="survivor",$labelFilters}[1m])))/max by ($dims) (last_over_time_lorc(arms_jvm_mem_max_bytes{area="heap",id="total",$labelFilters}[1m])) |
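The Total JVM Heap Memory and Heap Memory Usage statements add up the old, eden, and survivor areas and, for usage, divide by the maximum heap size. The following Python sketch shows the same arithmetic on hypothetical byte values for a single JVM; it does not query Prometheus.

```python
def total_heap_bytes(old: int, eden: int, survivor: int) -> int:
    """Total JVM Heap Memory: sum of the old, eden, and survivor areas."""
    return old + eden + survivor


def heap_usage(old: int, eden: int, survivor: int, max_heap: int) -> float:
    """Heap Memory Usage: used heap divided by the maximum heap size."""
    return total_heap_bytes(old, eden, survivor) / max_heap


MiB = 1024 * 1024
# Hypothetical values: 600 MiB old, 150 MiB eden, 50 MiB survivor, 1 GiB maximum heap.
usage = heap_usage(600 * MiB, 150 * MiB, 50 * MiB, 1024 * MiB)
print(f"heap usage: {usage:.1%}")
```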

Dimensions

Dimension name | Dimension key |
--- | --- |
Service name | service |
Service PID | pid |
Server IP address | serverIp |

System metrics

Metrics

Metric | PromQL |
--- | --- |
CPU Utilization | max by ($dims) (last_over_time_lorc(arms_system_cpu_system{$labelFilters}[1m])) + max by ($dims) (last_over_time_lorc(arms_system_cpu_user{$labelFilters}[1m])) + max by ($dims) (last_over_time_lorc(arms_system_cpu_io_wait{$labelFilters}[1m])) |
Memory Usage | max by ($dims) (last_over_time_lorc(arms_system_mem_used_bytes{$labelFilters}[1m]))/max by ($dims) (last_over_time_lorc(arms_system_mem_total_bytes{$labelFilters}[1m])) |
Disk Utilization | max by ($dims) (last_over_time_lorc(arms_system_disk_used_ratio{$labelFilters}[1m])) |
System Load | max by ($dims) (last_over_time_lorc(arms_system_load{$labelFilters}[1m])) |
Number of Inbound Network Errors | max by ($dims) (max_over_time_lorc(arms_system_net_in_err{$labelFilters}[1m])) |
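The CPU Utilization statement adds the system, user, and I/O wait components, and the Memory Usage statement divides used memory by total memory. The following Python sketch reproduces both calculations on hypothetical values and applies the 80% memory guideline from the metric descriptions. It assumes that the three CPU components are reported as percentages.

```python
def cpu_utilization_pct(system: float, user: float, io_wait: float) -> float:
    """CPU Utilization: system + user + I/O wait, each assumed to be a percentage."""
    return system + user + io_wait


def memory_usage(used_bytes: int, total_bytes: int) -> float:
    """Memory Usage: used memory divided by total memory."""
    return used_bytes / total_bytes


GiB = 1024 ** 3
print(cpu_utilization_pct(system=12.5, user=30.0, io_wait=2.5))
mem = memory_usage(7 * GiB, 8 * GiB)
print(mem, "above 80%" if mem > 0.8 else "within limit")
```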

Dimensions

Dimension name | Dimension key |
--- | --- |
Service name | service |
Service PID | pid |
Server IP address | serverIp |

Thread pool and connection pool metrics

Metrics

ARMS agent V4.1.x and later

Metric | PromQL |
--- | --- |
Thread Pool Usage | avg by ($dims) (avg_over_time_lorc(arms_thread_pool_active_thread_count{$labelFilters}[1m]))/avg by ($dims) (avg_over_time_lorc(arms_thread_pool_max_pool_size{$labelFilters}[1m])) |
Connection Pool Usage | avg by ($dims) (avg_over_time_lorc(arms_connection_pool_connection_count{state="used",$labelFilters}[1m]))/avg by ($dims) (avg_over_time_lorc(arms_connection_pool_connection_max_count{$labelFilters}[1m])) |

ARMS agent earlier than V4.1.x

ARMS agents earlier than V4.1.x use the same metrics for both thread pools and connection pools. When you use PromQL statements, you must specify the ThreadPoolType label. Valid values: Tomcat, apache-http-client, Druid, SchedulerX, okhttp3, and Hikaricp. For more information about the frameworks supported by thread pool and connection pool monitoring, see Thread pool and connection pool monitoring.

Metric | PromQL |
--- | --- |
Thread Pool Usage | avg by ($dims) (avg_over_time_lorc(arms_threadpool_active_size{ThreadPoolType="$ThreadPoolType",$labelFilters}[1m]))/avg by ($dims) (avg_over_time_lorc(arms_threadpool_max_size{ThreadPoolType="$ThreadPoolType",$labelFilters}[1m])) |

Dimensions

Dimension name | Dimension key |
--- | --- |
Service name | service |
Service PID | pid |
Server IP address | serverIp |
Thread pool name (ARMS agent earlier than V4.1.x) | name |
Thread pool type (ARMS agent earlier than V4.1.x) | type |