Purposes

After you complete initial access to the Artificial Intelligence Recommendation (AIRec) service, you can measure how much AIRec improves the recommendation effect and compare AIRec policies with your own recommendation policies.

In addition, you can use the policy operations tools of AIRec to meet your business requirements. You can evaluate the recommendation effect by using the A/B testing feature provided by AIRec and then optimize policies based on A/B testing results.

Evaluation metrics

In terms of business improvement, recommendation algorithms are used to achieve the following purposes:

1. Implement personalized recommendations and filter items based on the preferences of each user to increase their willingness to browse the items.

2. Recommend the items that users are interested in to improve user stickiness and prevent the user churn that occurs when users cannot find the items they prefer.

3. Display the items that users may be interested in and willing to purchase. Users may be attracted to click the items, view details, and then purchase the items. This increases the user conversion rate and sales.

Therefore, AIRec mainly focuses on and improves the following metrics:

PV_CTR: the click-through rate (CTR). Calculation formula: Total number of clicks/Total number of exposures. Duplicate records are counted.

UV_CTR: the proportion of users who click an item. Calculation formula: Number of users who click an item/Total number of users who view a page.

PV_CVR: the conversion rate (CVR). Calculation formula: Number of purchases/Number of clicks.

UV_CVR: the proportion of converted users. Calculation formula: Number of users who purchase an item/Number of users who click the item.

Number of active items: the total number of items on which users perform operations in a specified time range.

GMV: the total value of sales in a specified time range. Calculation formula: Number of purchased items × Unit price of items.

The preceding metrics directly reflect how interested users are in the recommended items and how willing they are to browse and purchase the items in the current business scenario. You can use these metrics to evaluate whether recommendation algorithms and policies are sound.
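As a rough illustration of the preceding formulas, the following Python sketch computes the metrics from a flat list of behavior events. The `Event` record, its field names, and the `expose`, `click`, and `buy` labels are assumptions made for this example and are not the AIRec data schema. Counting only clicks and purchases as "operations" for the number of active items is also an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    # Hypothetical event record; field names are illustrative only.
    user_id: str
    item_id: str
    kind: str            # "expose", "click", or "buy"
    price: float = 0.0   # unit price, used only for "buy" events
    quantity: int = 1    # number of purchased items, used only for "buy" events


def safe_div(numerator, denominator):
    """Return 0.0 instead of raising when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def compute_metrics(events):
    exposures = [e for e in events if e.kind == "expose"]
    clicks = [e for e in events if e.kind == "click"]
    buys = [e for e in events if e.kind == "buy"]

    exposed_users = {e.user_id for e in exposures}
    click_users = {e.user_id for e in clicks}
    buy_users = {e.user_id for e in buys}

    return {
        # PV metrics count every record, including duplicates.
        "PV_CTR": safe_div(len(clicks), len(exposures)),
        "PV_CVR": safe_div(len(buys), len(clicks)),
        # UV metrics count each user once.
        "UV_CTR": safe_div(len(click_users), len(exposed_users)),
        "UV_CVR": safe_div(len(buy_users), len(click_users)),
        # Assumption: an item is "active" if it was clicked or purchased.
        "active_items": len({e.item_id for e in clicks + buys}),
        "GMV": sum(e.price * e.quantity for e in buys),
    }
```

In this sketch, a user who is exposed to the same item twice contributes two exposures to PV_CTR but only one user to UV_CTR, which is the difference between the PV and UV variants of each metric.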

If you want to optimize for additional metrics, you can contact us.

Reference: Perform a traffic switchover and check the recommendation effect.

Evaluation methods

Compare AIRec policies with your own recommendation policies:

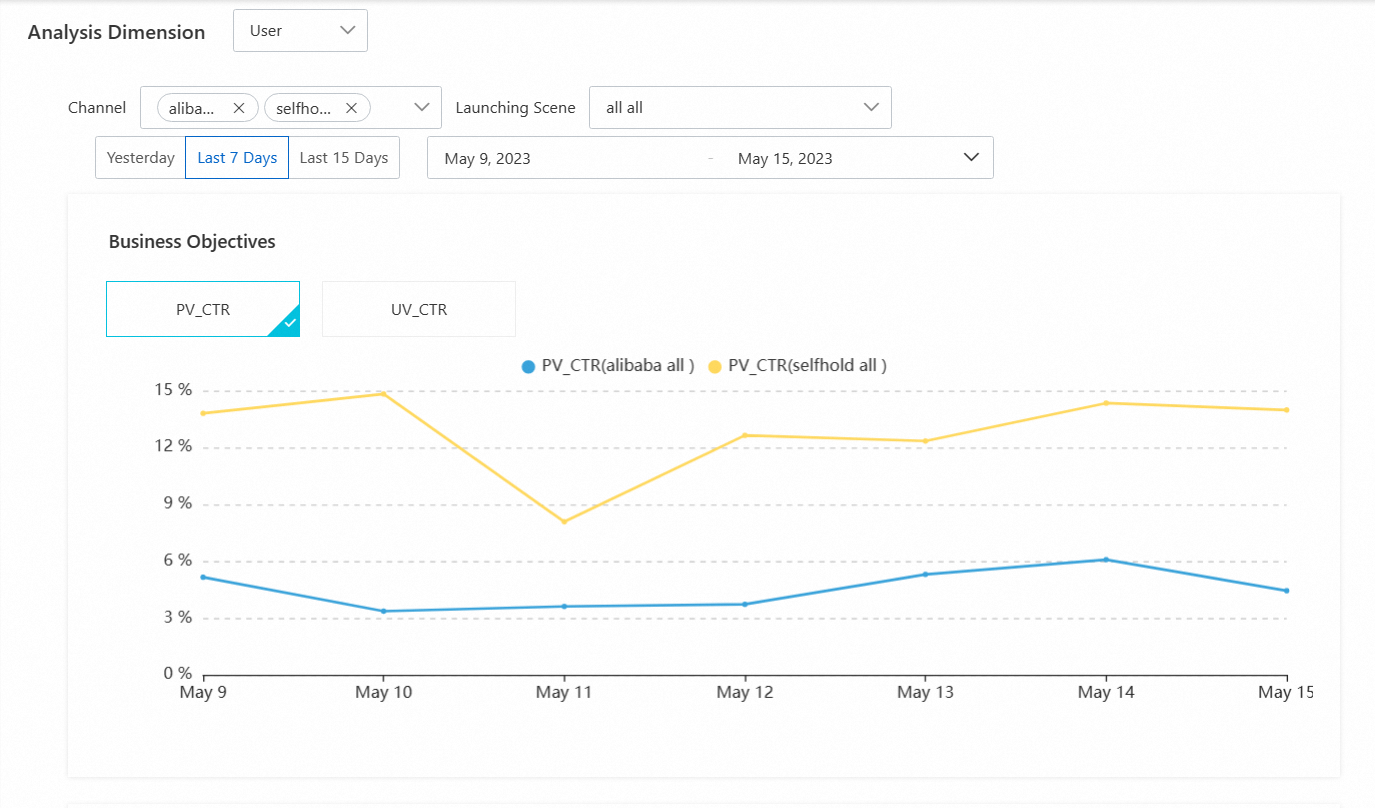

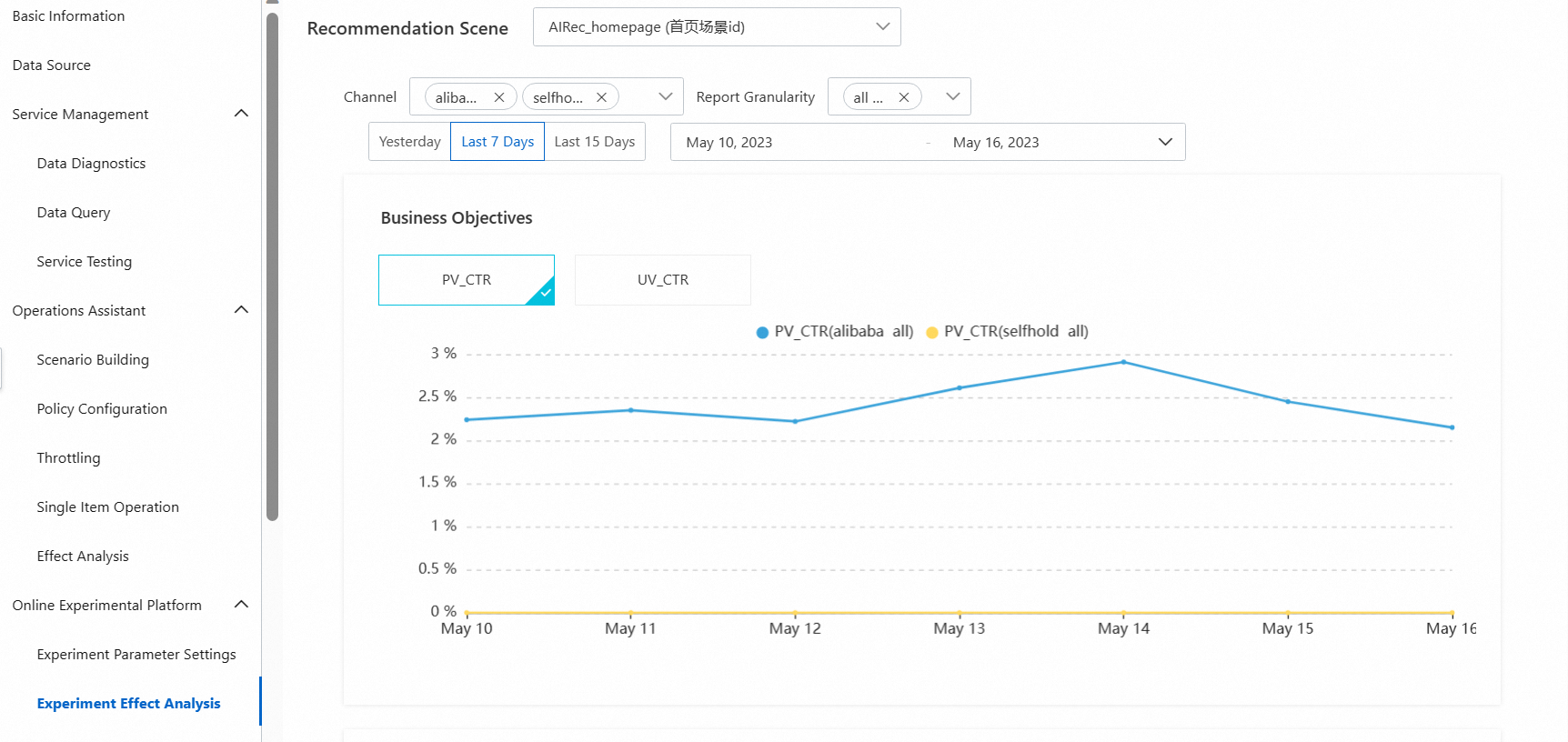

If you want to evaluate the differences between AIRec policies and your own recommendation policies, we recommend that you perform A/B testing. Create two groups and allocate traffic evenly between them, and keep enough traffic in each group for the results to be meaningful. Then, collect the PV_CTR, UV_CTR, PV_CVR, and UV_CVR metrics for the two groups each day and use line charts to view the trends of these metrics.

You can upload the behavioral data of the two groups to AIRec. The trace_id field uploaded together with the behavioral data identifies the group to which a user belongs. This way, you can view the metric differences between AIRec policies and your own recommendation policies on the Effect Analysis page in the AIRec console. This allows you to evaluate the recommendation effect of the policies in a visualized manner.
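If you also want to track the daily trend of each group outside the console, the aggregation can be sketched as follows. This is a minimal example under stated assumptions: the `(day, group, kind)` tuple layout and the group labels are hypothetical, and in practice the group would be derived from whatever marker your behavioral data carries, such as the trace_id value.

```python
from collections import defaultdict


def daily_pv_ctr(rows):
    """Compute PV_CTR per group per day.

    rows: iterable of (day, group, kind) tuples, where kind is
    "expose" or "click". This layout is assumed for illustration.
    Returns {group: {day: pv_ctr}}, ready to plot as one line per group.
    """
    counts = defaultdict(lambda: {"expose": 0, "click": 0})
    for day, group, kind in rows:
        if kind in ("expose", "click"):
            counts[(day, group)][kind] += 1

    trend = defaultdict(dict)
    for (day, group), c in sorted(counts.items()):
        # Days without exposures are reported as 0.0 rather than failing.
        trend[group][day] = c["click"] / c["expose"] if c["expose"] else 0.0
    return dict(trend)
```

The same grouping works for UV_CTR, PV_CVR, and UV_CVR if the counters are replaced with the corresponding numerators and denominators.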

Compare different AIRec policies:

If you want to use different AIRec policies and operations tools to evaluate the recommendation effect, you can adjust one policy and then compare the same metrics before and after the adjustment in the same scenario. For example, you can compare the recommendation effect of this week with that of last week. To obtain credible results, make sure that all other variables, such as the other policies, remain unchanged.

In addition, AIRec provides the A/B testing feature for the AIRec instances of Algorithm Configuration Edition based on the experimental platform. You can perform A/B testing to evaluate the recommendation effect and adjust recommendation policies and filtering algorithm configurations based on the A/B testing results. You can obtain accurate and credible data by allocating different traffic to experiments with different configurations and viewing the recommendation effect of different configurations.

Evaluation scope

Data:

The scope of data used for effect evaluation is limited to the AIRec-related data that is generated in your apps, such as the metrics of tabs, pages, and modules for items that are recommended by AIRec.

Time:

If you compare AIRec policies with your own recommendation policies, you must allocate traffic to both groups and observe the metrics for at least one month. If you compare different AIRec policies, we recommend that you observe the metrics over time periods of the same length, such as one week and the following week. We also recommend that you continue observing the metrics over a longer period so that one-off, time-sensitive events do not distort the results.
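A comparison of two periods of the same length can be sketched as follows. The function name and the input shape (one metric value per day) are assumptions made for this example. Averaging daily values is a simplification, because it weights every day equally regardless of traffic.

```python
from statistics import mean


def compare_periods(before_daily, after_daily):
    """Compare one metric across two periods of equal length.

    before_daily, after_daily: lists with one metric value per day,
    for example the daily PV_CTR of two consecutive weeks.
    """
    if not before_daily or len(before_daily) != len(after_daily):
        raise ValueError("both periods must be non-empty and of equal length")

    before_avg = mean(before_daily)
    after_avg = mean(after_daily)
    if before_avg == 0:
        raise ValueError("baseline average is zero; relative change is undefined")

    return {
        "before": before_avg,
        "after": after_avg,
        # A value of 0.2 means the metric rose by 20% relative to the baseline.
        "relative_change": (after_avg - before_avg) / before_avg,
    }
```

Rejecting periods of unequal length keeps the comparison honest, for example by preventing a five-day window from being compared with a full week that includes a weekend.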

Evaluation criteria

To ensure the accuracy and reliability of the evaluation, you must control variables as much as possible so that the recommendation algorithm is the only variable. The evaluation criteria include but are not limited to the following criteria:

1. Make sure that the same item pool and the same basic policies are used.

The item pool of the test group must be the same as that of the control group. This is the basis for obtaining credible A/B testing results.

In addition, the basic display policies such as discretization and exposure blocking must be the same.

2. Make sure that the event tracking data is collected based on the same criteria.

When you compare the recommendation effect, you must make sure that the event tracking data is collected based on the same criteria. This way, you can obtain credible comparison results.

If you use historical data to compare the recommendation effect in different time periods, you must confirm whether AIRec collects event tracking data based on the same criteria in different time periods. If AIRec collects the data based on different criteria, you must check whether the data can be corrected. You can also upload the data to AIRec and perform A/B testing for comparison.

3. Make sure that traffic is randomly allocated to both groups.

To ensure a fair comparison, traffic must be allocated randomly. You cannot assign users with specific behavior to a single group. Users are randomly assigned to a group before the experiment starts, and the group to which a user belongs cannot be changed until the experiment ends.

In addition, each group must contain enough users so that the unusual behavior of a single user does not skew the results. The more users each group contains, the more reliable the results are.
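One common way to obtain an assignment that is both effectively random and stable for the whole experiment is to hash the user ID. The following sketch is an assumption about how you might implement this on your side and does not describe how AIRec allocates traffic. The function name, the salt, and the group labels are hypothetical.

```python
import hashlib


def assign_group(user_id, experiment_salt="airec-vs-self",
                 groups=("test", "control")):
    """Deterministically map a user to one of the groups.

    The salt ties the assignment to one experiment, so a new experiment
    with a different salt reshuffles users independently.
    """
    key = f"{experiment_salt}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes as an integer and spread users across groups.
    index = int.from_bytes(digest[:8], "big") % len(groups)
    return groups[index]
```

Because the result depends only on the user ID and the salt, a user always lands in the same group for the duration of the experiment, and the assignment does not depend on the user's past behavior.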

4. Make sure that the experiments are performed in the same scenario and use the same policies.

For example, in the home page scenario, items that are recommended by AIRec and items that are not recommended by AIRec must be displayed in the same position.

In addition, experiments using AIRec algorithm policies and other comparison experiments must use the same basic operations policies such as exposure blocking, diversity rules, and whether to use only items with images.

Optimization methods

You can optimize the recommendation effect of AIRec by optimizing policies or algorithms.

Policy optimization

Policy optimization refers to adjusting or customizing recommendation results by using the policy configuration feature and operations tools provided by AIRec. Policy optimization is usually performed to achieve specific business effects and operations purposes, such as improving the diversity of recommendation results, pinning specific items to the top, and implementing throttling. Policy optimization can also be performed to improve the browsing experience of users.

Algorithm optimization

Algorithm optimization refers to optimizing recommendation results by changing the values of parameters for AIRec filtering algorithms. The algorithms are optimized based on the experimental platform feature. Only the AIRec instances of Algorithm Configuration Edition support algorithm optimization.

If you have personalized requirements for algorithm optimization, you can contact us for further communication.

Policy optimization procedures:

The following content describes the features and procedures of policy optimization for AIRec:

1. Instance-based policy optimization:

Instance-based policy optimization takes effect for an instance in all scenarios.

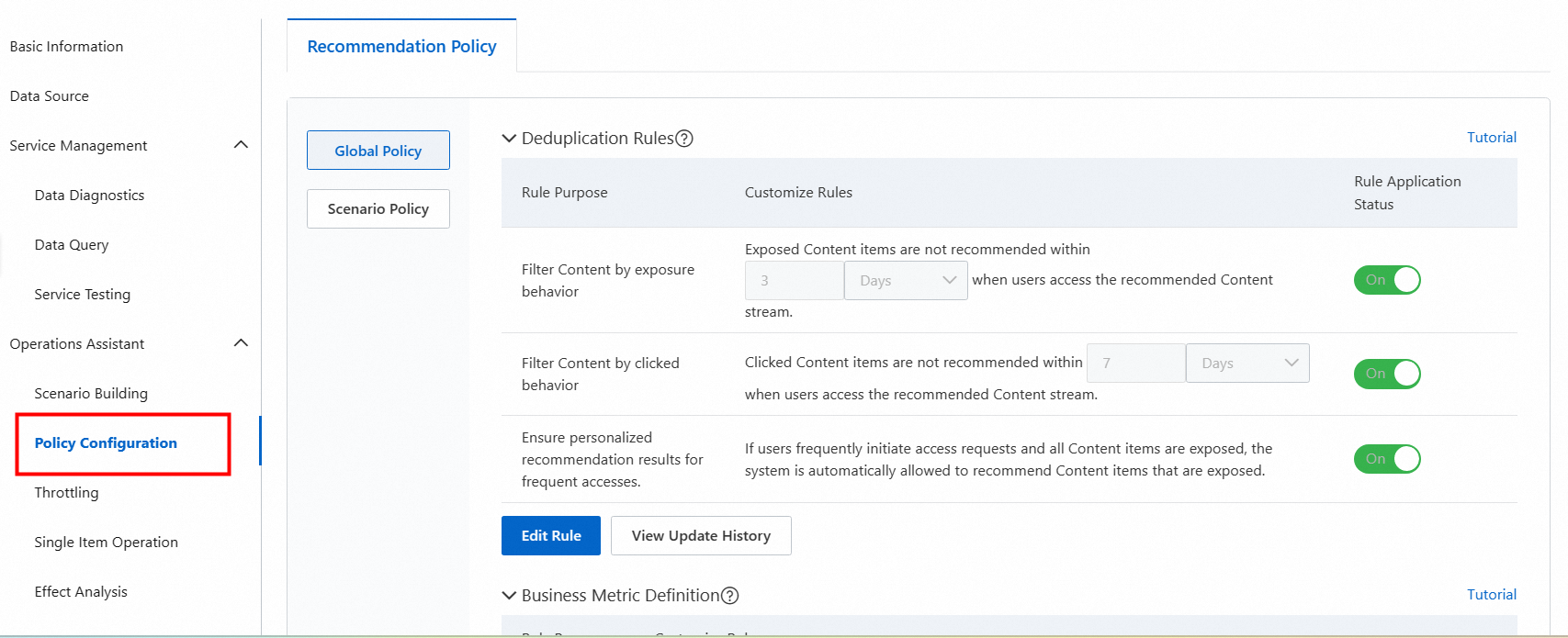

Policy configuration:

You can configure policies for an instance on the page that appears after you choose Operations Assistant > Policy Configuration in the AIRec console.

Display policies: You can configure fatigue rules to prevent exposed and clicked items from being repeatedly recommended within a short period of time. You can also allow the system to recommend exposed and clicked items again if no other items can be recommended.

Quality control policies: You can perform operations such as publishing and unpublishing, weighting, deleting, and viewing for each single item.

References:

Improve the diversity of recommendations by using instance operation rules

Throttling:

You can implement traffic skew to increase or decrease the recommendation weight of items that are filtered by certain conditions. This can increase or decrease the number of exposures and CTR for these items. Throttling can be used to promote high-quality items.

A throttling policy can be applied to an instance or in a specific scenario.

You can configure a throttling policy on the page that appears after you choose Operations Assistant > Throttling in the AIRec console.

Reference:

Use the throttling feature to control item recommendations

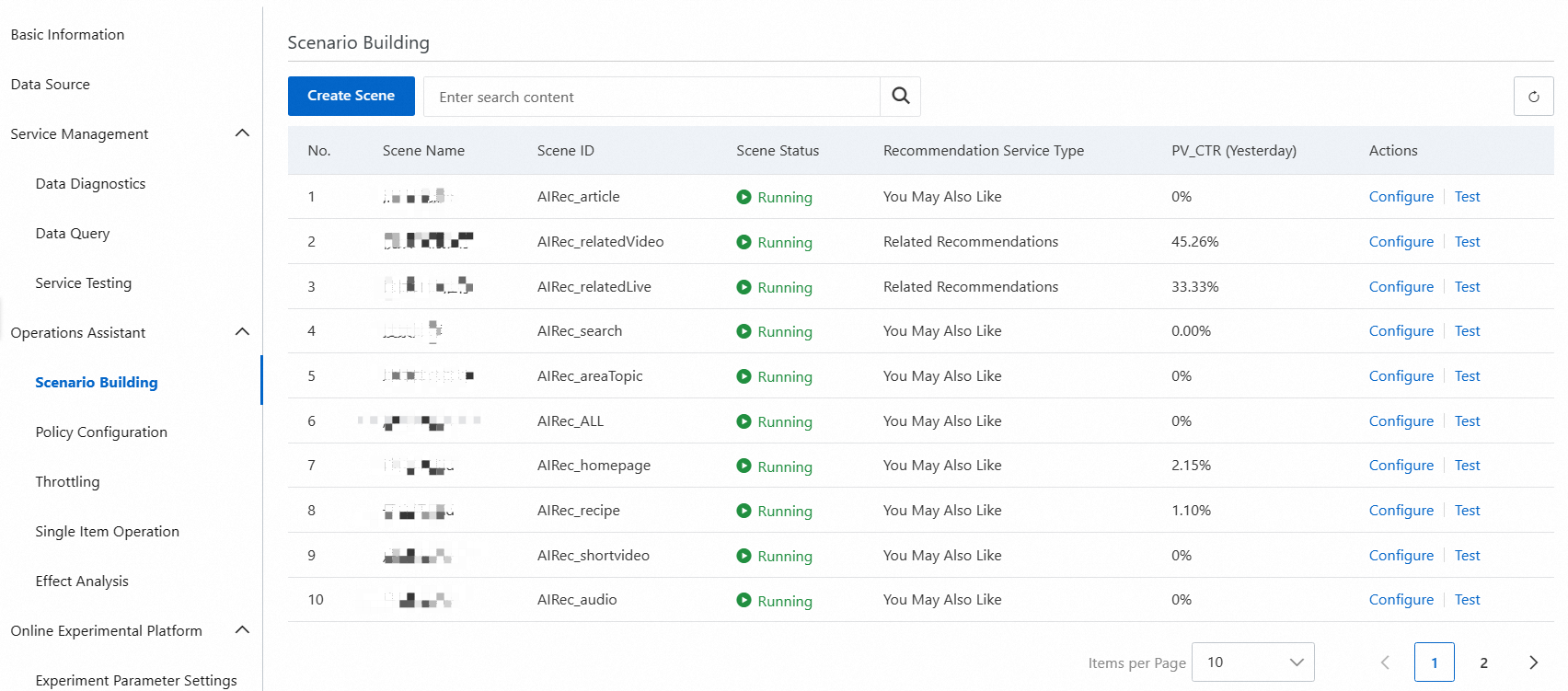

2. Scenario-based policy optimization:

Scenario-based policy optimization takes effect only in the current scenario.

You can configure policies for a scenario on the page that appears after you choose Operations Assistant > Scenario Building and click Configure in the Actions column of the scenario.

Item filtering rules: If you specify a filtering mode when you create a scenario, you can use item filtering rules to specify the item pool from which the items recommended in the scenario are selected. AIRec refreshes the items that meet filtering conditions in real time. You can edit filtering conditions on this page to allow the items recommended in the scenario to meet your business requirements.

Operations rules: You can customize and adjust policies such as fatigue rules and diversity rules for the current scenario. In terms of diversity rules, you can set the proportion of specified recommended items and configure field-based discretization rules.

Reference:

Create a recommendation scene by configuring product selection rules

3. Request-based policy optimization:

You can also customize the recommendation results of each request. The recommendation filtering and item pinning features are supported.

Recommendation filtering:

You can use some fields of item data to filter recommendation results. Recommendation filtering is used in business scenarios where users want to see items that meet specified conditions.

If you want to use the recommendation filtering feature, you must add the filter parameter to requests for recommendations. Reference:

Use the recommendation filtering feature to customize the filtering of feed streams

Item pinning:

You can use the item pinning feature of AIRec to specify the items that you want to display at the top of a recommendation page.

You can specify parameters in a request for recommendation results to determine whether to recommend pinned items in the request.

Reference:

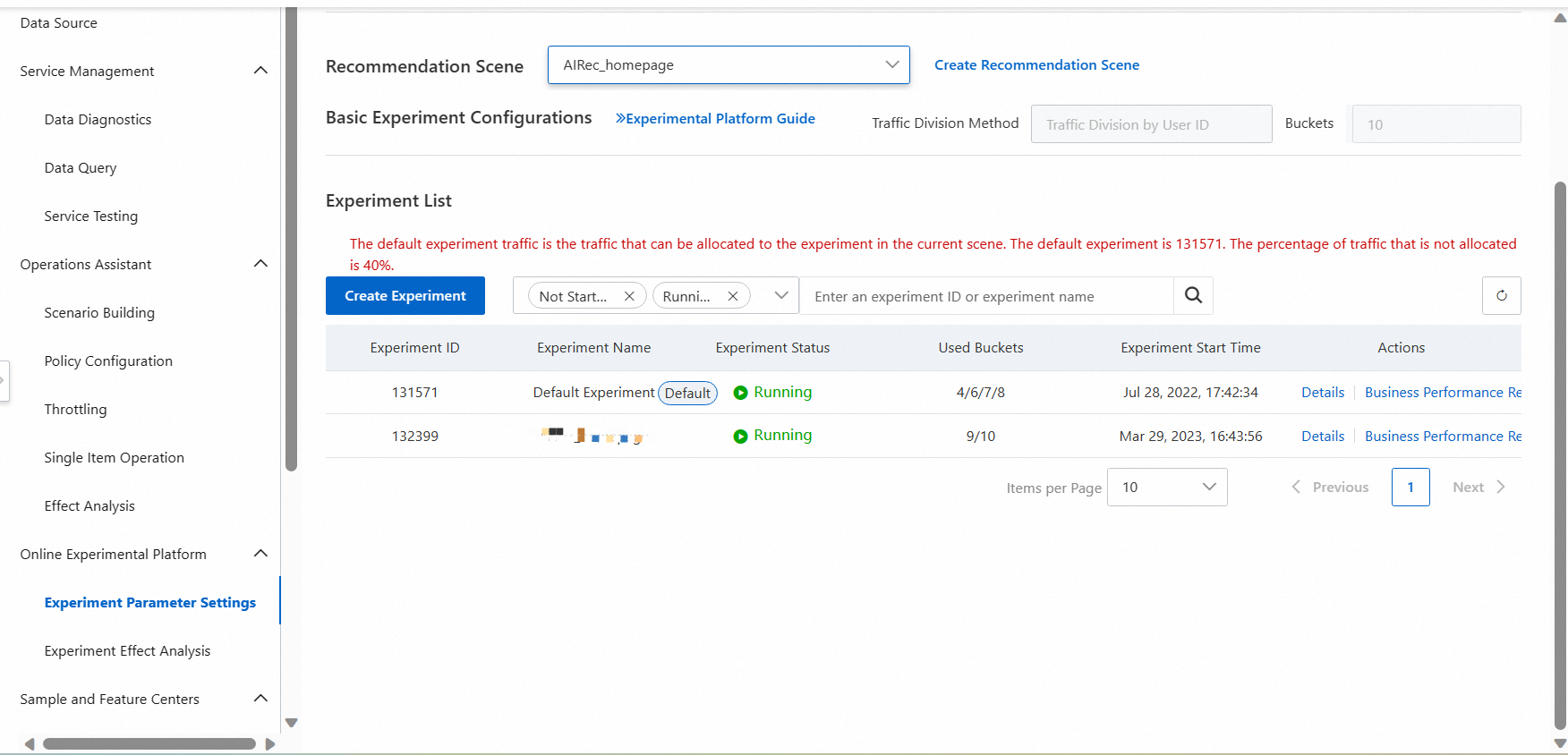

Algorithm optimization procedure (Algorithm Configuration Edition):

You can separately enable the experiment feature for each scenario. You can also perform custom adjustments for filtering algorithms. The experiment feature can be enabled or disabled only for a scenario, instead of globally.

You can perform algorithm optimization on the page that appears after you choose Online Experimental Platform > Experiment Parameter Settings and click Details in the Actions column of an experiment.

A/B testing: In the A/B testing scenario, you can create different experiments and configure different policies for the filtering algorithms of each experiment. Then, you allocate traffic to each experiment and check the recommendation effect of different configurations. You can find the optimal configurations by repeatedly comparing A/B testing results.

Traffic buckets: You can divide the traffic of each scenario into 10 or 20 traffic buckets based on specified rules. These traffic buckets can be randomly allocated to different experiments to test the effect of different policies in small batches.

Experiment configurations: For each experiment, you can customize the usage, parameters, and priority of each filtering algorithm and its child algorithms. For more information, you can view the related topics.
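The relationship between traffic buckets and experiments can be pictured with the following sketch. It is a conceptual illustration only and not the AIRec implementation: the hashing rule, the function names, the `baseline` fallback, and the experiment names are all assumptions.

```python
import hashlib
import random


def bucket_of(user_id, scenario, num_buckets=10):
    """Map a user to a traffic bucket within a scenario (hypothetical rule)."""
    key = f"{scenario}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:8], "big") % num_buckets


def allocate_buckets(bucket_counts, num_buckets=10, seed=0):
    """Randomly hand out buckets to experiments.

    bucket_counts: {experiment_name: number_of_buckets}.
    Returns {bucket_id: experiment_name}; unallocated buckets are omitted.
    """
    if sum(bucket_counts.values()) > num_buckets:
        raise ValueError("experiments request more buckets than exist")

    bucket_ids = list(range(num_buckets))
    # A seeded shuffle keeps the random allocation reproducible.
    random.Random(seed).shuffle(bucket_ids)

    mapping = {}
    position = 0
    for experiment, count in bucket_counts.items():
        for bucket in bucket_ids[position:position + count]:
            mapping[bucket] = experiment
        position += count
    return mapping


def experiment_for(user_id, scenario, mapping, num_buckets=10,
                   default="baseline"):
    """Return the experiment that serves this user, or the default."""
    return mapping.get(bucket_of(user_id, scenario, num_buckets), default)
```

With 10 buckets, giving one bucket to an experiment exposes roughly 10% of the scenario's traffic to it, which is how a new configuration can be tested in a small batch before more buckets are assigned.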

For more information about the operations such as creating an experiment, see the following topics:

If you have custom requirements for algorithm optimization, you can contact us for evaluation.

Effect observation

AIRec provides a series of reports about the preceding evaluation metrics for you to evaluate the recommendation effect. You can view the metrics on the Effect Analysis page in the AIRec console. For more information, see Perform a traffic switchover and check the recommendation effect.

If you use the experimental platform feature for the AIRec instances of Algorithm Configuration Edition, you can also view the comparison charts of the preceding metrics in different experiments on the Experiment Effect Analysis page in the AIRec console. This makes it easy to evaluate the effect of each experiment. For more information, see Experiment effect analysis.