This topic describes how to install KubeRay Operator in an ACK managed Pro cluster and how to enable Simple Log Service and Managed Service for Prometheus for KubeRay to improve log management, system observability, and system availability. After KubeRay Operator is installed, you can manage Ray clusters and applications by creating Kubernetes custom resources.

Prerequisites

An ACK managed Pro cluster that meets the following requirements is created. To create a cluster, see Create an ACK managed cluster. To upgrade a cluster, see Manually upgrade a cluster.

Cluster version: v1.24 or later.

Instance type: at least one node with at least 8 vCPUs and 32 GB of memory.

These minimum specifications are intended for test environments. For production environments, use specifications that match your actual workload. If you require GPU acceleration, configure GPU-accelerated nodes.

For more information about supported ECS instance types, see Instance family.

You have kubectl installed on your local machine and are connected to your Kubernetes cluster. For more information, see Obtain the KubeConfig file of a cluster and connect to the cluster by using kubectl.

Install KubeRay

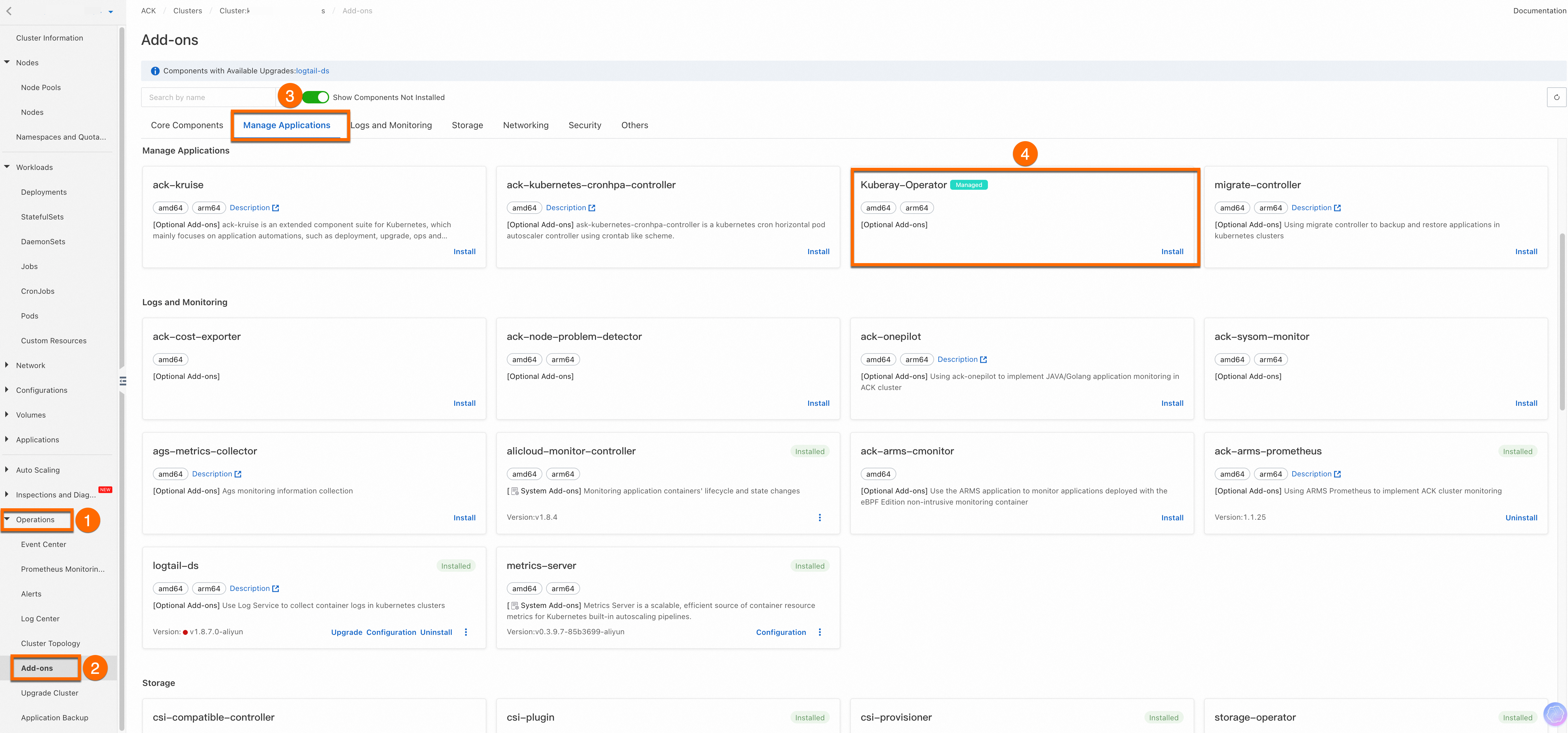

Log on to the ACK console. In the left navigation pane, click Clusters. Click the name of the cluster you created. On the cluster details page, click as indicated in the following figure to install Kuberay-Operator.

KubeRay Operator is in invitational preview. To use it, submit a ticket.
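Once the operator is running, a Ray cluster is declared as a RayCluster custom resource. The following is a minimal sketch, assuming the installed operator serves the `ray.io/v1` API (KubeRay 1.0 or later; earlier releases use `ray.io/v1alpha1`). The cluster name, Ray version, image, and resource sizes are hypothetical placeholders, sized so that one head pod and one worker pod fit on a single node that meets the 8 vCPU / 32 GB minimum. Replace the image with one that your cluster can pull.

```yaml
# Hypothetical minimal RayCluster; assumes the operator serves ray.io/v1.
apiVersion: ray.io/v1
kind: RayCluster
metadata:
  name: raycluster-demo          # hypothetical name; becomes the ray.io/cluster pod label
  namespace: default
spec:
  rayVersion: "2.9.3"            # assumed version; keep in sync with the image tag
  headGroupSpec:
    rayStartParams:
      dashboard-host: "0.0.0.0"  # let the dashboard listen on all interfaces
    template:
      spec:
        containers:
          - name: ray-head
            image: rayproject/ray:2.9.3   # assumed image
            resources:
              requests:
                cpu: "2"
                memory: 8Gi
              limits:
                cpu: "2"
                memory: 8Gi
  workerGroupSpecs:
    - groupName: workers         # hypothetical worker group name
      replicas: 1
      minReplicas: 1
      maxReplicas: 2
      rayStartParams: {}
      template:
        spec:
          containers:
            - name: ray-worker
              image: rayproject/ray:2.9.3 # assumed image
              resources:
                requests:
                  cpu: "2"
                  memory: 8Gi
                limits:
                  cpu: "2"
                  memory: 8Gi
```

Apply the manifest with `kubectl apply -f <file>` and check its status with `kubectl get rayclusters.ray.io`. KubeRay labels the resulting pods with `ray.io/cluster` and `ray.io/node-type`, which the log collection and monitoring configurations in this topic select on.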

Enable log collection for KubeRay

Go to the cluster details page and choose Operations > Log Center > Control Plane Component Logs > Enable Component Log Collection.

Select kuberay-operator from the drop-down list.

Enable log collection for Ray clusters

You can integrate Simple Log Service with a Ray cluster to persist logs.

Create a global AliyunLogConfig object so that the Logtail component in the ACK cluster collects the logs generated by the pods of Ray clusters and delivers them to a Simple Log Service project. The following table describes the key parameters.

Parameter

Description

logPathCollects all logs in the

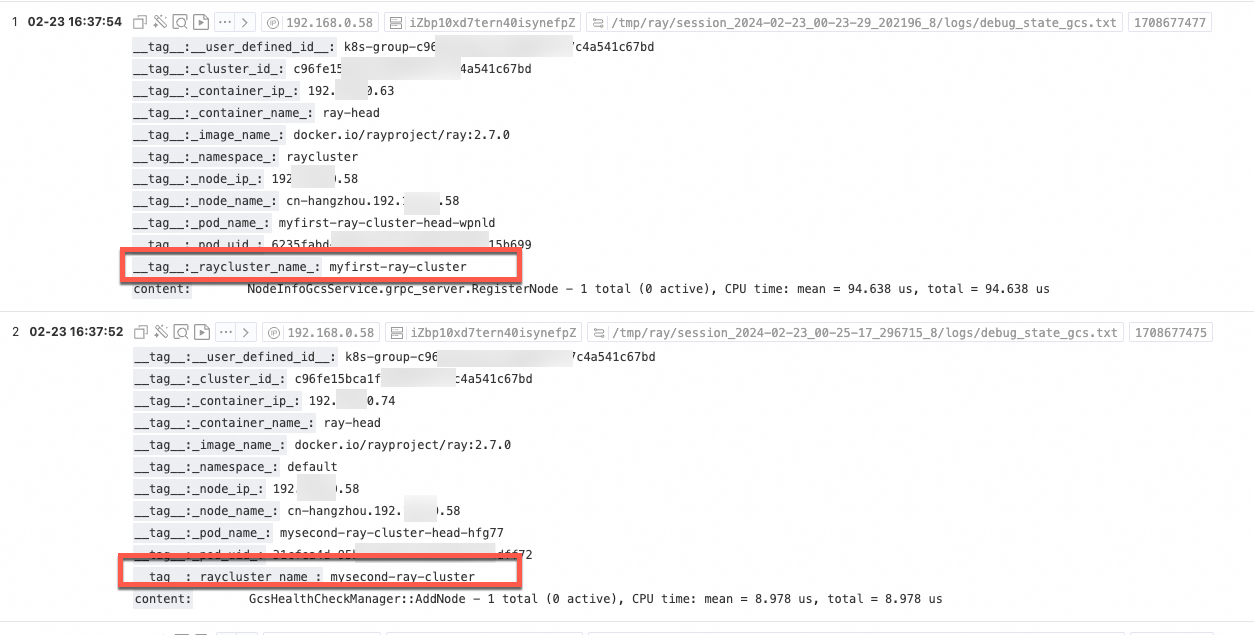

/tmp/ray/session_*-*-*_*/logsdirectory of the pods. You can specify a custom path.advanced.k8s.ExternalK8sLabelTagAdds tags to the collected logs to facilitate retrieval. By default, the

_raycluster_name_and_node_type_tags are added.For more information about the AliyunLogConfig parameters, see Use CRDs to collect container logs in DaemonSet mode. Simple Log Service is a paid service. For more information, see Billing overview.
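A global AliyunLogConfig for Ray logs might look like the following sketch. It assumes the field layout of the `log.alibabacloud.com/v1alpha1` AliyunLogConfig CRD described in Use CRDs to collect container logs in DaemonSet mode, and it reuses the `logPath` and tag settings from the table above. The resource name, the `kube-system` namespace, the file pattern, and the `ray.io/is-ray-node` filter label are assumptions; verify each field against that topic before you apply it.

```yaml
# Sketch of a global AliyunLogConfig for Ray pod logs (field layout assumed).
apiVersion: log.alibabacloud.com/v1alpha1
kind: AliyunLogConfig
metadata:
  name: rayclusters              # hypothetical resource name
  namespace: kube-system         # assumed namespace for cluster-wide log configs
spec:
  # spec.project is omitted; assumed to default to the cluster's log project.
  logstore: rayclusters          # Logstore that receives the Ray logs
  logtailConfig:
    inputType: file              # collect text log files
    configName: rayclusters      # kept equal to metadata.name
    inputDetail:
      logType: common_reg_log    # simple-mode text collection
      logPath: /tmp/ray/session_*-*-*_*/logs
      filePattern: "*.*"         # assumed pattern: files with an extension
      dockerFile: true           # read files inside containers, not on the host
      advanced:
        k8s:
          IncludeK8sLabel:
            ray.io/is-ray-node: "yes"          # assumed label set by KubeRay on Ray pods
          ExternalK8sLabelTag:
            ray.io/cluster: "_raycluster_name_"  # pod label -> log tag
            ray.io/node-type: "_node_type_"
```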

View logs collected from Ray clusters.

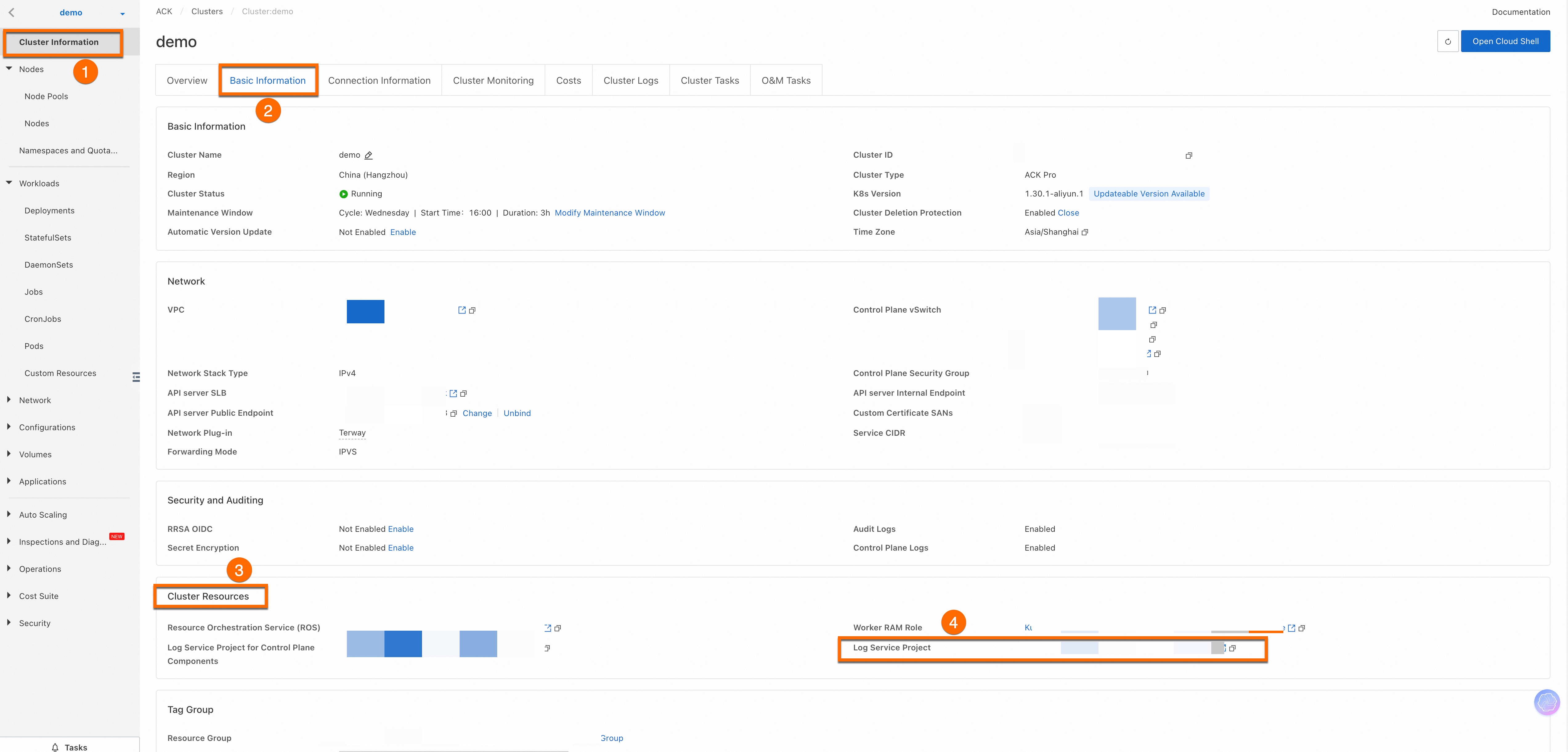

Log on to the ACK console. In the left-side navigation pane, click Clusters. Click the name of the cluster that you want to manage. On the cluster details page, click the callouts in the following figure in sequence. Choose Cluster Information > Basic Information > Cluster Resources. Then, click the hyperlink on the right side of Log Service Project to go to the details page of the Simple Log Service project.

Select the Logstore that corresponds to `rayclusters` and view the log content. You can filter the logs of different Ray clusters by tags, such as `_raycluster_name_`.

Enable monitoring for Ray clusters

You can enable Managed Service for Prometheus for a Ray cluster. For more information about Managed Service for Prometheus, see Connect to and configure Managed Service for Prometheus. For more information about the billing of Managed Service for Prometheus, see Managed Service for Prometheus instance billing.

Create a PodMonitor and ServiceMonitor to collect metrics from the Ray cluster.
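Both monitors locate scrape targets by the port name `metrics`, not by a port number. KubeRay normally adds a port with this name (8080, its default metrics export port) to the Ray containers and to the head Service. If you declare container ports explicitly in a RayCluster, keep that name on the metrics port, as in the following fragment, which is a sketch that assumes the default port has not been changed.

```yaml
# Fragment of the Ray container spec in a RayCluster head or worker pod template.
# The PodMonitor and ServiceMonitor match this port by name, so keep "metrics".
ports:
  - name: metrics
    containerPort: 8080   # KubeRay's default metrics export port (assumed unchanged)
```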

Apply the following manifest to create a PodMonitor, for example by saving it to a file and running `kubectl apply -f <file>`:

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  annotations:
    arms.prometheus.io/discovery: 'true'
    arms.prometheus.io/resource: arms
  name: ray-workers-monitor
  namespace: arms-prom
  labels:
    # `release: $HELM_RELEASE`: Prometheus can only detect PodMonitor with this label.
    release: prometheus
    #ray.io/cluster: raycluster-kuberay # $RAY_CLUSTER_NAME: "kubectl get rayclusters.ray.io"
spec:
  namespaceSelector:
    any: true
  jobLabel: ray-workers
  # Only select Kubernetes Pods with "matchLabels".
  selector:
    matchLabels:
      ray.io/node-type: worker
  # A list of endpoints allowed as part of this PodMonitor.
  podMetricsEndpoints:
    - port: metrics
      relabelings:
        # Copy the pod's ray.io/cluster label into the ray_io_cluster metric label.
        - action: replace
          regex: (.+)
          replacement: $1
          separator: ;
          sourceLabels:
            - __meta_kubernetes_pod_label_ray_io_cluster
          targetLabel: ray_io_cluster
```

Apply the following manifest to create a ServiceMonitor:

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  annotations:
    arms.prometheus.io/discovery: 'true'
    arms.prometheus.io/resource: arms
  name: ray-head-monitor
  namespace: arms-prom
  labels:
    # `release: $HELM_RELEASE`: Prometheus can only detect ServiceMonitor with this label.
    release: prometheus
spec:
  namespaceSelector:
    any: true
  jobLabel: ray-head
  # Only select Kubernetes Services with "matchLabels".
  selector:
    matchLabels:
      ray.io/node-type: head
  # A list of endpoints allowed as part of this ServiceMonitor.
  endpoints:
    - port: metrics
      path: /metrics
  targetLabels:
    - ray.io/cluster
```

Log on to the Application Real-Time Monitoring Service (ARMS) console and view resource integration information.

Log on to the ARMS console. In the left-side navigation pane, click Integration Center. Use the search bar to find Ray (②), then select it from the results (③). In the Ray panel, select the cluster you created and click OK.

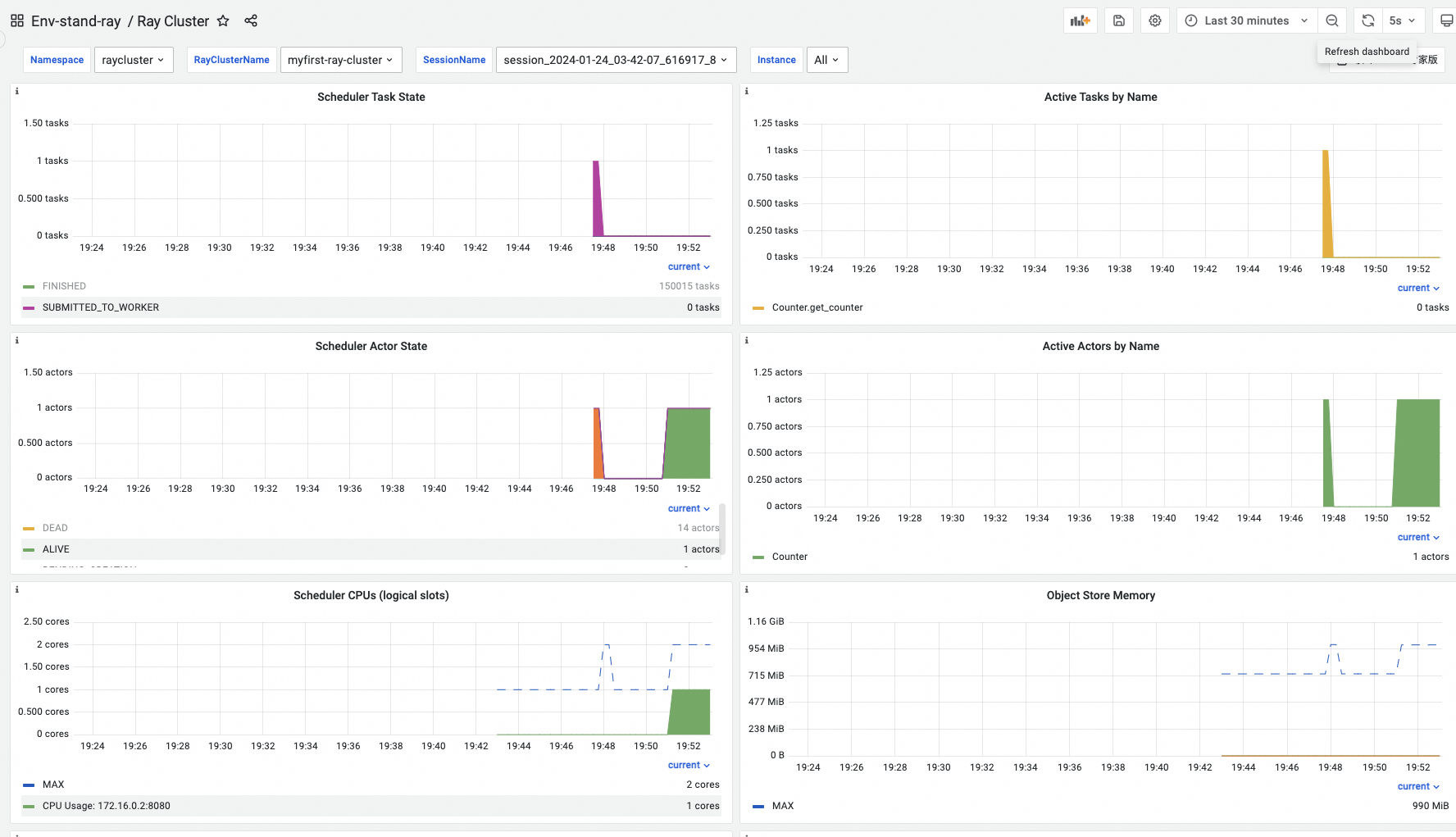

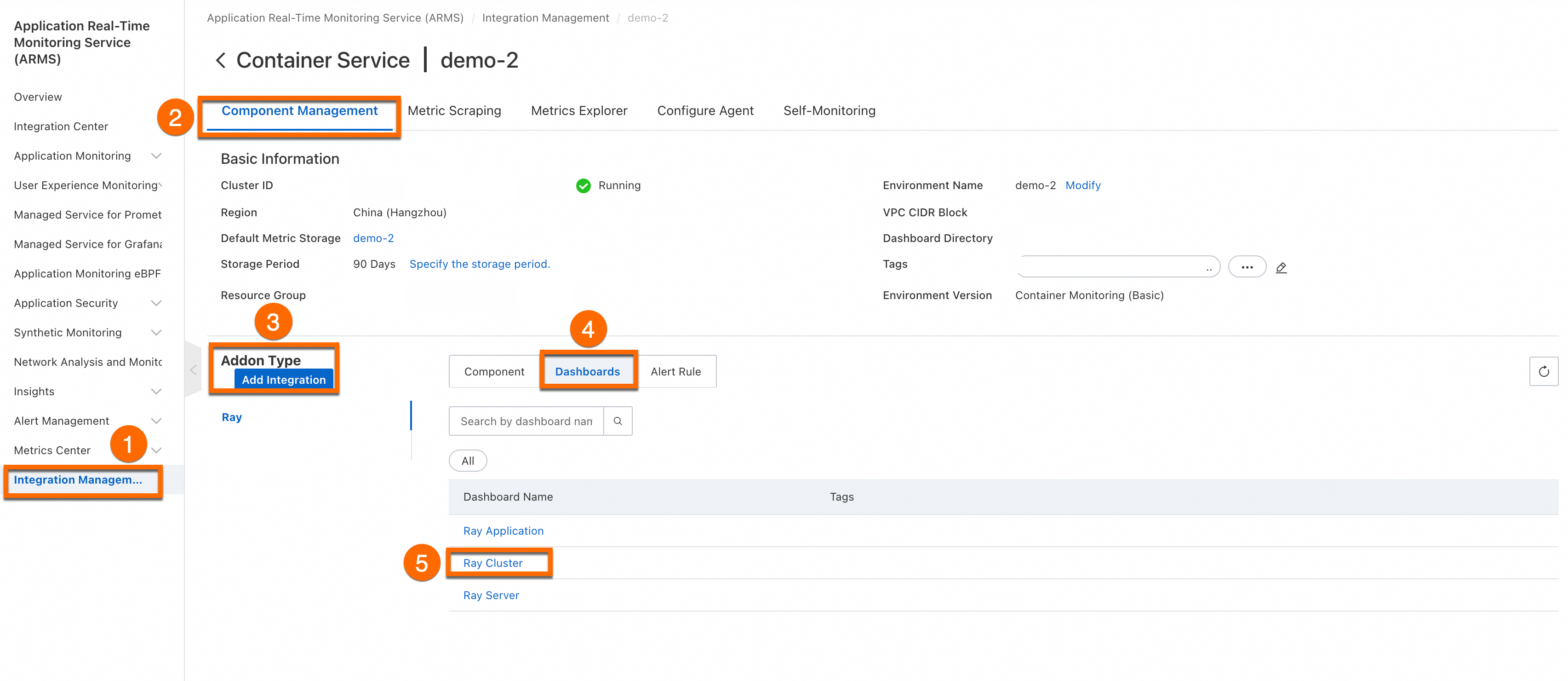

After the ACK cluster is integrated with Managed Service for Prometheus, click Integration Management in the left-side navigation pane, and then click the name of the target environment. On the Component Management tab, click Dashboards in the Addon Type section, and then click Ray Cluster.

Specify Namespace, RayClusterName, and SessionName to filter the monitoring data of tasks running in the Ray clusters.