Container Service for Kubernetes (ACK) provides the network diagnostics feature to help you troubleshoot common network issues, such as connection issues between pods, between an ACK cluster and the Internet, and between a LoadBalancer Service and the Internet. This topic describes how the network diagnostics feature works and how to use this feature to troubleshoot common network connection issues.

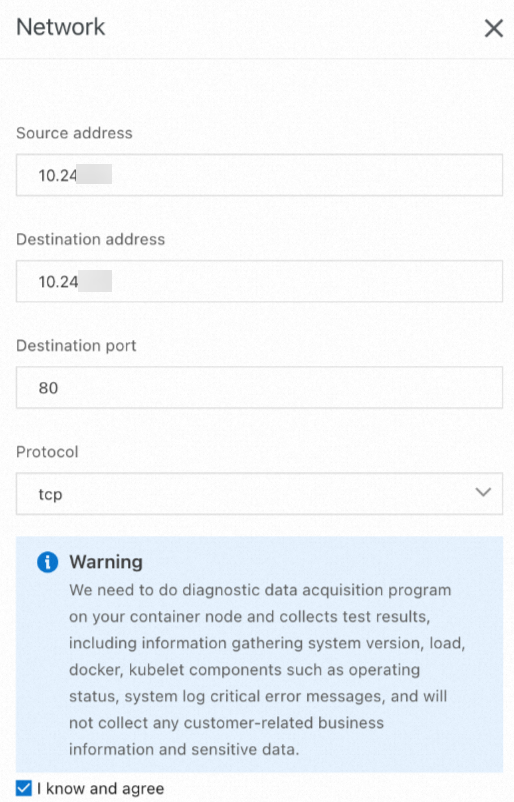

When you use the network diagnostics feature, ACK runs a data collection program on each node in the cluster and collects the diagnostic results. The program collects key error messages from the system log and operational information, such as the system version, load, and information about Docker and kubelet. The data collection program does not collect business information or sensitive data.

Prerequisites

An ACK managed cluster is created.

Introduction to network diagnostics

The network diagnostics feature in the ACK console allows you to specify the source and destination IP addresses, destination port, and protocol of a faulty connection to quickly diagnose the connection. You do not need knowledge about the container network architecture, network plug-ins, or system kernel maintenance to use the network diagnostics feature.

The network diagnostics feature is developed based on the open source KubeSkoop project. KubeSkoop is a Kubernetes networking diagnostic tool that supports different network plug-ins and infrastructure as a service (IaaS) providers. You can use KubeSkoop to diagnose common network issues in Kubernetes clusters, and to monitor and analyze the critical path of the kernel by using extended Berkeley Packet Filter (eBPF). This topic introduces only network diagnostics. For more information about in-depth monitoring and analysis, see Use ACK Net Exporter to troubleshoot network issues.

How the network diagnostics feature works

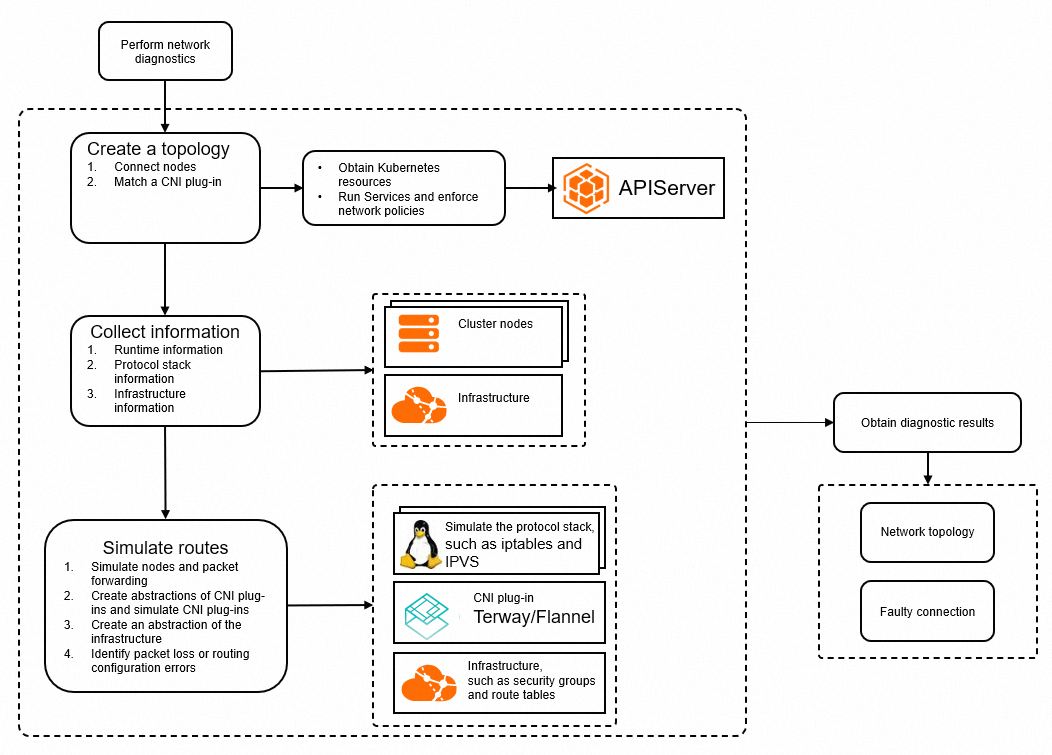

Create a topology: The feature automatically creates a topology based on the network information that you provide and the information collected from the ACK cluster, including the information about pods, nodes, Services, and network policies.

Collect information: The network diagnostics feature collects information about the runtime, network stack, and network infrastructure. The information is used for network troubleshooting and analysis.

Simulate network routes: The network diagnostics feature runs commands on Elastic Compute Service (ECS) instances or deploys a collector pod in the ACK cluster to collect the network stack information of the nodes or containers that are diagnosed, including network devices, sysctl, iptables, and IPVS. The feature also collects information about route tables, security groups, and NAT gateways in the cloud. The system compares the collected information with the expected network configurations to identify network configuration issues. For example, the system simulates iptables and routes, checks the status of network devices, and verifies the route tables and security group rules in the cloud.

Obtain diagnostic results: The network diagnostics feature compares the actual network configurations with the expected configurations, and highlights the abnormal nodes and network issues in a network topology.
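The comparison of collected settings against expected settings can be pictured with a small sketch. This is only an illustration of the idea, not the feature's implementation: the helper name `check_sysctl` is hypothetical, and `net.ipv4.ip_forward` with an expected value of 1 is an assumed example, because this topic does not list which settings are checked.

```shell
# check_sysctl KEY EXPECTED (hypothetical helper)
# Reads the actual kernel parameter with `sysctl -n` and compares it with
# the expected value. Returns non-zero on a mismatch.
check_sysctl() {
  actual=$(sysctl -n "$1")
  if [ "$actual" = "$2" ]; then
    echo "ok: $1 = $actual"
  else
    echo "mismatch: $1 = $actual, expected $2"
    return 1
  fi
}

# Example (assumed setting and value, run on a node):
#   check_sysctl net.ipv4.ip_forward 1
```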

Work with the network diagnostics feature

Limits

The pods to be diagnosed must be in the Running state.

LoadBalancer Services for external access support only Layer 4 Classic Load Balancer (CLB) instances, and each Service can have at most 10 backend pods.

ACK Serverless clusters and virtual nodes do not support network diagnostics.
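Before you start a diagnosis, you may want to check the first two limits from the command line. The following sketch assumes that kubectl is configured for the cluster. The helper names, namespace, pod name, and Service name are hypothetical, and counting ready endpoint addresses is only an approximation of the number of backend pods.

```shell
# pod_phase NAMESPACE POD: print the pod phase, for example Running or Pending.
pod_phase() {
  kubectl get pod "$2" -n "$1" -o jsonpath='{.status.phase}'
}

# is_diagnosable NAMESPACE POD: succeed only if the pod is in the Running state.
is_diagnosable() {
  [ "$(pod_phase "$1" "$2")" = "Running" ]
}

# backend_count NAMESPACE SERVICE: count the ready endpoint addresses of a
# Service as an approximation of its number of backend pods.
backend_count() {
  kubectl get endpoints "$2" -n "$1" \
    -o jsonpath='{.subsets[*].addresses[*].ip}' | wc -w | tr -d ' '
}

# Example (hypothetical names):
#   is_diagnosable default my-app-0 && echo "pod is Running"
#   [ "$(backend_count default my-lb-svc)" -le 10 ] && echo "within the limit"
```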

Procedure

Log on to the ACK console. In the left-side navigation pane, click Clusters.

On the Clusters page, click the name of the cluster that you want to diagnose. In the left-side navigation pane, choose .

On the Diagnosis page, click Network diagnosis. On the Network diagnosis page, click Diagnosis.

In the Network panel, specify Source address, Destination address, Destination port, and Protocol, read the warning and select I know and agree, and then click Create diagnosis.

For more information about the parameters, see Parameters for common network issue diagnostics.

On the Diagnosis result page, you can view the diagnostic result. The Packet paths section displays all nodes that are diagnosed.

Abnormal nodes are highlighted. For more information about common diagnostic results, see Common diagnostic results and solutions.

Parameters for common network issue diagnostics

Scenario 1: Diagnose networks between pods and nodes

You can use the network diagnostics feature to diagnose networks between pods or between pods and nodes. The following table describes the parameters.

| Parameter | Description |
| --- | --- |
| Source address | The IP address of a pod or node. |
| Destination address | The IP address of another pod or node. |
| Destination port | The port to be diagnosed. |
| Protocol | The protocol to be diagnosed. |
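If you do not have the addresses at hand, one way to look them up is with kubectl. This is a minimal sketch that assumes kubectl access to the cluster. The helper names and the example pod and node names are hypothetical.

```shell
# pod_ip NAMESPACE POD: print the IP address assigned to the pod.
pod_ip() {
  kubectl get pod "$2" -n "$1" -o jsonpath='{.status.podIP}'
}

# node_internal_ip NODE: print the InternalIP address of the node.
node_internal_ip() {
  kubectl get node "$1" \
    -o jsonpath='{.status.addresses[?(@.type=="InternalIP")].address}'
}

# Example (hypothetical names):
#   pod_ip default my-app-0
#   node_internal_ip my-node-1
```

The printed values can be entered as Source address or Destination address in the console.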

Scenario 2: Diagnose networks between pods and Services or between nodes and Services

You can specify the cluster IP of a Service to check whether a node or pod can access a Service and verify the network configurations of the Service. The following table describes the parameters.

| Parameter | Description |
| --- | --- |
| Source address | The IP address of a pod or node. |
| Destination address | The cluster IP of a Service. |
| Destination port | The port to be diagnosed. |
| Protocol | The protocol to be diagnosed. |
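The cluster IP and the ports of a Service can be read from the Service object. The following sketch assumes kubectl access to the cluster. The helper names and the example Service name are hypothetical.

```shell
# service_cluster_ip NAMESPACE SERVICE: print the cluster IP of the Service.
service_cluster_ip() {
  kubectl get svc "$2" -n "$1" -o jsonpath='{.spec.clusterIP}'
}

# service_ports NAMESPACE SERVICE: print one PORT/PROTOCOL pair per line.
service_ports() {
  kubectl get svc "$2" -n "$1" \
    -o jsonpath='{range .spec.ports[*]}{.port}/{.protocol}{"\n"}{end}'
}

# Example (hypothetical Service name):
#   service_cluster_ip default my-svc
#   service_ports default my-svc
```

Use one of the printed port and protocol pairs as Destination port and Protocol.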

Scenario 3: Diagnose DNS resolution

When the destination address is a domain name, check the DNS service in the cluster in addition to the connection between the source and destination addresses. You can use the network diagnostics feature to check whether the pod can access the kube-dns Service in the kube-system namespace.

Run the following command to query the cluster IP of the kube-dns Service in the kube-system namespace:

kubectl get svc -n kube-system kube-dns

Expected output:

NAME       TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                  AGE
kube-dns   ClusterIP   172.16.XX.XX   <none>        53/UDP,53/TCP,9153/TCP   6d

The output indicates that the cluster IP of the kube-dns Service is 172.16.XX.XX. Specify the cluster IP of the kube-dns Service as the destination address and configure other parameters.

| Parameter | Description |
| --- | --- |
| Source address | The IP address of a pod or node. |
| Destination address | The cluster IP of the kube-dns Service. |
| Destination port | 53 |
| Protocol | udp |
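As a complement to the console diagnosis, you can send a test query to the kube-dns Service from inside the pod. This is a sketch under assumptions: kubectl access to the cluster, a container image that includes nslookup, and hypothetical helper, namespace, and pod names.

```shell
# kube_dns_ip: print the cluster IP of the kube-dns Service.
kube_dns_ip() {
  kubectl get svc kube-dns -n kube-system -o jsonpath='{.spec.clusterIP}'
}

# dns_probe NAMESPACE POD NAME: resolve NAME from inside the pod by querying
# the kube-dns cluster IP directly. Requires nslookup in the container image.
dns_probe() {
  kubectl exec -n "$1" "$2" -- nslookup "$3" "$(kube_dns_ip)"
}

# Example (hypothetical pod name):
#   dns_probe default my-app-0 kubernetes.default.svc.cluster.local
```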

Scenario 4: Diagnose networks between pods or nodes and the Internet

You can use the network diagnostics feature to diagnose networks between pods or nodes and public IP addresses. If the destination address is a domain name, you need to obtain the public IP address that is mapped to the domain name. The following table describes the parameters.

| Parameter | Description |
| --- | --- |
| Source address | The IP address of a pod or node. |
| Destination address | A public IP address. |
| Destination port | The port to be diagnosed. |
| Protocol | The protocol to be diagnosed. |
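To obtain the public IP address for a domain name, you can resolve the name yourself. The following sketch assumes that dig is installed. The helper names are hypothetical. Note that the answer returned on your workstation may differ from what a pod in the cluster would resolve.

```shell
# first_ipv4: read lines on stdin and print the first one that looks like
# an IPv4 address.
first_ipv4() {
  grep -E '^([0-9]{1,3}\.){3}[0-9]{1,3}$' | head -n 1
}

# resolve_ipv4 DOMAIN: print the first A record of the domain.
# `dig +short` may print CNAME targets before addresses, so filter them out.
resolve_ipv4() {
  dig +short "$1" A | first_ipv4
}

# Example:
#   resolve_ipv4 example.com
```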

Scenario 5: Diagnose networks between the Internet and a LoadBalancer Service

If external access to a LoadBalancer Service fails, you can specify the public IP address as the source address and the external IP address of the LoadBalancer Service as the destination address to diagnose the connection. The following table describes the parameters.

| Parameter | Description |
| --- | --- |
| Source address | A public IP address. |
| Destination address | The external IP address of a LoadBalancer Service. |
| Destination port | The port to be diagnosed. |
| Protocol | The protocol to be diagnosed. |
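The external IP address of a LoadBalancer Service is recorded in the status of the Service. A minimal sketch for reading it, assuming kubectl access to the cluster and hypothetical helper and Service names:

```shell
# service_type NAMESPACE SERVICE: print the Service type, for example LoadBalancer.
service_type() {
  kubectl get svc "$2" -n "$1" -o jsonpath='{.spec.type}'
}

# lb_external_ip NAMESPACE SERVICE: print the first external IP address that
# the load balancer reports for the Service.
lb_external_ip() {
  kubectl get svc "$2" -n "$1" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Example (hypothetical Service name):
#   [ "$(service_type default my-lb-svc)" = "LoadBalancer" ] && lb_external_ip default my-lb-svc
```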

Common diagnostic results and solutions

| Diagnostic result | Description | Solution |
| --- | --- | --- |
| pod container ... is not ready | The containers in the pod are not ready. | Check the health status of the pod and fix the pod. |
| network policy ... deny the packet from ... | Packets are blocked by a network policy. | Modify the network policy. |
| no process listening on ... | No process in the container or on the node listens on the port with the specified protocol. | Check whether the listener process runs as expected and verify the parameters for network diagnostics, such as the port and protocol. |
| no route to host .../invalid route ... for packet ... | No route is found or the route does not point to the desired destination. | Check whether the network plug-in works as expected. |
| ... do not have same security group | Two ECS instances use different security groups. Packets may be discarded. | Configure the ECS instances to use the same security group. |
| security group ... not allow packet ... | Packets are blocked by the security group of the ECS instance. | Modify the security group rule to accept packets from the specified IP address. |
|  | The route table does not contain a route that points to the destination address. | If the destination address is a public IP address, check the configurations of the Internet NAT gateway. |
|  | The route in the route table does not point to the desired destination address. | If the destination address is a public IP address, check the configurations of the Internet NAT gateway. |
| no snat entry on nat gateway ... | The desired SNAT entry is not found on the Internet NAT gateway. | Check the SNAT configurations of the Internet NAT gateway. |
| backend ... health status for port ..., not "normal" | Some backend pods of the CLB instance fail to pass health checks. | Check whether the desired backend pods are associated with the CLB instance and whether the application running in the associated backend pods is healthy. |
| cannot find listener port ... for slb ... | The listening port is not found on the CLB instance. | Check the configurations of the LoadBalancer Service and the parameters for network diagnostics, such as the port and protocol. |
| status of loadbalancer for ... port ... not "running" | The listener of the CLB instance is not in the Running state. | Check whether the listening port is normal. |
| service ... has no valid endpoint | The Service does not have an endpoint. | Check whether the label selector of the Service functions as expected, whether the endpoint of the Service exists, and whether the endpoint is healthy. For more information about internal access to a LoadBalancer Service, see The CLB instance cannot be accessed from within the cluster. |
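For some of the results above, a follow-up command can help you confirm the cause. The following sketch maps a result message to one possible kubectl command. The helper `suggest_check` is hypothetical and not part of ACK or KubeSkoop, the placeholders in angle brackets must be replaced with your own names, and the `ss` command is available only if the container image includes it.

```shell
# suggest_check MESSAGE: print one possible follow-up command for a
# diagnostic result message (hypothetical helper, illustrative mapping only).
suggest_check() {
  case "$1" in
    *"is not ready"*)
      echo "kubectl describe pod <pod> -n <namespace>" ;;
    *"network policy"*)
      echo "kubectl get networkpolicy -n <namespace> -o yaml" ;;
    *"no process listening"*)
      echo "kubectl exec <pod> -n <namespace> -- ss -lntu" ;;
    *"has no valid endpoint"*)
      echo "kubectl get endpoints <service> -n <namespace>" ;;
    *)
      echo "see the Solution column for this result" ;;
  esac
}

# Example:
#   suggest_check "service default/my-svc has no valid endpoint"
```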