Before you can use an ALB Ingress to access services in an ACK dedicated cluster, you must grant the required permissions to the ALB Ingress controller. This topic describes how to grant permissions to the ALB Ingress controller component in an ACK dedicated cluster.

Prerequisites

Usage notes

Granting permissions to the ALB Ingress controller is required only for ACK dedicated clusters. In ACK managed clusters and ACK Serverless clusters, you can use an ALB Ingress without granting these permissions.

Procedure

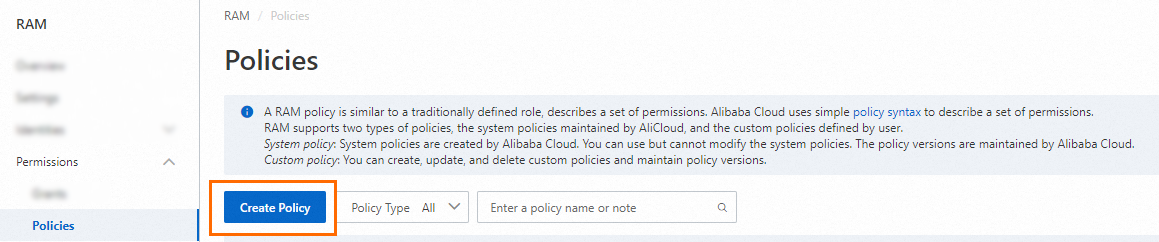

Create a custom policy.

Log on to the RAM console as a RAM user who has administrative rights.

In the left-side navigation pane, choose Permissions > Policies.

On the Policies page, click Create Policy.

On the Create Policy page, click the JSON tab.

Enter the following policy document, and then click OK.

```json
{
  "Version": "1",
  "Statement": [
    {
      "Action": [
        "alb:EnableLoadBalancerIpv6Internet",
        "alb:DisableLoadBalancerIpv6Internet",
        "alb:CreateAcl",
        "alb:DeleteAcl",
        "alb:ListAcls",
        "alb:ListAclRelations",
        "alb:AddEntriesToAcl",
        "alb:AssociateAclsWithListener",
        "alb:ListAclEntries",
        "alb:RemoveEntriesFromAcl",
        "alb:DissociateAclsFromListener",
        "alb:TagResources",
        "alb:UnTagResources",
        "alb:ListServerGroups",
        "alb:ListServerGroupServers",
        "alb:AddServersToServerGroup",
        "alb:RemoveServersFromServerGroup",
        "alb:ReplaceServersInServerGroup",
        "alb:CreateLoadBalancer",
        "alb:DeleteLoadBalancer",
        "alb:UpdateLoadBalancerAttribute",
        "alb:UpdateLoadBalancerEdition",
        "alb:EnableLoadBalancerAccessLog",
        "alb:DisableLoadBalancerAccessLog",
        "alb:EnableDeletionProtection",
        "alb:DisableDeletionProtection",
        "alb:ListLoadBalancers",
        "alb:GetLoadBalancerAttribute",
        "alb:ListListeners",
        "alb:CreateListener",
        "alb:GetListenerAttribute",
        "alb:UpdateListenerAttribute",
        "alb:ListListenerCertificates",
        "alb:AssociateAdditionalCertificatesWithListener",
        "alb:DissociateAdditionalCertificatesFromListener",
        "alb:DeleteListener",
        "alb:CreateRule",
        "alb:DeleteRule",
        "alb:UpdateRuleAttribute",
        "alb:CreateRules",
        "alb:UpdateRulesAttribute",
        "alb:DeleteRules",
        "alb:ListRules",
        "alb:UpdateListenerLogConfig",
        "alb:CreateServerGroup",
        "alb:DeleteServerGroup",
        "alb:UpdateServerGroupAttribute",
        "alb:UpdateLoadBalancerAddressTypeConfig",
        "alb:AttachCommonBandwidthPackageToLoadBalancer",
        "alb:DetachCommonBandwidthPackageFromLoadBalancer",
        "alb:UpdateServerGroupServersAttribute",
        "alb:MoveResourceGroup",
        "alb:DescribeZones",
        "alb:ListAScripts",
        "alb:CreateAScripts",
        "alb:UpdateAScripts",
        "alb:DeleteAScripts"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": "ram:CreateServiceLinkedRole",
      "Resource": "*",
      "Effect": "Allow",
      "Condition": {
        "StringEquals": {
          "ram:ServiceName": [
            "alb.aliyuncs.com",
            "audit.log.aliyuncs.com",
            "nlb.aliyuncs.com",
            "logdelivery.alb.aliyuncs.com"
          ]
        }
      }
    },
    {
      "Action": [
        "log:GetProductDataCollection",
        "log:OpenProductDataCollection",
        "log:CloseProductDataCollection"
      ],
      "Resource": "acs:log:*:*:project/*/logstore/alb_*",
      "Effect": "Allow"
    },
    {
      "Action": [
        "yundun-cert:DescribeSSLCertificateList",
        "yundun-cert:DescribeSSLCertificatePublicKeyDetail",
        "yundun-cert:CreateSSLCertificateWithName",
        "yundun-cert:DeleteSSLCertificate"
      ],
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": "vpc:DescribeVSwitches",
      "Resource": "*",
      "Effect": "Allow"
    },
    {
      "Action": "ecs:DescribeNetworkInterfaces",
      "Resource": "*",
      "Effect": "Allow"
    }
  ]
}
```

Note: Separate multiple policy statements with commas (,).

In the Create Policy dialog box, enter a Policy Name and Description, and then click OK.
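If you edit the policy document, for example to append these statements to an existing policy (the case the comma note above is about), a quick local check can catch JSON syntax errors before you paste the document into the console. The following Python sketch is a hypothetical helper, not part of RAM: it parses the document and checks only the structure used in this topic (Version set to "1", and each statement carrying Effect, Action, and Resource). It does not validate action names or the full RAM policy grammar.

```python
import json
import sys
from typing import List

# Keys that every statement in the policy above carries.
REQUIRED_STATEMENT_KEYS = ("Effect", "Action", "Resource")


def check_policy(document: str) -> List[str]:
    """Return a list of structural problems in a policy document (empty if none).

    Hypothetical helper: checks only the structure used in this topic,
    not the complete RAM policy grammar.
    """
    try:
        policy = json.loads(document)
    except json.JSONDecodeError as exc:
        # A missing comma between two statements is reported here.
        return [f"invalid JSON: {exc}"]
    if not isinstance(policy, dict):
        return ["policy must be a JSON object"]

    problems: List[str] = []
    if policy.get("Version") != "1":
        problems.append('Version should be "1"')
    statements = policy.get("Statement")
    if not isinstance(statements, list) or not statements:
        problems.append("Statement must be a non-empty list")
        return problems

    for i, stmt in enumerate(statements):
        if not isinstance(stmt, dict):
            problems.append(f"statement {i}: must be a JSON object")
            continue
        for key in REQUIRED_STATEMENT_KEYS:
            if key not in stmt:
                problems.append(f"statement {i}: missing {key}")
        if "Effect" in stmt and stmt["Effect"] not in ("Allow", "Deny"):
            problems.append(f"statement {i}: Effect must be Allow or Deny")
    return problems


if __name__ == "__main__":
    # Usage (hypothetical file name): python check_policy.py policy.json
    if len(sys.argv) > 1:
        with open(sys.argv[1], encoding="utf-8") as f:
            issues = check_policy(f.read())
        print("\n".join(issues) or "OK")
```

An empty list means the document has the expected shape. RAM still performs its own validation when you click OK.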

Grant permissions to the worker RAM role of the cluster.

Log on to the ACK console. In the left navigation pane, click Clusters.

On the Clusters page, click the name of the target cluster, and then click the Basic Information tab.

On the Basic Information tab, click the link to the right of Worker RAM Role to go to the RAM console.

On the Permissions tab, click Grant Permission. In the Policy section of the Grant Permission panel, find and select the custom policy that you created in the previous step.

Click Grant Permissions.

Click Close.
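If you prefer to script this step, the same grant corresponds to the RAM AttachPolicyToRole API, where custom policies use the PolicyType value Custom. The Python sketch below only builds and prints the Alibaba Cloud CLI command by default. It assumes the `aliyun` CLI is installed and configured with credentials that can manage RAM. The policy name and role name are hypothetical placeholders: substitute the name you gave the policy and the Worker RAM Role shown on the cluster's Basic Information tab.

```python
import shlex
import subprocess
from typing import List


def attach_policy_command(policy_name: str, role_name: str) -> List[str]:
    """Build the aliyun CLI call that attaches a custom policy to a RAM role."""
    return [
        "aliyun", "ram", "AttachPolicyToRole",
        "--PolicyType", "Custom",      # the policy created above is a custom policy
        "--PolicyName", policy_name,
        "--RoleName", role_name,
    ]


def run(cmd: List[str], dry_run: bool = True) -> None:
    """Print the command (dry run) or execute it, raising on a non-zero exit."""
    if dry_run:
        print(shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical names; replace them with your own values.
    run(attach_policy_command("alb-ingress-controller-policy", "KubernetesWorkerRole-example"))
```

Keeping `dry_run=True` as the default lets you review the exact command before it changes any permissions.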

Confirm that an instance RAM role is attached to the worker nodes.

Return to the ACK console. In the left-side navigation pane of the cluster details page, choose Nodes > Nodes.

On the Nodes page, click the instance ID of the target node, for example, i-2ze5d2qi9iy90pzb****.

On the instance details page, click the Instance Details tab. In the Other Information section, check whether a RAM role is displayed to the right of RAM Role.

If no RAM role is attached, attach a RAM role to the ECS instance. For more information, see Detach or change an instance RAM role.
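You can also check this from the node itself through the ECS instance metadata service. The Python sketch below is a hypothetical helper that assumes it runs on the worker node (for example, over SSH), that the metadata service at `100.100.100.200` accepts requests without an access token (normal mode), and that the `ram/security-credentials/` path returns the attached role name as plain text. An HTTP error, typically a 404, or a connection failure is treated as "no role found".

```python
import urllib.request
from typing import Optional

# ECS instance metadata path that lists the attached instance RAM role.
ROLE_URL = "http://100.100.100.200/latest/meta-data/ram/security-credentials/"


def parse_role_name(body: str) -> Optional[str]:
    """Return the role name from the metadata response body, or None if empty."""
    name = body.strip()
    return name or None


def attached_ram_role(url: str = ROLE_URL, timeout: float = 2.0) -> Optional[str]:
    """Query the metadata service; return the role name, or None if none is found."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return parse_role_name(resp.read().decode("utf-8"))
    except OSError:
        # HTTPError (for example, 404), URLError, and socket timeouts are all
        # OSError subclasses; treat any of them as "no role found".
        return None


if __name__ == "__main__":
    role = attached_ram_role()
    print(role if role else "No instance RAM role found")
```

If the script reports no role, attach one as described above before you continue.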

Delete the alb-ingress-controller pod so that it is recreated, and then confirm that the component is working correctly.

Important: Perform this operation during off-peak hours.

Query the name of the alb-ingress-controller pod.

```shell
kubectl -n kube-system get pod | grep alb-ingress-controller
```

Expected output:

```
alb-ingress-controller-***   1/1     Running   0          60s
```

Delete the alb-ingress-controller pod.

Replace `alb-ingress-controller-***` with the pod name that you obtained in the previous step.

```shell
kubectl -n kube-system delete pod alb-ingress-controller-***
```

Expected output:

```
pod "alb-ingress-controller-***" deleted
```

After a few minutes, check the status of the recreated pod.

```shell
kubectl -n kube-system get pod | grep alb-ingress-controller
```

Expected output:

```
alb-ingress-controller-***2   1/1     Running   0          60s
```

The output shows that the recreated pod is in the Running state with all of its containers ready (1/1), which means that the component is working correctly.
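To script this final check, you can parse the table that kubectl prints. The following Python sketch is a hypothetical helper that assumes the default `kubectl get pod` columns (NAME, READY, STATUS, RESTARTS, AGE). It treats a pod as healthy when STATUS is Running and every container counted in READY is ready.

```python
from typing import Dict, List


def controller_pods(kubectl_output: str,
                    prefix: str = "alb-ingress-controller-") -> List[Dict[str, str]]:
    """Parse `kubectl get pod` table output and return the controller pods.

    Works with or without the NAME header line (grep removes it).
    """
    pods = []
    for line in kubectl_output.splitlines():
        fields = line.split()
        # Default columns: NAME READY STATUS RESTARTS AGE
        if len(fields) < 5 or not fields[0].startswith(prefix):
            continue
        pods.append({"name": fields[0], "ready": fields[1],
                     "status": fields[2], "restarts": fields[3]})
    return pods


def is_healthy(pod: Dict[str, str]) -> bool:
    """Healthy means Running with all containers ready, for example 1/1."""
    ready, total = pod["ready"].split("/")
    return pod["status"] == "Running" and ready == total


if __name__ == "__main__":
    sample = "alb-ingress-controller-***2   1/1     Running   0          60s"
    pods = controller_pods(sample)
    print(bool(pods) and all(is_healthy(p) for p in pods))  # True
```

Table output is meant for people and its columns can vary between kubectl versions. For anything more than a quick check, parsing `kubectl get pod -o json` is more robust.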

What to do next

To learn how to use an ALB Ingress to access services in an ACK dedicated cluster, see Expose services with an ALB Ingress.