This topic describes the diagnostic procedure for storage and how to troubleshoot storage exceptions.

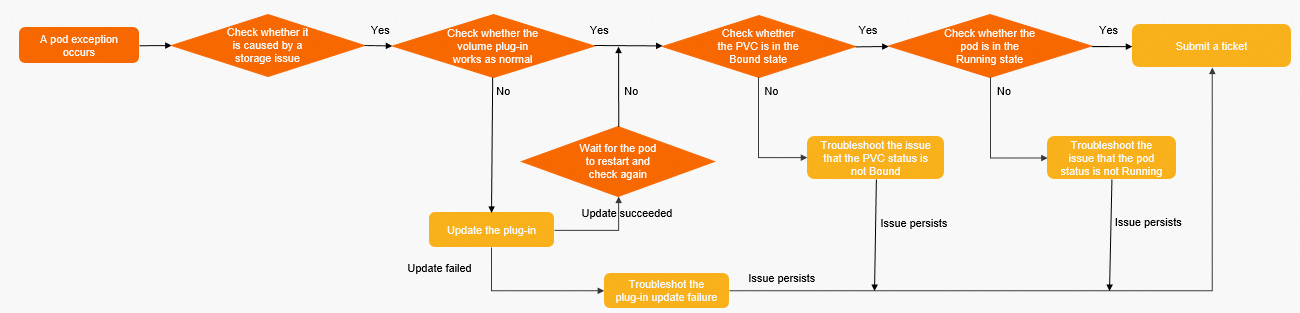

Diagnostic procedure

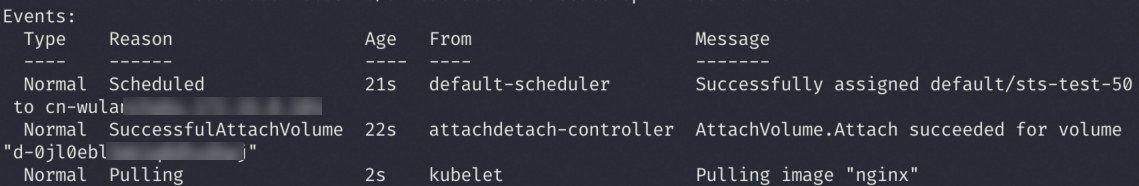

View the pod events to confirm that the pod cannot start due to storage issues.

    kubectl describe pods <pod-name>

If the pod is in the state shown in the following figure, the storage has been mounted successfully. In this case, if the pod still fails to start (for example, it is in the CrashLoopBackOff state), the cause is not a storage issue. Submit a ticket for assistance.

Check if the CSI storage plug-in is working properly.

    kubectl get pod -n kube-system | grep csi

Expected output:

    NAME                  READY   STATUS    RESTARTS   AGE
    csi-plugin-***        4/4     Running   0          23d
    csi-provisioner-***   7/7     Running   0          14d

Note: If the pod status is not Running, use `kubectl describe pods <pod-name> -n kube-system` to view the specific reason for the container exit and the pod events.

Check if the CSI storage plug-in is the latest version.

    kubectl get ds csi-plugin -n kube-system -oyaml | grep image

Expected output:

    image: registry.cn-****.aliyuncs.com/acs/csi-plugin:v*****-aliyun

For information about the latest version of the storage plug-in, see csi-plugin and csi-provisioner. If the storage plug-in is not the latest version, upgrade the CSI plug-in.

For troubleshooting other storage component upgrade failures, see Troubleshoot component update failures.
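The plug-in status check described above can be scripted. The following is a minimal sketch, not part of the official procedure: a hypothetical helper named `csi_not_running` reads the table printed by `kubectl get pod -n kube-system` on standard input and prints every CSI pod whose STATUS is not Running. The sample pod names and statuses are made up for illustration.

```shell
# Hypothetical helper: print CSI pods whose STATUS column is not "Running".
# Input: the table printed by `kubectl get pod -n kube-system`
# (columns: NAME READY STATUS RESTARTS AGE), read from standard input.
csi_not_running() {
  awk '$1 ~ /^csi-/ && $3 != "Running" { print $1, $3 }'
}

# Illustrative input only; the pod names and statuses below are made up.
sample='NAME                    READY   STATUS             RESTARTS   AGE
csi-plugin-aaaaa        4/4     Running            0          23d
csi-provisioner-bbbbb   6/7     CrashLoopBackOff   12         14d
coredns-ccccc           1/1     Running            0          30d'

printf '%s\n' "$sample" | csi_not_running

# Against a live cluster:
#   kubectl get pod -n kube-system | csi_not_running
```

An empty result means that every CSI pod in the input reports Running. Any pod that is printed is a candidate for `kubectl describe pods <pod-name> -n kube-system`.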

Troubleshoot the pod pending issue.

For disk pods, see The status of the pod is not Running in Disk troubleshooting.

For NAS pods, see The status of the pod is not Running in NAS troubleshooting.

For OSS pods, see The status of the pod is not Running in OSS troubleshooting.

Troubleshoot the issue that the status of the persistent volume claim (PVC) is not Bound.

For disk PVCs, see The status of the PVC is not Bound in Disk troubleshooting.

For NAS PVCs, see The status of the PVC is not Bound in NAS troubleshooting.

For OSS PVCs, see The status of the PVC is not Bound in OSS troubleshooting.

If the issue persists after troubleshooting, please submit a ticket for assistance.
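The last two steps of the procedure amount to a small decision: check the PVC first, then the pod. A minimal sketch of that decision follows. The function name `storage_triage` and its messages are hypothetical, and the phase values passed in the demo are examples rather than output from a real cluster.

```shell
# Hypothetical helper: map a PVC phase and a pod phase to the section to read.
# $1 = PVC phase (for example Bound or Pending), $2 = pod phase.
storage_triage() {
  pvc_phase=$1
  pod_phase=$2
  if [ "$pvc_phase" != "Bound" ]; then
    echo "PVC is not Bound: see the PVC section for your storage type"
  elif [ "$pod_phase" != "Running" ]; then
    echo "Pod is not Running: see the pod section for your storage type"
  else
    echo "PVC is Bound and pod is Running: no storage issue detected"
  fi
}

storage_triage Pending Pending
storage_triage Bound Pending

# Against a live cluster, the phases could be read with:
#   kubectl get pvc <pvc-name> -n <namespace> -o jsonpath='{.status.phase}'
#   kubectl get pod <pod-name> -n <namespace> -o jsonpath='{.status.phase}'
```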

Troubleshoot component update failures

If you fail to update the csi-provisioner and csi-plugin components, perform the following steps to troubleshoot the issue.

csi-provisioner

This component is a Deployment with two replicas by default. The replicas are mutually exclusive and must run on different nodes, so the upgrade cannot complete if only one node is available. If the upgrade fails, first check whether the cluster has only one available node.

In earlier versions (1.14 and earlier), this component was a StatefulSet. If a csi-provisioner StatefulSet exists in the cluster, run `kubectl delete sts csi-provisioner -n kube-system` to delete it, and then log on to the Container Service console to reinstall the csi-provisioner component. For more information, see Components.

csi-plugin

Check whether the cluster contains nodes in the `NotReady` state. If it does, the DaemonSet that corresponds to csi-plugin fails to upgrade.

If the component upgrade fails but all plug-ins are working normally, the component center may have detected a timeout and automatically rolled back the upgrade. If you encounter this issue, submit a ticket for assistance.
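The node checks in this section (a single available node for csi-provisioner, NotReady nodes for csi-plugin) can be scripted. The following is a minimal sketch under stated assumptions: the helper names `ready_node_count` and `not_ready_nodes` are hypothetical, they parse the table printed by `kubectl get nodes`, and the sample node names and versions are made up.

```shell
# Hypothetical helper: count nodes whose STATUS is exactly "Ready".
# Nodes reported as "Ready,SchedulingDisabled" are not counted.
ready_node_count() {
  awk '$1 != "NAME" && $2 == "Ready" { n++ } END { print n + 0 }'
}

# Hypothetical helper: list nodes whose STATUS starts with "NotReady".
not_ready_nodes() {
  awk '$1 != "NAME" && $2 ~ /^NotReady/ { print $1 }'
}

# Illustrative input only; node names and versions are made up.
sample='NAME     STATUS                     ROLES    AGE   VERSION
node-a   Ready                      <none>   30d   v1.0.0
node-b   NotReady                   <none>   30d   v1.0.0
node-c   Ready,SchedulingDisabled   <none>   30d   v1.0.0'

echo "Ready nodes: $(printf '%s\n' "$sample" | ready_node_count)"
echo "NotReady nodes: $(printf '%s\n' "$sample" | not_ready_nodes)"

# Against a live cluster:
#   kubectl get nodes | ready_node_count
#   kubectl get nodes | not_ready_nodes
```

A ready count of 1 points at the csi-provisioner scheduling constraint, and any name in the second list points at the csi-plugin DaemonSet issue.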

Disk troubleshooting

The node to which the pod belongs and the disk must be in the same region and zone. Cross-region and cross-zone usage is not supported.

Different types of ECS instances support different types of disks. For more information, see Instance family.

The status of the pod is not Running

Issue

The status of the PVC is Bound but the status of the pod is not Running.

Cause

No node is available for scheduling.

An error occurs when the system mounts the disk.

The ECS instance does not support the specified disk type.

Solution

Schedule the pod to another node to quickly recover. For more information, see Schedule applications to specified nodes.

Use `kubectl describe pods <pod-name>` to view the pod events, and then troubleshoot the issue based on the events.

For disk mounting issues, see Disk volume FAQ.

For disk unmounting issues, see Disk volume FAQ.

If there is no relevant event information, please submit a ticket for assistance.

If the ECS instance does not support the specified disk type, select a disk type that is supported by the ECS instance. For more information, see Instance family.

For other ECS OpenAPI type issues, see ErrorCode.
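When `kubectl describe pods` output is long, it can help to isolate the warning events before consulting the FAQ. The following is a minimal sketch: the helper name `warning_events` is hypothetical, it assumes the usual layout in which an `Events:` line is followed by rows that begin with the event type, and the sample event messages are placeholders rather than real log output.

```shell
# Hypothetical helper: print only the Warning rows of the Events section.
# Input: `kubectl describe pods <pod-name>` output on standard input.
warning_events() {
  awk '/^Events:/ { in_events = 1; next }
       in_events && $1 == "Warning" { print }'
}

# Illustrative input only; the messages are placeholders.
sample='Name:   demo-pod
Status: Pending
Events:
  Type     Reason       Age   From               Message
  ----     ------       ----  ----               -------
  Normal   Scheduled    3m    default-scheduler  (placeholder message)
  Warning  FailedMount  2m    kubelet            (placeholder message)'

printf '%s\n' "$sample" | warning_events

# Against a live cluster:
#   kubectl describe pods <pod-name> | warning_events
```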

The status of the PVC is not Bound

Issue

The status of the PVC is not Bound and the status of the pod is not Running.

Cause

Static: The selectors of the PVC and PV do not match, so the PV and PVC cannot be bound. For example, the selector configuration of the PVC differs from that of the PV, the PVC and PV use different StorageClass names, or the status of the PV is Released.

Dynamic: The csi-provisioner component fails to create the disk.

Solution

Static: Check the relevant YAML content. For more information, see Use static disk volumes.

Note: If the status of the PV is Released, the PV cannot be reused. Create a new PV to use the disk.

Dynamic: Use `kubectl describe pvc <pvc-name> -n <namespace>` to view the PVC events, and then troubleshoot the issue based on the events.

For disk creation issues, see Disk volume FAQ.

For disk expansion issues, see Disk volume FAQ.

If there is no relevant event information, please submit a ticket for assistance.

There might be an issue with the ECS OpenAPI when creating the disk. See ECS Error Center for troubleshooting. If troubleshooting fails, please submit a ticket for assistance.
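For the static case, PVs in the Released state are a common reason a PVC stays unbound. The following is a minimal sketch that lists them. The helper name `released_pvs` is hypothetical, and it expects two-column input produced with the `custom-columns` output format of `kubectl get`. The sample PV names are made up.

```shell
# Hypothetical helper: print the names of PVs whose phase is "Released".
# Expected input (two columns, NAME and PHASE), for example from:
#   kubectl get pv -o custom-columns=NAME:.metadata.name,PHASE:.status.phase
released_pvs() {
  awk '$1 != "NAME" && $2 == "Released" { print $1 }'
}

# Illustrative input only; the PV names are made up.
sample='NAME        PHASE
pv-disk-a   Bound
pv-disk-b   Released
pv-disk-c   Available'

printf '%s\n' "$sample" | released_pvs
```

Each PV printed here cannot be bound again as is. A new PV has to be created for the underlying storage.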

NAS troubleshooting

To mount a NAS file system to a node, make sure that the node and NAS file system are deployed in the same virtual private cloud (VPC). If the node and NAS file system are deployed in different VPCs, use Cloud Enterprise Network (CEN) to connect them.

You can mount a NAS file system to a node that is deployed in a zone different from the NAS file system.

The mount directory for Extreme NAS file systems and CPFS 2.0 must start with `/share`.
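The path rule for Extreme NAS and CPFS 2.0 can be checked before a PV is created. The following is a minimal sketch. The helper name `valid_share_path` is hypothetical, and it interprets "start with /share" as the path being `/share` itself or a subdirectory of it, which is an assumption about the rule rather than a documented definition.

```shell
# Hypothetical helper: succeed if the path is /share or lies below /share.
# Assumption: "/shared" does not qualify because it is a different directory.
valid_share_path() {
  case "$1" in
    /share | /share/*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example paths are made up for illustration.
for path in /share /share/data /data /shared; do
  if valid_share_path "$path"; then
    echo "$path: ok"
  else
    echo "$path: must start with /share"
  fi
done
```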

The status of the pod is not Running

Issue

The status of the PVC is Bound but the status of the pod is not Running.

Cause

`fsGroup` is set when the NAS file system is mounted and the file system contains many files, so the recursive chmod operation is slow.

Port 2049 is blocked in the security group rules.

The NAS file system and node are deployed in different VPCs.

Solution

Check whether `fsGroup` is set. If it is, remove it, restart the pod, and remount the file system.

Check whether port 2049 of the node that hosts the pod is blocked. If it is, unblock the port and try again. For more information, see Add security group rules.

If the NAS file system and node are deployed in different VPCs, use CEN to connect them.

For other issues, use `kubectl describe pods <pod-name>` to view the pod events, and then troubleshoot the issue based on the events. For more information, see NAS volume FAQ.

If there is no relevant event information, please submit a ticket for assistance.
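The first check in the solution above can be done against the pod manifest. In Kubernetes the setting lives in the `fsGroup` field of a security context. The following is a minimal sketch: the helper name `has_fsgroup` is hypothetical, it does a plain text match on YAML read from standard input rather than parsing it, and the sample manifest is made up.

```shell
# Hypothetical helper: succeed if the YAML on standard input sets fsGroup.
# This is a text match, not a YAML parser, so treat it as a quick check.
has_fsgroup() {
  grep -Eq '^[[:space:]]*fsGroup:[[:space:]]*[0-9]+'
}

# Illustrative manifest only; names and values are made up.
sample_pod='apiVersion: v1
kind: Pod
metadata:
  name: nas-demo
spec:
  securityContext:
    fsGroup: 1000
  containers:
  - name: app
    image: nginx'

if printf '%s\n' "$sample_pod" | has_fsgroup; then
  echo "fsGroup is set"
else
  echo "fsGroup is not set"
fi

# Against a live cluster:
#   kubectl get pod <pod-name> -n <namespace> -o yaml | has_fsgroup
```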

The status of the PVC is not Bound

Issue

The status of the PVC is not Bound and the status of the pod is not Running.

Cause

Static: The selectors of the PVC and persistent volume (PV) do not match, so the PV and PVC cannot be bound. For example, the selector configuration of the PVC differs from that of the PV, the PVC and PV use different StorageClass names, or the status of the PV is Released.

Dynamic: The csi-provisioner component fails to mount the NAS file system.

Solution

Static: Check the relevant YAML content. For more information, see Use static NAS volumes.

Note: If the status of the PV is Released, the PV cannot be reused. Create a new PV that uses the NAS file system.

Dynamic: Use `kubectl describe pvc <pvc-name> -n <namespace>` to view the PVC events, and then troubleshoot the issue based on the events. For more information, see NAS volume FAQ.

If there is no relevant event information, please submit a ticket for assistance.

OSS troubleshooting

To mount an OSS bucket to a node, you must specify the AccessKey information in the PV. You can provide it through a Secret.

To use an OSS bucket across regions, change the bucket URL to a public endpoint. Within the same region, we recommend that you use an internal endpoint.
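The endpoint rule above reduces to a comparison of two region IDs. The following is a minimal sketch. The helper name `oss_endpoint` is hypothetical, and the host name patterns (`oss-<region>.aliyuncs.com` for public access, `oss-<region>-internal.aliyuncs.com` for internal access) are an assumption that should be verified against the OSS endpoint documentation for your region.

```shell
# Hypothetical helper: choose an OSS endpoint host from two region IDs.
# $1 = region of the node, $2 = region of the bucket.
# Assumption: endpoints follow the oss-<region>[-internal].aliyuncs.com pattern.
oss_endpoint() {
  node_region=$1
  bucket_region=$2
  if [ "$node_region" = "$bucket_region" ]; then
    echo "oss-${bucket_region}-internal.aliyuncs.com"
  else
    echo "oss-${bucket_region}.aliyuncs.com"
  fi
}

oss_endpoint cn-hangzhou cn-hangzhou   # same region: internal endpoint
oss_endpoint cn-beijing cn-hangzhou    # cross-region: public endpoint
```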

The status of the pod is not Running

Issue

The status of the PVC is Bound but the status of the pod is not Running.

Cause

`fsGroup` is set when the OSS bucket is mounted and the bucket contains many files, so the recursive chmod operation is slow.

The OSS bucket and node are in different regions and the internal endpoint of the OSS bucket is used. As a result, the node fails to connect to the bucket endpoint.

Solution

Check whether `fsGroup` is set. If it is, remove it, restart the pod, and remount the bucket.

Check whether you are accessing the bucket across regions through an internal endpoint. If so, use a public endpoint instead.

For other issues, use `kubectl describe pods <pod-name>` to view the pod events, and then troubleshoot the issue based on the events. For more information, see OSS volume FAQ.

If there is no relevant event information, please submit a ticket for assistance.

The status of the PVC is not Bound

Issue

The status of the PVC is not Bound and the status of the pod is not Running.

Cause

Static: The selectors of the PVC and PV do not match, so the PV and PVC cannot be bound. For example, the selector configuration of the PVC differs from that of the PV, the PVC and PV use different StorageClass names, or the status of the PV is Released.

Dynamic: The csi-provisioner component fails to mount the OSS bucket.

Solution

Static: Check the relevant YAML content. For more information, see Use static OSS volumes.

Note: If the status of the PV is Released, the PV cannot be reused. Retrieve the bucket address from the old PV and create a new PV.

Dynamic: Use `kubectl describe pvc <pvc-name> -n <namespace>` to view the PVC events, and then troubleshoot the issue based on the events. For more information, see OSS volume FAQ.

If there is no relevant event information, please submit a ticket for assistance.